Grantmaking work

Introduction

We provide financial support for promising work aligned with our mission.

Grants process

We are excited to offer multiple opportunities to apply for support:

RFPs and Contests

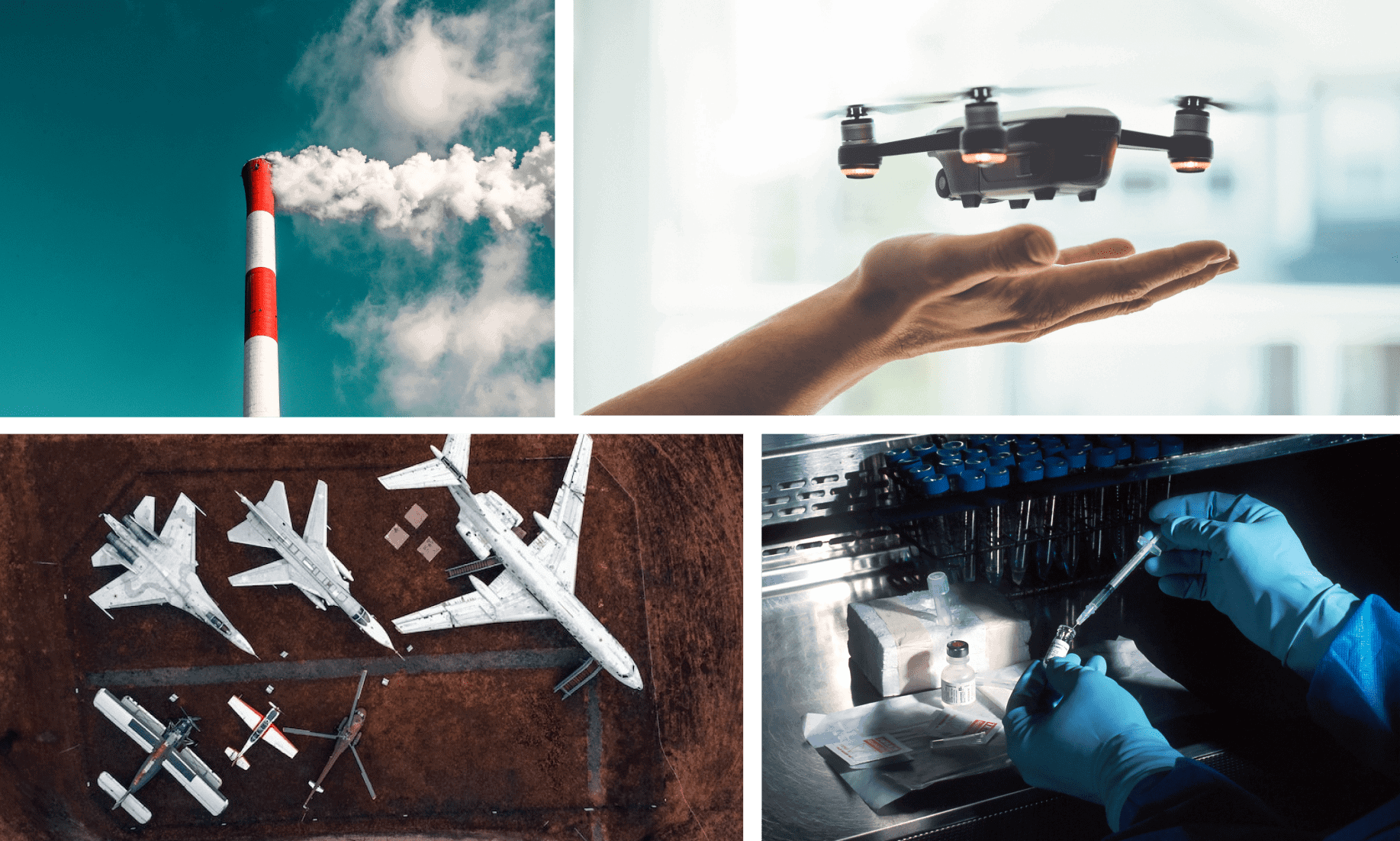

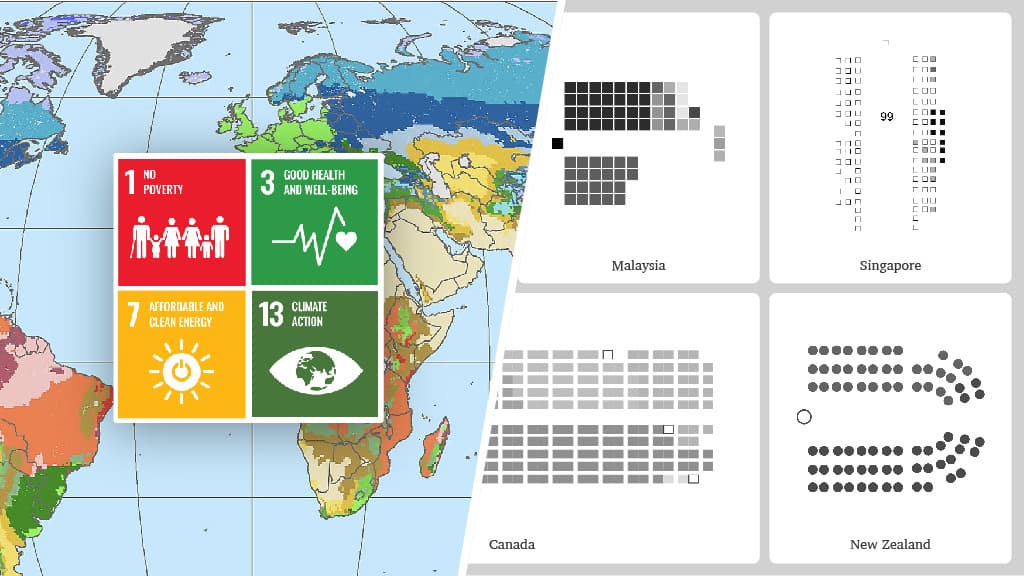

We regularly publish Requests for Proposals (RFPs) and Contests on our website. Examples include our grants program on the Humanitarian Impacts of Nuclear War, our call for papers on AI and the SDGs, and our Worldbuilding competition.

Collaborations

We fund collaborations that directly support FLI internal projects and initiatives. For example, FLI has funded film production on autonomous weapons at partner organisations. FLI staff work closely with these grantees.

Fellowships

AI Existential Safety Community: A community dedicated to ensuring AI is developed safely, including both faculty and AI researchers. Members are invited to attend meetings, participate in an online community, and apply for travel support.

We do not accept unsolicited requests. Please subscribe to our newsletter and follow us on social media to learn more about our upcoming RFPs and Contests. Any questions about grants can be sent to grants@futureoflife.org.

All our grant programs

Closed or completed programs

2024 Grants

Multistakeholder Engagement for Safe and Prosperous AI

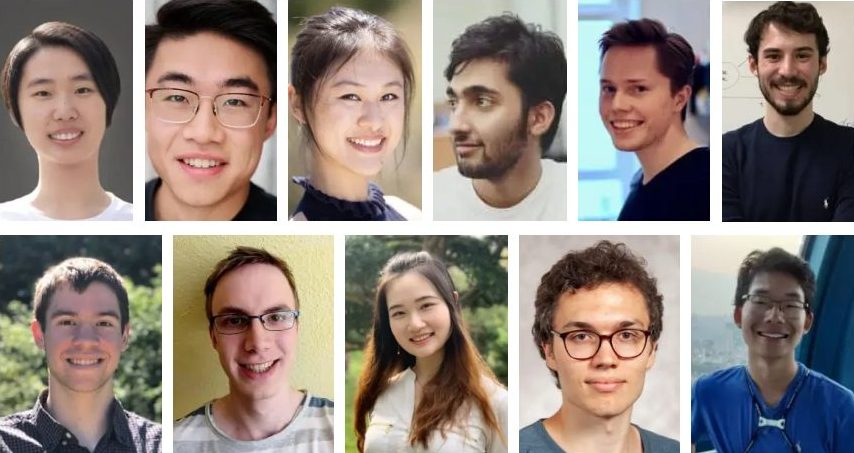

US-China AI Governance PhD Fellowships

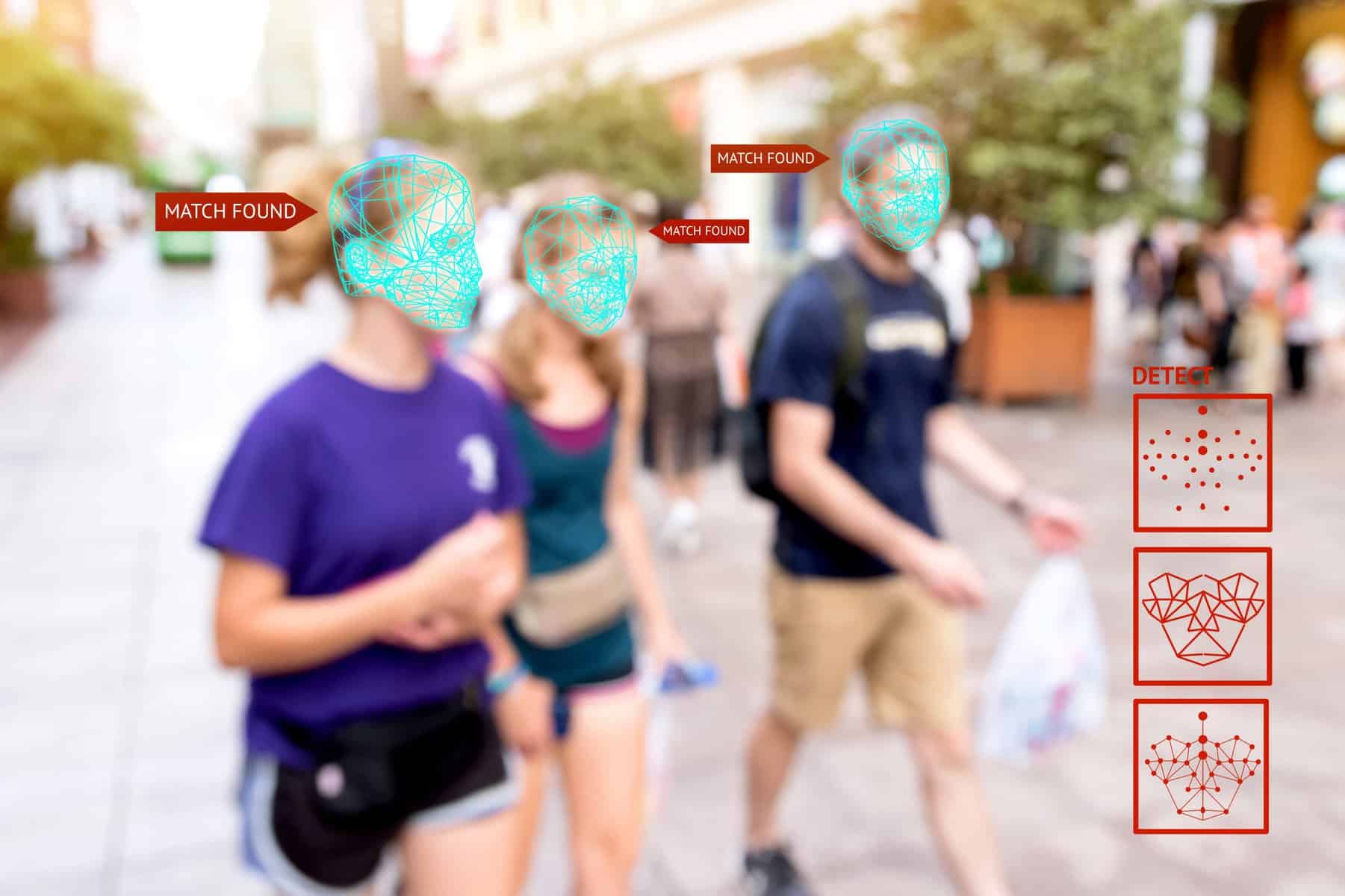

How to mitigate AI-driven power concentration

2023 Grants

2022 Grants

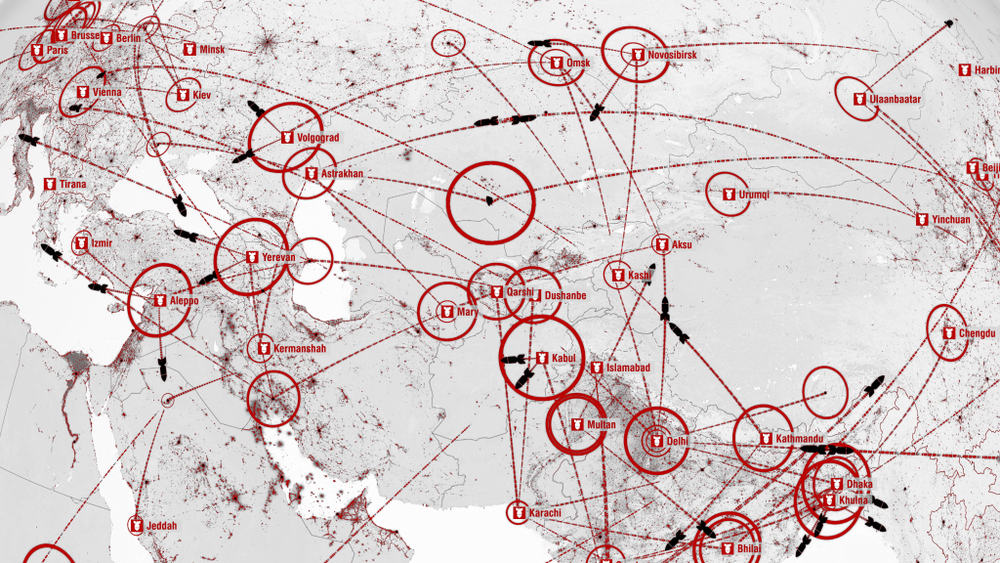

Nuclear War Research

Technical PhD Fellowships

Technical Postdoctoral Fellowships

2021 Grants

2018 AGI Safety Grant Program

2015 AI Safety Grant Program

AI Safety Community

A community dedicated to ensuring AI is developed safely.

The way to ensure a better, safer future with AI is not to impede the development of this new technology but to accelerate our wisdom in handling it by supporting AI safety research.

Since it may take decades to complete this research, it is prudent to start now. AI safety research better prepares us for the future by pre-emptively making AI beneficial to society and reducing its risks.

This mission motivates research across many disciplines, from economics and law to technical areas like verification, validity, security, and control. We’d love you to join!

Related posts

Future of Life Institute Announces 16 Grants for Problem-Solving AI

Realising Aspirational Futures – New FLI Grants Opportunities

Statement on a controversial rejected grant proposal

FLI September 2022 Newsletter: $3M Impacts of Nuclear War Grants Program!

The Future of Life Institute announces grants program for existential risk reduction