Call for proposed designs for global institutions governing AI

Alongside the AI for SDGs grants track in 2024, FLI launched a request for design proposals for global institutions to govern advanced AI, or artificial general intelligence (AGI). Out of a diverse set of proposals, six were selected to receive grants of $15,000. The resulting papers, by authors from diverse backgrounds in Europe, the US and South America, laid out a range of institutions and mechanisms designed to safely harness the power of AI, from a ‘CERN for AI’ to ‘Fair Trade AI’ and a Global AGI Agency.

Grants archive

Project Summary

Justin Bullock at the University of Washington suggests as his mechanism a Global AGI Agency, which would be a public-private partnership led by a US-EU-China coalition alongside major private national R&D labs within leading technology firms. This partnership would be institutionally housed by the IEEE and the UN together. In this approach, national governments would partner more closely with leading technology firms, subsidise projects, provide evaluation and feedback on models, and generate public-good uses alongside the profit-making uses.

Project Summary

Katharina Zuegel at the Forum on Information and Democracy in France proposes the development of a "Fair Trade AI" mechanism, inspired by the success of the Fair Trade certification model in promoting ethical practices within commodity markets. By leveraging Fair Trade principles, this project aims to foster the creation, deployment, and utilization of AGI systems that are ethical, trustworthy, and beneficial to society. The project is informed by Fair Trade’s successes, including enhanced living and working conditions, economic empowerment of labor, cultural preservation, and environmental sustainability.

Project Summary

Haydn Belfield, a researcher at the University of Cambridge’s Centre for the Study of Existential Risk and the Leverhulme Centre for the Future of Intelligence, proposes two reinforcing institutions: an International AI Agency (IAIA) and CERN for AI. The IAIA would primarily serve as a monitoring and verification body, enforced by chip import restrictions: only countries that sign a verifiable commitment to certain safe compute practices would be permitted to accumulate large amounts of compute. Meanwhile, a “CERN for AI” is an international scientific cooperative megaproject on AI which would centralise frontier model training runs in one facility. As an example of reinforcement, frontier foundation models would be shared out of the CERN for AI, under the supervision of the IAIA.

Project Summary

José Villalobos Ruiz, based at the Institute for Law & AI, proposes an international treaty on the prohibition of misaligned AI. Ruiz will focus on what the legal side of an ideal treaty on the prohibition of misaligned AGI might look like, addressing the question in three steps. The first step will be a comparative analysis of existing legal instruments that prohibit technologies or activities that may pose an existential risk, from nuclear weapons to greenhouse gas emissions beyond a certain cap; the second step will be to identify how existing legal frameworks may fail to address risks posed by AGI; the third will be to recommend ways in which those weaknesses could be tackled in a treaty that prohibits (misaligned) AGI.

Project Summary

To enable his ideal one-AGI scenario, Joel Christoph at the European University Institute proposes the creation of an "International AI Governance Organization" (IAIGO), a new treaty-based institution under the auspices of the United Nations. All countries would be eligible to join, with major AI powers guaranteed seats on the executive council, akin to the UN Security Council permanent members. The IAIGO would have three primary mandates: regulate, monitor and enforce a moratorium on AGI development, until a coordinated international project can be initiated with adequate safety precautions; pool financial and talent resources from member states to create an international AGI project, potentially absorbing leading initiatives like OpenAI and DeepMind and subjecting ‘rogue’ projects to severe sanctions; finally, oversee controlled AGI deployment, distributing benefits equitably to all member states and enforcing strict safety and security protocols.

Project Summary

Joshua Tan, an American researcher at the University of Oxford, makes the case for a world of multiple safe AGIs, overseen by an institution that is, in effect, the practical realisation of Yoshua Bengio’s recent proposal: a multilateral network of publicly-funded AI laboratories that prioritize humanity's safety and well-being. Tan then focuses on how to establish such a network, as well as on resolving some operational and incentive problems he sees with Bengio's original proposal.

Results

Here is a selection of results from this grant program:

- Stable AGI Governance through the International AI Governance Organization, by Joel Christoph.

- Towards a Global Agency for Governing Artificial General Intelligence - Challenges and Prospects, by Justin Bullock.

- Beyond a Piecemeal Approach: Prospects for a Framework Convention on AI, by José Jaime Villalobos and Matthijs M. Maas.

- Domestic frontier AI regulation, an IAEA for AI, an NPT for AI, and a US-led Allied Public-Private Partnership for AI: Four institutions for governing and developing frontier AI, by Haydn Belfield.

I. Background on FLI

The Future of Life Institute (FLI) is an independent non-profit that works to steer transformative technology towards benefiting life and away from extreme large-scale risks. We work through policy advocacy at the UN, in the EU and the US, and have a long history of grants programmes supporting such work as AI existential safety research and investigations into the humanitarian impacts of nuclear war. This current request for proposals is part of FLI’s Futures program, which aims to guide humanity towards the beneficial outcomes made possible by transformative technologies. The program seeks to engage a diverse group of stakeholders from different professions, communities, and regions to shape our shared future together.

II. Request for Proposal

Call for proposed designs for global institutions governing AI

FLI is calling for research proposals with the aim of designing trustworthy global governance mechanisms or institutions that can help stabilise a future with 0, 1, or more AGI projects. These proposals should outline the specifications needed to reach or preserve a secure world, taking into account the myriad threats posed by advanced AI. Moreover, we expect proposals to specify and justify whether global stability is achieved by banning the creation of all AGIs, enabling just one AGI system and using it to prevent the creation of more, or creating several systems to improve global stability. There is the option for proposals to focus on a mechanism not dependent on a particular scenario, or one flexible enough to adapt to 0, 1 or more AGI projects. Nonetheless, it is recommended that applicants consider in which scenario their proposed mechanism would best perform, or be most valued. In that sense, as well as pitching a particular control mechanism, each proposal is also making a case for how humanity is kept safe in a specific future scenario.

FLI’s rationale for launching this request for proposal

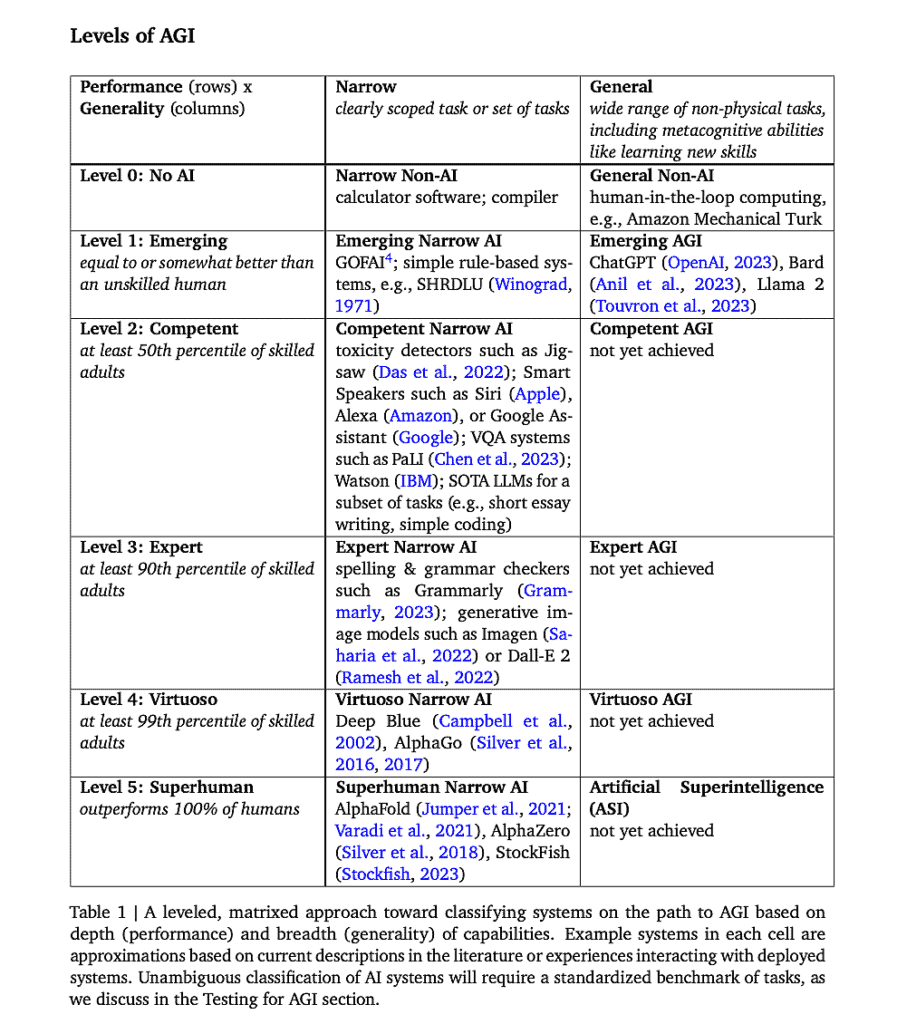

Reaching a future stable state may require restricting AI development such that the world has (a) no AGI projects; (b) a single, global AGI project; or (c) multiple AGI projects. By AGI, we refer to Shane Legg’s definition of ‘ASI’: a system which outperforms 100% of humans at a wide range of non-physical tasks, including metacognitive abilities like learning new skills (see the accompanying grid, taken from Legg’s paper on this). A stable state would be a scenario that evolves at the cautious timescale determined by thorough risk assessments rather than corporate competition.

a. No AGI projects

As recently argued by the Future of Life Institute’s Executive Director, Anthony Aguirre, if there is no way to make AGI – or superhuman general-purpose AI – safe, loyal, and beneficial, then we should not go ahead with it. In practice, that means that until we have a way of proving that an AGI project will not, upon completion or release, take control away from humanity or cause a catastrophe, AGI models should be prevented from being run. Equally, harnessing the benefits and preventing the risks of narrow AI systems and controllable general purpose models still requires substantial global cooperation and institutional heft, none of which currently exists. Proposals focusing on such initiatives might include mechanisms for:

- Risk analysis;

- Predicting, estimating, or evaluating the additive benefit of continuing AI capability development;

- Ensuring no parties are cheating on AGI capability development;

- Building trust in a global AI governance entity; or,

- Leveraging the power of systems to solve bespoke problems that can yield societal and economic benefits.

b. One AGI project

As Aguirre points out, even if an AGI is ‘somehow, made both perfectly loyal/subservient to, and a perfect delegate for, some operator’, that operator will rapidly acquire far too much power – certainly too much for the comfort of existing powers. He notes that other power structures ‘will correctly think… that this is an existential threat to their existence as a power structure – and perhaps even their existence period (given that they may not be assured that the system is in fact under control.)’ They may try to destroy such a capability. To avoid this imbalance or its disastrous results, the single AGI will either need to be created by a pre-existing cooperation of the great powers, or brought under the control of a new global institution right after it is produced. Again, the world lacks these institutions, and forming them will require extensive research and thought. Alongside variations on the ideas listed above, proposals for such establishments and connected questions needing answers could also include:

- Mechanisms for distributing the benefits of the centralised capabilities development efforts: how is this done from a technical perspective? Who benefits: all of humanity, or only the signatories of an international treaty? How are economic benefits shared, and are they shared in the same way as new knowledge?

- Mechanisms for preventing authoritarian control: how can power be centralised without corruption?

- Intellectual property structures that would enable and incentivize commercialization of breakthroughs discovered in an AGI system.

- Mechanisms for determining if a new discovery should be developed by a private entity or if it is for the common good (e.g. radical climate intervention).

- Potential governance structures for such an entity: who makes the decisions about development? About risks? How is the concentration of power kept in check and accountable to the globe? How is capture by special interests, spies, or geopolitical blocs prevented?

- Verification mechanisms to ensure no one is cheating on AGI capability development: how can others be prevented from cheating? What penalties and surveillance mechanisms are needed? How much does this need to vary by jurisdiction?

- How is such an organization physically distributed?

- Mechanisms for ensuring the security of such an entity, preventing leaks and accidents.

- Can realpolitik support centralised global AGI development? What would it take to actually convince the major states that they wouldn’t effectively be giving up too much sovereignty or too much strategic positioning?

c. Multiple AGI projects

Similar concerns arise if multiple AGI systems emerge. A delicate balance of power must be strenuously maintained. Furthermore, all of these scenarios raise a significant dual problem: on the one hand, limiting the risks associated with such powerful AI systems and, on the other, distributing the associated benefits, of which we can expect many. Some problems along those lines in need of solutions:

- How much power should an assembly of represented states have vs. bureaucratic managers vs. scientists?

- What is an equitable, fair, and widely agreeable distribution of voting power among the represented states? Should simple- or super-majorities be required? Should Vanuatu have the same weight as Japan?

- Who gets to decide how or whether AI should influence human values? What and whose values get enshrined in a new global institution for governing AI? And if no one’s in particular, what mechanisms can help to maintain room for personal convictions, free thinking, community traditions such as religion, careful decision-making and contradicting values? (see Robert Lempert’s new RAND paper)

d. Flexible to different numbers of AGI projects

Dividing scenarios into the three above groups will hopefully yield a balanced sample of each outcome. Equally, urging principal investigators to select just one of these categories may help to encourage concrete scenarios for what a well-managed future with advanced AI could look like – in short, push them to pick only their preferred future. However, some applicants may wish to submit proposals for mechanisms flexible enough to adjust to varying numbers of AGI systems. These applicants will need to address how their mechanism can ensure stability in a world where the number of AGI systems can keep changing. Alternatively, there may be mechanisms or institutions proposed whose function is not dependent on a particular number of AGI projects. In such cases, it is still recommended that applicants consider in which scenario their proposed mechanism would best perform, or be most valued; nonetheless, we leave this option available for those cases where such a consideration proves to be unenlightening.

The success of all of these case groups depends upon a diligent and consistent consideration of the risks and benefits of AI capability development. Any increase in the power of intelligent systems must proceed in accordance with an agreed acceptable risk profile, as is done in the development of new drugs. However, it is not clear what the structure of an organization enforcing such a risk profile would look like, how it would command trust, how it would evade capture, or how it could endure as a stable state. This request for proposals can be summarised as a search for this clarity. A better, more informed sense of where we wish to be in a few years, of what institutions will best place us to tackle the upcoming challenges, will be invaluable for policymakers today. Such a well-researched north star can help the world to reverse-engineer and work out what governments should be doing now to take humanity in a better, safer direction.

Without a clear articulation of how trustworthy global governance could work, the default narrative is that it is impossible. This RFP is thus born both of an assessment of the risks we face, and of sincere hope that the default narrative is wrong: a hope that AI, if we keep it under control and use it well, will empower – rather than disempower – humans the world over.

Existing projects

FLI is by no means creating a new field here. Promising initiatives already in the works, which may inspire researchers applying to this program, include the following:

III. Evaluation Criteria & Project Eligibility

Proposals will be evaluated according to the track record of the researcher, the proposal’s originality or potential to be transformative, the potential for the proposed activity to advance knowledge of the coordination problems for mitigating AGI risk, and how convincingly the proposal accounts for the range of AGI risks.

Grant applications will be subject to a competitive process of external and confidential peer review. We intend to support several proposals. Accepted proposals will receive a one-time grant of $15,000, to be used at the researcher’s discretion. Grants will be made to nonprofit organizations, with institutional overhead or indirect costs not exceeding 15%.

IV. Application process

All applications should be submitted electronically through this form. We will accept applications internationally, but all applicants must be associated with a nonprofit organization that can accept the funding. We will not make grants directly to individuals.

Application deadline: 1st April 2024.

External reviewers invited by FLI will then evaluate all the proposals according to the above criteria, and decisions will be shared by mid to late May. Completed research papers are due by 13th September.

All questions should be sent to grants@futureoflife.org.
