Policy and Research

We aim to improve the governance of artificial intelligence and of its intersection with biological, nuclear, and cyber risks.

Introduction

Improving the governance of transformative technologies

The policy team at FLI works to improve national and international governance of AI. FLI has spearheaded numerous efforts to this end.

In 2017 we created the influential Asilomar AI Principles, a set of governance principles signed by thousands of leading minds in AI research and industry. More recently, our 2023 open letter sparked a global debate on the rightful place of AI in our societies. FLI has testified before the U.S. Congress, the European Parliament, and bodies in other key jurisdictions.

Spotlight

We must not build AI to replace humans.

A new essay by Anthony Aguirre, Executive Director of the Future of Life Institute

Humanity is on the brink of developing artificial general intelligence that exceeds our own. It's time to close the gates on AGI and superintelligence... before we lose control of our future.

Our work

Project database

Perspectives of Traditional Religions on Positive AI Futures

Most of the global population participates in a traditional religion. Yet the perspectives of these religions are largely absent from strategic AI discussions. This initiative aims to support religious groups in voicing their faith-specific concerns and hopes for a world with AI, and to work with them to resist the harms and realise the benefits.

Recommendations for the U.S. AI Action Plan

The Future of Life Institute's proposal for President Trump’s AI Action Plan. Our recommendations aim to protect the presidency from AI loss-of-control, promote the development of AI systems free from ideological or social agendas, protect American workers from job loss and replacement, and more.

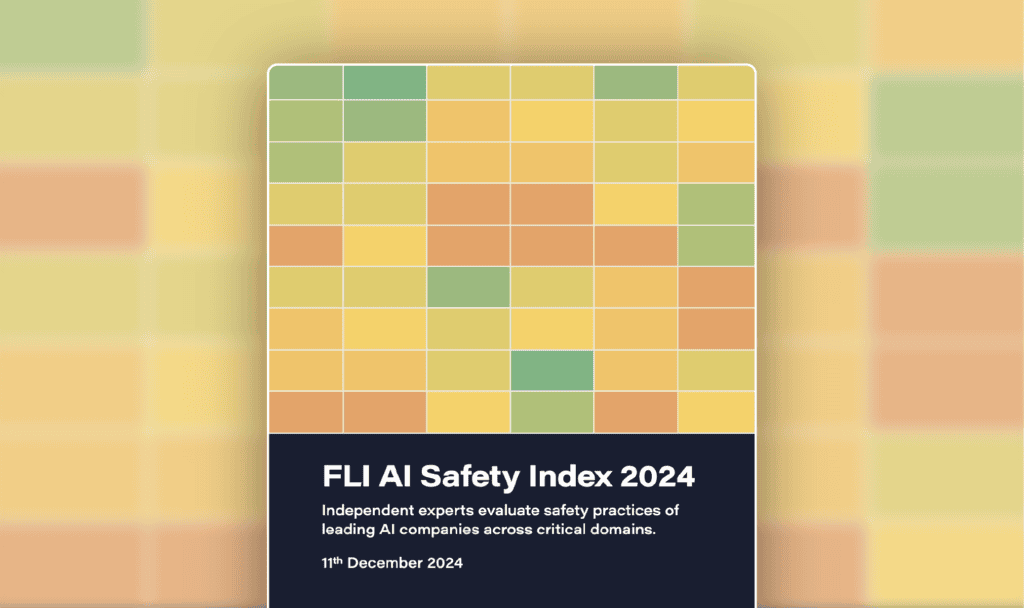

FLI AI Safety Index 2024

Seven AI and governance experts evaluate the safety practices of six leading general-purpose AI companies.

AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

The dual-use nature of AI systems can amplify the dual-use nature of other technologies—this is known as AI convergence. We provide policy expertise to policymakers in the United States in three key convergence areas: biological, nuclear, and cyber.

AI Safety Summits

Governments are exploring collaboration on navigating a world with advanced AI. FLI provides them with advice and support.

Engaging with AI Executive Orders

We provide formal input to agencies across the US federal government, including technical and policy expertise on a wide range of issues such as export controls, hardware governance, standard setting, procurement, and more.

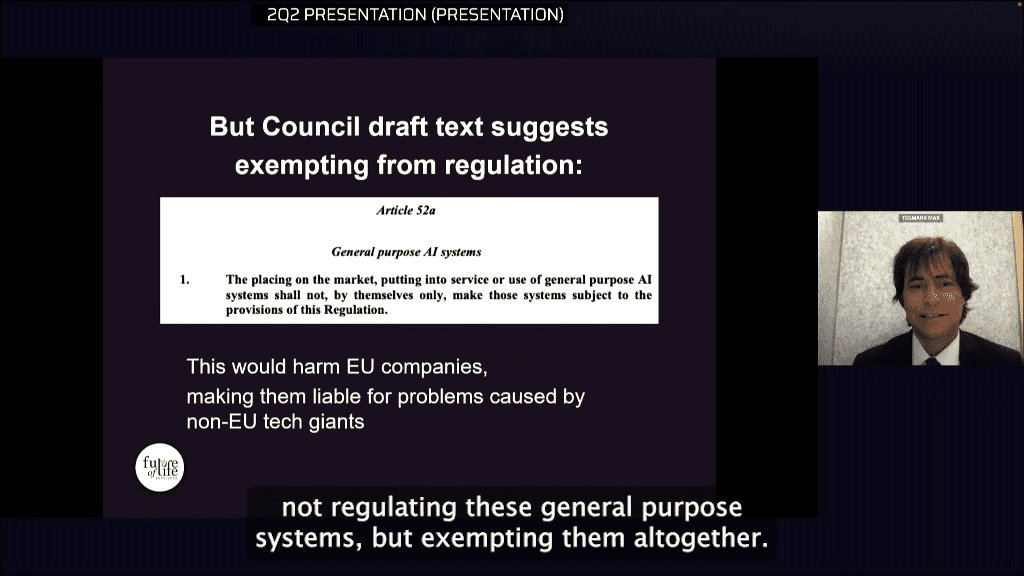

Implementing the European AI Act

Our key recommendations include broadening the Act’s scope to regulate general-purpose systems and extending the definition of prohibited manipulation to cover any type of manipulative technique, as well as manipulation that causes societal harm.

Educating about Autonomous Weapons

Military AI applications are rapidly expanding. We develop educational materials about how certain narrow classes of AI-powered weapons can harm national security and destabilize civilization, notably weapons where kill decisions are fully delegated to algorithms.

Our content

Latest policy and research papers

Produced by us

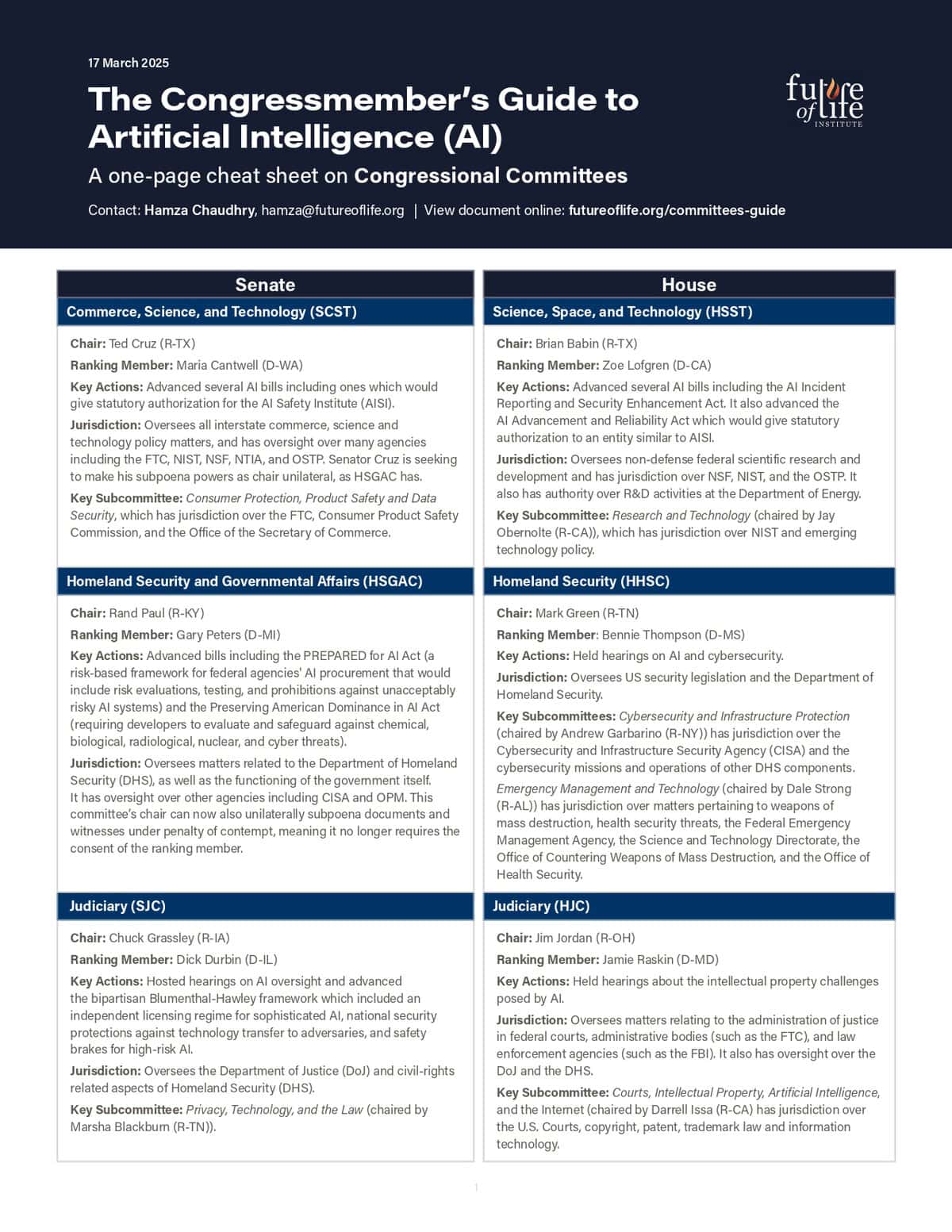

Staffer’s Guide to AI Policy: Congressional Committees and Relevant Legislation

March 2025

Recommendations for the U.S. AI Action Plan

March 2025

Safety Standards Delivering Controllable and Beneficial AI Tools

February 2025

Framework for Responsible Use of AI in the Nuclear Domain

February 2025

Featuring our staff and fellows

A Taxonomy of Systemic Risks from General-Purpose AI

Risto Uuk, Carlos Ignacio Gutierrez, Lode Lauwaert, Carina Prunkl, Lucia Velasco

January 2025

Effective Mitigations for Systemic Risks from General-Purpose AI

Risto Uuk, Annemieke Brouwer, Tim Schreier, Noemi Dreksler, Valeria Pulignano, Rishi Bommasani

January 2025

Examining Popular Arguments Against AI Existential Risk: A Philosophical Analysis

Torben Swoboda, Risto Uuk, Lode Lauwaert, Andrew Peter Rebera, Ann-Katrien Oimann, Bartlomiej Chomanski, Carina Prunkl

January 2025

The AI Risk Repository: Meta-Review, Database, and Taxonomy

Peter Slattery, Alexander K. Saeri, Emily A. C. Grundy, Jess Graham, Michael Noetel, Risto Uuk, James Dao, Soroush Pour, Stephen Casper, Neil Thompson

August 2024

Resources

We provide high-quality policy resources to support policymakers

US Federal Agencies: Mapping AI Activities

This guide outlines AI activities across the US Executive Branch, focusing on regulatory authorities, budgets, and programs.

9 September, 2024

EU AI Act Explorer and Compliance Checker

Browse the full AI Act text online, and discover how the AI Act will affect you in 10 minutes by answering a series of straightforward questions.

15 January, 2024

Autonomous Weapons website

The era in which algorithms decide who lives and who dies is upon us. We must act now to prohibit and regulate these weapons.

20 November, 2017

Geographical Focus

Where you can find us

We are a hybrid organisation. Most of our policy work takes place in the US (D.C. and California), the EU (Brussels) and at the UN (New York and Geneva).

United States

In the US, FLI participates in the US AI Safety Institute Consortium and promotes AI legislation at state and federal levels.

European Union

In Europe, our focus is on strong EU AI Act implementation and encouraging European states to support a treaty on autonomous weapons.

United Nations

At the UN, FLI advocates for a treaty on autonomous weapons and a new international agency to govern AI.

Achievements

Some of the things we have achieved

Developed the Asilomar AI Principles

In 2017, FLI coordinated the development of the Asilomar AI Principles, one of the earliest and most influential sets of AI governance principles.

AI recommendation in the UN digital cooperation roadmap

Our recommendations (3C) on the global governance of AI technologies were adopted in the UN Secretary-General's digital cooperation roadmap.

Max Tegmark's testimony to the European Parliament

Our founder and board member Max Tegmark testified before the European Parliament on the regulation of general-purpose AI systems.

Our content

Featured posts

Here is a selection of posts relating to our policy work:

Context and Agenda for the 2025 AI Action Summit

The AI Action Summit will take place in Paris on 10-11 February 2025. Here we list the agenda and key deliverables.

31 January, 2025

FLI Statement on White House National Security Memorandum

Last week the White House released a National Security Memorandum concerning AI governance and risk management. The NSM issues guidance […]

28 October, 2024

Paris AI Safety Breakfast #2: Dr. Charlotte Stix

The second of our 'AI Safety Breakfasts' event series, featuring Dr. Charlotte Stix on model evaluations, deceptive AI behaviour, and the AI Safety and Action Summits.

14 October, 2024

US House of Representatives call for legal liability on Deepfakes

Recent statements from the US House of Representatives are a reminder of the urgent threat deepfakes present to our society, especially as we approach the U.S. presidential election.

1 October, 2024

Statement on the veto of California bill SB 1047

“The furious lobbying against the bill can only be reasonably interpreted in one way: these companies believe they should play by their own rules and be accountable to no one. This veto only reinforces that belief. Now is the time for legislation at the state, federal, and global levels to hold Big Tech to their commitments”

30 September, 2024

Panda vs. Eagle

FLI's Director of Policy on why the U.S. national interest is much better served by a cooperative than an adversarial strategy towards China.

27 September, 2024

US Federal Agencies: Mapping AI Activities

This guide outlines AI activities across the US Executive Branch, focusing on regulatory authorities, budgets, and programs.

9 September, 2024

Paris AI Safety Breakfast #1: Stuart Russell

The first of our 'AI Safety Breakfasts' event series, featuring Stuart Russell on significant developments in AI, AI research priorities, and the AI Safety Summits.

5 August, 2024

Contact us

Let's put you in touch with the right person.

We do our best to respond to all incoming queries within three business days. Our team is spread across the globe, so please be considerate and remember that the person you are contacting may not be in your timezone.

Please direct media requests and speaking invitations for Max Tegmark to press@futureoflife.org. All other inquiries can be sent to contact@futureoflife.org.