HAPPY NEW YEAR!

2016 Was More Successful Than Many Realize

Before we start 2017, we want to take a moment to look back at what a great year 2016 was.

Most people who are familiar with FLI know that we focus on existential risks, which, by definition, could destroy humanity as we know it. And we look especially closely at those risks relating to artificial intelligence, nuclear weapons, climate change, and biotechnology. But what isn’t always as well known is that we’re equally interested in the flip side of existential risks: existential hope.

Although just about everyone found something to dislike about 2016, from wars to politics to celebrity deaths, it turns out 2016 was also full of stories, activities, research, and breakthroughs that give us tremendous hope for the future.

On top of that, we at FLI are especially excited about some of our own accomplishments, as well as those of the organizations we work with. Max, Meia, Victoria, Anthony, Richard, Lucas, Dave, and Ariel sat down for an end-of-year podcast to highlight what we were most excited about in 2016 and why we’re looking forward to 2017.

If trends like these keep up, there’s plenty to look forward to in 2017!

Our Most Popular Apps and Informational Pages

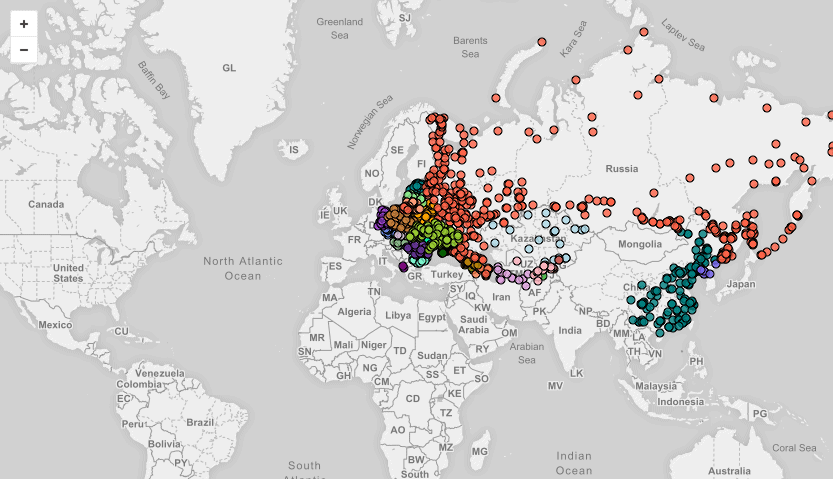

Declassified Nuclear Targets Map

This year, the National Security Archive published a declassified list of U.S. nuclear targets from 1956, which spanned 1,100 locations across Eastern Europe, Russia, China, and North Korea. The map here shows all 1,100 nuclear targets from that list, and we’ve partnered with NukeMap to demonstrate how catastrophic a nuclear exchange between the United States and Russia could be.

Why You Should Care About Nukes

Henry Reich with MinutePhysics and FLI’s Max Tegmark got together to produce an awesome and entertaining video about just how scary nuclear weapons are. They’re a lot scarier than most people realize, and there’s something concrete you can do about it!

Benefits and Risks of Artificial Intelligence

The concern about advanced AI isn’t malevolence but competence. A super-intelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we have a problem. Because AI has the potential to become more intelligent than any human, we have no surefire way of predicting how it will behave. We can’t use past technological developments as much of a basis because we’ve never created anything that has the ability to, wittingly or unwittingly, outsmart us.

Responsible Investing Made Easy

Divestment can play a powerful role in stigmatizing the nuclear arms race. If you want to put your money where your mouth is, enter your mutual funds in our app to see whether you’re inadvertently supporting nuclear weapons. (This is a work in progress, and a project we’re looking forward to building up in 2017.)

Open Letters

The Open Letters on Artificial Intelligence and Autonomous Weapons may have been published in 2015, but they continued to have an impact in 2016. In particular, the media referenced them often throughout the year, they helped AI safety become a more mainstream research topic, and the autonomous weapons letter was cited as a contributing factor to the recent United Nations decision to start discussions on an autonomous weapons convention.

We’ve updated our nuclear close-calls timeline to include new and more recent nuclear events and problems. We also added insights from FLI members about what each of us thinks is the scariest nuclear close call. Don’t forget to watch the video by the Union of Concerned Scientists at the bottom of the page to learn about hair-trigger alert, which makes an accidental nuclear war potentially more likely than an intentional one.

As part of his talk during the EA Global conference, Max Tegmark created a new feature on the site that clears up some of the common myths about advanced AI, so we can start focusing on the more interesting and important debates within the AI field.

Despite the end of the Cold War over two decades ago, humanity still has over 15,000 nuclear weapons. Some of these are hundreds of times more powerful than those that obliterated Hiroshima and Nagasaki, and they may be able to create a decade-long nuclear winter that could kill most people on Earth. Yet the superpowers plan to invest over a trillion dollars upgrading their nuclear arsenals.

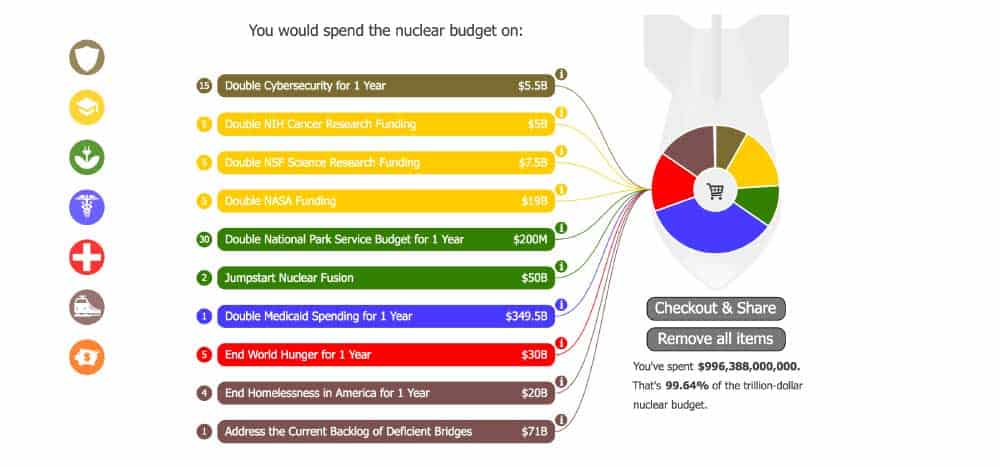

Trillion Dollar Nukes

How much are nuclear weapons really worth? Is upgrading the US nuclear arsenal worth $1 trillion – in the hopes of never using it – when that money could be used to improve lives, create jobs, decrease taxes, or pay off debts? How far can $1 trillion go if it’s not all spent on nukes?

Since the dawn of the nuclear age, each U.S. president has decided to grow or downsize our arsenal based on geopolitical threats, international treaties, and close calls where nuclear war nearly started by accident. As we look toward the end of Obama’s term, let’s consider his nuclear legacy (it’s probably scarier than you think!) with this infographic created in partnership with Futurism.com.

ICYMI: This Year’s Most Popular Articles

Progress in artificial intelligence and machine learning was impressive in 2015. Those in the field acknowledge progress is accelerating year by year, though it is still a manageable pace for us. The vast majority of work in the field these days actually builds on previous work done by other teams earlier the same year, in contrast to most other fields where references span decades.

By Ariel Conn

Value alignment. It’s a phrase that often pops up in discussions about the safety and ethics of artificial intelligence. How can scientists create AI with goals and values that align with those of the people it interacts with?

By Ariel Conn

Slate runs a feature called “Future Tense,” which claims to be the “citizen’s guide to the future.” But two of their articles this year were full of inaccuracies about AI safety and the researchers studying it. While this was disappointing, it also represented a good opportunity to clear up some misconceptions about why AI safety research is necessary.

By Ariel Conn

This year, DeepMind announced a major AI breakthrough: they’ve developed software that can defeat a professional human player at the game of Go. This is a feat that has long eluded computers. We asked AI experts what they thought of this achievement and why it took the AI community by surprise.

By Ariel Conn

When Apple released its software application, Siri, in 2011, iPhone users had high expectations for their intelligent personal assistants. Yet despite its impressive and growing capabilities, Siri often makes mistakes. The software’s imperfections highlight the clear limitations of current AI: today’s machine intelligence can’t understand the varied and changing needs and preferences of human life.

By Anthony Aguirre

It’s often said that the future is unpredictable. Of course, that’s not really true. We can predict next year’s U.S. GDP to within a few percent, what will happen if we jump off a bridge, the Earth’s mean temperature to within a degree, and many other things. Yet we’re often frustrated at our inability to predict better.

By Tucker Davey

As artificial intelligence improves, machines will soon be equipped with intellectual and practical capabilities that surpass the smartest humans. But not only will machines be more capable than people, they will also be able to make themselves better. That is, these machines will understand their own design and how to improve it – or they could create entirely new machines that are even more capable.

Artificial photosynthesis could be the energy source of the future, as a Berkeley scientist develops new methods to more effectively harness the energy of the sun.

By Ariel Conn

In 2015, CRISPR exploded onto the scene, earning recognition as the top scientific breakthrough of the year from Science magazine. But not only is the technology not slowing down, it appears to be speeding up. In just two months, ten major CRISPR developments grabbed headlines. More importantly, each of these developments could play a crucial role in steering the course of genetics research.

Our Most Popular Podcasts on SoundCloud and iTunes

Many researchers in the field of artificial intelligence worry about potential short-term consequences of AI development. Yet far fewer want to think about the long-term risks from more advanced AI. Why? We brought on Dario Amodei and Seth Baum to discuss just that.

By Ariel Conn

The UN voted to begin negotiations on a global nuclear weapons ban, but for now, nuclear weapons still jeopardize the existence of almost everyone on Earth. I recently sat down with meteorologist Alan Robock and physicist Brian Toon to discuss what is potentially the most devastating consequence of nuclear war: nuclear winter.

By Ariel Conn and Lucas Perry

Lucas and Ariel discuss the concepts of nuclear deterrence and hair-trigger alert, the potential consequences of nuclear war, and how individuals can do their part to lower the risks of nuclear catastrophe.

By Ariel Conn

This year, I had the good fortune to interview Robin Hanson about his new book, The Age of Em. We discussed his book, the future and evolution of humanity, and the research he’s doing for his next book. You can listen to all of that here.

By Ariel Conn

Heather Roff and Peter Asaro talk about their work to understand and define the role of autonomous weapons, the problems with autonomous weapons, and why the ethical issues surrounding autonomous weapons are so much more complicated than those surrounding other AI systems.

What We’ve Been Up to This Year

By Max Tegmark

Max Tegmark spoke at the event hosted by Yann LeCun, and many FLI members attended. The first day included many great quotes from Demis Hassabis, Eric Horvitz, John Kelly, Jen-Hsun Huang, Murray Shanahan, Eric Schmidt, and many others.

By Ariel Conn

The 30th annual Association for the Advancement of Artificial Intelligence (AAAI) conference kicked off on February 12 with two days of workshops, followed by the main conference. FLI is honored to have been a part of the AI, Ethics, and Safety Workshop that took place on Saturday, February 13.

By Lucas Perry

There are approximately 15,000 nuclear weapons on Earth, about 1,800 of which are on hair-trigger alert, ready to be launched within minutes. Yet rather than reduce this risk by trimming its excessive nuclear arsenal, the US plans to invest $1 trillion over the next 30 years in nuclear upgrades. We took up the challenge by helping our local city of Cambridge divest its $1 billion pension fund from nuclear weapons companies. We also participated in a nuclear weapons conference at MIT, where the divestment was announced.

Some of the greatest minds in science and policy also spoke at the conference, and their videos (including the divestment announcement) can all be seen on our YouTube channel.

White House Frontiers Conference

Richard Mallah attended the White House Frontiers Conference in Pittsburgh, which aimed to explore “new technologies, challenges, and goals that will continue to shape the 21st century and beyond.” The National track, which focused on artificial intelligence, covered the future of innovation, safety challenges, embedding the values we want in AI systems, and the economic impacts of AI.

By Ariel Conn

How can we more effectively make the world a better place? Over 1,000 concerned altruists converged at the Effective Altruism Global conference this year in Berkeley, CA to address this very question.

Since not everyone could make it to the conference, we put together some highlights of the event. Where possible, we’ve included links to videos of the talks and discussions.

By Victoria Krakovna

Victoria Krakovna attended a self-organizing conference at OpenAI. She writes: “There was a block for AI safety along with the other topics. The safety session became a broad introductory Q&A, moderated by Nate Soares, Jelena Luketina and me. Some topics that came up: value alignment, interpretability, adversarial examples, weaponization of AI.”

By Ariel Conn

Many FLI members recently attended the Ethics of AI conference held at NYU, including Max Tegmark and Meia Chita-Tegmark, who gave a joint talk. The event spanned two days and featured 26 speakers; you can read some of the highlights and main topics of the event here.

Other Events FLI Members Participated In

In addition to the main Effective Altruism conference, Victoria, Richard, and Lucas participated in EAGx Boston in April. Victoria also participated in EAGx Oxford in November, where she gave a talk about the story of FLI and sat on a panel with Demis Hassabis, Nate Soares, Toby Ord, and Murray Shanahan to discuss the long-term situation in AI.

Victoria also attended a conference at ASU on the Governance of Emerging Technologies in May, where she gave a talk and took part in a panel with Rao Kambhampati and Wendell Wallach. She was also part of the Cambridge Catastrophic Risk Conference in December (CSER’s first conference, which was very exciting), where she gave a talk and was on a panel with Toby Walsh and Sean O’hEigeartaigh. Over the summer, Victoria attended the Deep Learning Summer School and wrote a post about the highlights of the event.

During the late spring and summer, Richard participated in:

Data Revolution: How AI and Machine Learning Are Remaking Our World

Symposium on Ethics of Autonomous Systems (SEAS Europe)

Conversations on Ethical and Social Implications of Artificial Intelligence (An IEEE TechEthics™ Event)

22nd European Conference on Artificial Intelligence

FLI also helped co-organize and sponsor a couple of AI safety workshops at major ML conferences: Reliable Machine Learning in the Wild at ICML and Interpretable Machine Learning for Complex Systems at NIPS.

FLI’s Volunteer of the Year

We’d like to extend a special thank you to David Stanley for his phenomenal work this year helping to oversee and organize all FLI volunteers.

David Stanley

David is a postdoctoral researcher at Boston University specializing in computational neuroscience. He has published research on deep neural networks, neural network dynamics, and neurological disorders. David has a long-standing interest in the intersection of artificial intelligence and neuroscience. His objectives are to apply the next generation of data analysis techniques to understanding brain function, and to ensure that related neural technology is used ethically.

This year, David oversaw numerous volunteers who clocked over 300 hours of volunteer work. He helped get nine of our most popular pages translated into eight languages, he’s been working to expand our website and social media outreach to many other countries, and he played an integral role in helping to get our Trillion Dollar Nukes app developed. We look forward to working with him even more in 2017.