Our work

An overview of the types of work we do and all of our current and past projects.

Work areas

Our areas of work

We work on projects across a few distinct areas:

Policy and Research

We engage in policy advocacy and research across the United States, the European Union and around the world.

Our Policy & Research work

Futures

We work on projects which aim to guide humanity towards the beneficial outcomes made possible by transformative technologies.

Our Futures work

Outreach

We produce educational materials aimed at informing public discourse, as well as encouraging people to get involved.

Our Outreach work

Grantmaking

We provide grants to individuals and organisations working on projects that further our mission.

Our Grant Programs

Events

We convene leaders of the relevant fields to discuss ways of ensuring the safe development and use of powerful technologies.

Our Events

Our achievements

Our most important contributions

Here are a few of our proudest achievements:

Hosted the first AI Safety conferences

We were the first to convene leading figures in the field of AI to discuss our concerns about potential safety risks of the emerging technology.

View our events

Created the first AI Safety grant program

From 2015 to 2017, we ran the first ever grant program dedicated to funding AI Safety projects. We currently offer a range of grant opportunities for projects that further our mission.

View our grants

Developed the Asilomar AI Principles

In 2017, FLI coordinated the development of the Asilomar AI Principles, one of the earliest and most influential sets of AI governance principles.

View the principles

Celebrated 18 unsung heroes with Future of Life Awards

Every year since 2017, the Future of Life Award has celebrated the contributions of people who helped preserve the prospects of life.

See the award

Produced a viral video series raising the alarm on lethal autonomous weapons

We produced two short films, with a combined 75+ million views, depicting a world in which lethal autonomous weapons have been allowed to proliferate.

Watch the videos

AI recommendation in the UN digital cooperation roadmap

Our recommendations on the global governance of AI technologies were adopted in the UN Secretary-General's digital cooperation roadmap.

View the roadmap

Projects

What we're working on

Here is an overview of all the projects we are working on right now:

Control Inversion

Why the superintelligent AI agents we are racing to create would absorb power, not grant it | The latest study from Anthony Aguirre.

Statement on Superintelligence

A stunningly broad coalition has come out against unsafe superintelligence: AI researchers, faith leaders, business pioneers, policymakers, national security staff, and actors stand together.

Creative Contest: Keep The Future Human

$100,000+ in prizes for creative digital media that engages with the essay's key ideas, helps them to reach a wider range of people, and motivates action in the real world.

AI Existential Safety Community

A community of faculty and AI researchers dedicated to ensuring AI is developed safely. Members are invited to attend meetings, participate in an online community, and apply for travel support.

Fellowships

Since 2021 we have offered PhD and Postdoctoral fellowships in Technical AI Existential Safety. In 2024, we launched a PhD fellowship in US-China AI Governance.

RFPs, Contests, and Collaborations

Requests for Proposals (RFPs), public contests, and collaborative grants in direct support of FLI internal projects and initiatives.

Envisioning Positive Futures with Technology

Storytelling has a significant impact on informing people's beliefs and ideas about humanity's potential future with technology. While there are many narratives warning of dystopia, positive visions of the future are in short supply. We seek to incentivize the creation of plausible, aspirational, hopeful visions of a future we want to steer towards.

Perspectives of Traditional Religions on Positive AI Futures

Most of the global population participates in a traditional religion. Yet the perspectives of these religions are largely absent from strategic AI discussions. This initiative aims to support religious groups in voicing their faith-specific concerns and hopes for a world with AI, and to work with them to resist the harms and realise the benefits.

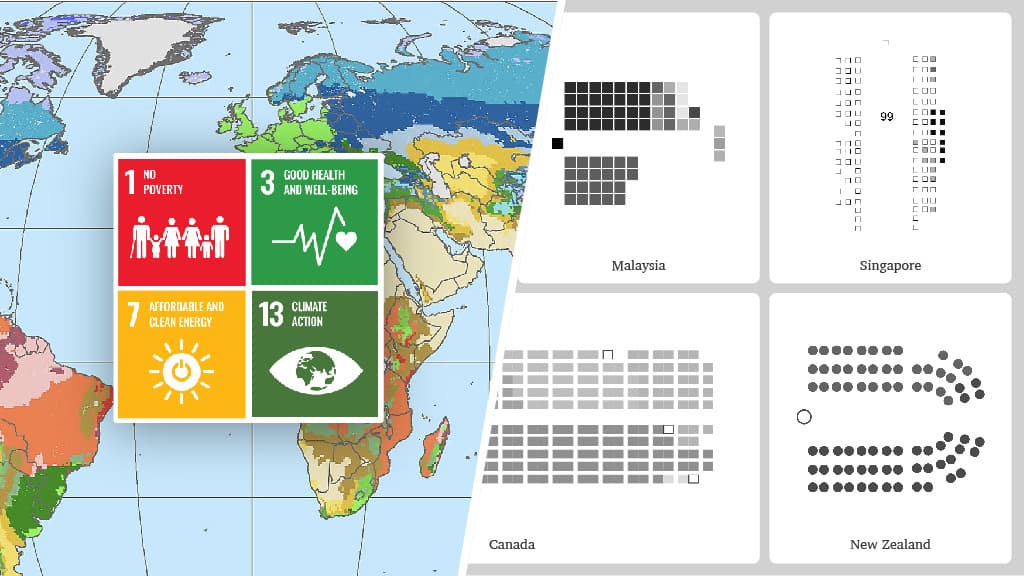

Promoting a Global AI Agreement

We need international coordination so that AI's benefits reach across the globe, not just concentrate in a few places. The risks of advanced AI won't stay within borders, but will spread globally and affect everyone. We should work towards an international governance framework that prevents the concentration of benefits in a few places and mitigates global risks of advanced AI.

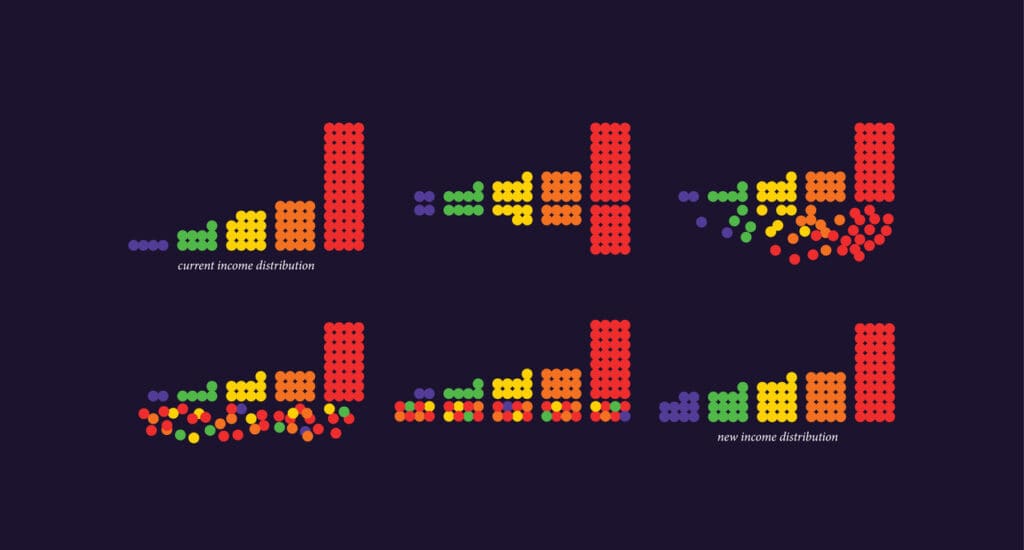

AI’s Role in Reshaping Power Distribution

Advanced AI systems are set to reshape the economy and power structures in society. They offer enormous potential for progress and innovation, but also pose risks of concentrated control, unprecedented inequality, and disempowerment. To ensure AI serves the public good, we must build resilient institutions, competitive markets, and systems that widely share the benefits.

Building Resilient Futures

An age of rapid change requires resilient systems. By developing robust tools, technologies, and institutions, we work to solve humanity's critical challenges while strengthening society's foundations. Our mission is to ensure technological advancement creates stability and flourishing rather than disruption.

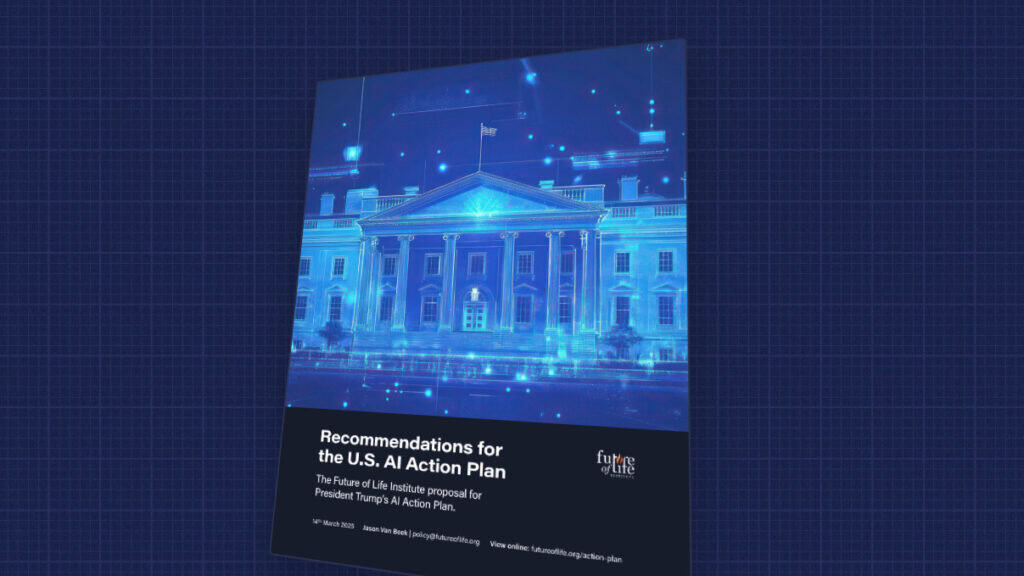

Recommendations for the U.S. AI Action Plan

The Future of Life Institute's proposal for President Trump’s AI Action Plan. Our recommendations aim to protect the presidency from AI loss-of-control, promote the development of AI systems free from ideological or social agendas, protect American workers from job loss and replacement, and more.

Multistakeholder Engagement for Safe and Prosperous AI

FLI is launching new grants to educate and engage stakeholder groups, as well as the general public, in the movement for safe, secure and beneficial AI.

Digital Media Accelerator

The Digital Media Accelerator supports digital content from creators raising awareness and understanding about ongoing AI developments and issues.

Keep The Future Human

Why and how we should close the gates to AGI and superintelligence, and what we should build instead | A new essay by Anthony Aguirre, Executive Director of FLI.

FLI AI Safety Index: Winter 2025 Edition

Eight AI and governance experts evaluate the safety practices of leading general-purpose AI companies.

AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

The dual-use nature of AI systems can amplify the dual-use nature of other technologies, a phenomenon known as AI convergence. We provide policy expertise to policymakers in the United States in three key convergence areas: biological, nuclear, and cyber.

Superintelligence Imagined Creative Contest

A contest for the best creative educational materials on superintelligence, its associated risks, and the implications of this technology for our world. 5 prizes at $10,000 each.

AI Safety Summits

Governments are exploring collaboration on navigating a world with advanced AI. FLI provides them with advice and support.

The Elders Letter on Existential Threats

The Elders, the Future of Life Institute and a diverse range of preeminent public figures are calling on world leaders to urgently address the ongoing harms and escalating risks of the climate crisis, pandemics, nuclear weapons, and ungoverned AI.

Implementing the European AI Act

Our key recommendations include broadening the Act’s scope to regulate general purpose systems and extending the definition of prohibited manipulation to include any type of manipulative technique, as well as manipulation that causes societal harm.

Combatting Deepfakes

As part of a growing coalition of concerned organizations, FLI is calling on lawmakers to take meaningful steps to disrupt the AI-driven deepfake supply chain.

Educating about Autonomous Weapons

Military AI applications are rapidly expanding. We develop educational materials about how certain narrow classes of AI-powered weapons can harm national security and destabilize civilization, notably weapons where kill decisions are fully delegated to algorithms.

Global AI governance at the UN

Our involvement with the UN's work spans several years and initiatives, including the Roadmap for Digital Cooperation and the Global Digital Compact (GDC).

Realising Aspirational Futures – New FLI Grants Opportunities

We are opening two new funding opportunities to support research into the ways that artificial intelligence can be harnessed safely to make the world a better place.

The Windfall Trust

The Windfall Trust aims to alleviate the economic impact of AI-driven joblessness by building a global, universally accessible social safety net.

Imagine A World Podcast

Can you imagine a world in 2045 where we manage to avoid the climate crisis, major wars, and the potential harms of artificial intelligence? Our new podcast series explores ways we could build a more positive future, and offers thought-provoking ideas for how we might get there.

Artificial Escalation

Our fictional film depicts a world where artificial intelligence ('AI') is integrated into nuclear command, control and communications systems ('NC3') with terrifying results.

Worldbuilding Competition

The Future of Life Institute accepted entries from teams across the globe competing for a prize purse of up to $100,000 by designing visions of a plausible, aspirational future that includes strong artificial intelligence.

Future of Life Award

Every year, the Future of Life Award is given to one or more unsung heroes who have made a significant contribution to preserving the future of life.

Future of Life Institute Podcast

A podcast dedicated to hosting conversations with some of the world's leading thinkers and doers in the field of emerging technology and risk reduction. 140+ episodes since 2015, 4.8/5 stars on Apple Podcasts.

Related pages

Were you looking for something else?

Here are a few other pages you might have been looking for:

Our mission

Read about our mission and our core principles.

Take action

No matter your level of experience or seniority, there is something you can do to help us ensure the future of life is positive.

Events work

We convene leaders of the relevant fields to discuss ways of ensuring the safe development and use of powerful technologies.