Beneficial AGI 2019

After our Puerto Rico AI conference in 2015 and our Asilomar Beneficial AI conference in 2017, we returned to Puerto Rico at the start of 2019 to talk about Beneficial AGI. We couldn’t be more excited to see all of the groups, organizations, conferences and workshops that have cropped up in the last few years to ensure that AI today and in the near future will be safe and beneficial. This time, we wanted to look further ahead to artificial general intelligence (AGI), the classic goal of AI research, which promises tremendous transformation in society. Beyond mitigating risks, we want to explore how we can design AGI to help us create the best future for humanity.

We again brought together an amazing group of AI researchers from academia and industry, as well as thought leaders in economics, law, policy, ethics, and philosophy, for five days dedicated to beneficial AI. We hosted a two-day technical workshop to look more deeply at how we can create beneficial AGI, and we followed that with a 2.5-day conference, in which people from a broader AI background considered the opportunities and challenges related to the future of AGI and the steps we can take today to move toward an even better future. Honoring the FrieNDA for the conference, we have posted below only those videos and slides that the speaker approved. (See the high-res group photo here.)

Conference Schedule

Friday, January 4

18:00 Early drinks and appetizers

19:00 Conference welcome reception & buffet dinner

20:00 Poster session

Saturday, January 5

Session 1 — Overview Talks

9:00 Welcome & summary of conference plan | FLI Team

Destination:

9:05 Framing the Challenge | Max Tegmark (FLI/MIT)

9:15 Alternative destinations that are popular with participants. What do you prefer?

Technical Safety:

10:00 Provably beneficial AI | Stuart Russell (Berkeley/CHAI) (pdf) (video)

10:30 Technical workshop summary | David Krueger (MILA) (pdf) (video)

10:50 Coffee break

11:00 Strategy/Coordination:

AI strategy, policy, and governance | Allan Dafoe (FHI/GovAI) (pdf) (video)

Towards a global community of shared future in AGI | Brian Tse (FHI) (pdf) (video)

Strategy workshop summary | Jessica Cussins (FLI) (pdf) (video)

12:00 Lunch

13:00 Optional breakouts & free time

Session 2 — Destination | Session Chair: Max Tegmark

16:00 Talk: Summary of discussions and polls about long-term goals, controversies

Panels on the controversies where the participant survey revealed the greatest disagreements:

16:10 Panel: Should we build superintelligence? (video)

Tiejun Huang (Peking)

Tanya Singh (FHI)

Catherine Olsson (Google Brain)

John Havens (IEEE)

Moderator: Joi Ito (MIT)

16:30 Panel: What should happen to humans? (video)

José Hernandez-Orallo (CFI)

Daniel Hernandez (OpenAI)

De Kai (ICSI)

Francesca Rossi (CSER)

Moderator: Carla Gomes (Cornell)

16:50 Panel: Who or what should be in control? (video)

Gaia Dempsey (7th Future)

El Mahdi El Mhamdi (EPFL)

Dorsa Sadigh (Stanford)

Moderator: Meia Chita-Tegmark (FLI)

17:10 Panel: Would we prefer AGI to be conscious? (video)

Bart Selman (Cornell)

Hiroshi Yamakawa (Dwango AI)

Helen Toner (GovAI)

Moderator: Andrew Serazin (Templeton)

17:30 Panel: What goal should civilization strive for? (video)

Joshua Greene (Harvard)

Nick Bostrom (FHI)

Amanda Askell (OpenAI)

Moderator: Lucas Perry (FLI)

18:00 Coffee & snacks

18:20 Panel: If AGI eventually makes all humans unemployable, then how can people be provided with the income, influence, friends, purpose, and meaning that jobs help provide today? (video)

Gillian Hadfield (CHAI/Toronto)

Reid Hoffman (LinkedIn)

James Manyika (McKinsey)

Moderator: Erik Brynjolfsson (MIT)

19:00 Dinner

20:00 Lightning talks

Sunday, January 6

Session 3 — Technical Safety | Session Chair: Victoria Krakovna

Paths to AGI:

9:00 Talk: Challenges towards AGI | Yoshua Bengio (MILA) (pdf) (video)

9:30 Debate: Possible paths to AGI (video)

Yoshua Bengio (MILA)

Irina Higgins (DeepMind)

Nick Bostrom (FHI)

Yi Zeng (Chinese Academy of Sciences)

Moderator: Joshua Tenenbaum (MIT)

10:10 Coffee break

Safety research:

10:25 Talk: AGI safety research agendas | Rohin Shah (Berkeley/CHAI) (pdf) (video)

10:55 Panel: What are the key AGI safety research priorities? (video)

Scott Garrabrant (MIRI)

Daniel Ziegler (OpenAI)

Anca Dragan (Berkeley/CHAI)

Eric Drexler (FHI)

Moderator: Ramana Kumar (DeepMind)

11:30 Debate: Synergies vs. tradeoffs between near-term and long-term AI safety efforts (video)

Neil Rabinowitz (DeepMind)

Catherine Olsson (Google Brain)

Nate Soares (MIRI)

Owain Evans (FHI)

Moderator: Jelena Luketina (Oxford)

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee, snacks, & free time

Session 4 — Strategy & Governance | Session Chair: Anthony Aguirre

16:00 Talk: AGI emergence scenarios, and their relation to destination issues | Anthony Aguirre (FLI)

16:10 Debate: 4-way friendly debate – what would be the best scenario for AGI emergence and early use?

Helen Toner (FHI)

Seth Baum (GCRI)

Peter Eckersley (Partnership on AI)

Miles Brundage (OpenAI)

Moderator: Anthony Aguirre (FLI)

16:50 Talk: AGI – Racing and cooperating | Seán Ó hÉigeartaigh (CSER) (pdf) (video)

17:10 Panel: Where are opportunities for cooperation at the governance level? Can we identify globally shared goals? (video)

Jason Matheny (IARPA)

Charlotte Stix (CFI)

Cyrus Hodes (Future Society)

Bing Song (Berggruen)

Moderator: Danit Gal (Keio)

17:40 Coffee break

17:55 Panel: Where are opportunities for cooperation at the academic & corporate level? (video)

Jason Si (Tencent)

Francesca Rossi (CSER)

Andrey Ustyuzhanin (Higher School of Economics)

Martina Kunz (CFI/CSER)

Teddy Collins (DeepMind)

Moderator: Brian Tse (FHI)

18:25 Talks: Lightning support pitches

19:00 Banquet

20:00 Poster session

Monday, January 7

Session 5 — Action items

9:00 Group discussion and analysis of conference

9:45 Governance panel: What laws, regulations, institutions, and agreements are needed? (video)

Wendell Wallach (Yale)

Nicolas Miailhe (Future Society)

Helen Toner (FHI)

Jeff Cao (Tencent)

Moderator: Tim Hwang (Google)

10:15 Coffee break

10:30 Technical safety student panel: What does the next generation of researchers think that the action items should be? (video)

Jaime Fisac (Berkeley/CHAI)

William Saunders (Toronto)

Smitha Milli (Berkeley/CHAI)

El Mahdi El Mhamdi (EPFL)

Moderator: Dylan Hadfield-Menell (Berkeley/CHAI)

11:10 Prioritization: Everyone e-votes on how to rank and prioritize action items

11:30 Closing remarks

Towards a global community of shared future in AGI | Brian Tse

Strategy workshop summary | Jessica Cussins

Panel: Should we build superintelligence?

Lightning talk | Francesca Rossi

Challenges towards AGI | Yoshua Bengio

Challenges towards AGI | Yoshua Bengio

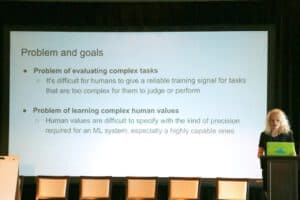

AGI safety research agendas | Rohin Shah

AGI emergence scenarios, and their relation to destination issues | Anthony Aguirre

Panel: Where are opportunities for cooperation at the governance level?

Panel: Where are opportunities for cooperation at the academic & corporate level?

Governance Panel: What laws, regulations, institutions, and agreements are needed?

Workshop Schedule

Wednesday, January 2

18:00 Workshop welcome reception, 3-word introductions

Thursday, January 3

9:00 Welcome & summary of workshop plan | FLI Team

Destination Session | Session Chair: Max Tegmark

9:15 Talk: Framing, breakout task assignment

9:30 Breakouts: Parallel group brainstorming sessions

10:45 Coffee break

11:00 Discussion: Destination group reports, debate between panel rapporteurs

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee, snacks, & free time

Technical Safety Session | Session Chair: Victoria Krakovna

16:00 Opening remarks

Talks about latest progress on human-in-the-loop approaches to AI safety

16:05 Talk: Scalable agent alignment | Jan Leike (DeepMind) (pdf)

16:20 Talk: Iterated amplification and debate | Amanda Askell (OpenAI) (pdf)

16:35 Talk: Cooperative inverse reinforcement learning | Dylan Hadfield-Menell (Berkeley/CHAI)

16:50 Talk: The comprehensive AI services framework | Eric Drexler (FHI) (pdf)

17:05 Coffee break

Talks about latest progress on theory approaches to AI safety

17:25 Talk: Embedded agency | Scott Garrabrant (MIRI) (pdf)

17:40 Talk: Measuring side effects | Victoria Krakovna (DeepMind) (pdf)

17:55 Talk: Verification/security/containment | Ramana Kumar (DeepMind) (pdf)

18:10 Coffee break

18:30 Debate: Will future AGI systems be optimizing a single long-term goal?

Rohin Shah (Berkeley/CHAI)

Peter Eckersley (Partnership on AI)

Anna Salamon (CFAR)

Moderator: David Krueger (MILA)

19:00 Dinner

20:30 Destination groups finish their work as needed

Friday, January 4

Strategy Session | Session Chair: Anthony Aguirre

9:00 Welcome back & framing of strategy challenge

9:10 Talk: Exploring AGI scenarios | Shahar Avin (FHI) (pdf)

9:40 Forecasting: What scenario ingredients are most likely and why? What goes into answering this?

Jade Leung (FHI)

Danny Hernandez (OpenAI)

Gillian Hadfield (CHAI/Toronto)

Malo Bourgon (MIRI)

Moderator: Shahar Avin (FHI)

10:10 Coffee break

10:20 Scenario breakouts

11:20 Reports: Scenario report-backs & discussion panel(s), assignments to action item panels

12:00 Lunch

13:00 Optional breakouts & free time

15:00 Coffee & free time

Action Item Session

15:45 Panel: Destination synthesis – what did all groups agree on, and what are the key controversies worth clarifying? Action items?

Tegan Maharaj (MILA)

Alex Zhu (MIRI)

16:15 Panel: What might the unknown unknowns in the space of AI safety problems look like? How can we broaden our research agendas to capture them?

Jan Leike (DeepMind)

Victoria Krakovna (DeepMind)

Richard Mallah (FLI)

Moderator: Andrew Critch (Berkeley/CHAI)

16:45 Coffee break

17:00 Action items: What institutions, platforms, & organizations do we need?

Jaan Tallinn (CSER/FLI)

Tanya Singh (FHI)

Alex Zhu (MIRI)

Moderator: Allison Duettmann (Foresight)

17:30 Action items: What standards, laws, regulations, & agreements do we need?

Jessica Cussins (FLI)

Teddy Collins (DeepMind)

Gillian Hadfield (CHAI/Toronto)

Moderator: Allan Dafoe (FHI/GovAI)

18:00 Conference welcome reception & buffet dinner

20:00 Poster session

There are no videos from the workshop portion of the conference.

Iterated amplification and debate | Amanda Askell

Measuring side effects | Victoria Krakovna

Exploring AGI scenarios | Shahar Avin

Forecasting: What scenario ingredients are most likely and why?

Action items: What institutions, platforms, & organizations do we need?

Organizers

Anthony Aguirre, Meia Chita-Tegmark, Ariel Conn, Tucker Davey, Victoria Krakovna, Richard Mallah, Lucas Perry, Lucas Sabor, Max Tegmark

Participants

Bios for the attendees and participants can be found here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
