Fighting for a human future.

AI is poised to remake the world.

Help us ensure it benefits all of us.

Recent updates from us

Including: Davos 2026 highlights (and disappointments); ChatGPT ads; Doomsday Clock update; Trump voters want AI regulation; and more.

2 February, 2026

Including: our Winter 2025 AI Safety Index; NY's new AI safety law; White House AI Executive Order; results from our Keep the Future Human contest; and more!

31 December, 2025

Plus: The first reported case of AI-enabled spying; why superintelligence wouldn't be controllable; takeaways from WebSummit; and more.

1 December, 2025

Hear from us every month

Join 40,000+ other newsletter subscribers for monthly updates on the work we’re doing to safeguard our shared futures.

Our Mission

Steering transformative technology towards benefiting life and away from extreme large-scale risks.

We believe that the way powerful technology is developed and used will be the most important factor in determining the prospects for the future of life. This is why we have made it our mission to ensure that technology continues to improve those prospects.

Learn more

Focus Areas

Artificial Intelligence

AI can be an incredible tool that solves real problems and accelerates human flourishing, or a runaway, uncontrollable force that destabilizes society, disempowers most people, enables terrorism, and replaces us.

Biotechnology

Advances in biotechnology can revolutionize medicine, manufacturing, and agriculture, but without proper safeguards, they also raise the risk of engineered pandemics and novel biological weapons.

Nuclear Weapons

Peaceful use of nuclear technology can help power a sustainable future, but nuclear weapons risk mass catastrophe, escalation of conflict, nuclear winter, global famine, and state collapse.

Featured projects

Read about some of our current featured projects:

Recently announced

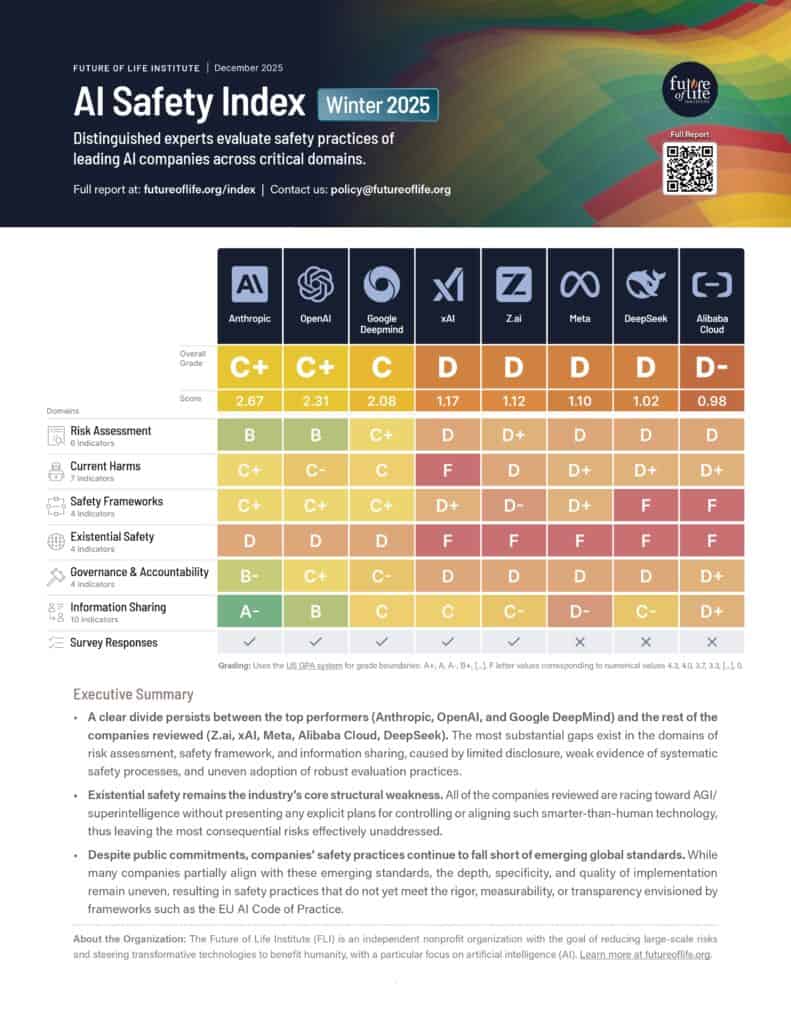

FLI AI Safety Index: Winter 2025 Edition

Eight AI and governance experts evaluate the safety practices of leading general-purpose AI companies.

Policy & Research

Promoting a Global AI Agreement

We need international coordination so that AI's benefits reach across the globe rather than concentrating in a few places. The risks of advanced AI will not stay within borders; they will spread globally and affect everyone. We should work towards an international governance framework that prevents such concentration and mitigates the global risks of advanced AI.

Recommendations for the U.S. AI Action Plan

The Future of Life Institute's proposal for President Trump’s AI Action Plan. Our recommendations aim to protect the presidency from AI loss-of-control, promote the development of AI systems free from ideological or social agendas, protect American workers from job loss and replacement, and more.

AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

The dual-use nature of AI systems can amplify the dual-use nature of other technologies—this is known as AI convergence. We provide policy expertise to policymakers in the United States in three key convergence areas: biological, nuclear, and cyber.

Futures

AI’s Role in Reshaping Power Distribution

Advanced AI systems are set to reshape the economy and power structures in society. They offer enormous potential for progress and innovation, but also pose risks of concentrated control, unprecedented inequality, and disempowerment. To ensure AI serves the public good, we must build resilient institutions, competitive markets, and systems that widely share the benefits.

Envisioning Positive Futures with Technology

Storytelling has a significant impact on informing people's beliefs and ideas about humanity's potential future with technology. While there are many narratives warning of dystopia, positive visions of the future are in short supply. We seek to incentivize the creation of plausible, aspirational, hopeful visions of a future we want to steer towards.

Perspectives of Traditional Religions on Positive AI Futures

Most of the global population participates in a traditional religion. Yet the perspectives of these religions are largely absent from strategic AI discussions. This initiative aims to support religious groups in voicing their faith-specific concerns and hopes for a world with AI, and to work with them to resist the harms and realise the benefits.

Communications

Control Inversion

Why the superintelligent AI agents we are racing to create would absorb power, not grant it | The latest study from Anthony Aguirre.

Digital Media Accelerator

The Digital Media Accelerator supports digital content from creators raising awareness and understanding about ongoing AI developments and issues.

Keep The Future Human

Why and how we should close the gates to AGI and superintelligence, and what we should build instead | A new essay by Anthony Aguirre, Executive Director of FLI.

Grantmaking

AI Existential Safety Community

A community of faculty and AI researchers dedicated to ensuring AI is developed safely. Members are invited to attend meetings, participate in an online community, and apply for travel support.

Fellowships

Since 2021 we have offered PhD and Postdoctoral fellowships in Technical AI Existential Safety. In 2024, we launched a PhD fellowship in US-China AI Governance.

RFPs, Contests, and Collaborations

Requests for Proposals (RFPs), public contests, and collaborative grants in direct support of FLI internal projects and initiatives.

Newsletter

Regular updates about the technologies shaping our world

Every month, we bring 40,000+ subscribers the latest news on how emerging technologies are transforming our world. It includes a summary of major developments in our focus areas, and key updates on the work we do.

Subscribe to our newsletter to receive these highlights at the end of each month.

Recent editions

Including: Davos 2026 highlights (and disappointments); ChatGPT ads; Doomsday Clock update; Trump voters want AI regulation; and more.

2 February, 2026

Including: our Winter 2025 AI Safety Index; NY's new AI safety law; White House AI Executive Order; results from our Keep the Future Human contest; and more!

31 December, 2025

Plus: The first reported case of AI-enabled spying; why superintelligence wouldn't be controllable; takeaways from WebSummit; and more.

1 December, 2025

Plus: Final call for PhD fellowships and Creative Contest; new California AI laws; FLI is hiring; can AI truly be creative?; and more.

1 November, 2025

Latest content

The most recent content we have published:

Featured content

We must not build AI to replace humans.

A new essay by Anthony Aguirre, Executive Director of the Future of Life Institute

Humanity is on the brink of developing artificial general intelligence that exceeds our own. It's time to close the gates on AGI and superintelligence... before we lose control of our future.

Read the essay ->

Posts

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI

Three‑quarters of U.S. adults want strong regulations on AI development, preferring oversight akin to pharmaceuticals rather than industry "self‑regulation."

19 October, 2025

Michael Kleinman reacts to breakthrough AI safety legislation

FLI celebrates a landmark moment for the AI safety movement and highlights its growing momentum

Are we close to an intelligence explosion?

AIs are inching ever closer to a critical threshold. Beyond this threshold lie great risks, but crossing it is not inevitable.

21 March, 2025

The Impact of AI in Education: Navigating the Imminent Future

What must be considered to build a safe but effective future for AI in education, and for children to be safe online?

13 February, 2025

Podcasts

Available on all podcast platforms:

20 February, 2026

Can AI Do Our Alignment Homework? (with Ryan Kidd)

6 February, 2026

How to Rebuild the Social Contract After AGI (with Deric Cheng)

27 January, 2026

How AI Can Help Humanity Reason Better (with Oly Sourbut)

20 January, 2026

Papers

Use your voice

Protect what's human.

Big Tech is racing to build increasingly powerful and uncontrollable AI systems designed to replace humans. You have the power to do something about it.

Take action today to protect our future:

Take Action ->

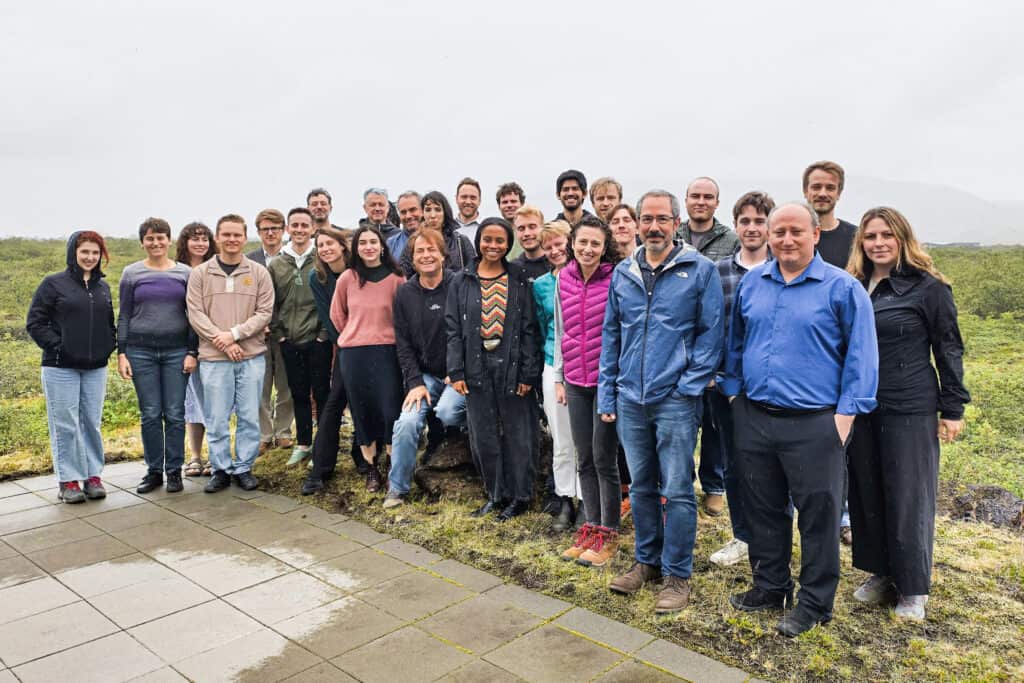

Our people

A team committed to the future of life.

Our staff represents a diverse range of expertise, having worked in academia, government, and industry. Their backgrounds range from machine learning to medicine and everything in between.

Meet our team

Our History

We’ve been working to safeguard humanity’s future since 2014.

Learn about FLI’s work and achievements since its founding, including historic conferences, grant programs, and open letters that have shaped the course of technology.