Superintelligence survey

The Future of AI – What Do You Think?

Max Tegmark’s new book on artificial intelligence, Life 3.0: Being Human in the Age of Artificial Intelligence, explores how AI will impact life as it grows increasingly advanced, perhaps even achieving superintelligence far beyond human level in all areas. For the book, Max surveys experts’ forecasts, and explores a broad spectrum of views on what will/should happen. But it’s time to expand the conversation. If we’re going to create a future that benefits as many people as possible, we need to include as many voices as possible. And that includes yours! Below are the answers from the first 14,866 people who have taken the survey that goes along with Max’s book. To join the conversation yourself, please take the survey here.

How soon, and should we welcome or fear it?

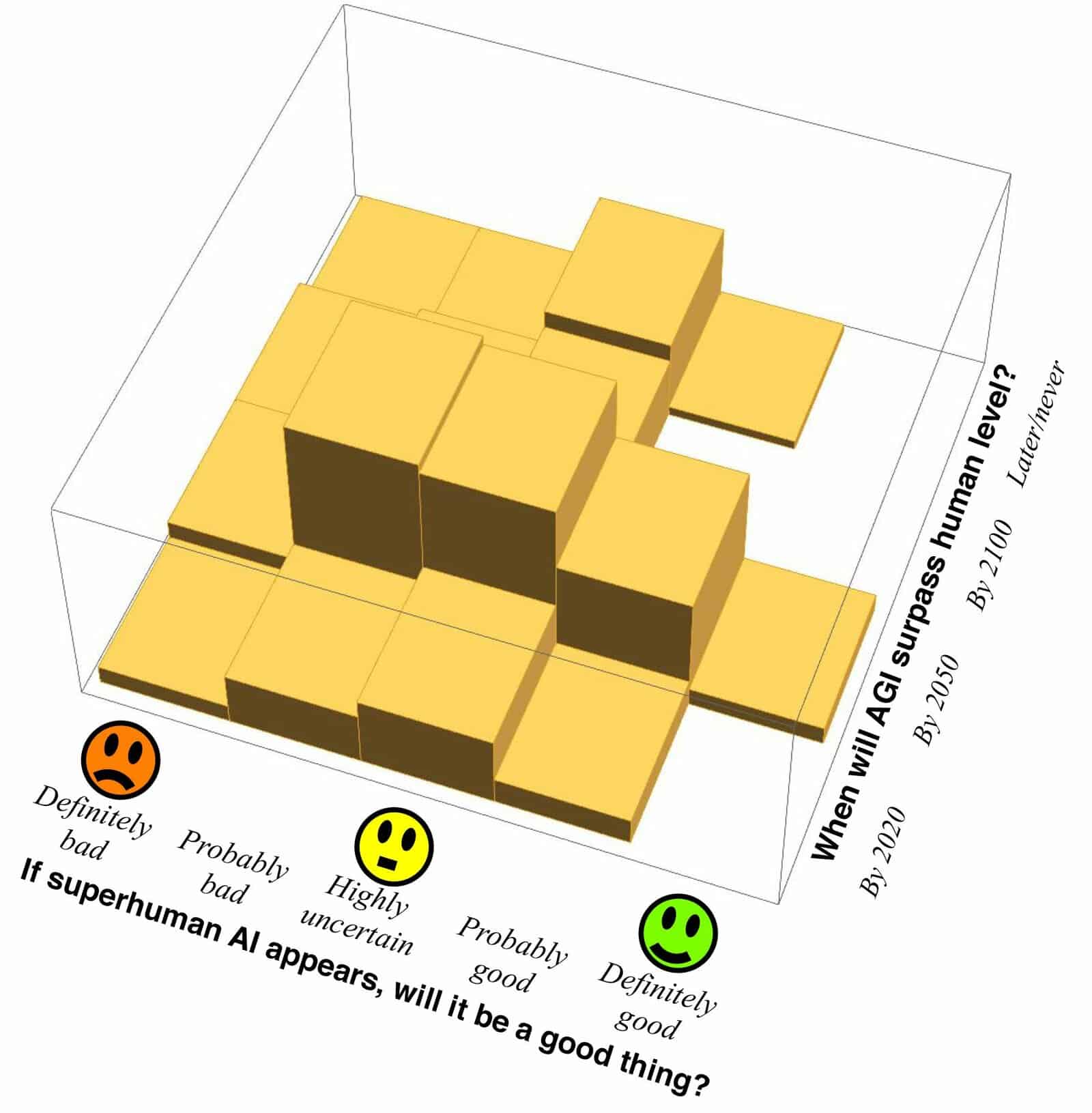

The first big controversy, dividing even leading AI researchers, involves forecasting what will happen. When, if ever, will AI outperform humans at all intellectual tasks, and will it be a good thing?

Do you want superintelligence?

Everything we love about civilization is arguably the product of intelligence, so we can potentially do even better by amplifying human intelligence with machine intelligence. But some worry that superintelligent machines would end up controlling us and wonder whether their goals would be aligned with ours. Do you want there to be superintelligent AI, i.e., general intelligence far beyond human level?

What Should the Future Look Like?

In his book, Tegmark argues that we shouldn’t passively ask “what will happen?” as if the future were predetermined, but should instead ask what we want to happen and then try to create that future. What sort of future do you want?

If superintelligence arrives, who should be in control?

If you one day get an AI helper, do you want it to be conscious, i.e., to have subjective experience (as opposed to being like a zombie which can at best pretend to be conscious)?

What should a future civilization strive for?

Do you want life spreading into the cosmos?

The Ideal Society?

In Life 3.0, Max explores 12 possible future scenarios, describing what might happen in the coming millennia if superintelligence is/isn’t developed. You can find a cheatsheet that quickly describes each here, but for a more detailed look at the positives and negatives of each possibility, check out chapter 5 of the book. Here’s a breakdown so far of the options people prefer:

You can learn a lot more about these possible future scenarios — along with fun explanations about what AI is, how it works, how it’s impacting us today, and what else the future might bring — when you order Max’s new book.

The results above will be updated regularly. Please add your voice by taking the survey here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
