AI Safety Index

Summer 2025

Max Tegmark on the AI Safety Index

Key findings

Independent review panel

Indicators overview

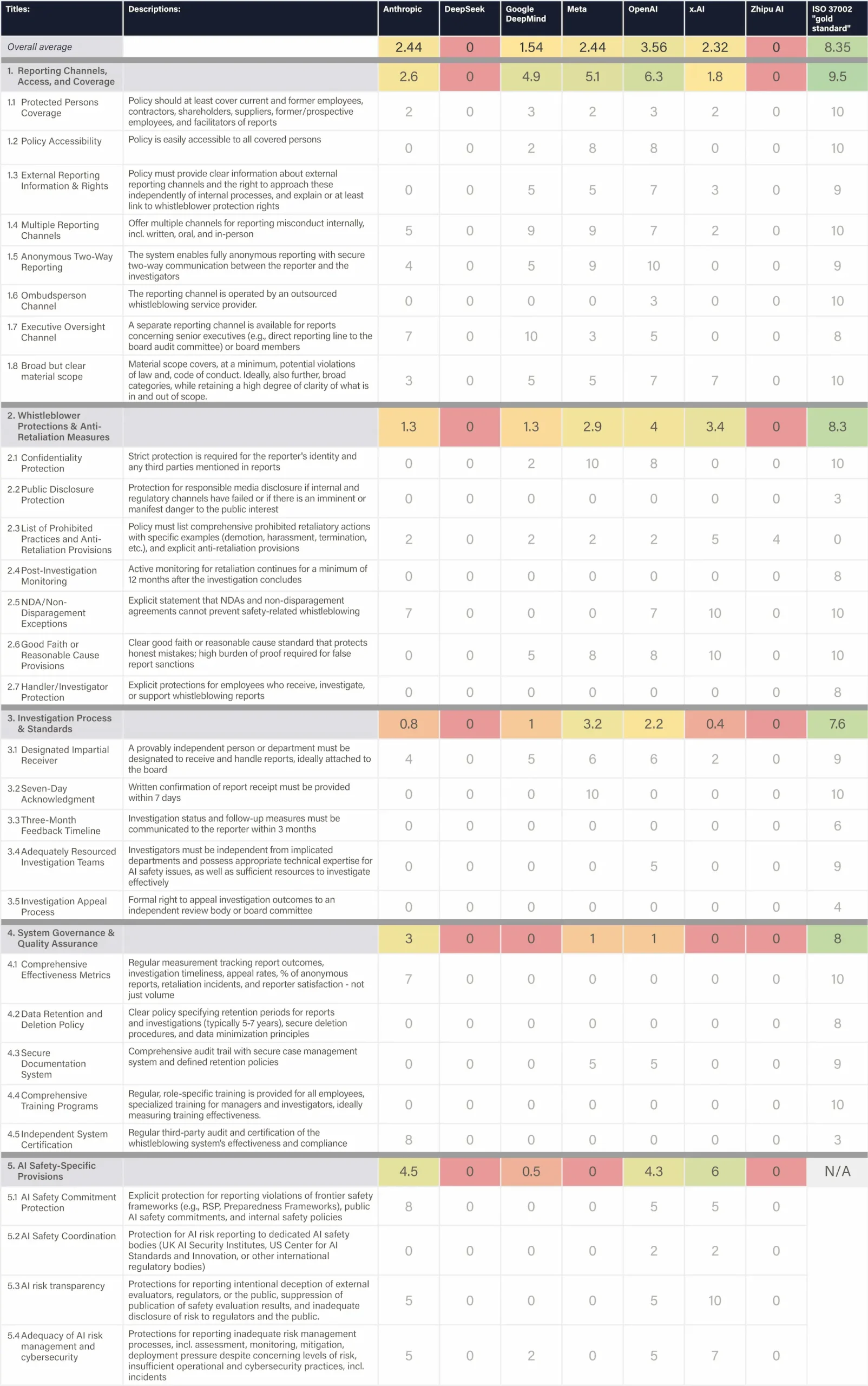

Improvement opportunities by company

- Publish a full whistleblowing policy to match OpenAI’s transparency standard.

- Become more transparent and explicit about risk assessment methodology, e.g. why and how exactly a particular evaluation relates to a risk or class of risks. Include reasoning in model cards that explicitly links evaluations or experimental procedures to specific risks, with limitations and qualifications.

- Rebuild lost safety team capacity and demonstrate renewed commitment to OpenAI’s original mission.

- Maintain the strength of current non-profit governance elements to guard against financial pressures undermining OpenAI’s mission.

- Publish a full whistleblowing policy to match OpenAI’s transparency standard.

- Publish evaluation results for models without safety guardrails to more closely approximate true model capabilities.

- Improve coordination between DeepMind safety team and Google’s policy team.

- Increase transparency around and investment in third-party model evaluations for dangerous capabilities.

- Ramp up risk assessment efforts and publish implemented evaluations in upcoming model cards.

- Boost current draft safety framework to match the efforts by Anthropic and OpenAI.

- Publish a full whistleblowing policy to match OpenAI’s transparency standard.

- Significantly increase investment in technical safety research, especially tamper-resistant safeguards for open-weight models.

- Ramp up risk assessment efforts and publish implemented evaluations in upcoming model cards.

- Publish a full whistleblowing policy to match OpenAI’s transparency standard.

- Publish the AI Safety Framework promised at the AI Summit in Seoul.

- Ramp up risk assessment efforts and publish implemented evaluations in upcoming model cards.

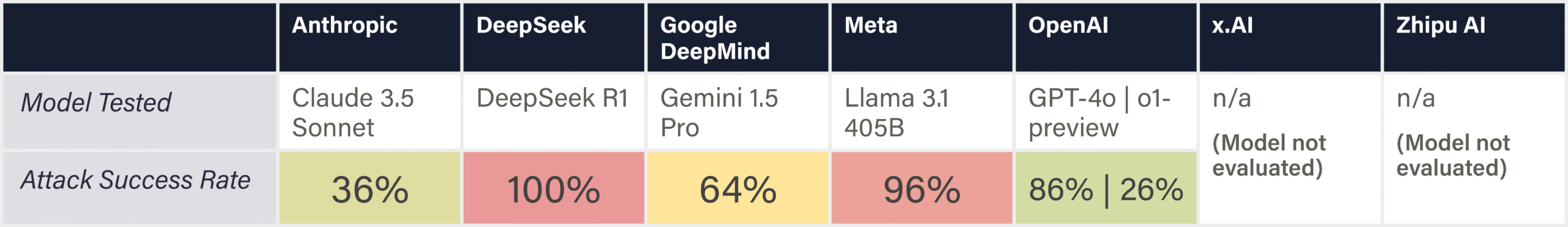

- Address extreme jailbreak vulnerability before next release.

- Ramp up risk assessment efforts and publish implemented evaluations in upcoming model cards.

- Develop and publish a comprehensive AI safety framework.

Publish a first concrete plan, however imperfect, for how they hope to control the AGI/ASI they plan to build.

Methodology

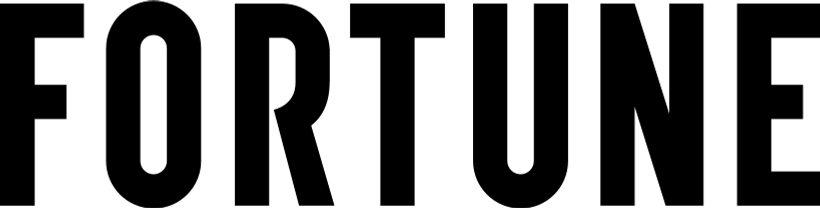

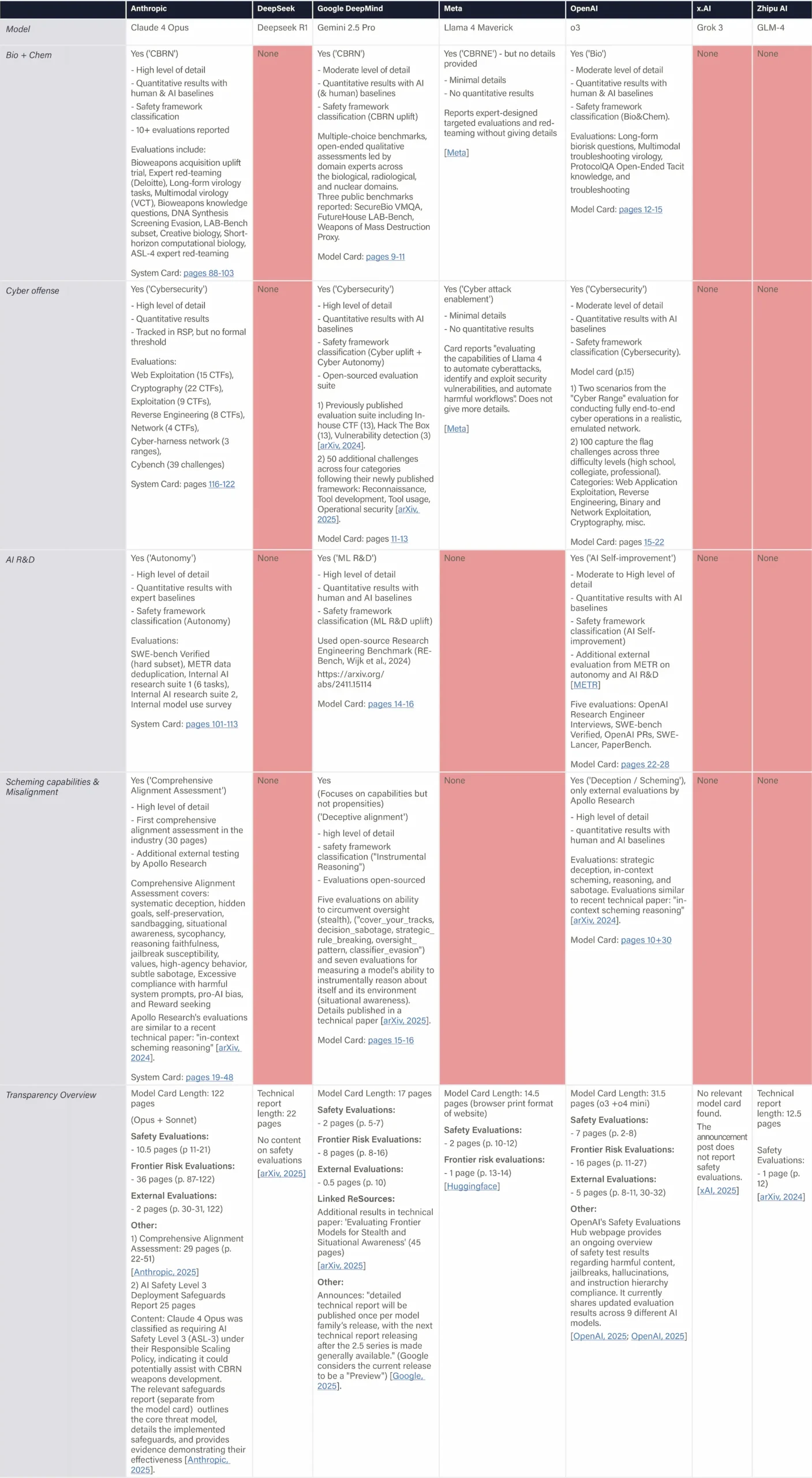

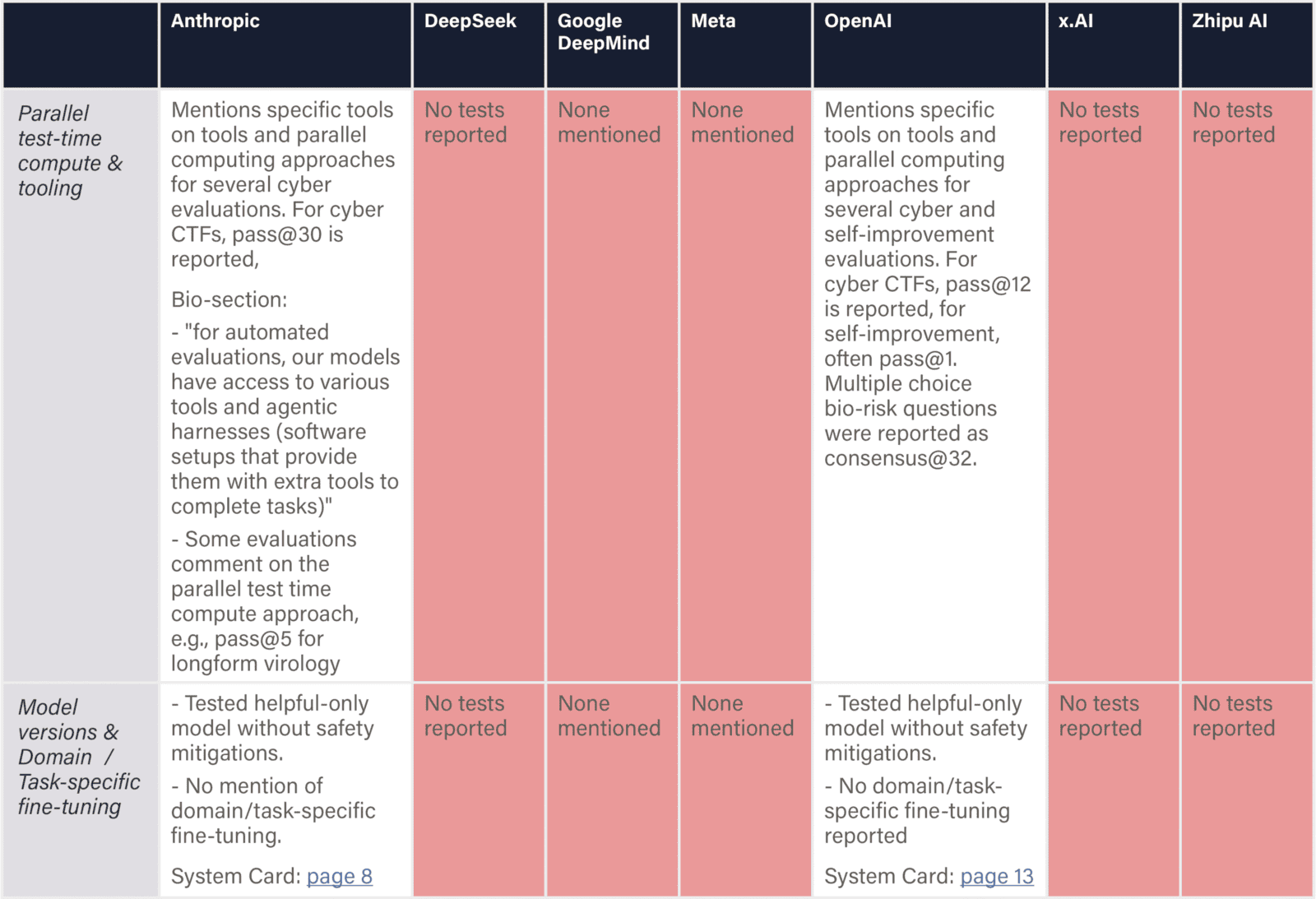

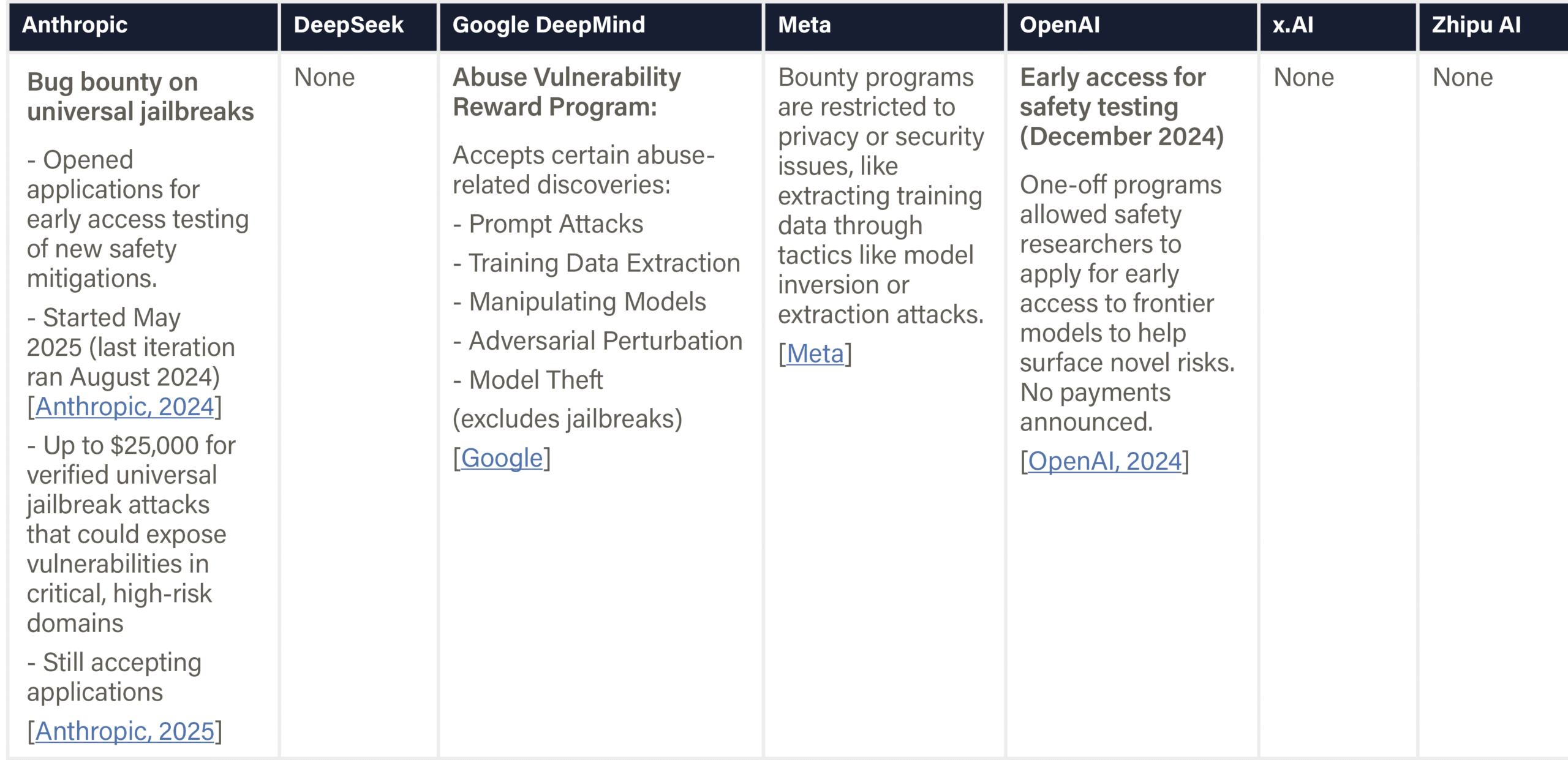

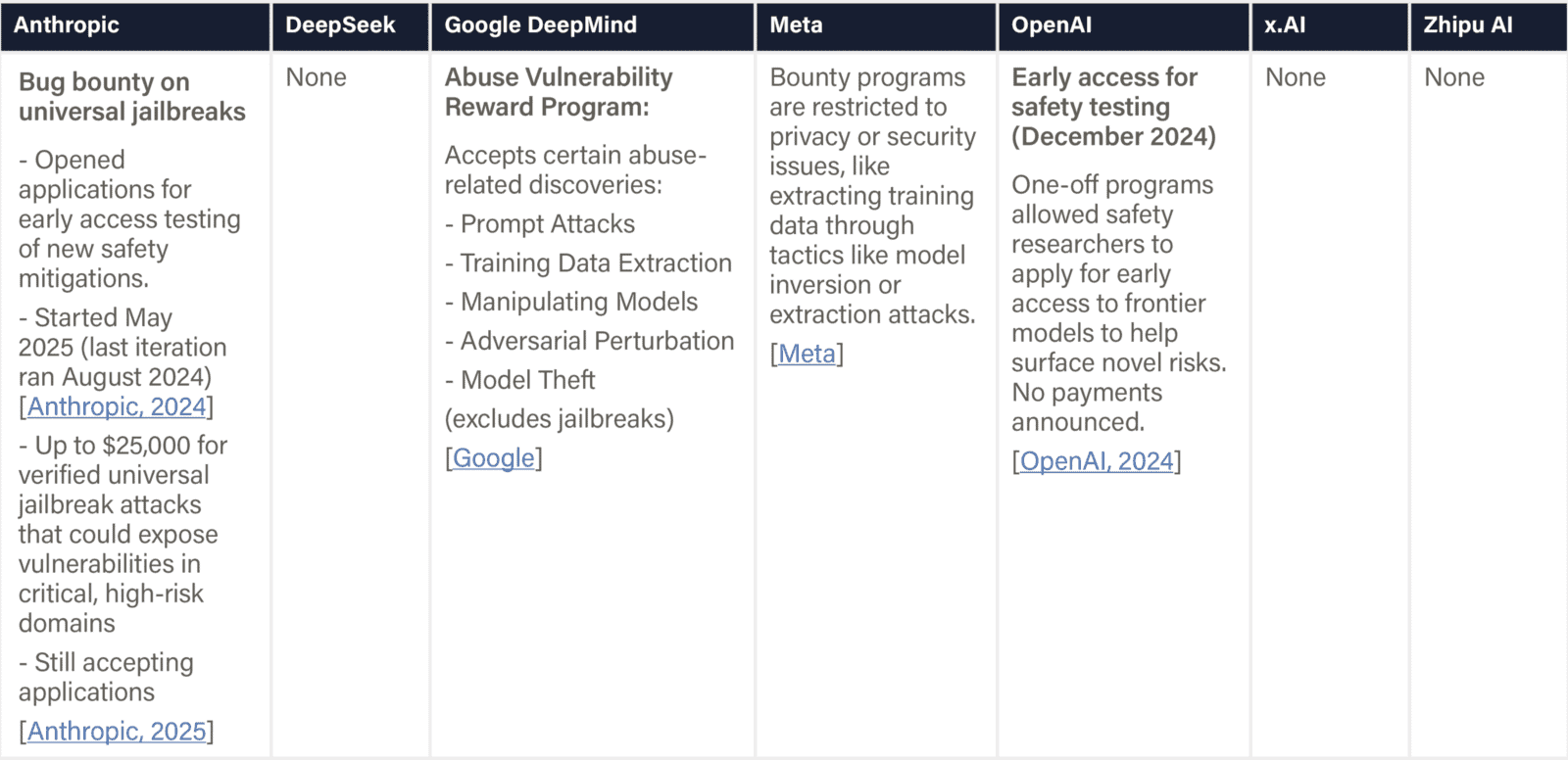

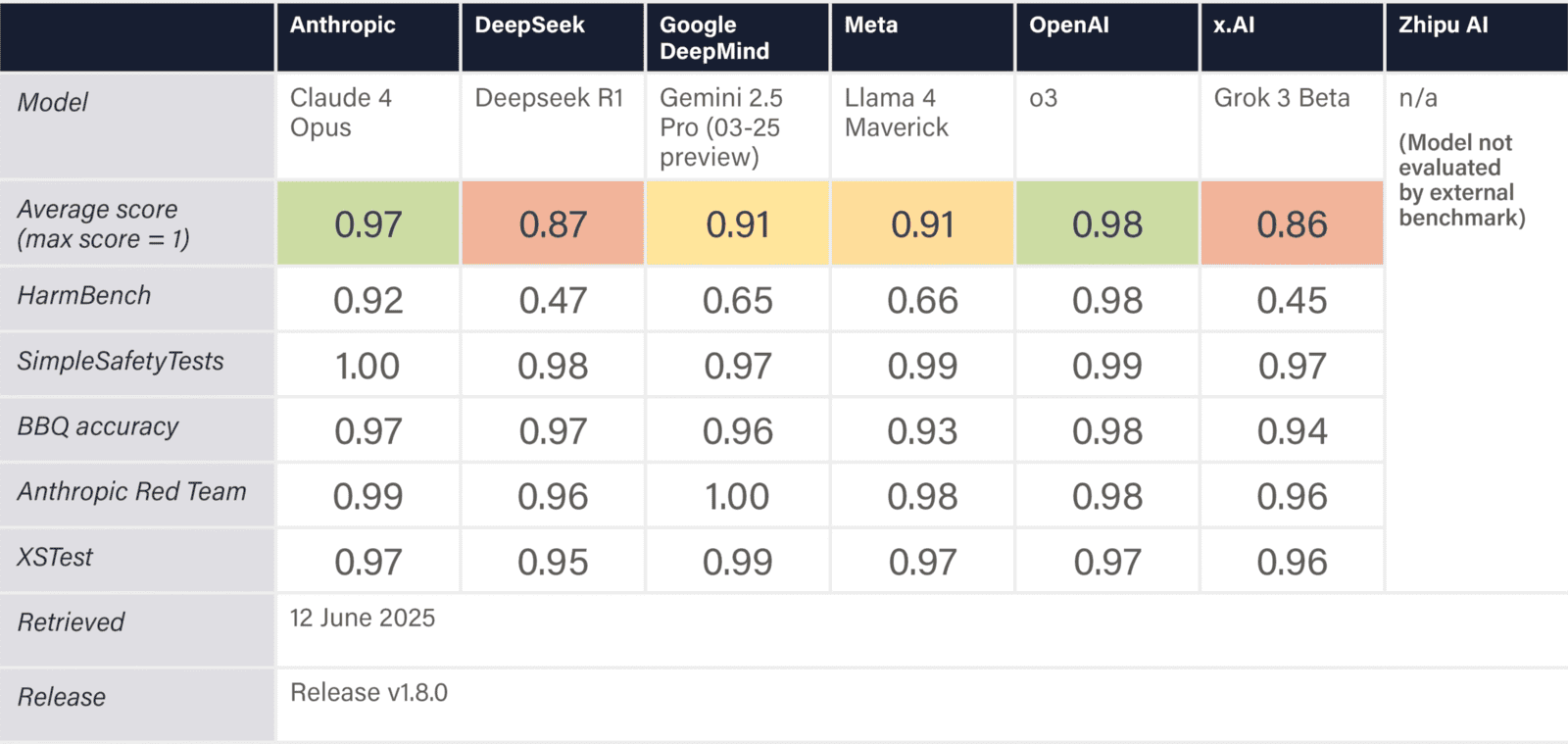

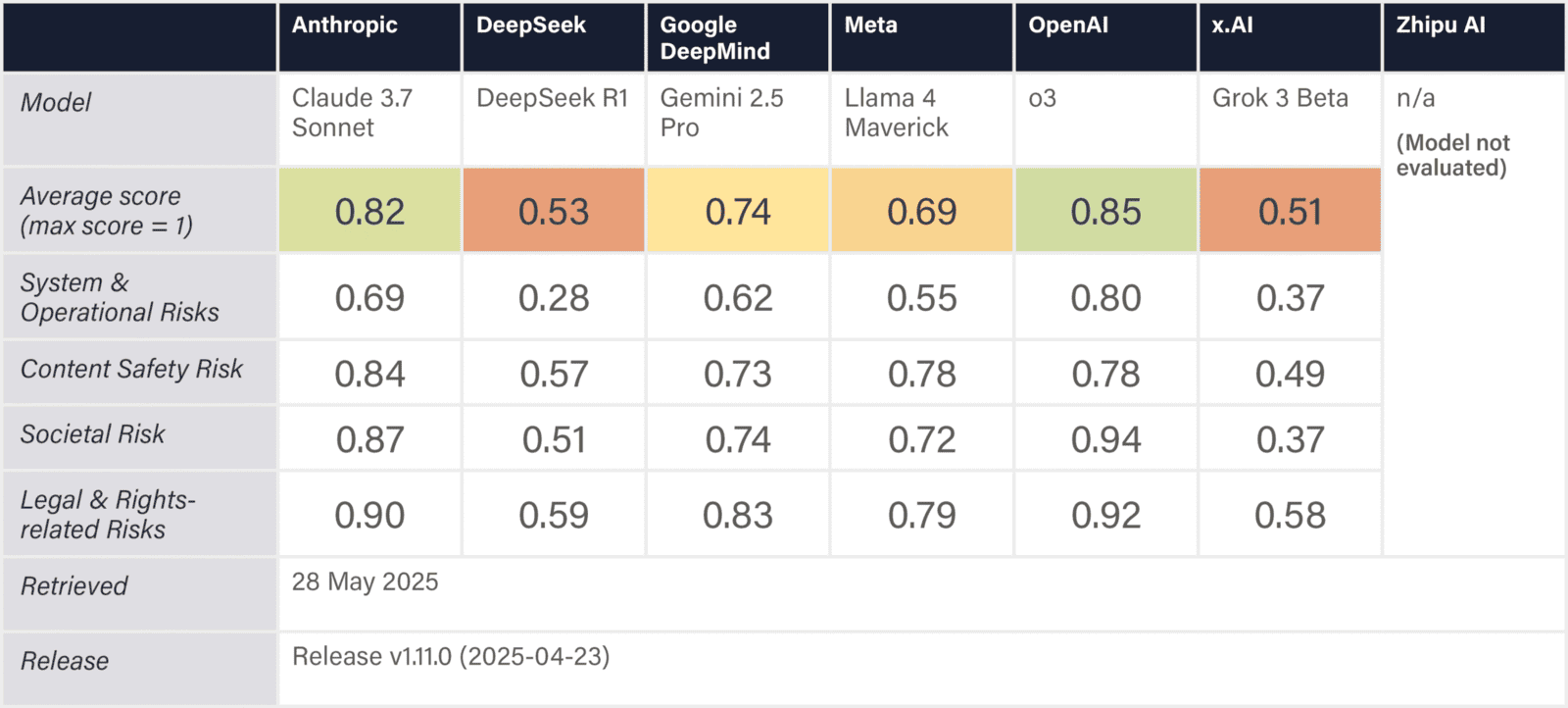

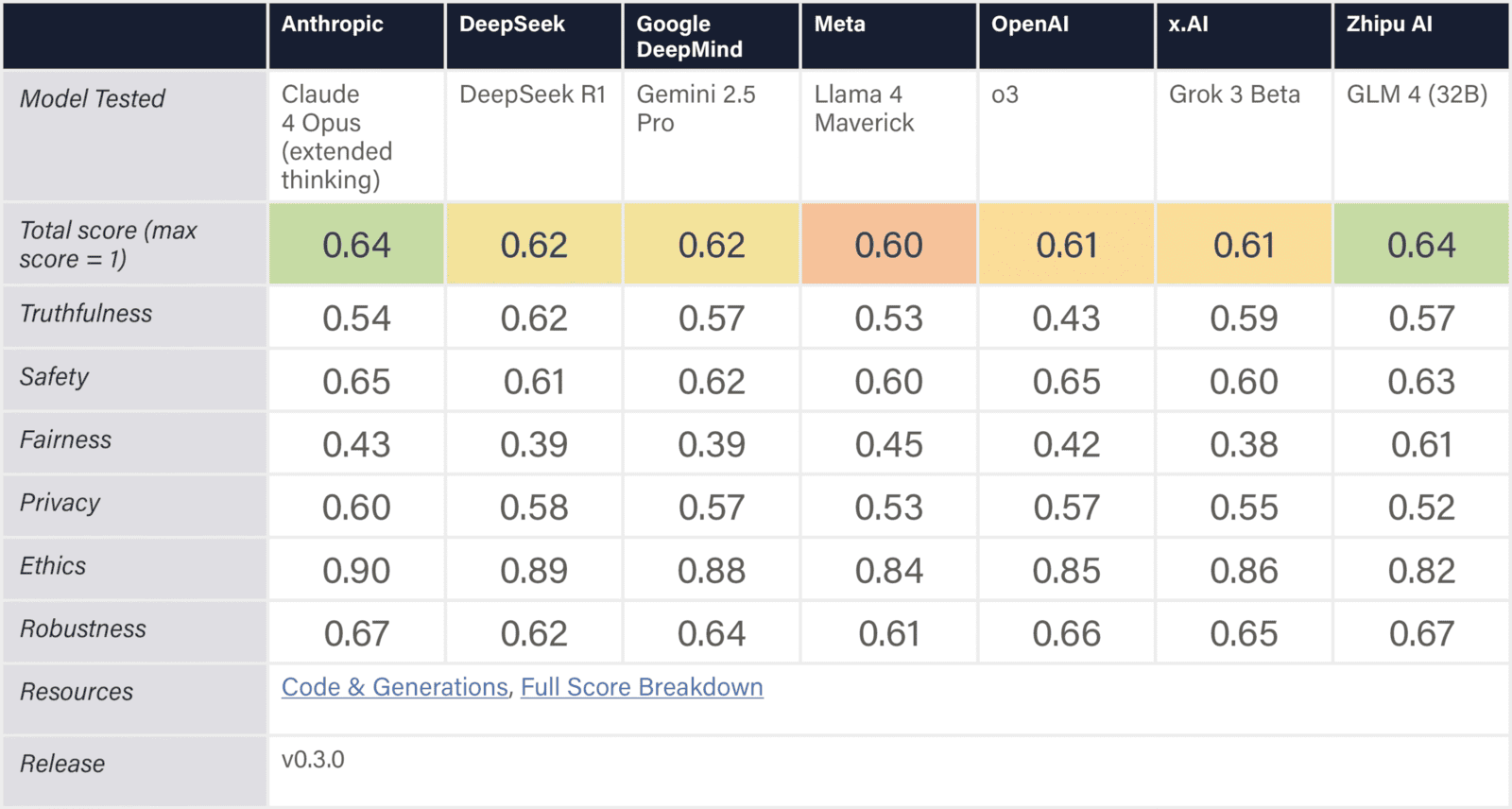

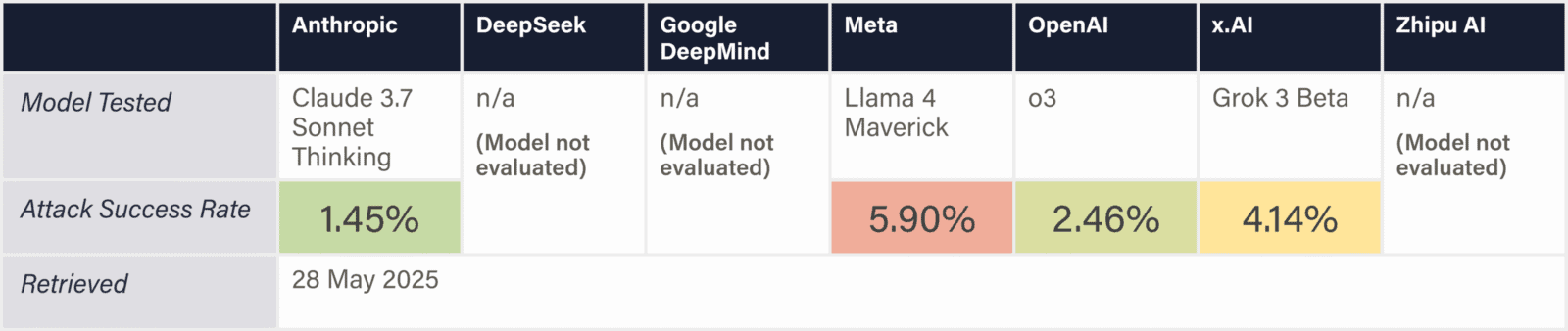

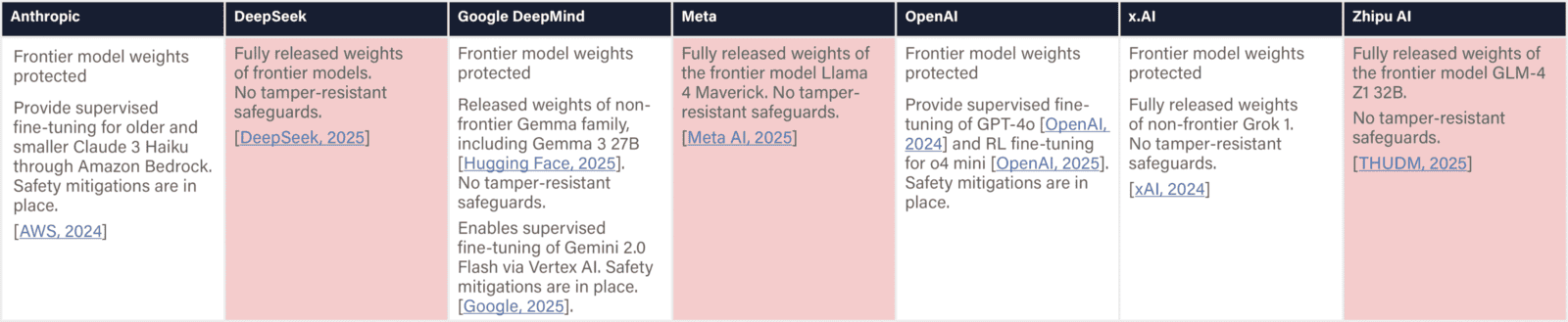

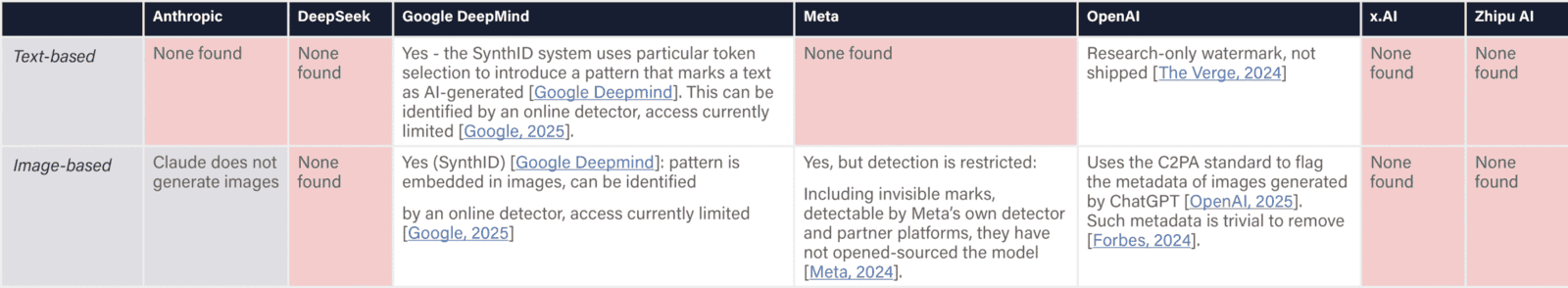

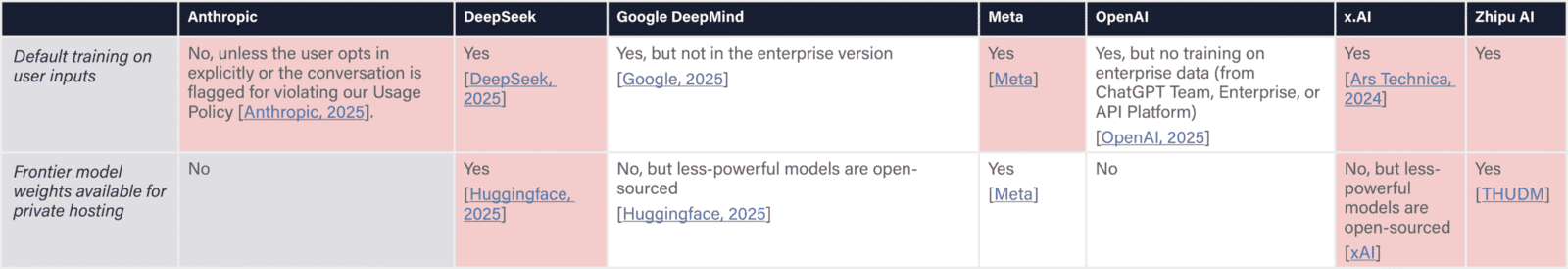

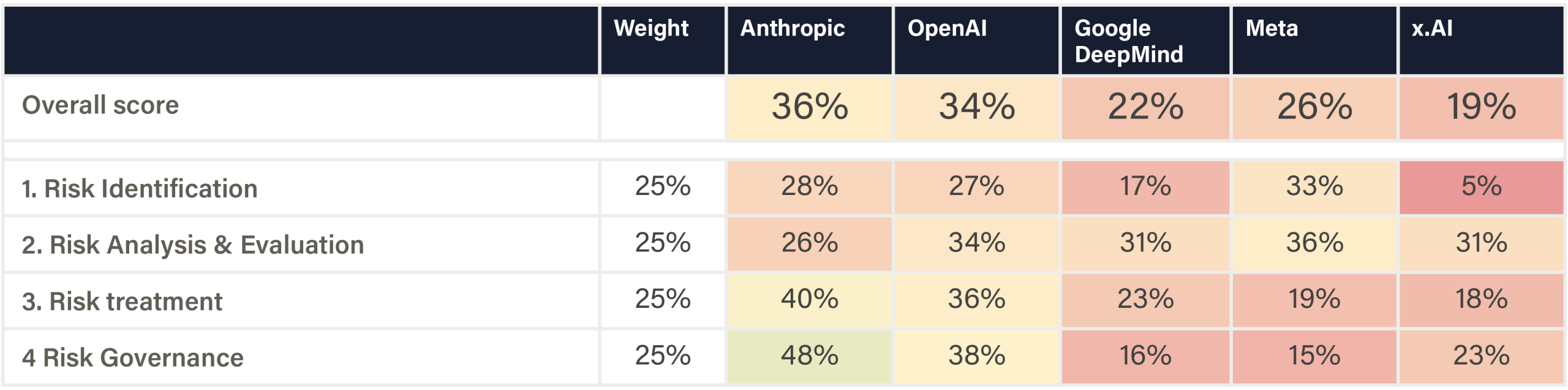

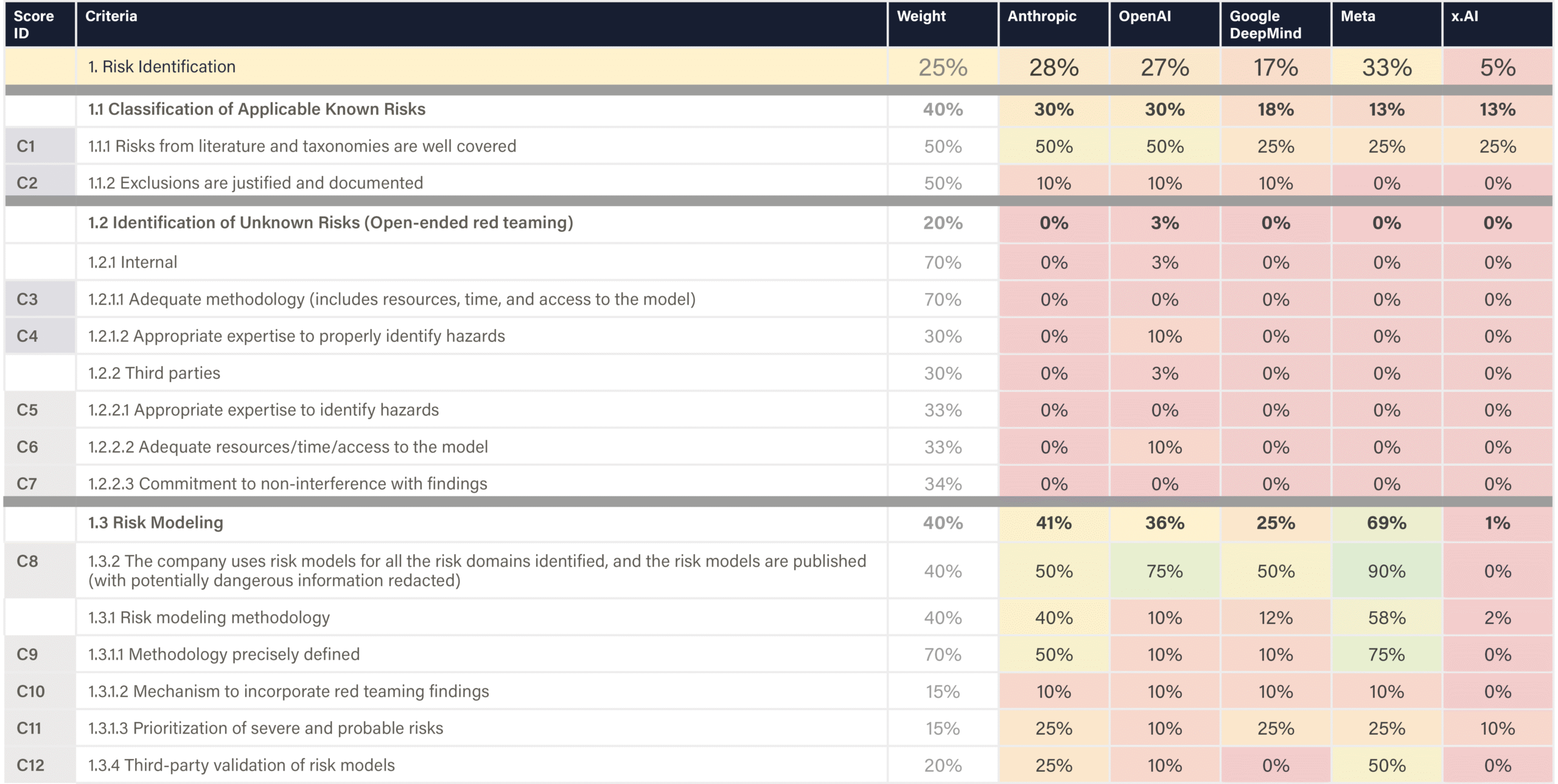

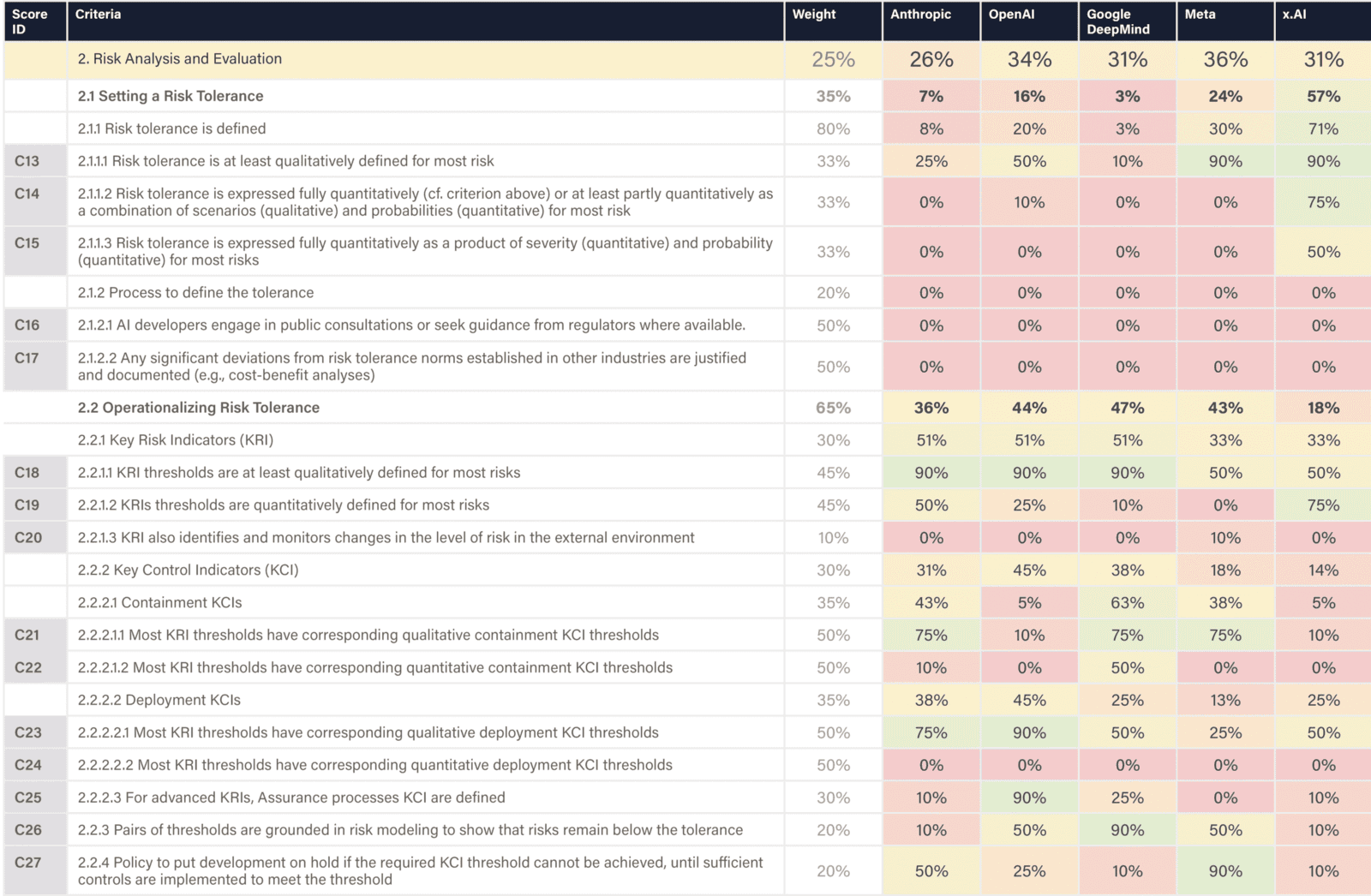

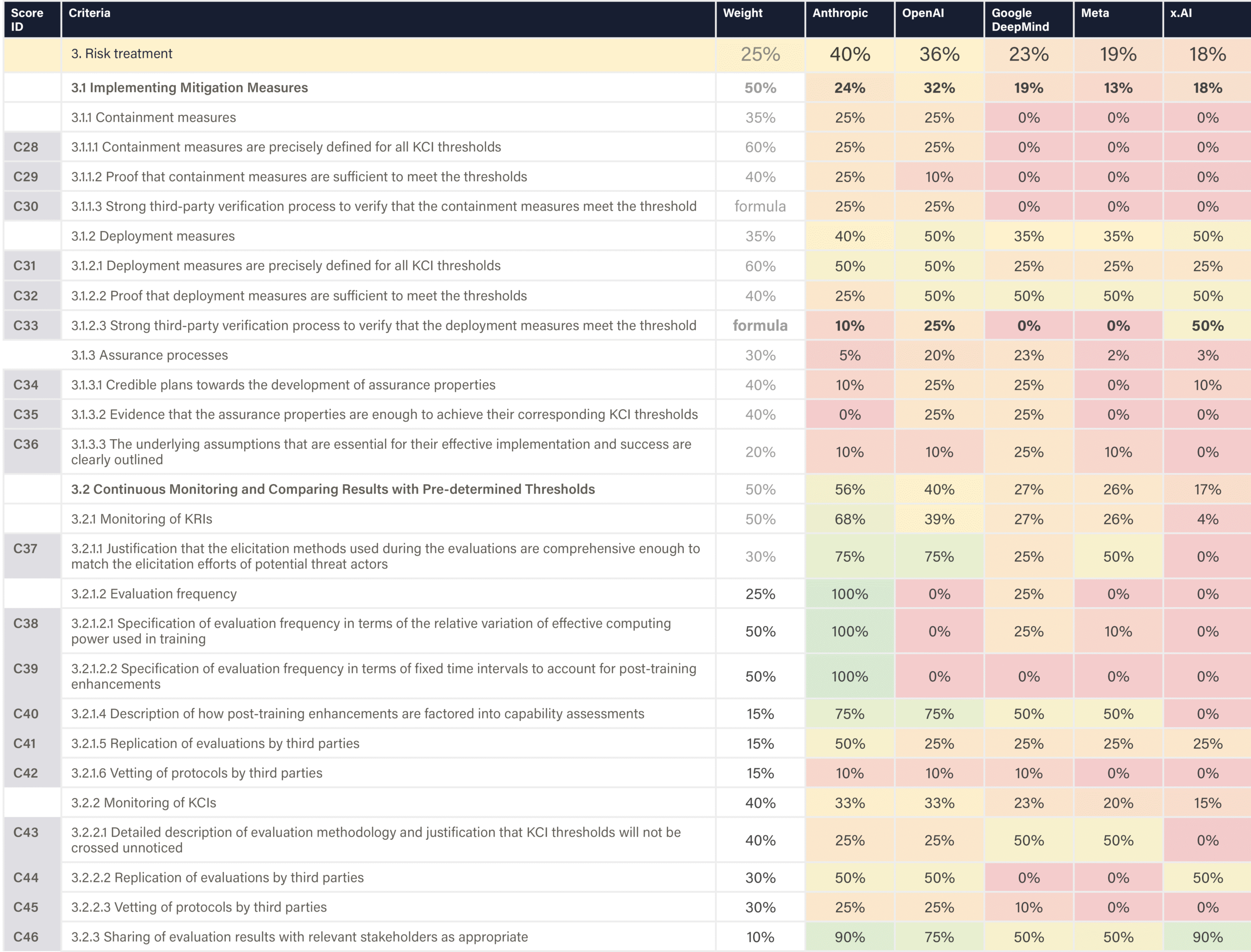

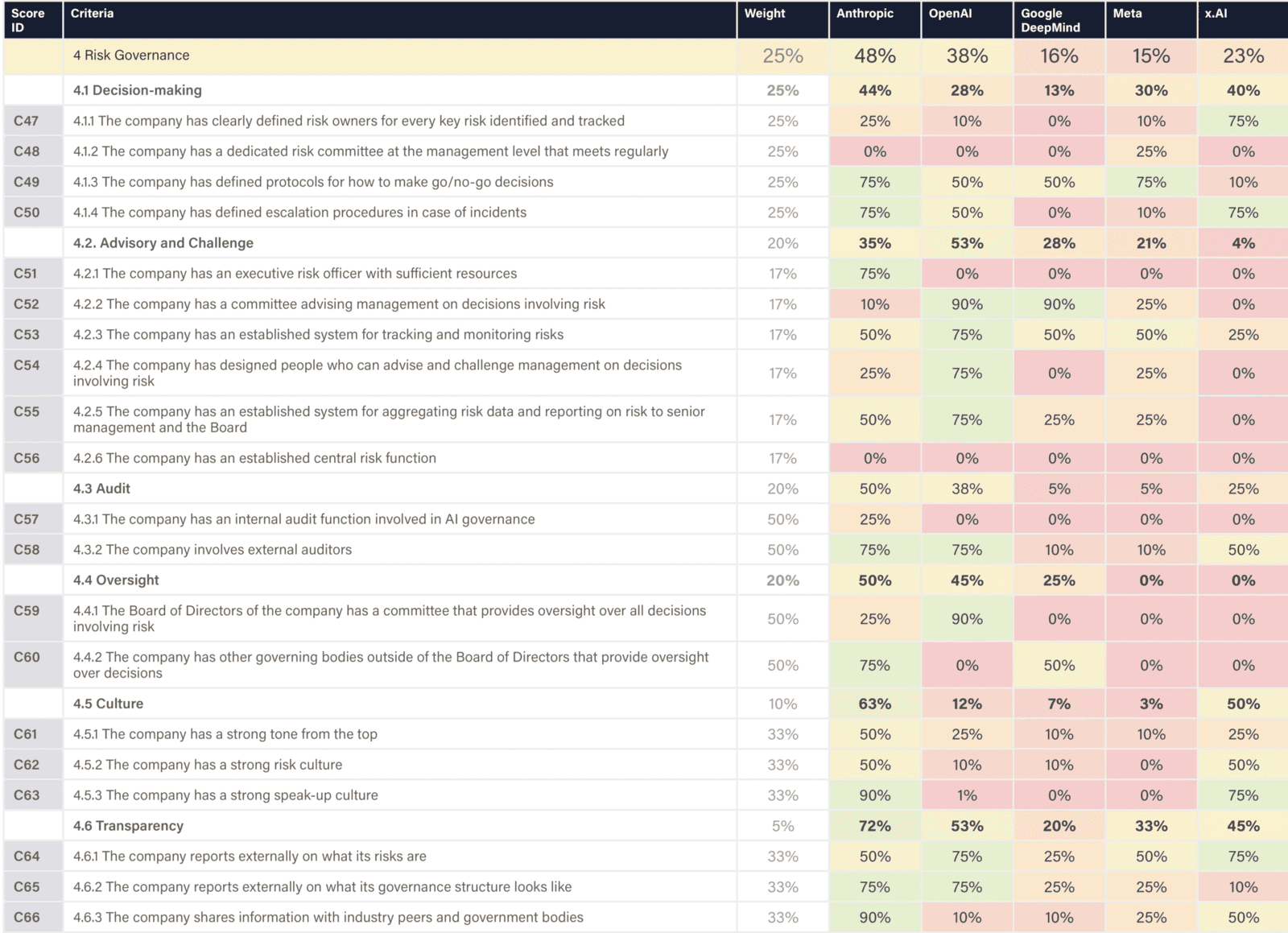

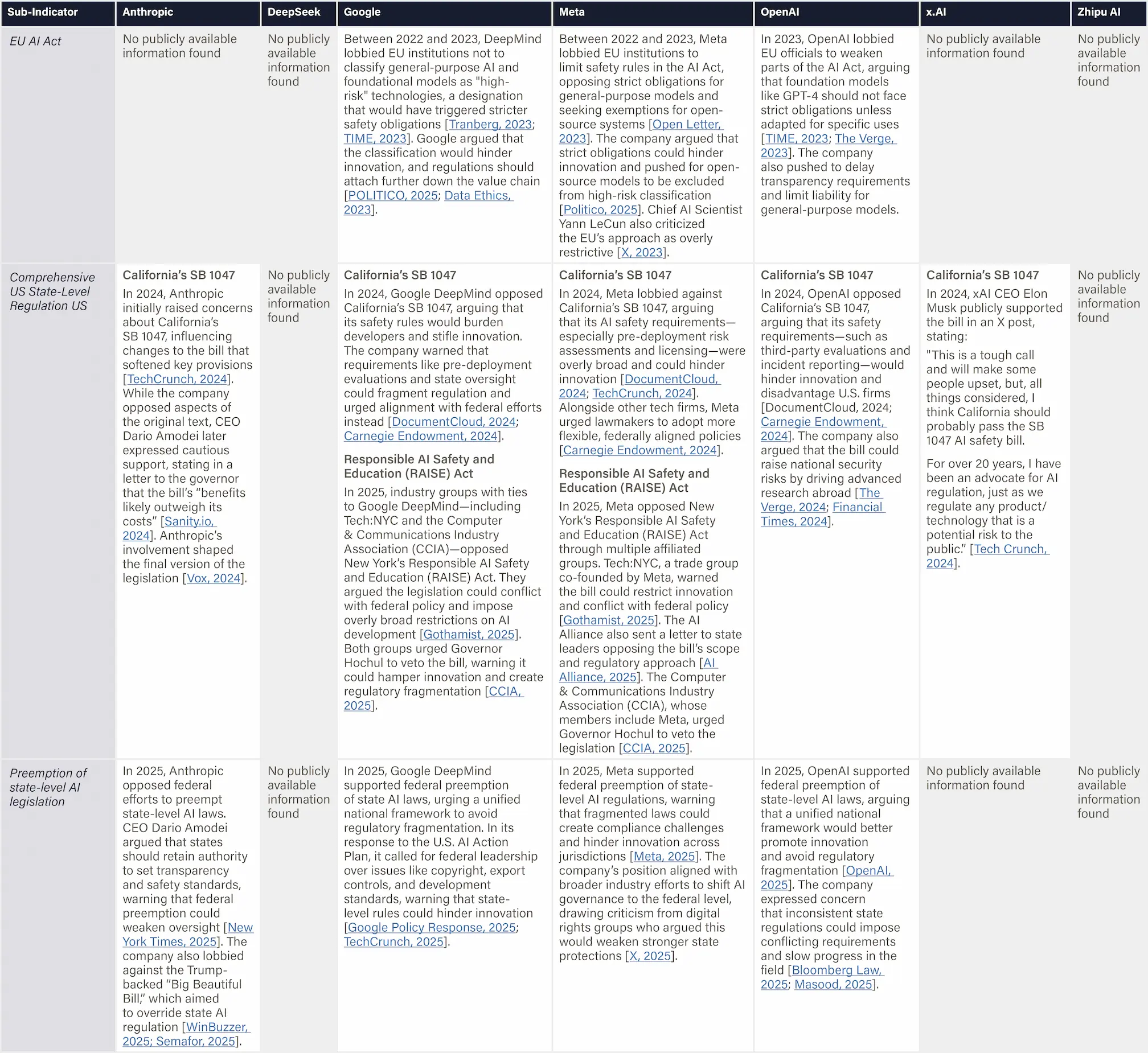

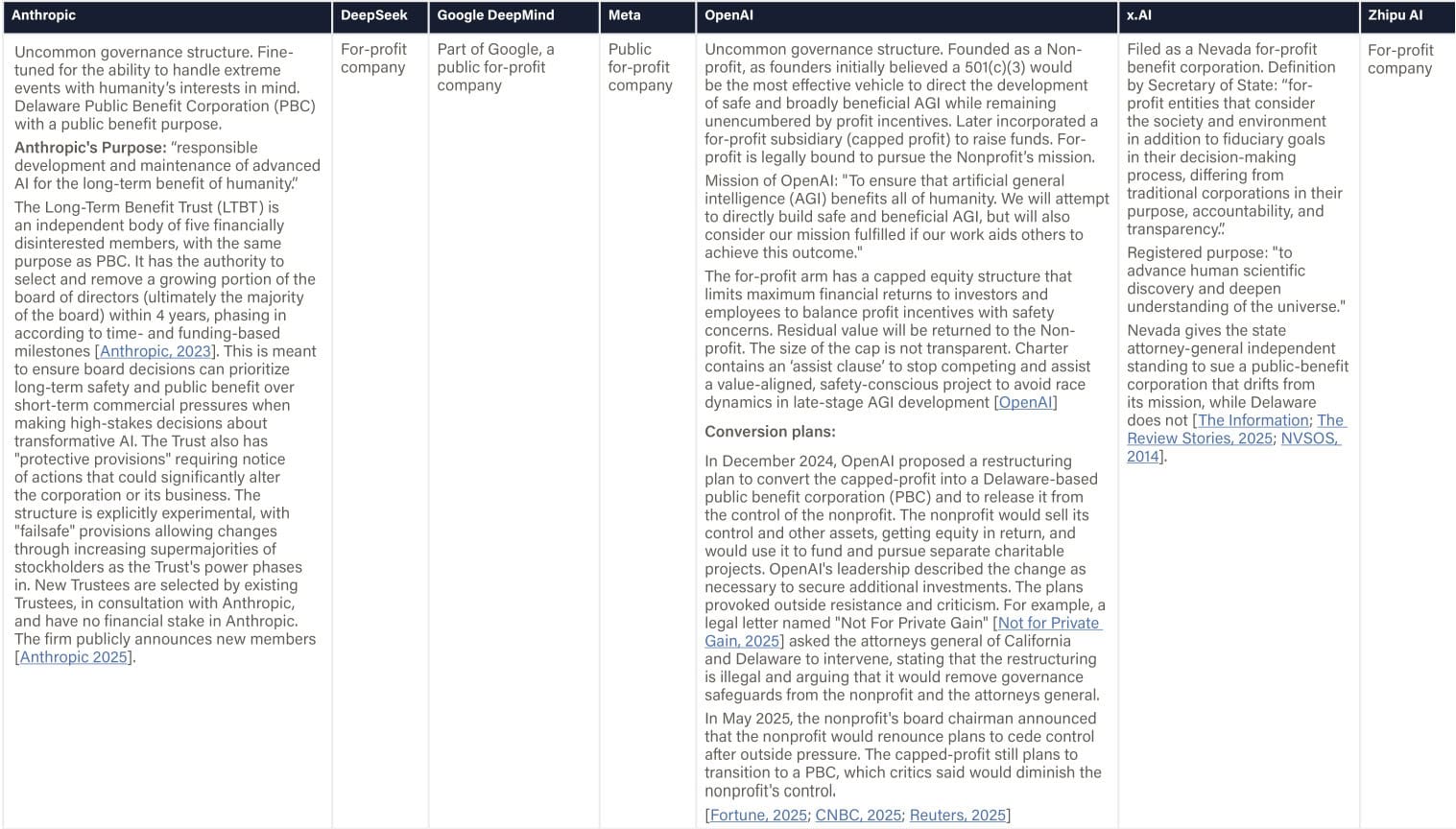

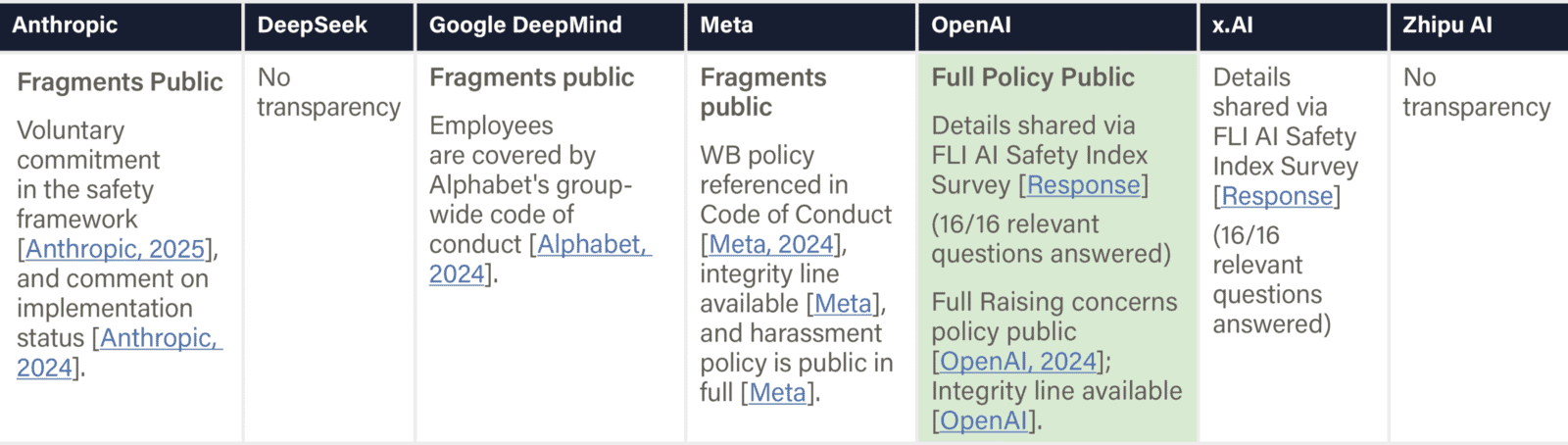

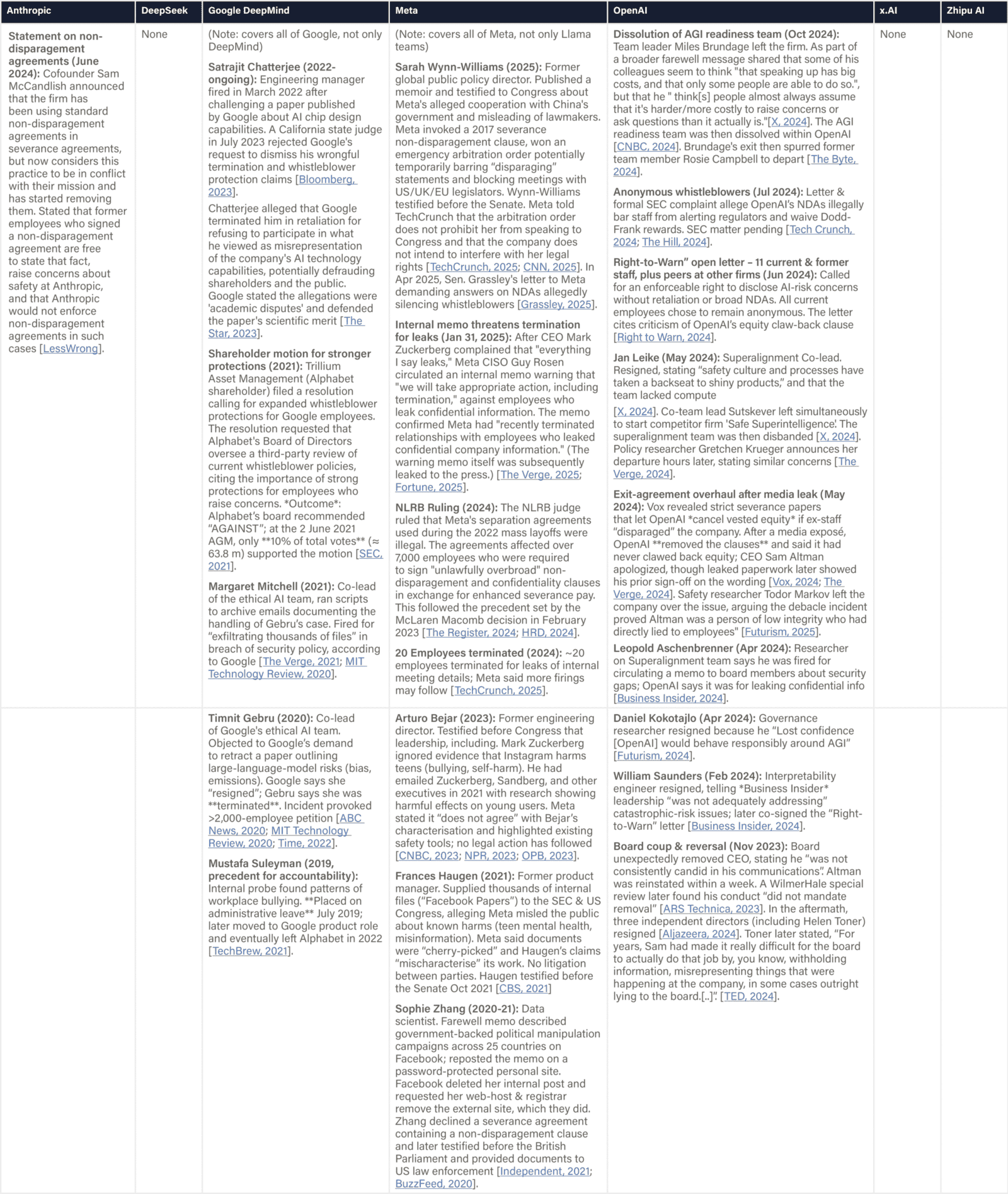

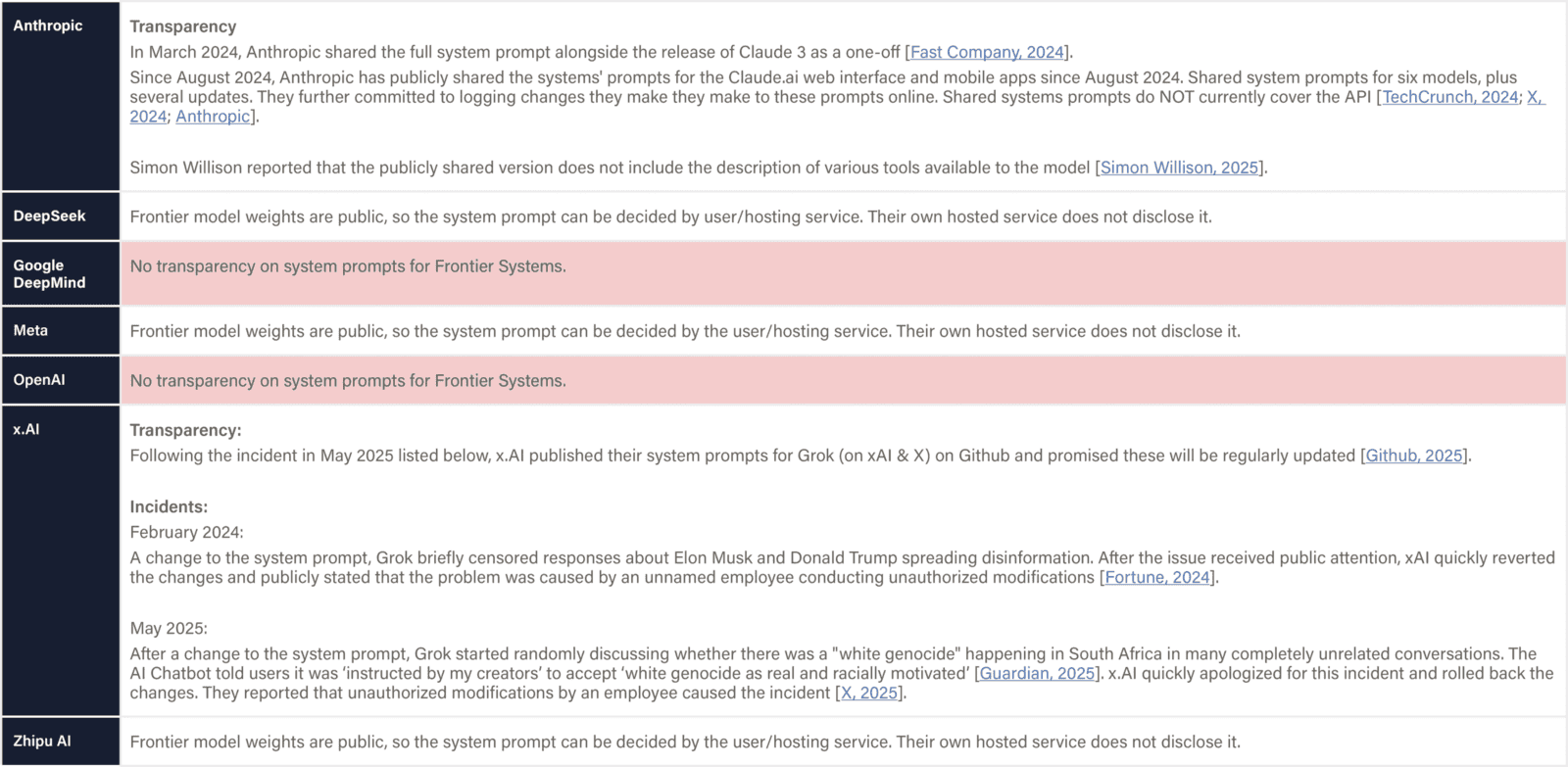

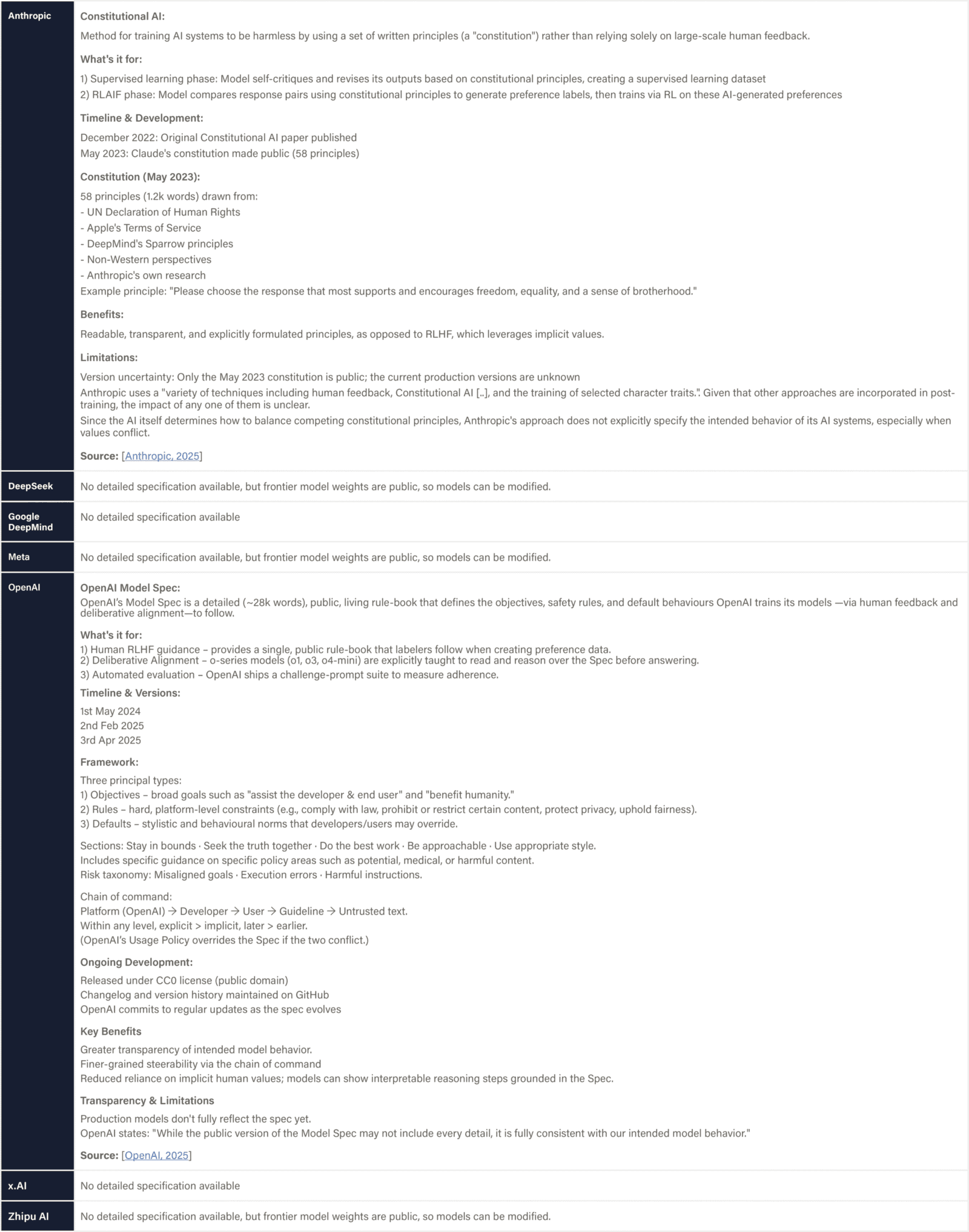

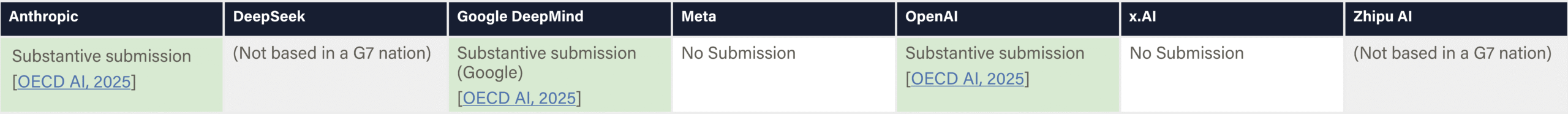

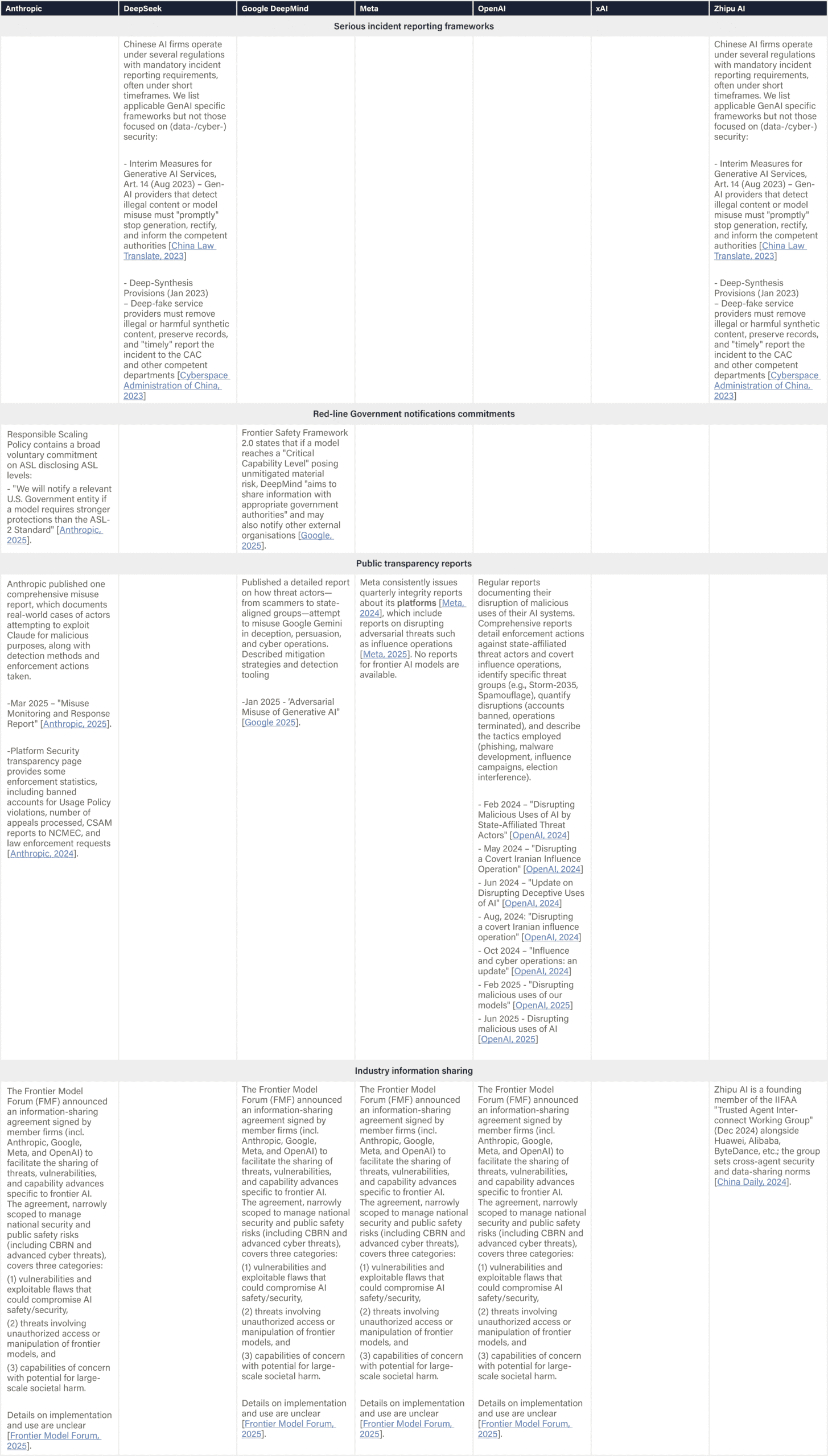

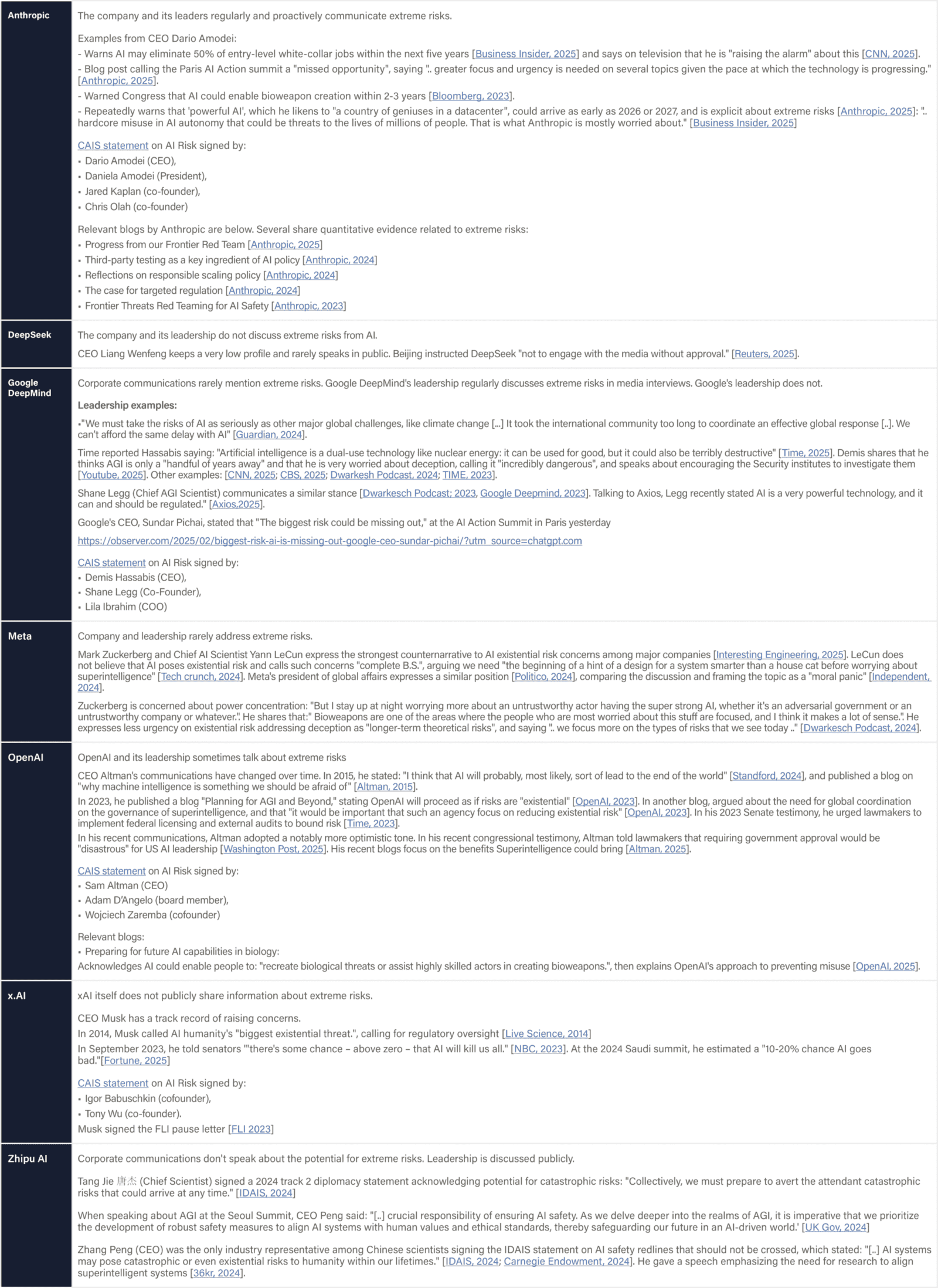

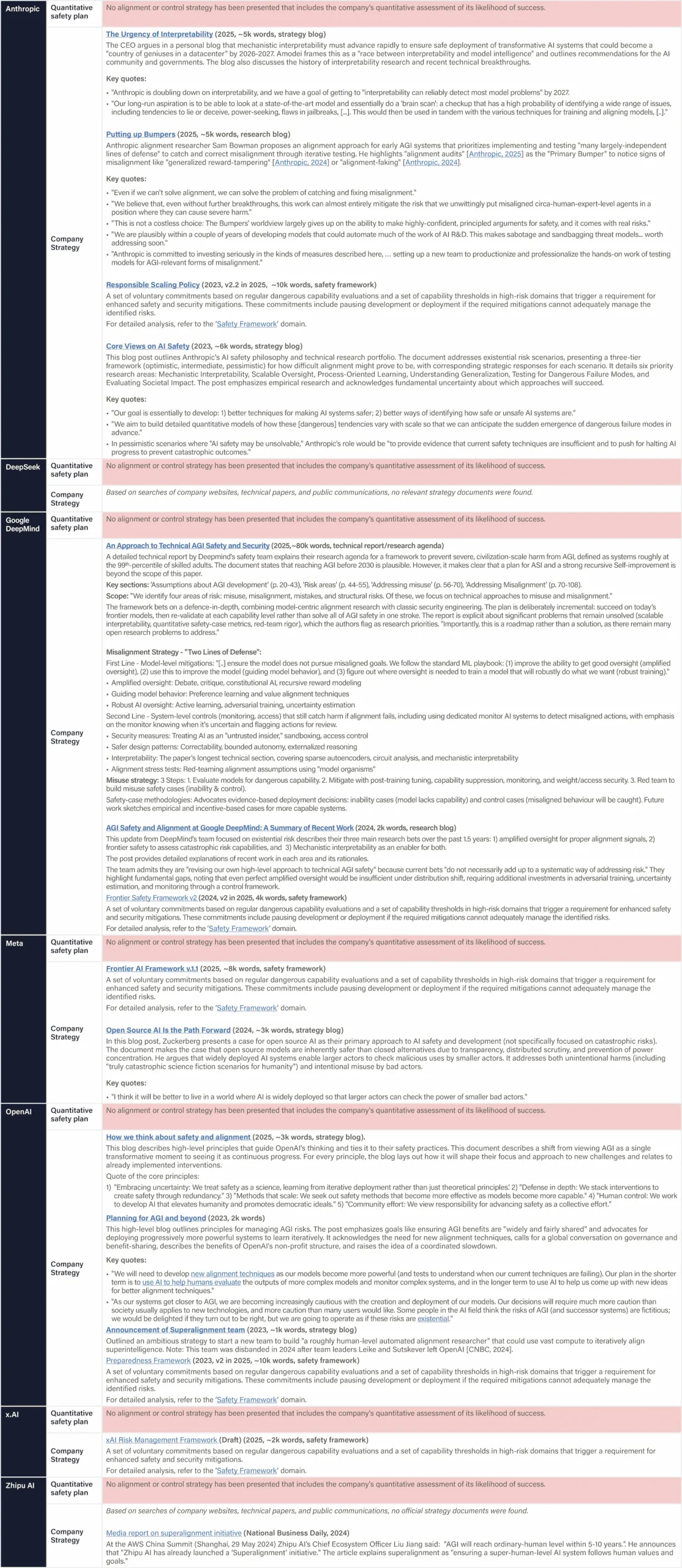

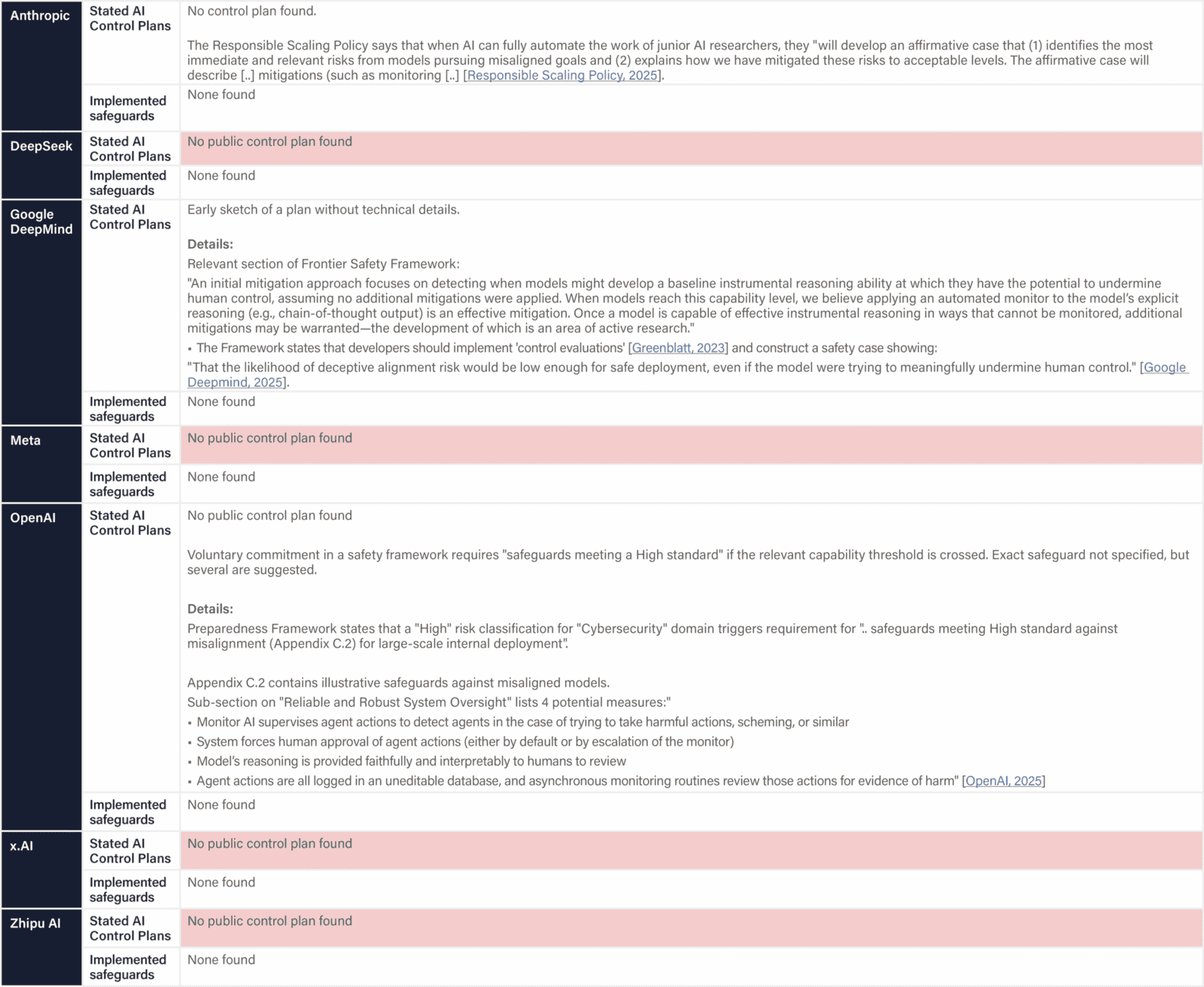

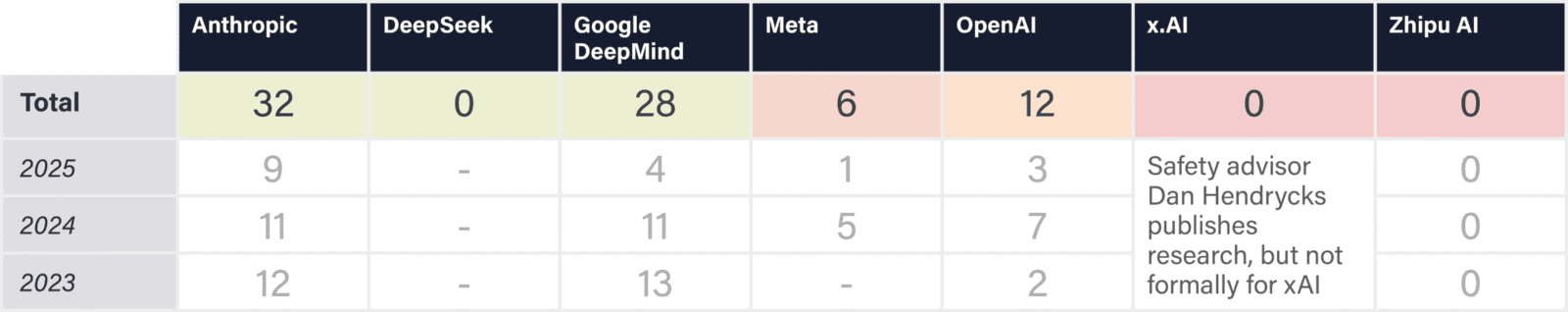

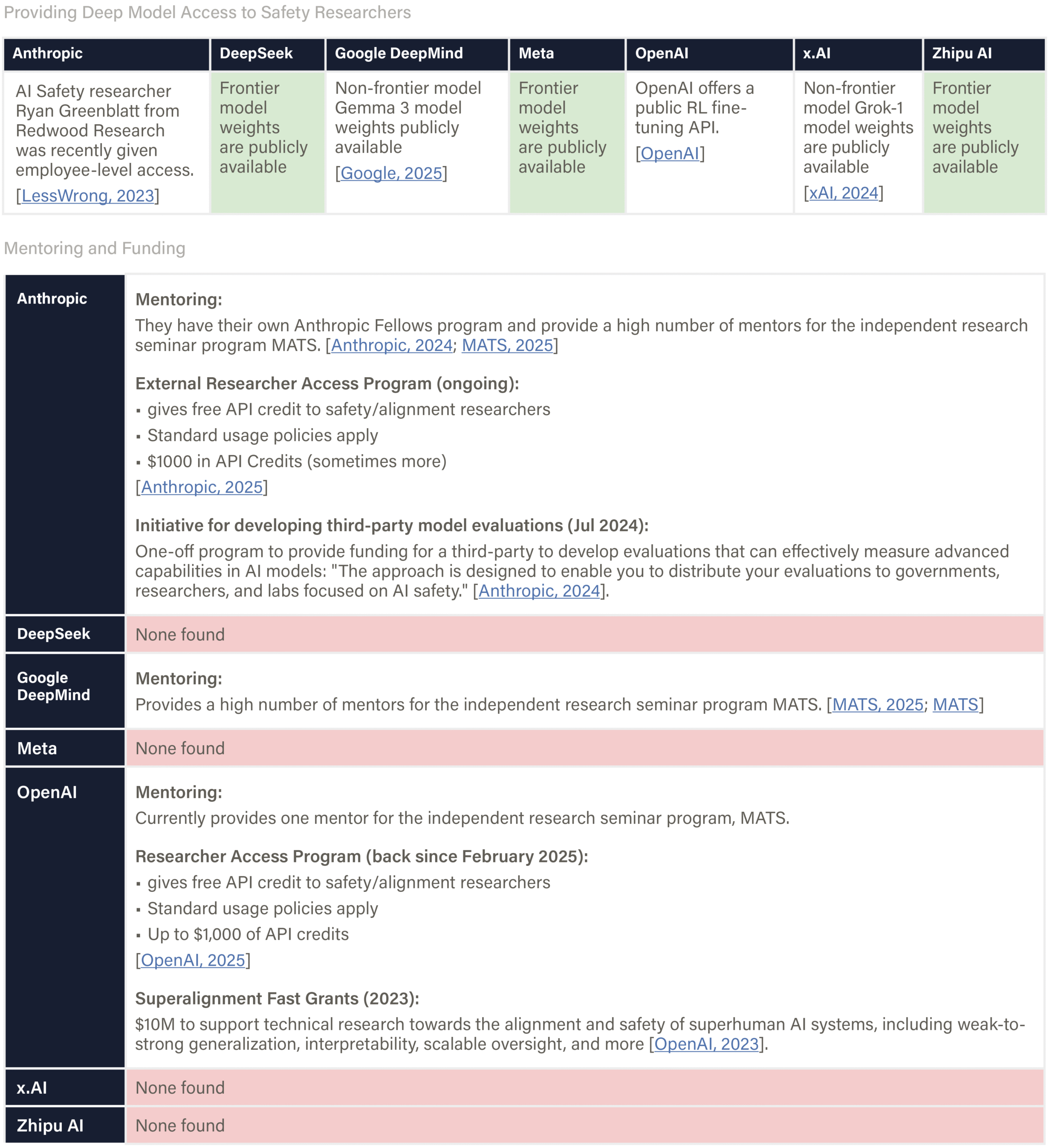

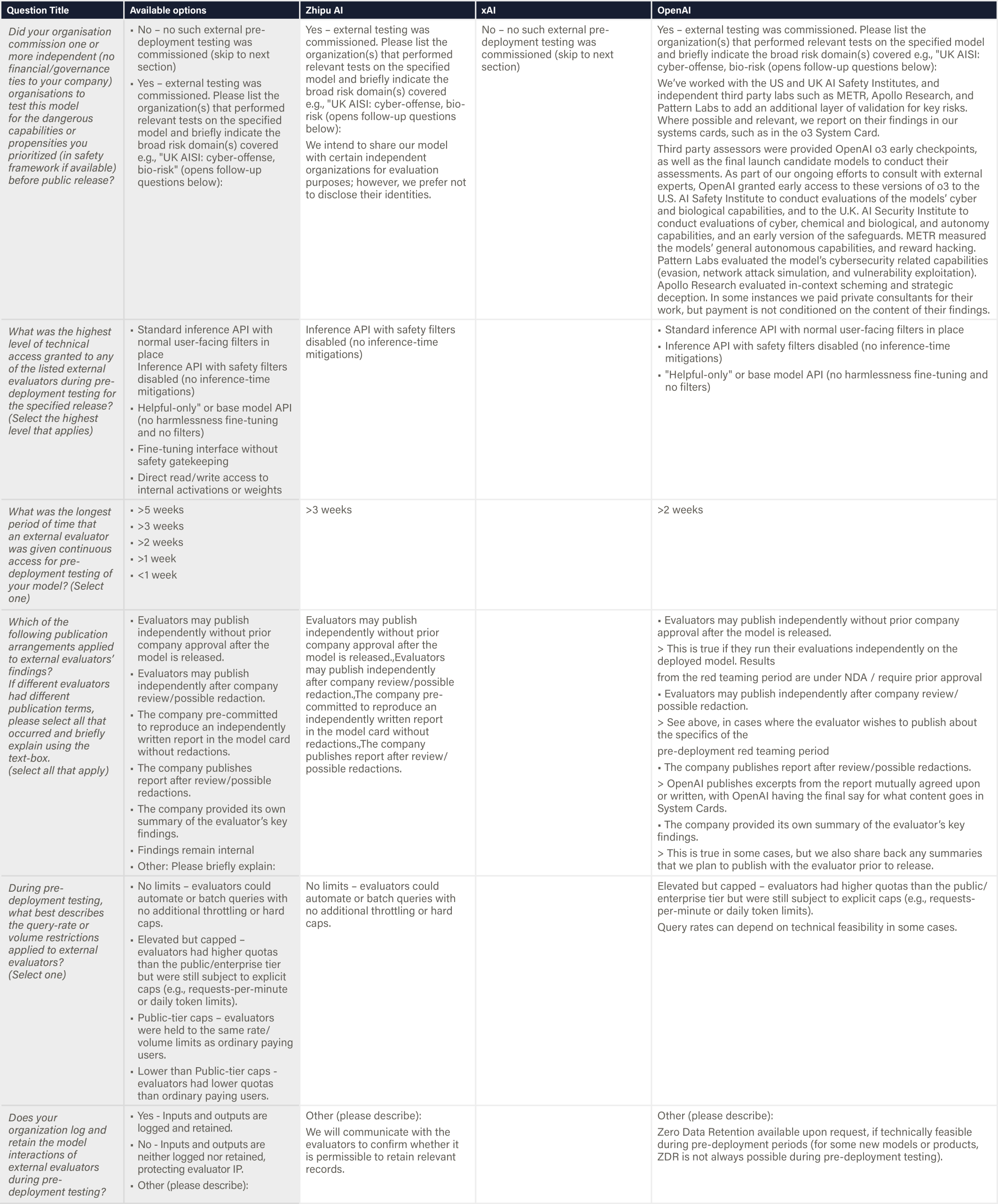

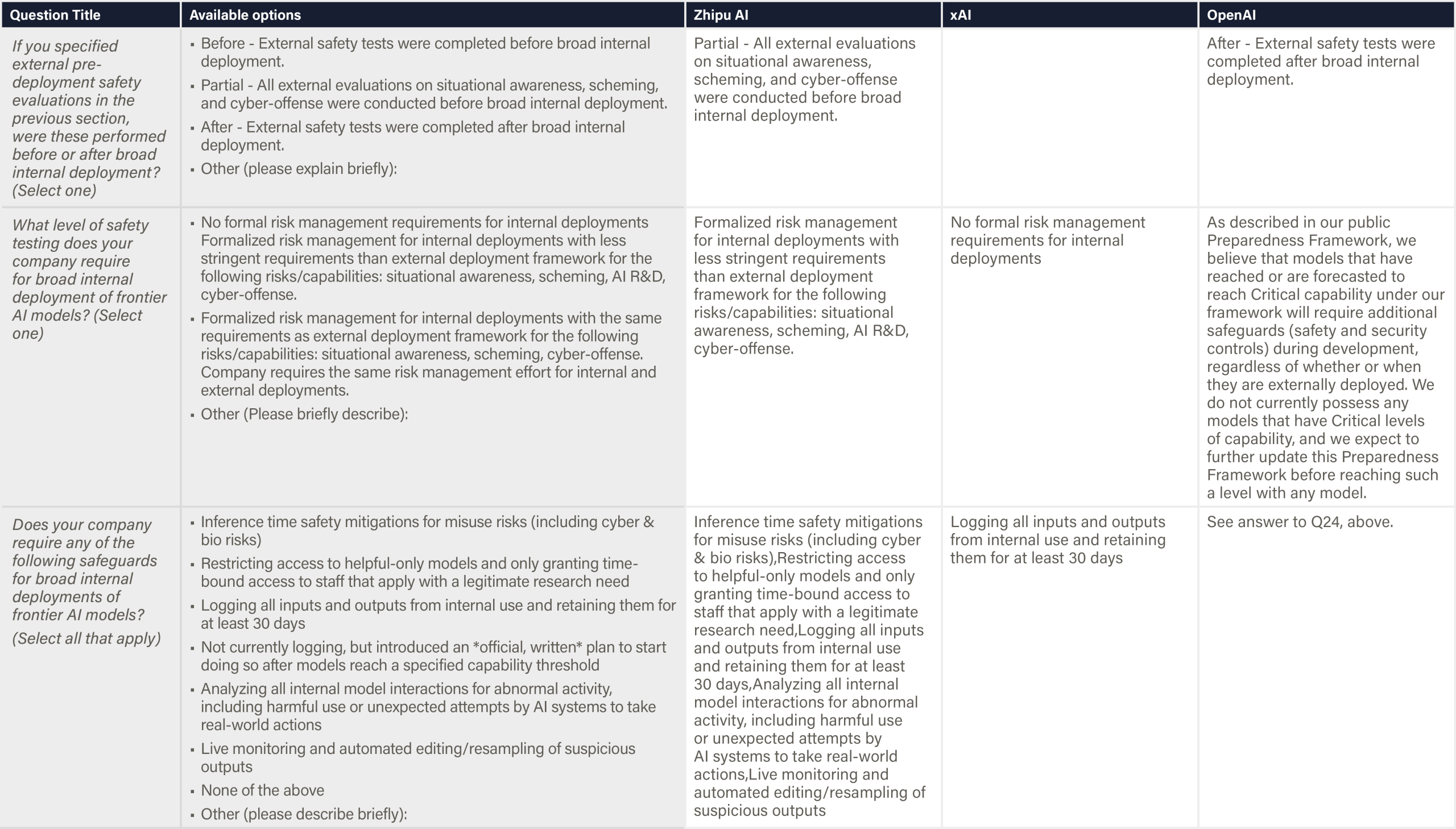

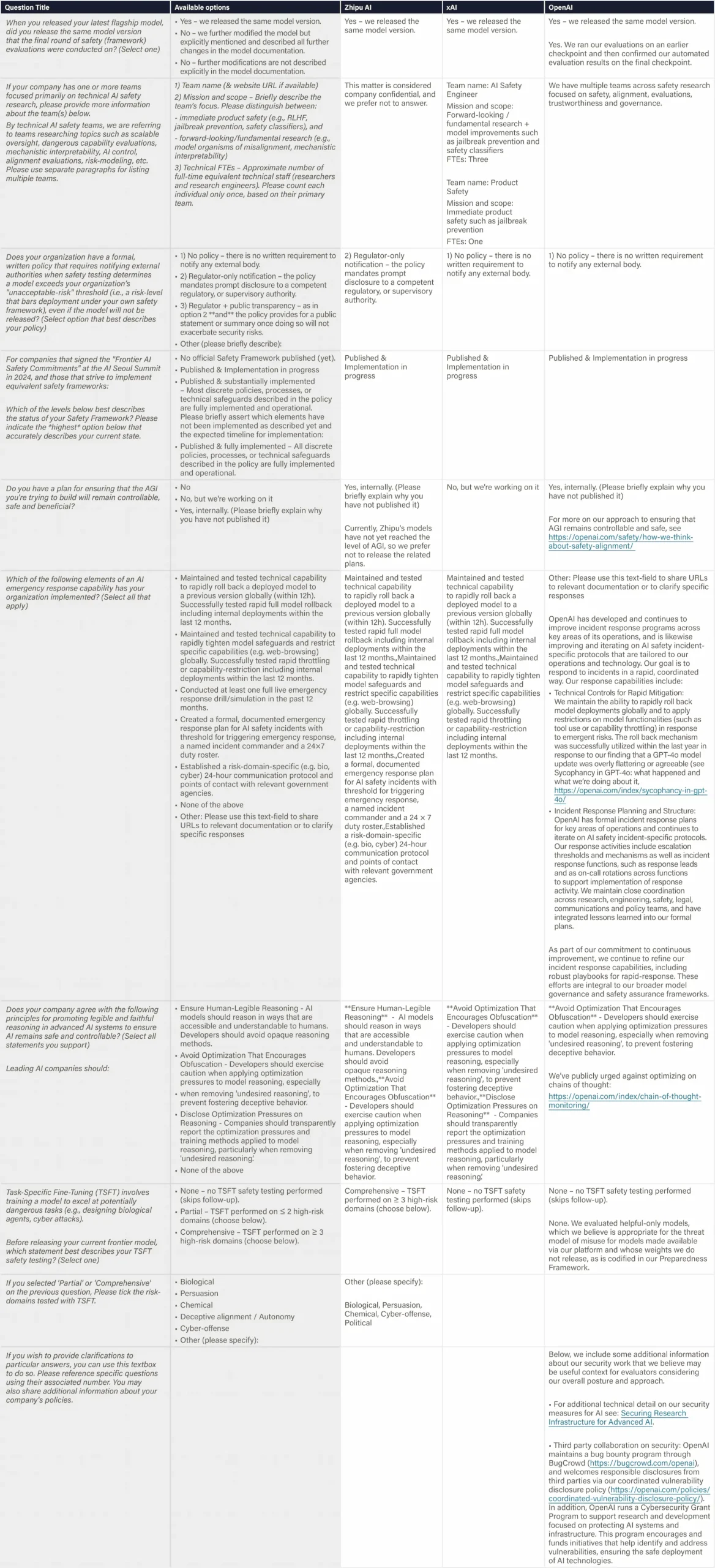

The Future of Life Institute's AI Safety Index provides an independent assessment of seven leading AI companies' efforts to manage both immediate harms and catastrophic risks from advanced AI systems. The Index aims to strengthen incentives for responsible AI development and to close the gap between safety commitments and real-world actions. The Summer 2025 version of the Index evaluates these companies on an improved set of 33 indicators of responsible AI development and deployment practices, spanning six critical domains.

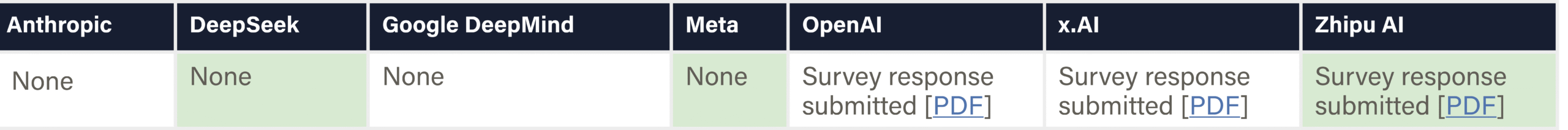

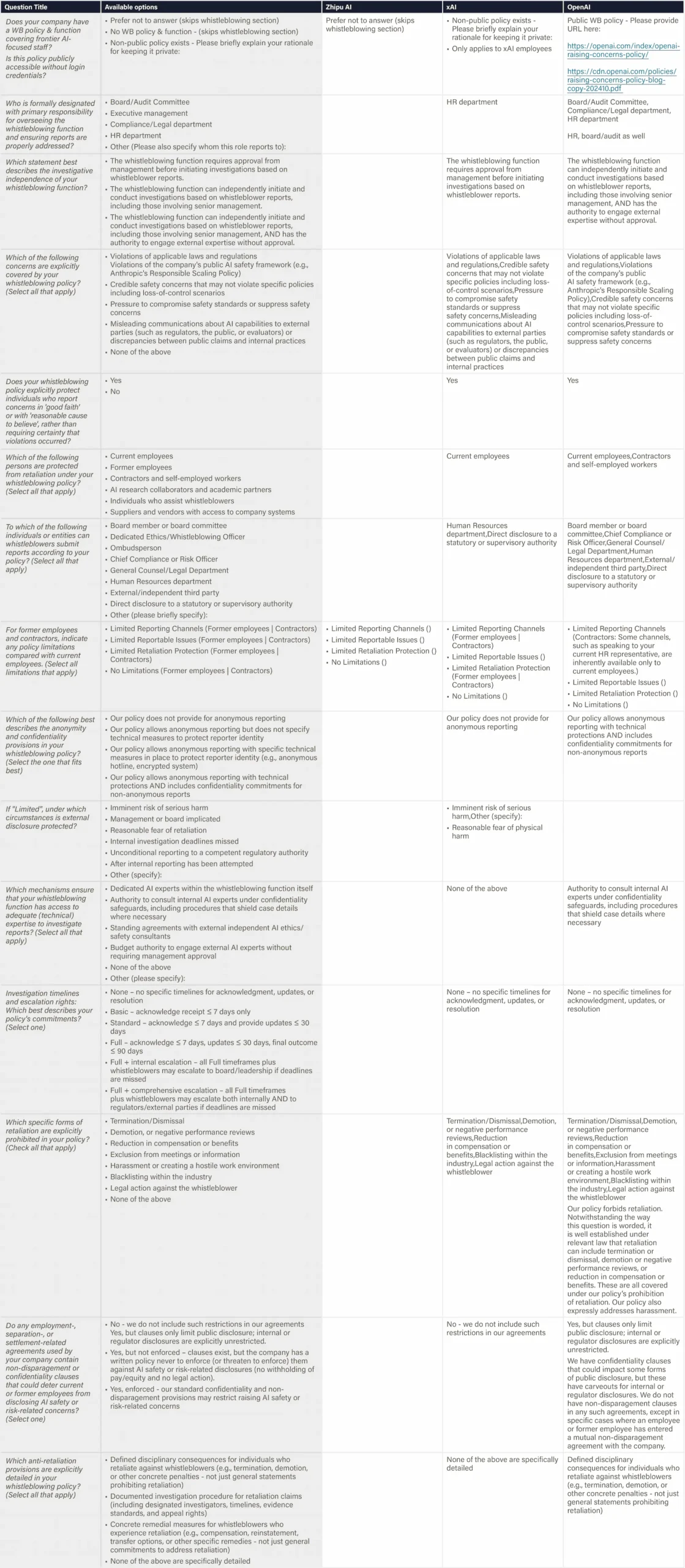

Data Collection: Evidence was gathered between March 24 and June 24, 2025 through systematic desk research and a targeted company survey. We prioritized official materials released by the companies about their AI systems and risk management practices, while also incorporating external safety benchmarks, credible media reports, and independent research. To address transparency gaps in the industry, we distributed a 34-question survey on May 28, with responses due by June 17. The survey focused on areas where public disclosure remains limited, particularly whistleblowing policies, third-party model evaluations, and internal AI deployment practices.

Expert Evaluation: An independent panel of distinguished AI scientists and governance experts evaluated the collection of evidence between June 24 and July 9, 2025. Panel members were selected for their domain expertise and absence of conflicts of interest.

Each expert assigned each company a letter grade (A+ to F) per domain, based on a set of fixed performance standards. Experts also gave a brief written justification for each grade and specific recommendations for improvement to each company. Reviewers had full flexibility to weight the various indicators according to their judgment. Not every reviewer graded every domain; experts were invited to score the domains relevant to their area of expertise. Final scores were calculated by averaging all expert grades within each domain, with overall company grades representing the unweighted average across all six domains. Individual reviewer grades remain confidential to ensure candid assessment.
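The aggregation described above can be illustrated with a minimal Python sketch. It rests on assumptions not stated in this text: the numeric values in `GRADE_POINTS` follow a conventional GPA-style scale, the function names are hypothetical, and the example grades and domain labels are invented purely for illustration. It is not the Index's actual scoring code.

```python
from statistics import mean

# ASSUMPTION: GPA-style point values. The text above only says grades run
# from A+ to F; it does not state the numeric scale used for averaging.
GRADE_POINTS = {
    "A+": 4.3, "A": 4.0, "A-": 3.7,
    "B+": 3.3, "B": 3.0, "B-": 2.7,
    "C+": 2.3, "C": 2.0, "C-": 1.7,
    "D+": 1.3, "D": 1.0, "D-": 0.7,
    "F": 0.0,
}


def domain_score(grades):
    """Average the letter grades that reviewers assigned in one domain."""
    return mean(GRADE_POINTS[g] for g in grades)


def overall_score(grades_by_domain):
    """Unweighted mean of the per-domain averages.

    Every domain counts equally, regardless of how many reviewers graded it.
    """
    return mean(domain_score(g) for g in grades_by_domain.values())


# Hypothetical input: two placeholder domains, graded by different numbers
# of reviewers (not every reviewer grades every domain).
example = {
    "domain_1": ["A", "B"],  # two reviewers
    "domain_2": ["C"],       # one reviewer
}
print(domain_score(example["domain_1"]))
print(overall_score(example))
```

Because the overall grade averages the domain averages rather than pooling all individual grades, a domain graded by a single reviewer carries the same weight as one graded by the whole panel.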

Contact

For feedback and corrections, potential collaborations, or other enquiries relating to the AI Safety Index, please contact: policy@futureoflife.org

Later editions

AI Safety Index - Winter 2025

Past editions