Protect what's human.

Contact your Legislator (USA)

Receive action alerts

Let others know

What you need to know about AI today

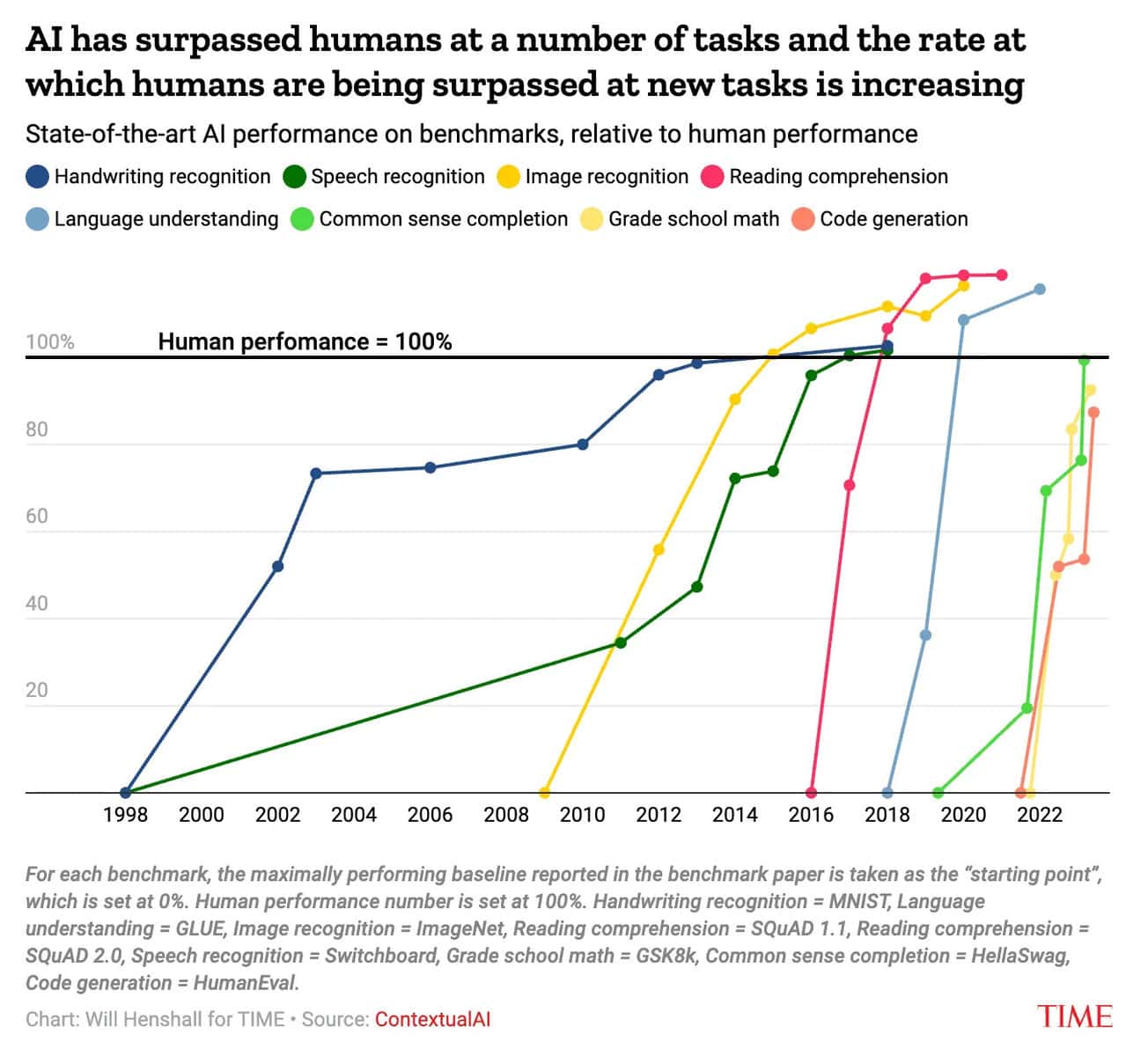

AI capabilities are advancing dramatically

In just the last couple of years, AI models have learned to impersonate humans, generate lifelike audio, images, and videos, beat the world’s best coders at competitive coding challenges, and perform independent research. Every few months, AI systems unlock new capabilities, along with new risks.

As Big Tech companies spend more money and build ever-larger data centers, we can expect AI capabilities to keep accelerating.

Big Tech companies are explicitly building AI to replace humankind

The world’s largest tech companies (Google, Amazon, Microsoft, Facebook) have all stated that they are trying to build artificial general intelligence, or "AGI": a type of AI system that can do more or less all the tasks that a human can do.

Their ultimate goal is NOT to build AI products for you, but for your employer: AIs that can do your job faster and cheaper, and keep doing it indefinitely. They want to replace you.

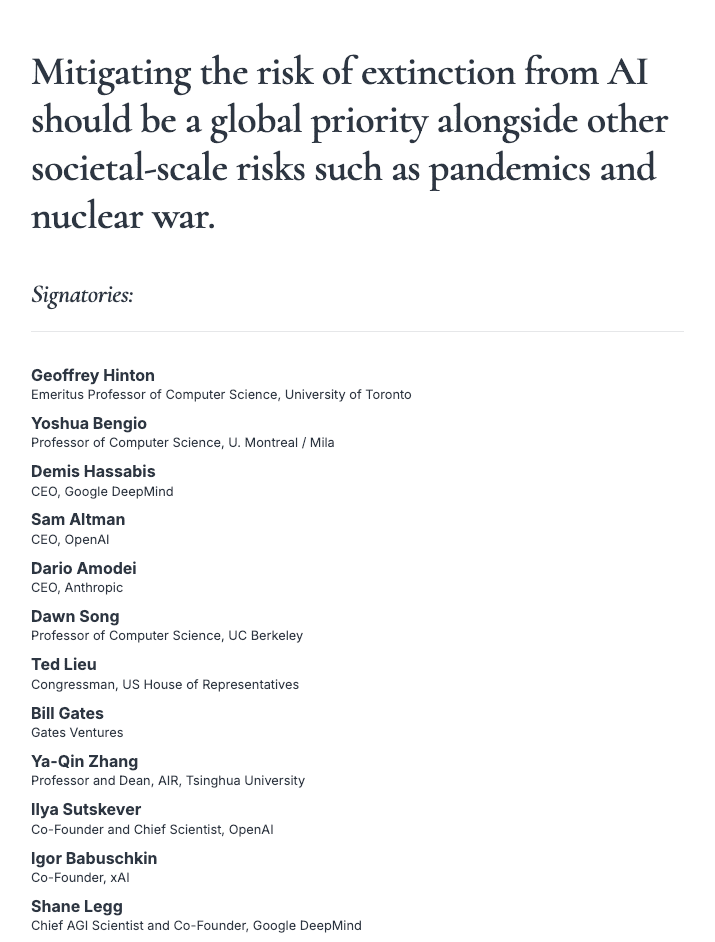

Experts are sounding the alarm

Thousands of experts have sounded the alarm about the massive risks of powerful AI systems, from bioweapons and infrastructure attacks to mass unemployment and even extinction.

You don't have to take their word for it, though. Many of the CEOs leading these tech companies have publicly admitted these risks are real, too.

Despite these early warnings, policymakers have repeatedly surrendered to Big Tech lobbyists and refused to enact common-sense guardrails.

Join #TeamHuman.

Since 2015, people like you have helped us fight for a human future, one in which humanity as a whole is empowered by AI tools to fulfill its potential, rather than replaced by AI.

Here are just a few examples of how we've worked with people around the world to keep the future human:

- 33,000+ individuals, including business leaders, community organizers, families, and concerned citizens, signed the ‘Pause Giant AI Experiments’ open letter calling for a pause on the development of more powerful AI systems. The letter sparked a global discussion on AI safety and ethics.

- Over 1,000 people have participated in workshops, courses, and hackathons to build positive visions of the future with AI. These visions are shaping the narrative around which technologies we should build, and which we should not.

- The world’s religious communities are awakening to the potential benefits, and also the great risks, of powerful AI. They are making their voices heard, and providing moral leadership on the development of emerging technology.

- Thousands of people, including youth leaders, parents, academics, AI experts, and even former AI lab employees, posted their stories on social media and signed open letters to show their support when the fate of a 2024 California bill to protect people from AI risks was hanging in the balance.

- Over 100 million people have watched and shared ‘Slaughterbots’, our viral video series on autonomous weapons, which policymakers still refer to today.

Your voice matters; be ready to use it. Our Action Alerts enable you to take concrete action at exactly the moment it matters most.

We must keep control over the future of our world. We must stop the development of superhuman AI.

Receive action alerts

Subscribe to the FLI newsletter

Start the conversation that matters.

Assets for social media

Our plan to keep humans in control.

Show them what AI is capable of today.

Convincing deepfakes are generated with just a few seconds of audio

DEMO: Voter Turnout Manipulation Using AI

An entire film generated by AI. How realistic do you think it is?

China's slaughterbots show WW3 would kill us all.

Our recommended reads

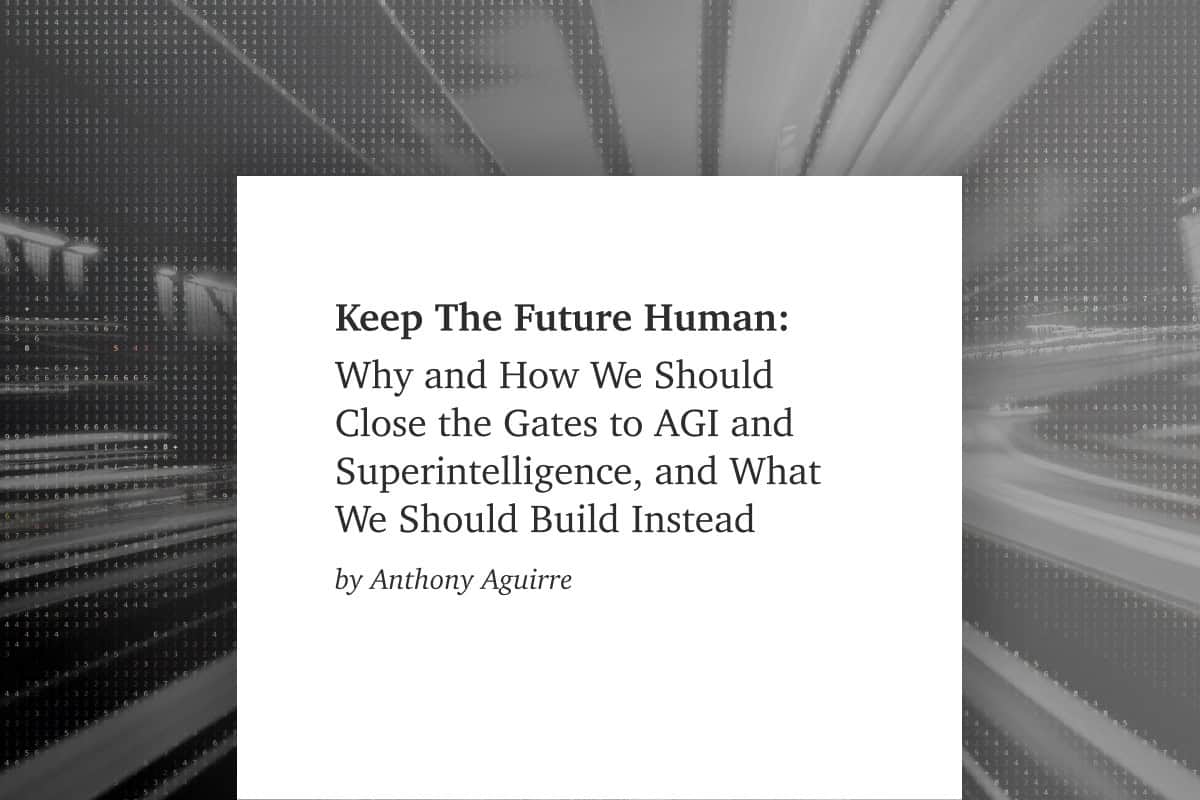

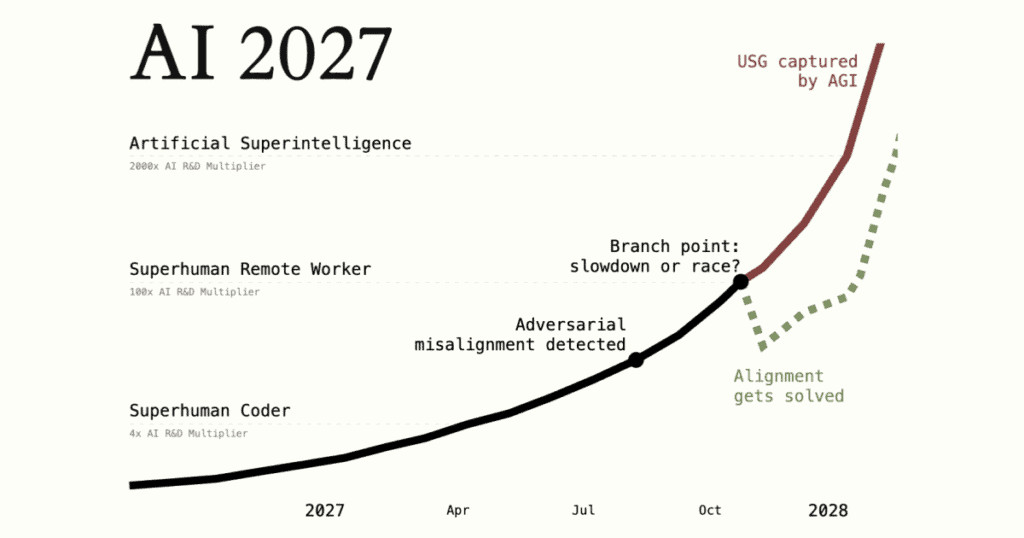

AI 2027

A Narrow Path

The Compendium

Put pressure on companies to excel in safety.

Add your voice to the list of concerned citizens.

Open letter calling on world leaders to show long-view leadership on existential threats

Pause Giant AI Experiments: An Open Letter

Asilomar AI Principles

Participate in our programs and initiatives

Our projects

Digital Media Accelerator

Give to the cause.

We’ve hardly made a dent in our list of project ideas. Donations enable us to grow as an organisation and execute more of our plans.

Visit ‘Our work’ to read more about what we have done so far and the types of projects your donations would help support. You can find everything you need to know about donating on our dedicated page.


Did we miss something you need?

This webpage was recently overhauled to offer more opportunities for action, and more relevant ones.

Let us know if you have any feedback or comments. We're particularly eager to hear:

- If there is something you were looking for, but couldn't find.

- Ideas for resources you would find helpful.

- Things you especially did or didn't like about this page.