Technical Postdoctoral Fellowships

Fellows receive:

- An annual $80,000 stipend for up to 3 years.

- At universities not in the US, UK or Canada, this amount may be adjusted to match local conditions.

- A $10,000 fund that can be used for research-related expenses such as travel and computing.

- Invitations to virtual and in-person events where they will be able to interact with other researchers in the field.

See below for a definition of 'AI Existential Safety research' and additional eligibility criteria.

Questions about the fellowship or application process that are not answered on this page should be directed to grants@futureoflife.org.

The Vitalik Buterin Fellowships in AI Existential Safety are run in partnership with the Beneficial AI Foundation (BAIF).

Grant winners

Ekdeep Singh Lubana

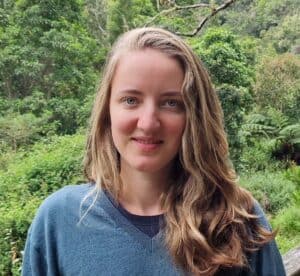

Nandi Schoots

Dr. Peter S. Park

Nisan Stiennon

AI Existential Safety Research Definition

FLI defines AI existential safety research as:

- Research that analyzes the most probable ways in which AI technology could cause an existential catastrophe (that is: a catastrophe that permanently and drastically curtails humanity’s potential, such as by causing human extinction), and which types of research could minimize existential risk (the risk of such catastrophes). Examples include:

- Outlining a set of technical problems and arguments that their solutions would reduce existential risk from AI, or arguing that existing such sets are misguided.

- Concretely specifying properties of AI systems that significantly increase or decrease their probability of causing an existential catastrophe, and providing ways to measure such properties.

- Technical research which could, if successful, assist humanity in reducing the existential risk posed by highly impactful AI technology to extremely low levels. Examples include:

- Research on interpretability and verification of machine learning systems, to the extent that it facilitates analysis of whether the future behavior of the system in a potentially different distribution of situations could cause existential catastrophes.

- Research on ensuring that AI systems have objectives that do not incentivize existentially risky behavior, such as deceiving human overseers or amassing large amounts of resources.

- Research on developing formalisms that help analyze advanced AI systems, to the extent that this analysis is relevant for predicting and mitigating existential catastrophes such systems could cause.

- Research on mitigating cybersecurity threats to the integrity of advanced AI technology.

- Solving problems identified as important by the research described in the first point above, or developing benchmarks to make it easier for the AI community to work on such problems.

The following are examples of research directions that do not automatically count as AI existential safety research, unless they are carried out as part of a coherent plan for generalizing and applying them to minimize existential risk:

- The mitigation of non-existential catastrophes, e.g. ensuring that autonomous vehicles avoid collisions, or that recidivism prediction systems do not discriminate based on race. We believe this kind of work is valuable; it is simply outside the scope of this fellowship.

- Increasing the general competence of AI systems, e.g. improving generative modelling, or creating agents that can optimize objectives in partially observable environments.

Purpose and eligibility

The purpose of the fellowship is to fund talented postdoctoral researchers to work on AI existential safety research. To be eligible, applicants should identify a mentor (typically a professor) at the host institution (typically a university) who commits in writing to mentor and support the applicant in their AI existential safety research if a Fellowship is awarded. This includes ensuring that the applicant has access to office space and is welcomed and integrated into the local research community. Fellows are expected to participate in annual workshops and other activities that will be organized to help them interact and network with other researchers in the field.

Application process

Applicants will submit a detailed CV, a research statement, a summary of previous and current research, the names and email addresses of three referees, and the proposed host institution and mentor (whose agreement must have been secured beforehand).

The research statement should include the applicant’s reason for interest in AI existential safety, a technical specification of the proposed research, and a discussion of why it would reduce the existential risk of advanced AI technologies or otherwise meet our eligibility criteria.

Finalists’ proposed mentors will be asked to confirm that they will supervise the applicant to work on AI existential safety research as per above, and that the applicant will be employed by the host institution.

There are no geographic limitations on applicants or host universities. We welcome applicants from a diverse range of backgrounds, and we particularly encourage applications from women and underrepresented minorities.

Timing for Fall 2024

The application deadline is January 6, 2025 at 11:59 pm ET. After an initial round of deliberation, shortlisted applicants will be interviewed before fellows are finalized. Offers will be made no later than the end of March 2025.
