Policy and Research

Introduction

Improving the governance of transformative technologies

The policy team at FLI works to improve national and international governance of AI.

In 2017 we created the influential Asilomar AI principles, a set of governance principles signed by thousands of leading minds in AI research and industry. More recently, our 2023 open letter caused a global debate on the rightful place of AI in our societies. FLI regularly participates in intergovernmental conferences, and advises governments around the world on questions of AI governance.

How might AI transform our world?

Tomorrow's AI is a scrollytelling site with 13 interactive, research-backed scenarios showing how advanced AI could transform the world—for better or worse. The project blends realism with foresight to illustrate both the challenges of steering toward a positive future and the opportunities if we succeed. Readers are invited to form their own views on which paths humanity should pursue.

Project database

Promoting a Global AI Agreement

Perspectives of Traditional Religions on Positive AI Futures

Recommendations for the U.S. AI Action Plan

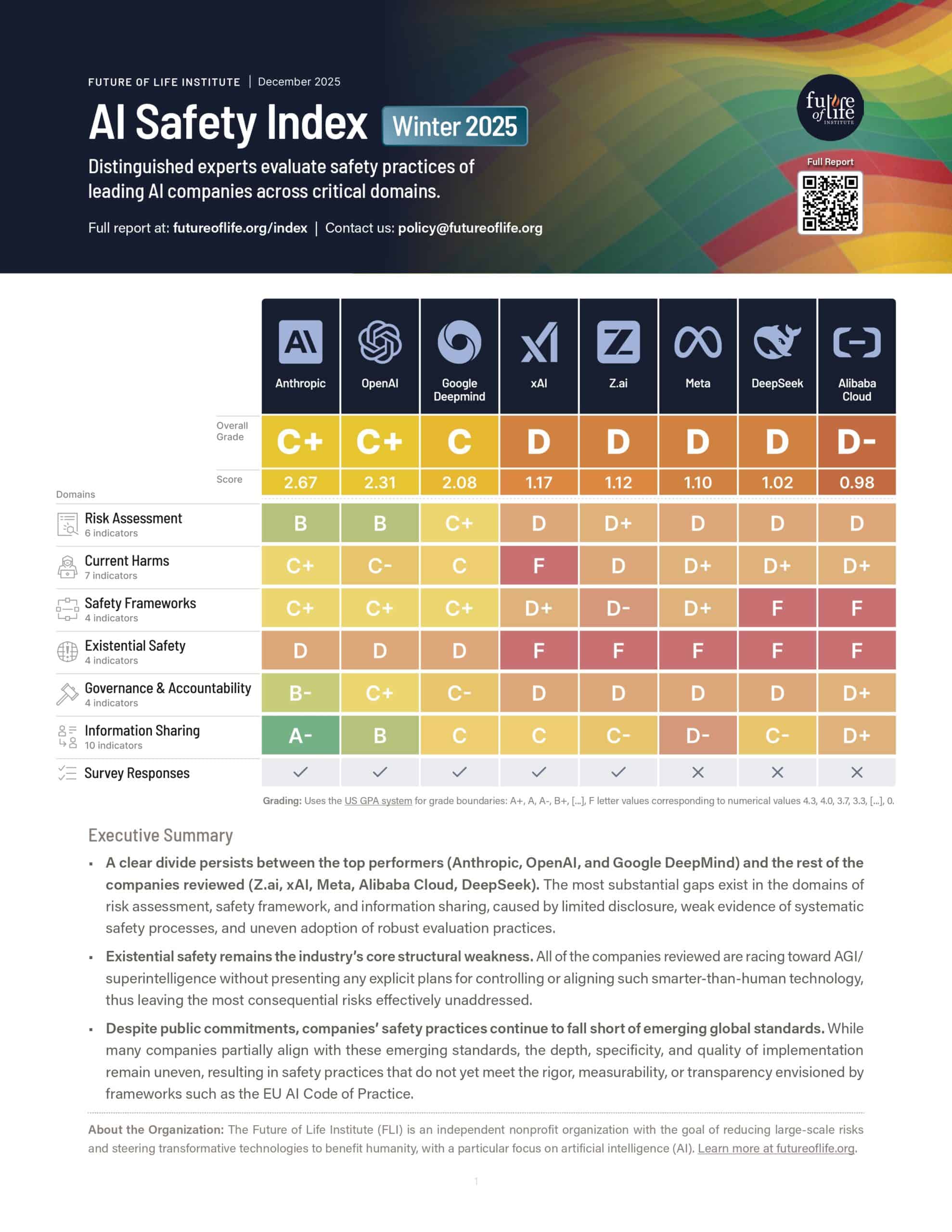

FLI AI Safety Index: Winter 2025 Edition

AI Convergence: Risks at the Intersection of AI and Nuclear, Biological and Cyber Threats

AI Safety Summits

Implementing the European AI Act

Educating about Autonomous Weapons

Latest policy and research papers

Produced by us

FLI’s Recommendations for the AI Impact Summit

AI Safety Index: Winter 2025 (2-Page Summary)

Control Inversion

Embedded Off-Switches for AI Compute

Featuring our staff and fellows

AI Benefit-Sharing Framework: Balancing Access and Safety

Examining Popular Arguments Against AI Existential Risk: A Philosophical Analysis

A Blueprint for Multinational Advanced AI Development

Looking ahead: Synergies between the EU AI Office and UK AISI

We provide high-quality policy resources to support policymakers

US Federal Agencies: Mapping AI Activities

EU AI Act Explorer and Compliance Checker

Autonomous Weapons website

Geographical Focus

Where you can find us

United States

European Union

United Nations

Featured posts

Michael Kleinman reacts to breakthrough AI safety legislation

Context and Agenda for the 2025 AI Action Summit

FLI Statement on White House National Security Memorandum

Paris AI Safety Breakfast #2: Dr. Charlotte Stix

US House of Representatives call for legal liability on Deepfakes

Statement on the veto of California bill SB 1047

Panda vs. Eagle