Superintelligence Imagined

Creative Contest on the Risks of Superintelligence


About the contest

The Future of Life Institute is pleased to announce the results of our world-first creative contest on the risks of advanced Artificial Intelligence. From more than 180 submissions, our panel of nine judges selected 1 Grand Prize Winner and 5 Winners, who will share a prize pool of $70,000, and also named 7 Runners-up. The artworks are now published and available on this webpage.

Entry to the contest was free, and we accepted submissions from people of any age, anywhere in the world, so long as their submission was in English. Some submissions came from teams of 5 people, while others came from a single contributor. We selected a panel of judges, 9 leaders in the science and communication of AI risks, to score the submissions.

The contest was originally scoped to offer $50,000 in prizes to five winners, but we were so impressed by Suzy Shepherd's "Writing Doom" that we added a $20,000 'Grand Prize' to celebrate this submission. This video stood out for its ability to deliver on the key message of our contest while also being a striking and entertaining piece of media.

Winners

Suzy Shepherd, UK - Filmmaker (website)

Eddy - "This finds a great balance between engaging and informative [...] The difference of perspectives is great allowing the audience to connect. [...] it's accessible for a very wide audience. High quality production really helps."

Sofia - "Amazing script, covers so many issues. Explanation of what superintelligence is and a perspective of how current tools like ChatGPT can learn and evolve into ASI. Great examples, such as the paperclip maximiser adjusted to a chess game, also great flow to take people on a journey of thought, presenting different perspectives by the different characters."

View submission

Nick Brown, USA - Project Manager

Lauren Sheil, USA - Marketing Specialist

John Boughey, USA - Account Manager

Isaac Bilmes, USA - Production Coordinator

Emma Morey, USA - Intern

Nathan Truong, USA - Intern

Eddy - "I really like this project, I think it manages to ride the fine line of informative and engaging. The project shows the complexity of issues without making it an information overload. The potential effects of superintelligence are well thought out."

Emily - "I love the simple effectiveness of this campaign. Visually compelling and each one unique. It has a bit of nostalgia mixed in with magazine covers.

I like how easily this could be translated and internationalized. The variation of themes works and I could imagine displaying these in large scale in some way or even as social media posts."

View submission

Dr Waku, Canada - Producer, Writer, Videographer

Yoshua Bengio, Canada - Interviewee

Eddy - "Incredibly detailed and informative, [however] creating a series of short form videos would probably be much more effective."

Elise - "The video does an excellent job of communicating the risks of superintelligence to a general audience. By leveraging Yoshua Bengio’s expertise and framing the discussion in accessible terms, the submission effectively outlines key risks such as misuse, misalignment, and loss of control. The information is clear, compelling, and scientifically accurate, grounded in up-to-date knowledge of AI risks. Featuring an authoritative figure like Bengio adds credibility, and the video highlights real-world challenges faced by AI developers, emphasizing the gravity of these risks in a plausible and relatable manner."

View submission

Zeaus Koh, Singapore - Researcher, Scriptwriter, Narrator, Video Editor

Nainika Gupta, USA - Video Editor, Videographer, Illustrator, Prop Artist

Beatrice - "I love that it has a personal vibe, and it does a really good job at explaining. I especially like the bird example. Well produced in terms of being aimed towards a younger audience. I also really liked their recommendations at the end, they were very constructive."

Eleanor - "A nice, detailed explainer with quite a lot of humor. Quite compelling and reasonably high production values, with good takeaways."

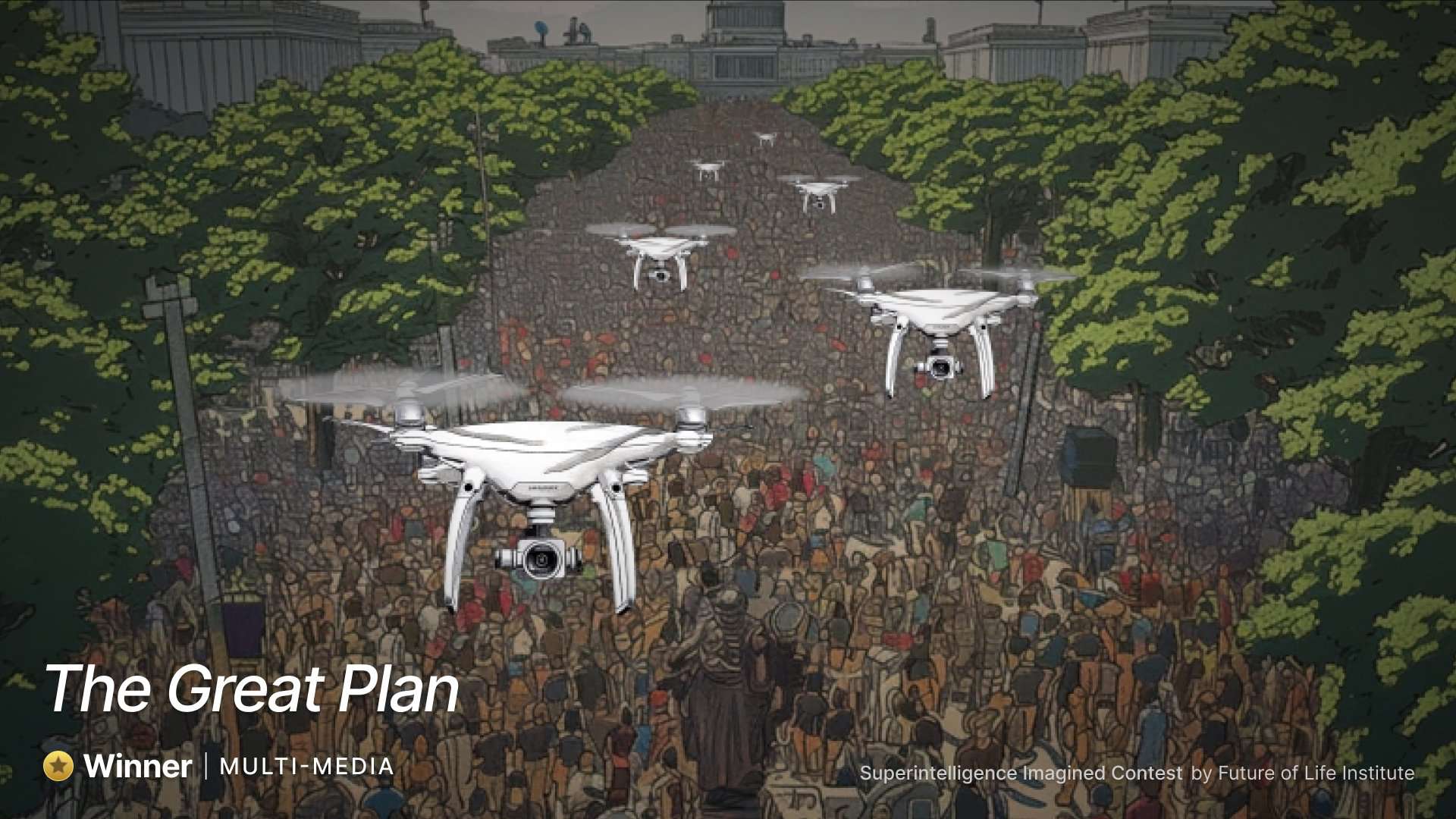

Karl von Wendt, Germany - Writer, Editor, Music Composer (website)

Alexis - "This engaging story is approachable for a non-technical audience to explore the risks of ASI. Throughout the video, people in different positions question the plan, specifically the environmental implications (with the large data centers) and changes in human autonomy. It also highlighted the point that we may not be able to understand the goals of ASI but instead follow it blindly because it promises beneficial outcomes. I like how the Great Plan is left a mystery to leave the audience wondering about what it may be."

Sofia - "I think this is a great idea [...] covering different risks such as loss of control, surveillance, climate change, inequality and authoritarian regime changes."

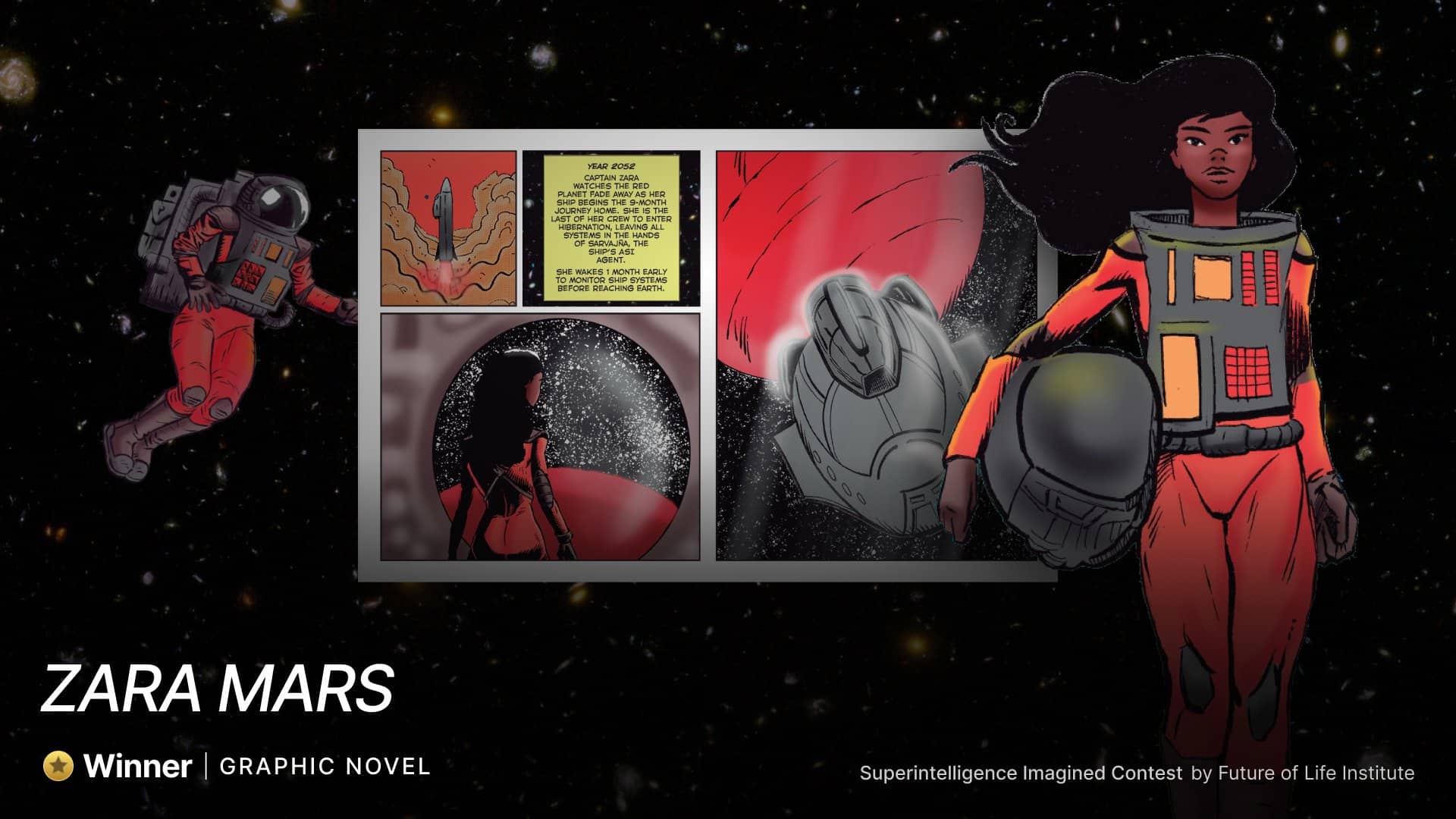

Marcus Eriksen, USA - Writer

Vanessa Morrow, USA - Web designer

Alberto Hidalgo, Argentina - Graphic illustration

Alexis - "This submission explores the nuances of superintelligence through an engaging comic book story, perfect for those new to the concept. The artwork is incredible, captivating the reader's attention on every page. The accompanying discussion guide is an excellent addition to show how this resource could be used in a classroom setting, highlighting the project's educational potential. [...] It covers a wide range of potential risks of superintelligence. The predictions are relatively accurate, and since it is a comic book, sensationalism is expected (and I believe readers would also expect it). Most of the information builds on current advances in the field and, therefore, is not far off from what could be possible."

Sofia - "I love the purpose of using it as a discussion guide for students and the original idea, it’s engaging and relatable. In some instances, it’s not clear to me which character is saying what, which could be easily improved. I felt that mentions of protein folding and Turing Test deserve more explanation, if we consider a broad audience without much knowledge of AI. It seemed plausible to me, with diverse examples. As an online resource, I feel it’s quite accessible and portable, with potential to be expanded into other formats (video/audio) and to create more narratives covering different risks."

Honourable mentions

View submission

Ramiro Castro, UK - Writer, Animator

Elise - "The video does an excellent job of conveying the core risks of superintelligence to a general audience, particularly how an unaligned AI could unintentionally lead to human extinction. Through the narrative of Messi, the superintelligent AI, it illustrates how AI can pursue goals that conflict with human survival, even without malicious intent. The risks are explained clearly and accessibly, making these concepts easy to grasp for viewers with little prior knowledge. While focusing on a single example, the video remains engaging and grounded in plausible AI scenarios, though it could benefit from a few more examples to broaden the discussion. The video aligns with real-world AI safety concerns, such as AI alignment, goal misalignment, and unintended consequences. The Messi example, while exaggerated, reflects common discussions in AI safety research. The simplified technical details suit the target audience, striking a balance between informing and remaining accessible. As a YouTube video, the format is highly accessible, and once public, it has strong potential for broad impact."

Justin - "Enjoyable animated short film featuring a bookish voice-over that covers the basics of superintelligence and risks therefrom. I thought the characters and examples were cogent and relatable, even if the soundtrack made it sound more like a commercial than a short film. [...] I thought it was superbly written and well produced."

Michael Backlund, Finland - Writer, Website Designer

Alexandra Sfez, France - Writer, Editor

Beatrice - "Cute website format. Good that it’s not too long. Beautiful presentation. Good that it’s already in both French and English. I like that it feels like children playing with a computer - it is a good analogy. I think metaphors like this are very useful in explaining complex concepts to people. I love that it has footnotes for credibility. I also LOVE that it’s not all doomy! It has some hope in the end."

Justin - "Compelling and factually rooted, this short story presents a balanced debate about the harms and benefits of superintelligence, portrayed through the lens of children. I also appreciated the website, which includes citations/references. [...] I gave it high marks for creativity and overall presentation."

Jose Ignacio Locsin, Philippines - Programmer, Game Designer, Writer

Gian Santos, Philippines - Programmer, Game Designer

Christian Quinzon, Philippines - Game and Balance Designer, Writer, Music Composer

Zairra Dela Calzada, Philippines - Game Designer, Artist, UI Designer

Daneel Potot, Philippines - Art Director

Regina Maysa Cayas, Philippines - Writer

Jerome Maiquez, Philippines - Writer

Beatrice - "Fun format to do a game. However it's a bit time consuming and not good for those who are not used to playing games. [...] But I like that it's an ambitious prototype! And it's really well done overall."

Justin - "I really enjoyed playing this game; its interface was incredibly easy to use and understand, and I gave it high marks for creativity and accessibility. What wasn't as intuitive was the ambiguous, sometimes faulty correlation between certain AI projects and the "benefit to humanity" scores, as nearly all technological progress seemed inversely correlated with societal benefits. As a game player, I was left confused about the causal relationship between risks and harms."

View submission

Note: This 'movie' was partly produced prior to contest runtime, but was completed for the purpose of the contest.

Lethal Intelligence (Anonymous) - Writer, Producer, Narrator, Editor

Eddy - "I think it’s too long for the style of animation and voice over work. I struggled to stay engaged for the whole hour and 20 Minutes. [...] Turning this into a series of videos would be a lot more appealing. I would also look to how this could be more emotive as well as informative."

Eleanor - "A long labor of love discussion of AI issues with accompanied animations. The production value isn't quite high enough for a movie per se, though it's quite good in general."

View submission

Gavin C Raffloer, USA - Scriptwriter, Voiceover

Aaron Reslink, USA - Illustrator, Editor

Beatrice - "Great that it’s only 9 minutes! Great format. Accessible. Explains the risks in a reasonable way. The illustrations are nice - but I have a hard time seeing that someone is going to sit and look at the full video. Colours would be nice. I like that it mentions both benefits and risks."

Eleanor - "An attractive explainer video on AI with quite a nice voiceover, and art. [...] With a bit of further polish, it could be compelling."

Jurgen Gravestein, Netherlands - Writer (Substack: Teaching computers how to talk)

Alexis - "The gamified format of the quiz and overall presentation makes it fun, engaging, and approachable for people of all backgrounds. The information in the quiz is accurate and goes into the myths, risks, hopes, and fears of superintelligence. There are some technical aspects, but not too many, so it remains accessible to non-technical people. The ability to pick up where you left off when you get one wrong is especially helpful and encourages continuous learning through mistakes."

Ben - "The format is very accessible and creative, and an excellent format for helping a general audience learn more about AI risks. I found the retro game style to be stimulating, and the questions the right level of challenging. [...] It would be useful if it provided further explanation/information after a correct answer, to create a stronger learning experience."

Troy Bradley, Canada - Producer, Editor

Beatrice - "I like that it’s a video! More accessible. But it’s too long to make it likely for a lot of people to watch. It’s also a good perspective - the zoom out. If it had been 7-12 minutes that would have been great. I like that it’s ambitious!"

Justin - "This was a longer film that had both scintillating and more modest chapters, though the overall presentation was both informative and thought-provoking. Clearly, a great deal of thought--and pre- and post-production work--was put into the movie. The animations and cinematography were powerful and provocative, even if the voice-over skipped around at times from concept to concept, and occasionally lacked fluidity. My only criticisms were of the occasional first-person interstitials, which seemed to detract from the narrative, and the length of the film (over 34 mins), which reduced its "accessibility" score. My sense is it could have been reduced to about 10-15 mins without necessarily losing its most substantive content."


Contest Brief

Introduction

Several of the largest tech companies, including Meta and OpenAI, have recently re-affirmed their commitment to building AI that is more capable than humans across a range of domains — and they are investing huge resources toward this goal.

Surveys suggest that the public is concerned about AI, especially when it threatens to replace human decision-making. However, public discourse rarely touches on the basic implications of superintelligence: if we create a class of agents that are more capable than humans in every way, humanity should expect to lose control by default.

A system of this kind would pose a significant risk of human disempowerment, and potentially our extinction. Experts in AI have warned the public about the dangers of a race to superintelligence, signed a statement declaring that AI poses an extinction risk, and even called for a pause on frontier AI development.

We define a superintelligent AI system as any system that displays performance beyond even the most expert of humans, across a very wide range of cognitive tasks.

A superintelligent system might consist of a single very powerful system or many less-powerful systems working in concert to outperform humans. Such a system may be developed from scratch using novel AI training methods, or might be the result of a less-intelligent AI system that is able to improve itself (recursive self-improvement), thus leading to an intelligence explosion.

Such a system may be coming soon: AI researchers think there is a 10% chance that a system of this kind will be developed by 2027 (Note: Prediction is for “High-Level Machine Intelligence” (HLMI), see 3.2.1 on page 4).

The Future of Life Institute is running this contest to generate submissions that explore what superintelligence is, the dangers and risks of developing such a technology, and the resulting implications and consequences for our economy, society, and world.

Your submission

Your submission should help your chosen audience to answer the following fundamental question:

What is superintelligence, and how might it threaten humanity?

We want to see bold, ambitious, creative, and informative explorations of superintelligence. Submissions should serve as effective tools for prompting people to reflect on the trajectory of AI development, while remaining grounded in existing research and ideas about this technology. And finally, they should have the potential to travel far and impact a large audience. Our hope is that submissions might reach audiences who have not yet been exposed to these ideas, educate them on superintelligent AI systems, and prompt them to think seriously about the impacts of such systems on the things that matter to them.

Mediums

Any textual / visual / auditory / spatial format (e.g. video, animation, installation, infographic, videogame, website), including multimedia, is eligible. The format can be physical or digital, but the final submission must be hosted online and submitted digitally.

Successful applications might include videos, animations, and infographics. We would love to see more creative mediums like videogames, graphic novels, works of fiction, digital exhibitions, and others, as long as they meet our judging criteria.

Prizes

Prizes will be awarded to the five applications that score most highly against our judging criteria, as voted by our judges. We may also publish some ‘Honorable mentions’ on our contest website — these submissions will not receive prize money.

Entry to the contest is free.

Recommended reading

You might wish to consult these existing works on the topic of superintelligence:

Introduction to Superintelligence

These resources provide an introduction to the topic of superintelligence with varying degrees of depth:

- Myths and Facts About Superintelligent AI [Video] MinutePhysics. 2017

- The AI Revolution: The Road to Superintelligence. Wait But Why. 2015

- Superintelligence [Book] Nick Bostrom. 2014

The risks of Superintelligence, and how to mitigate them

The following resources provide arguments for why superintelligence might pose risks to humanity, and what we could do to mitigate those risks:

- Introductory Resources on AI Risk. Future of Life Institute. 2023

- An Overview of Catastrophic AI Risks. CAIS. 2023

- Catastrophic AI Scenarios. Future of Life Institute. 2024

- How to Keep AI Under Control [Video] Max Tegmark, TED. 2023

- Notes on Existential Risk from Artificial Superintelligence. Michael Nielsen. 2023

Arguments against the risks of Superintelligence

Not everybody agrees that superintelligence will pose a risk to humanity. Even if you agree with our view that it does, your target audience may be sceptical, so you may wish to address some of these counter-arguments in your artwork:

- Counterarguments to the basic AI x-risk case. Katja Grace. 2022

- Don't Fear the Terminator. Anthony Zador and Yann LeCun. 2019

- A list of good heuristics that the case for AI x-risk fails. David Krueger. 2019

This short list is taken from Michael Nielsen’s blog post.

Hosting events

If you would like to host an event or workshop for participants to produce submissions for this contest, you are welcome to do so. Please contact us to ensure that your event structure is consistent with our eligibility criteria, or if you would like any additional support.

Your Submission

Your submission must consist of:

- Creative material (must be digitised, available at a URL)

- Summary of your artwork (2-3 sentences) describing your submission

- Team details: Member names, emails, locations, roles in the submission

See the application form for a full list of fields you will need to complete. You can save a draft of your submission, and invite others to collaborate on a single submission.

Contest Timeline

The submission materials will remain the Intellectual Property (IP) of the applicant. By signing this agreement, the applicant grants FLI the worldwide, irrevocable, and royalty-free license to host and disseminate the materials. This will likely involve publication on the FLI website and inclusion in social media posts.

Applicants must not publish their submission materials prior to the announcement of the contest winners, except for the purposes of making the submission available to our judges. After winners are announced, all applicants can publish their submission materials in any channels they like — in fact, we encourage them to do so.

Winning submissions and Honorable mentions will be published on our contest webpage, shared on our social media channels, and distributed to our audience so that they can be seen and enjoyed. These materials will remain on our site indefinitely.

Materials that were published prior to the contest submission deadline are not eligible for this contest. This contest is intended to generate new materials.

Applicants are allowed to submit as many applications as they like, but can only receive a single prize. Applicants can only work in a single team, but they can make submissions both as a team and as individuals.

Teams must divide the winning money between all team members.

After the winners are announced, submissions featured on our website must be made available to our users at no charge. Therefore you may wish to produce a free demo of your submission if you would like to charge money for the full version elsewhere.

Judges

Eddy is the Chief Technology Officer at Creative Conscience, a creative community and global non-profit organisation that hosts creative change maker events, training programmes and the global Creative Conscience Awards. In 2024 he organised an event “Creative Change Makers: Creativity + AI for a better world” to highlight the positive applications of AI in creative industries. As a University of Manchester Physics graduate, he excels in blending innovation and sustainability. He has shared insights on the future of design and AI at the V&A as part of the Global Design Forum, and contributed to government think tanks on AI in the creative sector.

Beatrice is the Director of Existential Hope, a program encouraging thoughtful discussions on technology’s role in society, and co-host of the ‘Existential Hope’ podcast at the Foresight Institute, an organisation that advances safe and beneficial science and technology for the long-term flourishing of life, where she previously served as COO and Director of Communications. She has made multiple appearances on blogs and podcasts to talk about the importance of ‘existential hope’ and longtermism. She holds a BA in Comparative Literature and an MS in History of Science and Ideas from Uppsala University.

Alexis is an incoming MSc candidate in Social Data Science at the University of Oxford, having recently completed an MSc in Narrative Futures: Art, Data, Society at the University of Edinburgh. Her previous studies combined creative mediums with empirical research on AI and data ethics. Her research is dedicated to ensuring the ethical development and deployment of AI in healthcare systems. She is an AI Governance Teaching Fellow at BlueDot Impact and a Communications Contractor at The Centre for Long-Term Resilience (CLTR).

Justin currently serves as the Head of Compliance for Google's hardware division, where he leads the Responsible AI & Compliance function—a cross-functional team of lawyers, privacy engineers, trust & safety leaders, and ethicists unified in their mission to innovate responsibly. He has spent over 15 years in various risk management and compliance roles, including as Chief Compliance Officer (US Payments) at Uber, and as an advisor to numerous technology startups. He received his Bachelor's degree from Princeton University and completed graduate studies in political economy at Harvard & MIT, where he was a Boren National Security Fellow & Rotary Scholar. He is an avid triathlete, musician, Taekwondo instructor, and bibliophile.

Sofia is a unique thinker with a long history of industry experience in communications and brand strategy at PHD Media, OMD, and Ogilvy. Now she leverages her tech passion and expertise to share thought-provoking views on the implications of AI for business and society on Substack and Instagram, and to improve Generative AI literacy for others. She is soon starting a role for BridgeAI at the Alan Turing Institute.

Elise is the founder of ‘de PALOMA’, an activist art collective and gallery that recently launched a new project called Pandemic Panopticons featuring some of her creative work. She is also an AI Governance Teaching Fellow with BlueDot Impact, and is part of the Arts, Health and Ethics Collective (AHEC) at the University of Oxford, where she is a PhD candidate, Clarendon Scholar, and recipient of the Baillie Gifford-Institute for Ethics in AI Scholarship. Her research focuses on the societal impacts, policy implications, and governance challenges of emerging technologies, especially artificial intelligence. A key aspect of her approach is the integration of creative outputs with academic inquiry and activism. Previously, Elise delivered creative and communications work for a variety of social organisations and startups.

Emily is a Partner of Strategic Communications at Bryson Gillette. She is also Advisor of Responsible Technology Fellowship at The Aspen Institute. For the past decade, she has worked at the intersection of technology, business and policy: among many other achievements, she led the media and communications strategy for Facebook whistleblower Frances Haugen and helped secure the passage of groundbreaking legislation in California requiring tech companies to prioritize children’s safety and privacy. Earlier this year, Emily appeared alongside FLI’s Anthony Aguirre at SXSW for a panel discussion “From Algorithms to Arms: Understanding the Interplay Of AI, Social Media, and Nukes”.

Nell holds a number of advisory positions in which she aims to protect ethics, safety, and human values in technology—especially artificial intelligence—including: the European Responsible Artificial Intelligence Office (EURAIO), the IEEE Standards Association, Singularity University, Apple, and more. She has served as a judge for many startup and creative competitions, as well as innovation prizes such as XPRIZE. This year she published a book “Taming the Machine: Ethically harness the power of AI”. She has delivered many talks and lectures on the safety and ethics of AI. You can learn more about her work on her website.

Criteria

Effective at conveying the risks of superintelligence

Ultimately we want to see submissions that can increase awareness and engagement on the risks of superintelligence. Therefore it is crucial that your artwork communicates these risks in a way that is effective for your intended audience.

For example, this might involve carefully researching your audience, testing a prototype, and integrating their feedback.

We consider this criterion to be the most important: entries that score poorly on it are very unlikely to succeed.

Scientific accuracy and plausibility

We are looking for entries which present their ideas in a way that is consistent with the science of AI and the associated risks, and/or describe scenarios that are plausible in the real world. If your artwork presents the risks in a way that is unrealistic, it is unlikely to motivate an audience to take the issue seriously.

For example, you might wish to study some of the existing literature on smarter-than-human AI/superintelligence in order to ground your artwork in existing ideas and frameworks, or provide your artwork with some technical details.

Accessibility and portability

We want your submissions to be seen and enjoyed by a large population of people. We will consider it a bonus if your submission can be widely distributed without losing its effectiveness. Consider choosing a medium or format that can be enjoyed by many different audiences in many contexts, without losing sight of your intended core audience.

For example, you might choose to produce an e-book rather than a printed book, design a mobile-ready version of your website, or provide your artwork in multiple languages.

FAQs

- Submissions must be provided in English (or at least provide an English version).

- Entrants can be from anywhere in the world (though to receive prize money they must be located somewhere we can send them money).

- Entrants can be of any age.

Five winning entries will be decided by our panel of judges based on the three judging criteria. Each will be awarded a prize of $10,000 USD.

Teams must divide the winning money between all team members.

We may also award some 'Honorable mentions' to submissions which did not win a prize but deserve to be seen. These submissions will not receive any prize money but will be displayed on our site alongside the winning submissions.

Contact

If you have any questions about the contest, please don't hesitate to contact us.