Make AI Safe

Big Tech is racing to release massively powerful AIs with no meaningful guardrails or oversight.

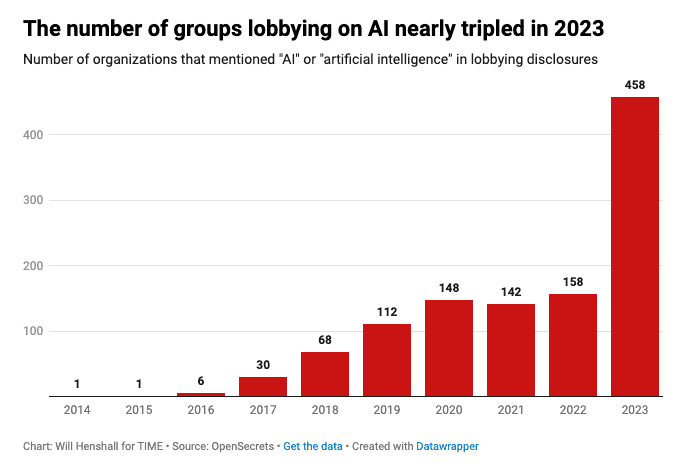

Despite admitting the risks, they aggressively lobby against regulation.

Leaders should step in and require them to be safe. Do you agree?

Find out how you can help

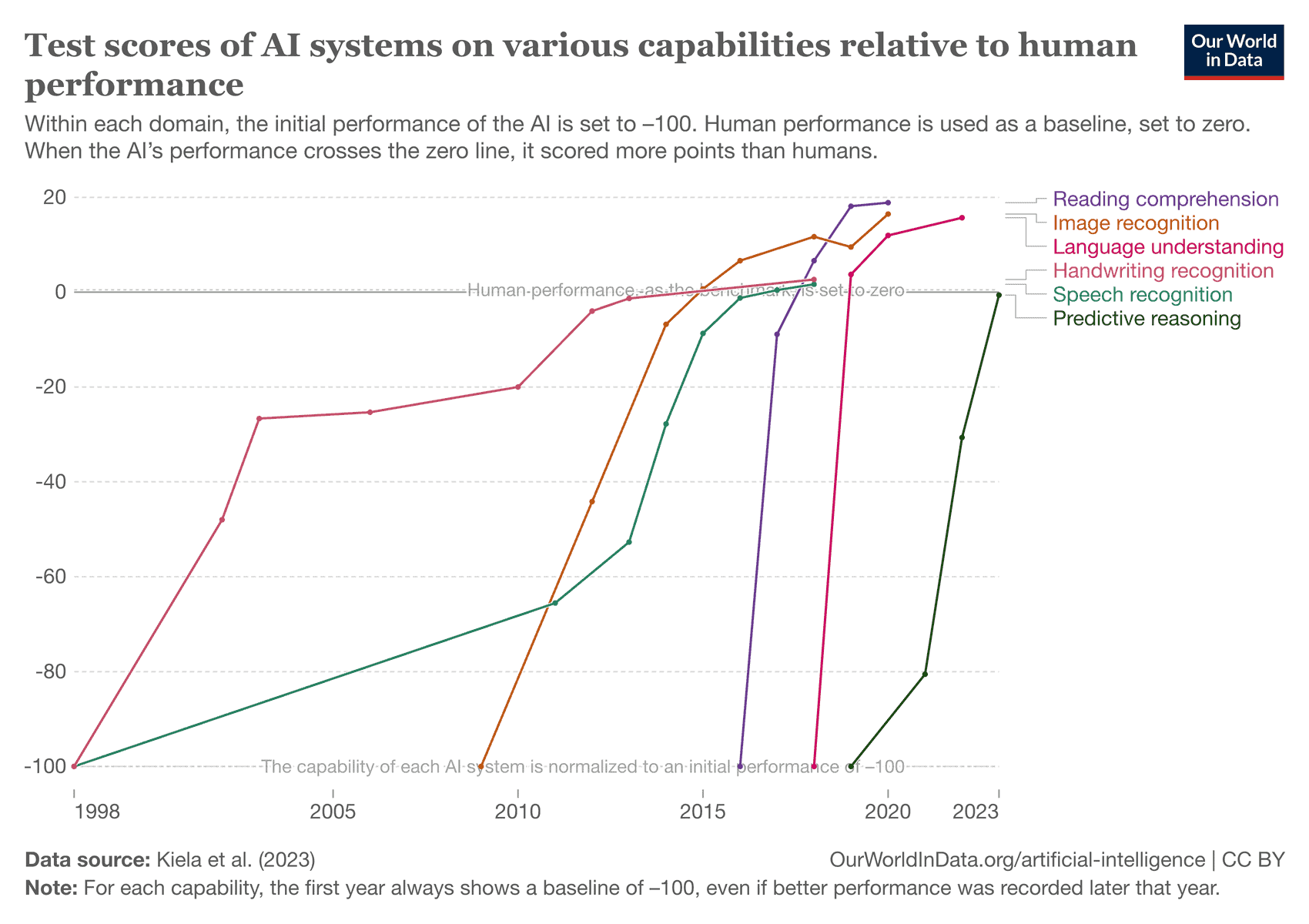

We're seeing rapid development in AI capabilities.

But AI is also extremely dangerous.

Pause Giant AI Experiments: An Open Letter

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

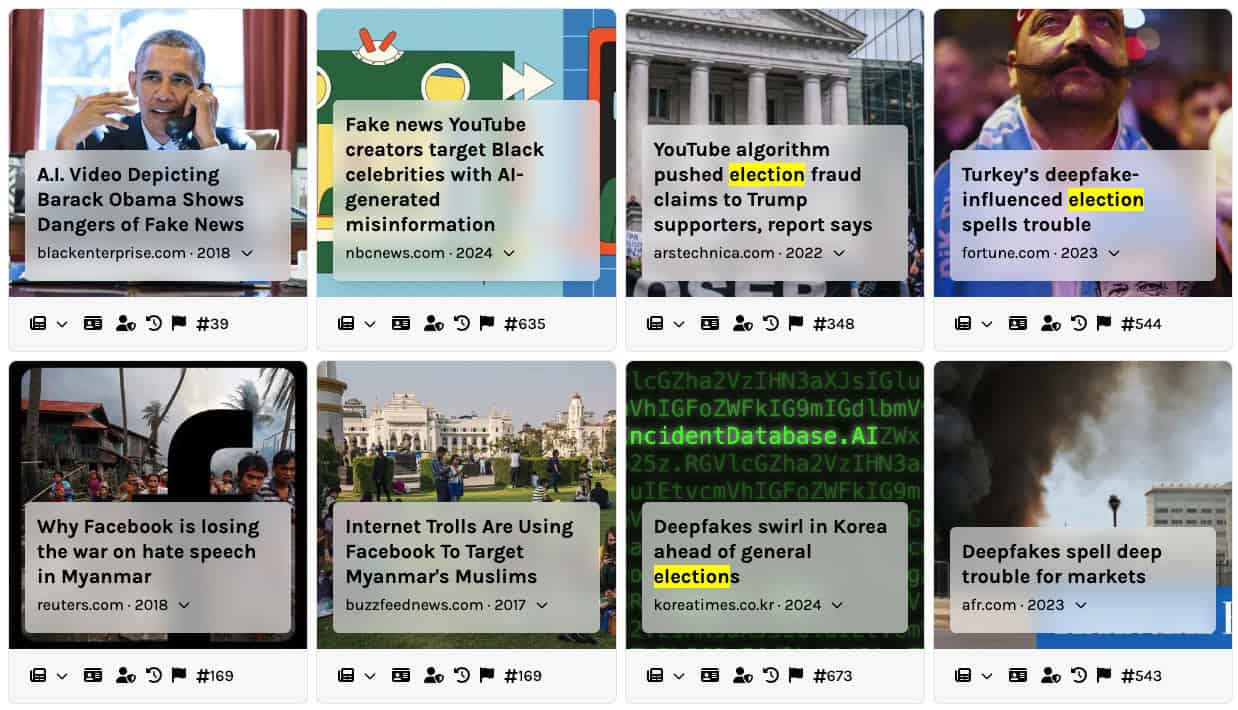

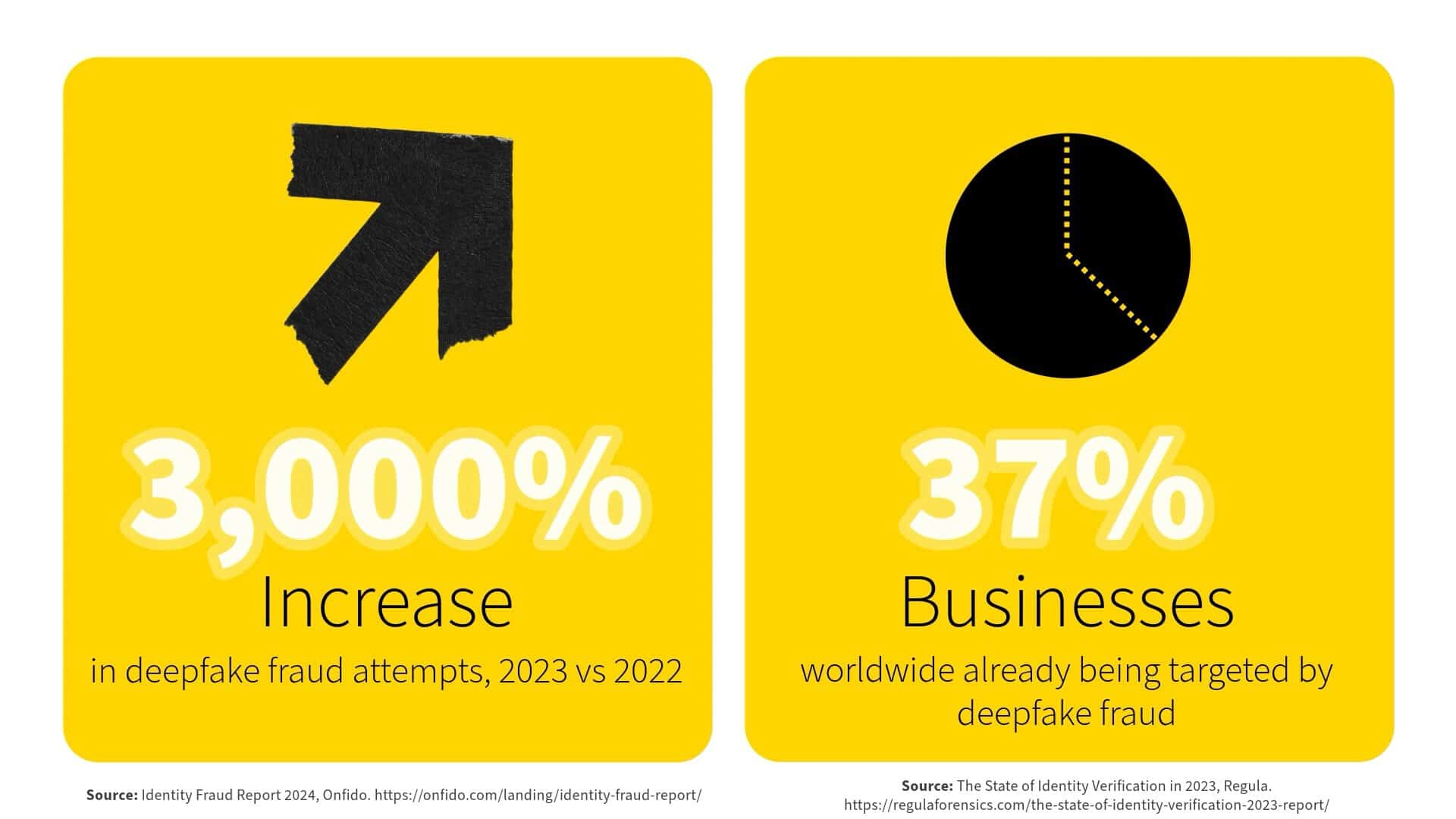

AI-driven harms are already destroying lives and livelihoods.

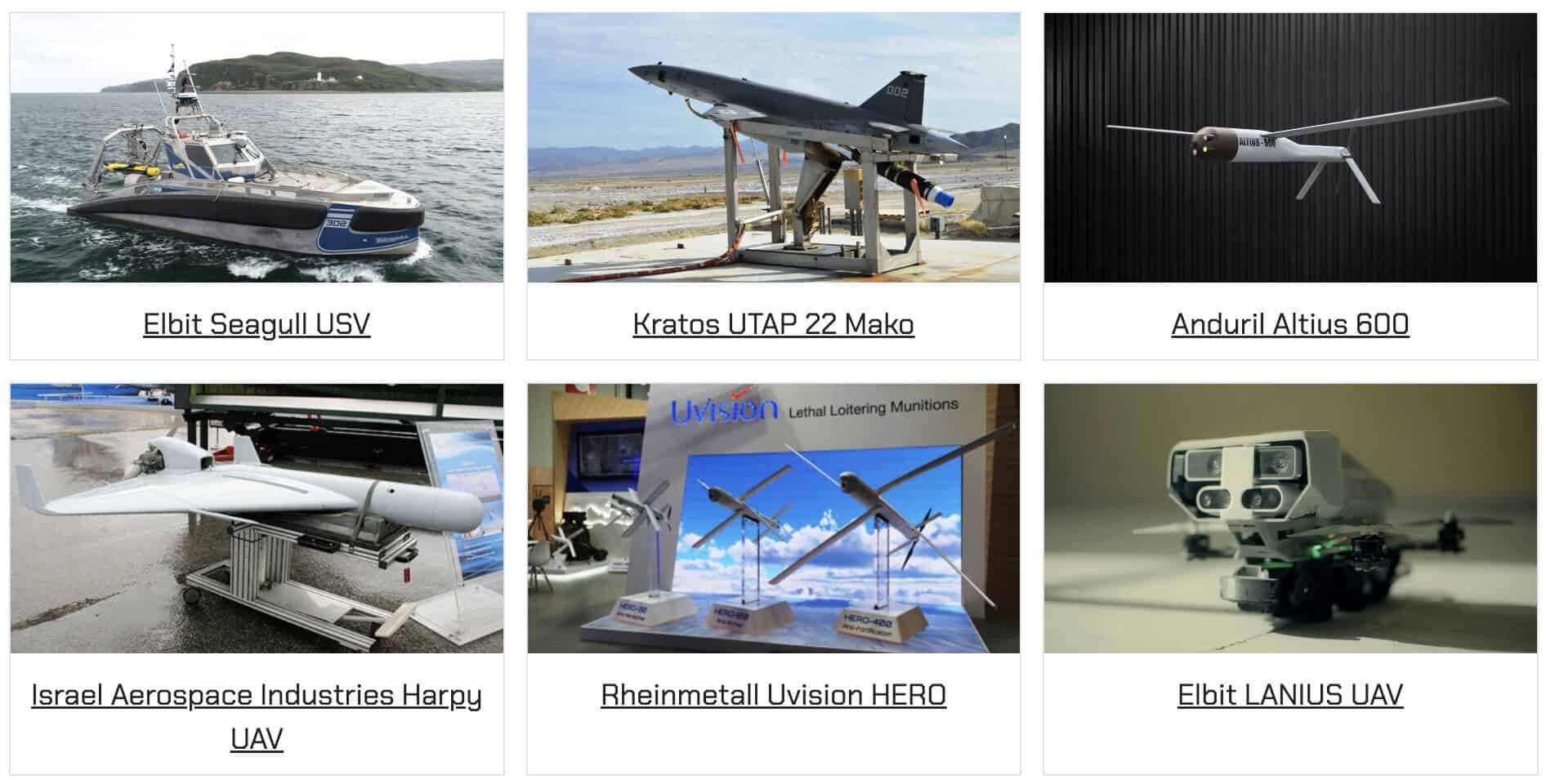

The autonomous weapons of science fiction are here.

The era in which algorithms decide who lives and who dies is upon us. We must act now to prohibit and regulate these weapons.

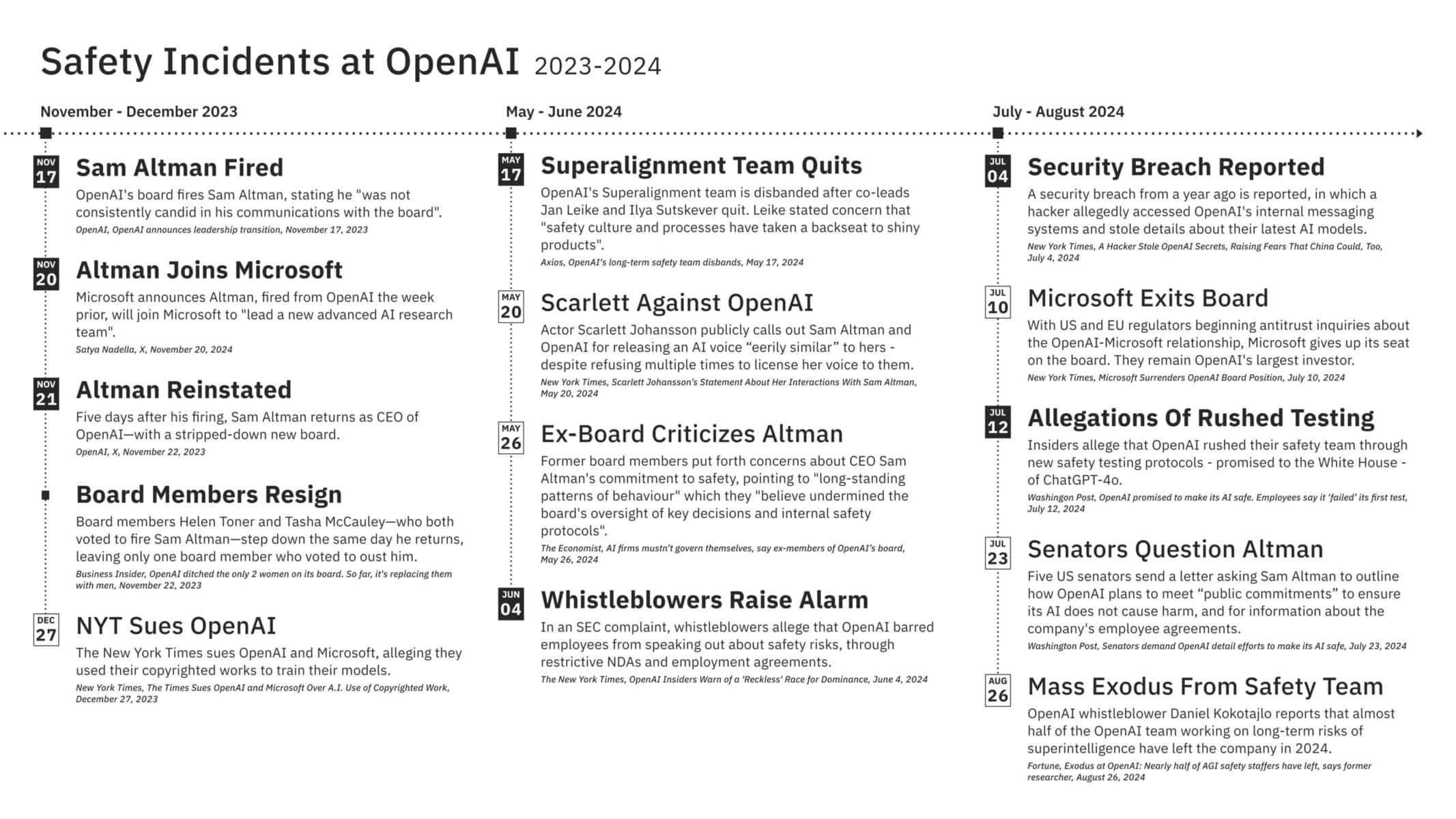

The leading AI developers are locked in a furious race to develop and deploy the most powerful systems as fast as possible.

Intense competition is already forcing them to cut corners. We need laws to keep us safe.

Policymakers face a barrage of industry lobbyists.

Some regulatory efforts have begun, but they are slowed by political inertia and opposed by heavy industry lobbying.

We can build solutions that ensure AI preserves individual freedom and doesn't concentrate enormous power within a handful of companies or individuals.

"It's time for bold, new thinking on existential threats"

Confronting Tech Power

How to mitigate AI-driven power concentration

Deadline: 31 October 2024

We Need An FDA For Artificial Intelligence

Proposals already exist for how AI can be governed effectively. They build on models that have been used to govern powerful technologies in the past.

By creating enforceable safety standards, lawmakers can protect people and sustain innovation for decades to come. By empowering robust institutions, they can deliver the oversight necessary to keep Big Tech and government in line.

Our Position on AI

Advanced AI should be developed safely, or not at all. AI should be developed for all people and to solve real human problems.

We present a framework for regulating AI with safety standards.

With the wrong rules, or no rules at all, advanced AI is likely to concentrate power within a handful of corporations or governments and endanger everyone else.

By carefully developing safe and controllable AI systems to solve specific problems and enable people to do what they could not do before, we can reap huge benefits.

We must take control of AI before it controls us

The ongoing, unchecked, out-of-control race to develop increasingly powerful AI systems puts humanity at risk. These threats, which include rampant unemployment, bioterrorism, widespread disinformation, nuclear war, and many others, are potentially catastrophic.

We urgently need lawmakers to step in and ensure a safety-first approach with proper oversight, standards and enforcement. These are critical not only to protecting human lives and wellbeing but also to safeguarding innovation and ensuring that everyone can access the incredible potential benefits of AI going forward. We must not let a handful of tech corporations jeopardise humanity’s shared future.