FLI October 2022 Newsletter: Against Reckless Nuclear Escalation

Welcome to the Future of Life Institute Newsletter. Every month, we bring 24,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

A quick note before we get started: We’ve redesigned our website! Let us know what you think of it in a reply to this email.

Today’s newsletter is a 6-minute read. We cover:

- Our open letter against reckless nuclear escalation and use

- Ex-ante regulations for general-purpose AI systems

- The re-launch of our podcast

- New research about autonomous weapons and international humanitarian law

- Arkhipov Day

Open Letter Against Reckless Nuclear Escalation and Use

We at FLI suspect that you’re as appalled as we are by how talk of nuclear strikes is suddenly becoming normalized. Moreover, the public debate is almost entirely about strategies for escalating rather than de-escalating, even though there are many de-escalation strategies that involve no concessions or appeasement whatsoever.

FLI has therefore launched an open letter pushing both to de-normalize nuclear threats and to re-mainstream non-appeasing de-escalation strategies that reduce the risk of nuclear war without giving in to blackmail.

- Would you like to join as a signatory? This would be wonderful, because it would help reduce the probability of the greatest catastrophe in human history. To read and consider signing, please click here.

- Still not convinced? Here’s what nuclear war looks like from space:

Governing General Purpose Artificial Intelligence

In October, we joined nine other civil society organizations calling on the European Parliament to impose ex-ante obligations in the EU AI Act on the providers of general-purpose AI systems (GPAIS). Read the open letter here.

- Why this matters: GPAIS can perform many distinct tasks, including those they were not intentionally trained for. Left unchecked, these models – think GPT-3 or Stable Diffusion – can be used for harmful purposes, including disinformation, non-consensual pornography, privacy violations, and more.

- What do we want? The original draft of the AI Act imposed precautionary obligations for uses deemed “high-risk”, placing the burden of compliance on end users who may not be able to influence the AI systems’ behaviour. GPAIS developers, typically well-funded and resourced, are more capable of ensuring that their models pass regulatory scrutiny. The AI Act should recognize these differences and impose obligations on developers and downstream users according to their level of control, resources and capabilities.

- Dive deeper: FLI researchers recently co-authored a new working paper proposing a definition for GPAIS. Our goal was threefold: 1) highlight ambiguity in existing definitions, 2) examine the phrase ‘general purpose’, and 3) propose a definition that is legislatively useful.

- Additional Context: Earlier this year, in May, the French Government proposed that the AI Act should regulate GPAIS. FLI has advocated for this position since the EU released its first draft of the AI Act last year. Last month, FLI’s Vice-President for Policy and Strategy, Anthony Aguirre, spoke on a panel hosted by the Centre for Data Innovation about how the EU should regulate GPAIS.

In Case You Missed It

The FLI Podcast is back!

We’re thrilled about the re-launch of our podcast! Head over to our YouTube channel or follow us on your favourite podcast application to catch all the episodes. Our latest interview is with Ajeya Cotra, a Senior Research Analyst at Open Philanthropy working on AI alignment. Check out the full interview, where we speak to Ajeya about transformative AI, here:

FLI Impacts of Nuclear War and AI Existential Safety Grants

If you’re a researcher or academic, remember to take advantage of our grants:

The AI Existential Safety PhD fellowship covers tuition costs, a stipend of up to $40,000, and a research fund of $10,000. The PostDoc fellowship provides an annual $80,000 stipend and a research fund of $10,000. Applications are due on 15 November 2022 for the PhD Fellowship and on 2 January 2023 for the PostDoc Fellowship.

We’re offering at least $3M in grants to improve scientific forecasts about the impacts of nuclear war. Proposed research projects can address issues such as nuclear use scenarios, fuel loads and urban fires, climate modelling, nuclear security doctrines and more. A typical grant award will range from $100,000 to $500,000 and support a project for up to three years. Letters of Intent are due on 15 November 2022.

Nominate a Candidate for the Future of Life Award!

The Future of Life Award is a $50,000 prize given to individuals who, without much recognition at the time, helped make today dramatically better than it might otherwise have been. Previous winners include individuals who eradicated smallpox, fixed the ozone layer, banned biological weapons, and prevented nuclear wars. Follow these instructions to nominate a candidate. If we decide to give the award to your nominee, you will receive a $3,000 prize!

Governance and Policy Updates

AI Policy:

- The White House published a Blueprint for an AI Bill of Rights.

- At the UN General Assembly, 70 states issued a joint statement calling for new international rules to govern autonomous weapons.

Nuclear Security:

- The 77th session of the UN First Committee on Disarmament and International Security concluded its thematic debate on Weapons of Mass Destruction.

- The US Department of Defense released its Nuclear Posture Review.

Biotechnology:

- The White House published its National Biodefense Strategy.

Climate Change:

- A new report published by the UN ahead of the 27th Conference of the Parties to the UNFCCC in Egypt warned that countries’ climate plans are “nowhere near” the 1.5°C goal.

Updates from FLI

- The MIT Technology Review quoted Claudia Prettner, our EU policy representative, explaining why the EU’s new AI Liability Directive should impose strict liability on high-risk AI systems.

- Vox profiled FLI President Max Tegmark for their Future Perfect 50 series – a list of individuals who are “building a more perfect future.” The profile featured FLI advocacy on lethal autonomous weapons and the Future of Life Award.

- Our Director of AI projects, Richard Mallah, spoke on the Foresight Institute’s Existential Hope podcast about his vision for aligned AI and a better future for humanity.

New Research

Autonomous weapons and international law: A new report by the Stockholm International Peace Research Institute outlines how international law governing state responsibility and individual criminal responsibility applies to the use of lethal autonomous weapon systems (AWS).

- Why this matters: Multilateral efforts to ban the use of AWS have stalled, making it imperative to clarify who is liable for their development and use.

- Dive deeper: Visit our website to learn more about AWS and why organizations like the International Committee of the Red Cross and dozens of nation states want international safeguards. To raise awareness about the ethical, legal, and strategic implications of AWS, we produced two short films about “slaughterbots”. Watch them here:

What We’re Reading

Nuclear Winter: Heat, radiation, blast effects, and prolonged nuclear winter. These are the ways nuclear war will kill you and everyone you know, according to a new visual essay by the Bulletin of the Atomic Scientists.

- Dive deeper: On Aug 6, 2022 – Hiroshima Day – we presented the Future of Life Award to the individuals who discovered and popularised nuclear winter. Find out more about their work here.

Weaponized Robots: Six robotics manufacturers, led by Boston Dynamics, have pledged not to weaponize “general-purpose” robots. Boston Dynamics VP Brendan Schulman thinks this is a good start; others are more sceptical.

Nuclear Escalation: “A series of individually rational steps can add up to a world-ending absurdity.” That’s what the Director of the European Council on Foreign Relations had to say about the potential for nuclear escalation in the Ukraine war.

Biosecurity: A recent controversy surrounding a Boston University study that involved modifying the SARS-CoV-2 virus demonstrates the need for better communication and government guidance regarding bio-risks, says a new piece in Nature.

- Dive deeper: We recently interviewed Dr. Filippa Lentzos, Senior Lecturer in Science and International Security at King’s College London, about global catastrophic biological risks. Listen to the conversation here.

Planetary Defence: NASA confirmed that its efforts to divert the orbit of an asteroid were successful. We can now – in principle – deflect asteroids heading towards the Earth!

- Dive deeper: Our interview with climate scientist Brian Toon covers why asteroids are an existential risk. Watch the full episode here:

Hindsight is 20/20

On October 27, 1962, at the height of the Cuban Missile Crisis, the US Navy began dropping signaling depth charges on the Soviet submarine B-59 near Cuba. Unbeknownst to the Americans, the submarine under fire was armed with nuclear weapons and had been out of contact with Moscow for over four days.

Believing that war had already started, the submarine’s captain, Valentin Grigoryevich Savitsky, decided to launch a nuclear torpedo. Fortunately for us, Savitsky needed the approval of his second-in-command, Vasily Arkhipov, who refused to grant it.

Arkhipov’s story is relatively unknown, despite its importance. But it did feature in a PBS documentary, The Man Who Saved the World, and a National Geographic profile titled “You (and almost everyone you know) Owe Your Life to This Man.”

In 2017, we tried to correct this by honoring him with the Future of Life Award. Read about it here.

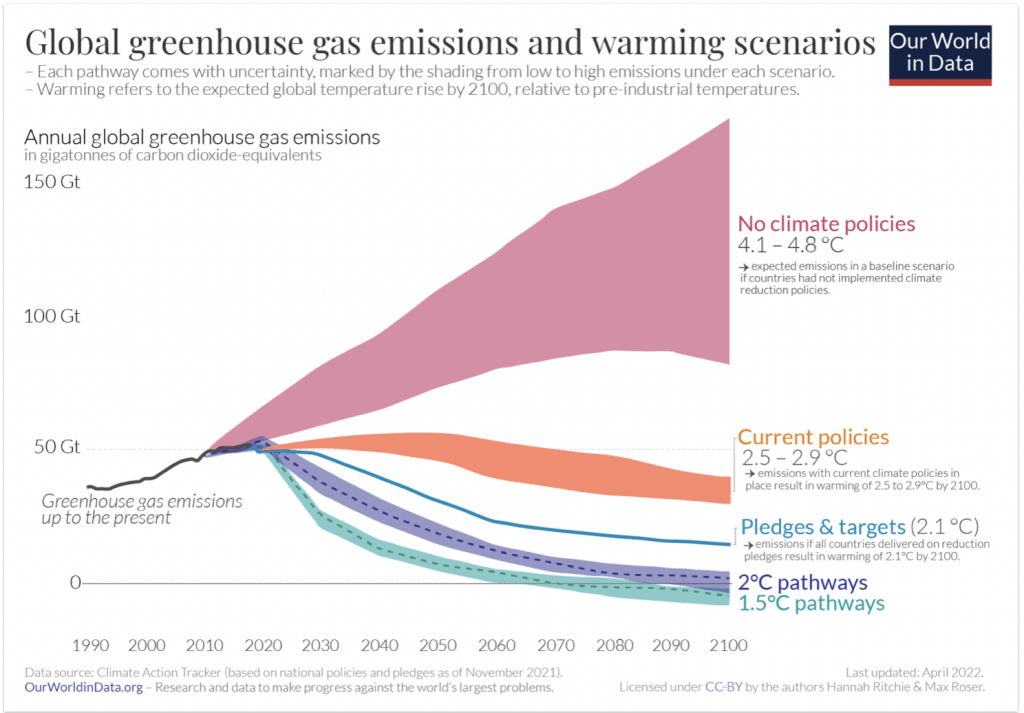

Chart of the Month

The 27th Conference of the Parties of the UNFCCC (COP27) will take place from 6 to 18 November 2022 in Sharm El Sheikh, Egypt. Are we on track to meet the goals set out in the Paris Agreement? Not yet. Here’s a chart from Our World in Data.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax exempt in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.