
FLI November 2017 Newsletter

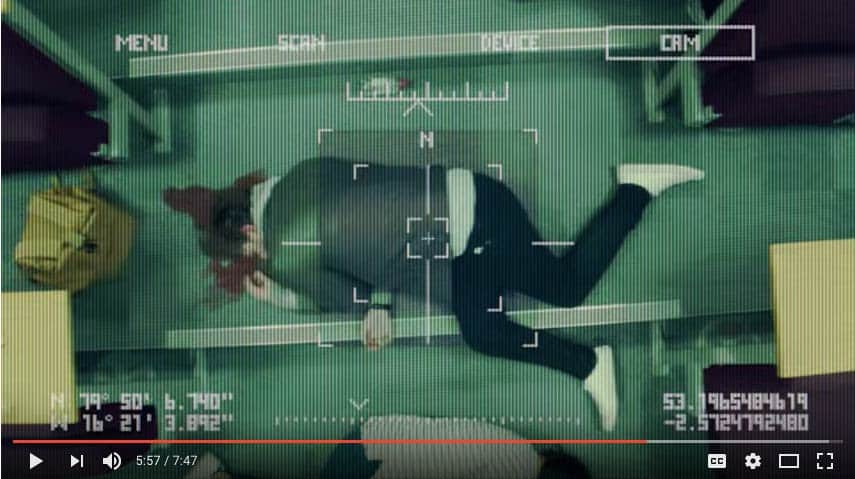

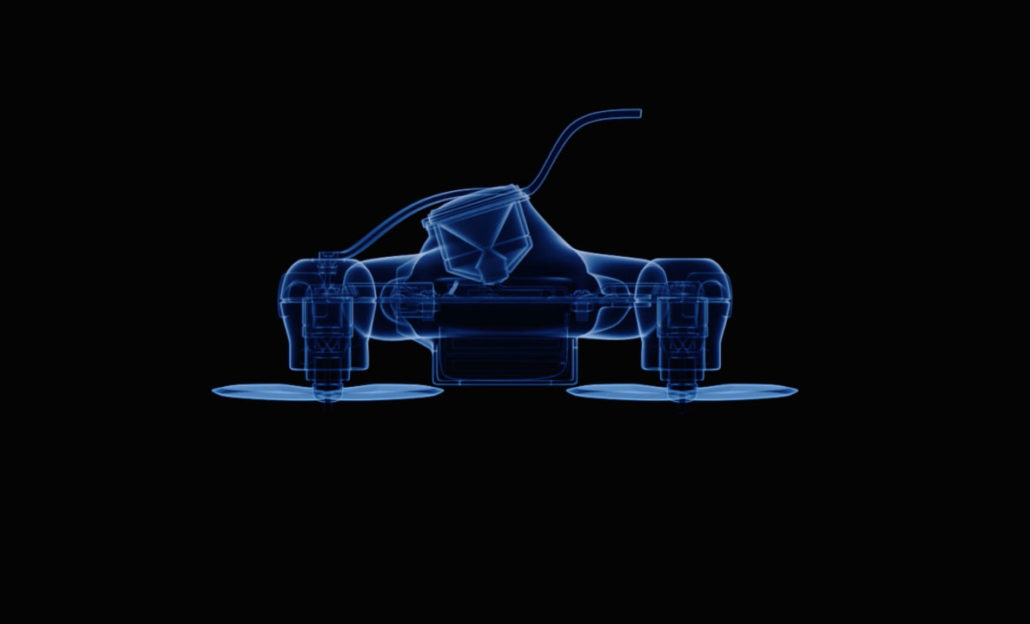

AI Researchers Create Video to Call for Autonomous Weapons Ban at UN

The video, “Slaughterbots,” was launched in Geneva, where AI researcher Stuart Russell presented it at an event hosted by the Campaign to Stop Killer Robots during the United Nations Convention on Conventional Weapons meeting.

Support for a ban has been mounting. Just this past week, over 200 Canadian scientists and over 100 Australian scientists in academia and industry penned open letters to Prime Ministers Justin Trudeau and Malcolm Turnbull, respectively, urging them to support the ban. Earlier this summer, over 130 leaders of AI companies signed a letter in support of this week’s discussions. These letters follow a 2015 open letter released by the Future of Life Institute and signed by more than 20,000 AI and robotics researchers and others, including Elon Musk and Stephen Hawking.

To learn more about these weapons and the efforts to ban them, visit autonomousweapons.org and sign up for the newsletter to stay up to date.

Banning Lethal Autonomous Weapons

Many of the world’s leading AI researchers and humanitarian organizations are concerned about the potentially catastrophic consequences of allowing lethal autonomous weapons to be developed. Encourage your country’s leaders to support an international treaty limiting lethal autonomous weapons:

Add your name to the list of over 20,000 others who oppose autonomous weapons.

Visit the Campaign to Stop Killer Robots to take further action or donate to the cause.

Check us out on SoundCloud and iTunes!

Podcast: Balancing the Risks of Future Technologies

with Andrew Maynard and Jack Stilgoe

What does it mean for technology to “get it right,” and why do tech companies ignore long-term risks in their research? How can we balance near-term and long-term AI risks? And as tech companies become increasingly powerful, how can we ensure that the public has a say in determining our collective future?

To discuss how we can best prepare for societal risks, Ariel spoke with Andrew Maynard and Jack Stilgoe on this month’s podcast. Andrew directs the Risk Innovation Lab in the Arizona State University School for the Future of Innovation in Society, where his work focuses on exploring how emerging and converging technologies can be developed and used responsibly within an increasingly complex world. Jack is a senior lecturer in science and technology studies at University College London where he works on science and innovation policy with a particular interest in emerging technologies.

When Should Machines Make Decisions?

By Ariel Conn

Human Control Principle: Humans should choose how and whether to delegate decisions to AI systems, to accomplish human-chosen objectives.

Continuing our discussion of the 23 Asilomar AI principles, researchers weigh in on issues of human control in AI systems. When is it okay to let a machine make a decision instead of a person? And how do we address the question of control when AI becomes so much smarter than us? If an AI knows more about the world and our preferences, would it be better if the AI made all decisions for us?

By Jessica Cussins

Private companies and military sectors have moved beyond the goal of merely understanding the brain to that of augmenting and manipulating brain function. In particular, companies such as Elon Musk’s Neuralink and Bryan Johnson’s Kernel are hoping to harness advances in computing and artificial intelligence alongside neuroscience to provide new ways to merge our brains with computers.

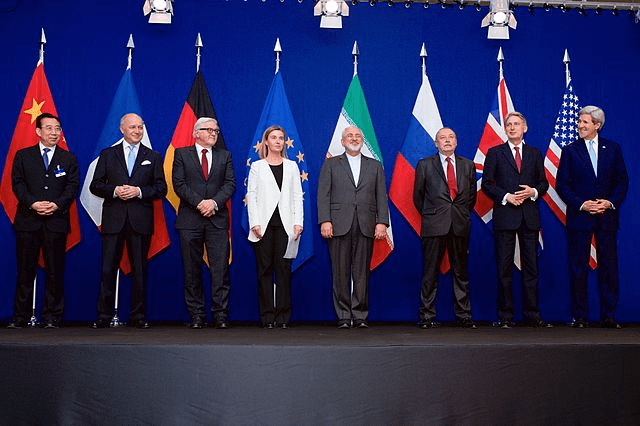

By Dr. Lisbeth Gronlund

Over 90 prominent scientists sent a letter to leading members of Congress yesterday urging them to support the Iran Deal—making the case that continued US participation will enhance US security.

Many of these scientists also signed a letter to President Obama in August 2015 strongly supporting the Iran Deal, as well as a letter to President-elect Trump in January. In all three cases, the first signatory is Richard L. Garwin, a long-standing UCS board member who helped develop the H-bomb as a young man and has since advised the government on all manner of security issues. Last year, he was awarded a Presidential Medal of Freedom.

In 1992, the Union of Concerned Scientists and the majority of Nobel laureates in the sciences penned the “World Scientists’ Warning to Humanity.” Pointing to growing problems like ocean dead zones, biodiversity destruction, climate change, and continued human population growth, the scientists argued that “a great change in our stewardship of the Earth and the life on it is required, if vast human misery is to be avoided.”

Now, 25 years after this initial dire warning, over 15,000 scientists from 184 countries have signed a “second notice” to humanity, warning that we are on a collision course with the natural world, and that “soon it will be too late to shift course away from our failing trajectory.”

Harvesting Water Out of Thin Air: A Solution to the Water Shortage Crisis?

By Jung Hyun Claire Park

One in nine people around the world lack access to clean water. As the global population grows and the climate heats up, experts fear that water shortages will worsen. To address this anticipated crisis, scientists are turning to a natural reserve of fresh water that has yet to be exploited: the atmosphere.

The atmosphere is estimated to contain roughly 13 quadrillion liters (about 13,000 cubic kilometers) of water vapor and droplets, which could contribute significantly to resolving the water shortage problem.

How might a nuclear crisis play out in today’s media environment? What dynamics in this information ecosystem—with social media increasing the velocity and reach of information, disrupting journalistic models, creating potent vectors for disinformation, and changing how political leaders interact with constituencies—might challenge decision making during crises between nuclear-armed states?

News from Partner Organizations

The Future Society: AI Global Civic Debate

We at FLI are excited to partner with the AI Initiative and The Future Society in the second phase of a global civic debate on Governing the Rise of Artificial Intelligence. This debate is open to anyone and available in five languages. Join in at aicivicdebate.org and engage with us on the thread for AI Safety and Security, where we will be discussing catastrophic and existential risk posed by artificial intelligence and autonomous weapons.

Pick a theme and join the debate here!

VIDEO: Managing Extreme Technological Risk

The Centre for the Study of Existential Risk (CSER) released a video about existential risk featuring Martin Rees, which you can view here. The video describes the different types of existential risks facing humanity, and what we can do about them.

CSER is “an interdisciplinary research centre within CRASSH at the University of Cambridge dedicated to the study and mitigation of existential risks.” Visit CSER’s new site to learn more about their research and efforts to keep humanity safe!

The Threat of Nuclear Terrorism: Dr. William J. Perry

Former U.S. Secretary of Defense William Perry launched a MOOC on the changing nuclear threat. With a team of international experts, Perry explores what can be done about the threat of nuclear terrorism.

The course will answer questions like: Is the threat of nuclear terrorism real? What would happen if a terror group were able to detonate a nuclear weapon? And what can be done to lower the risk of a nuclear catastrophe? This course is free, and you can enroll here.

What We’ve Been Up to This Month

Anthony Aguirre attended the Partnership on AI’s first meeting this month. Since October, FLI has been an official member of the Partnership on AI, whose mission is to study and formulate best practices on AI technologies, to advance the public’s understanding of AI, and to serve as an open platform for discussion and engagement about AI and its influences on people and society.

Ariel Conn participated as a discussion leader at the Future Workforce Roundtable hosted by Littler’s Workplace Policy Institute and its Robotics, AI & Automation Practice Group. The discussion focused on current issues facing the workforce and how those issues might change as AI moves toward superintelligence.

Richard Mallah participated in a workshop at the Machine Intelligence Research Institute (MIRI), which covered Paul Christiano’s AI safety research agenda and its intersections with MIRI’s agent foundations research agenda. MIRI’s mission is to ensure that the creation of smarter-than-human intelligence has a positive impact. They aim to make advanced intelligent systems behave as we intend even in the absence of immediate human supervision.

Viktoriya Krakovna gave a talk at EA Global London about AI safety careers. EA Global London was an advanced EA conference with a focus on research and intellectual exploration. It included a research poster session and career coaching from 80,000 Hours. Topics included research on basic income, moral trade, EA in government and policy, and individual vs. collective action.

FLI in the News

Slaughterbots

WASHINGTON POST: Stop the rise of the ‘killer robots,’ warn human rights advocates

“It is very common in science fiction films for autonomous armed robots to make life-or-death decisions — often to the detriment of the hero. But these days, lethal machines with an ability to pull the trigger are increasingly becoming more science than fiction.”

CNN: ‘Slaughterbots’ film shows potential horrors of killer drones

“Such atrocities aren’t possible today, but given the trajectory of tech’s development, that will change in the future. The researchers warn that several powerful nations are moving toward autonomous weapons, and if one nation deploys such weapons, it may trigger a global arms race to keep up.”

THE GUARDIAN: Ban on killer robots urgently needed, say scientists

“The short, disturbing film is the latest attempt by campaigners and concerned scientists to highlight the dangers of developing autonomous weapons that can find, track and fire on targets without human supervision.”

VICE: Watch ‘Slaughterbots,’ A Warning About the Future of Killer Bots

“On Friday, the Future of Life Institute—an AI watchdog organization that has made a name for itself through its campaign to stop killer robots—released a nightmarish short film imagining a future where smart drones kill.”

FOX NEWS: UC Berkeley professor’s ‘slaughterbots’ video on killer drones goes viral

“A UC Berkeley computer science professor helped to create a video that imagined a world where nuclear weapons were replaced by swarms of autonomous tiny drones that could kill half a city and are virtually unstoppable.”

THE INDEPENDENT: Killer robots must be banned but ‘window to act is closing fast’, AI expert warns

“In the video, autonomous weapons are used to carry out mass killings with frightening efficiency, while people struggle to work out how to combat them.”

BUSINESS INSIDER: The short film ‘Slaughterbots’ depicts a dystopian future of killer drones swarming the world

“The Future of Life Institute released a short film showing a dystopian future with killer drones powered by artificial intelligence.”

FLI

FINANCIAL TIMES: The media have duty to support tech in fourth industrial revolution

“Max Tegmark and the Future of Life Institute are driving research on AI safety, a field that he and his colleagues had to invent. Public institutions in most continents are not yet even playing catch-up.”

UNDARK: In ‘Life 3.0,’ Max Tegmark Explores a Robotic Utopia — or Dystopia

“Where is it all heading? Enter Max Tegmark, iconoclastic mop-topped MIT physicist and co-founder of the Future of Life Institute, whose mission statement includes ‘safeguarding life’ and developing ‘positive ways for humanity to steer its own course considering new technologies and challenges.’”

Get Involved

FHI: AI Policy and Governance Internship

The Future of Humanity Institute at the University of Oxford seeks interns to contribute to our work in the areas of AI policy, AI governance, and AI strategy. Our work in this area touches on a range of topics and areas of expertise, including international relations, international institutions and global cooperation, international law, international political economy, game theory and mathematical modelling, and survey design and statistical analysis. Previous interns at FHI have worked on issues of public opinion, technology race modelling, the bridge between short-term and long-term AI policy, the development of AI and AI policy in China, case studies in comparisons with related technologies, and many other topics.

If you or anyone you know is interested in this position, please follow this link.

FHI: AI Safety and Reinforcement Learning Internship

The Future of Humanity Institute at the University of Oxford seeks interns to contribute to our work in the area of technical AI safety. Examples of this type of work include Cooperative Inverse Reinforcement Learning, Learning the Preferences of Ignorant, Inconsistent Agents, Learning the Preferences of Bounded Agents, and Safely Interruptible Agents. The internship will give the opportunity to work on a specific project. The ideal candidate will have a background in machine learning, computer science, statistics, mathematics, or another related field. This is a paid internship. Candidates from underrepresented demographic groups are especially encouraged to apply.

If you or anyone you know is interested in this position, please follow this link.

FHI: AI Safety Postdoctoral Research Scientist

You will advance the field of AI safety by conducting technical research. You can find examples of related work from FHI on our website. Your research is likely to involve collaboration with researchers at FHI and with outside researchers in AI or computer science. You will publish technical work at major conferences, raise research funds, manage your research budget, and potentially hire and supervise additional researchers.

If you or anyone you know is interested in this position, please follow this link.

FHI: AI Safety Research Scientist

You will be responsible for conducting technical research in AI Safety. You can find examples of related work from FHI on our website. Your research is likely to involve collaboration with researchers at FHI and with outside researchers in AI or computer science. You will co-publish technical work at major conferences, own research budget for your project, and contribute to the recruitment process and supervision of additional researchers.

If you or anyone you know is interested in this position, please follow this link.

The Fundraising Manager will have a key role in securing funding to enable us to meet our aim of increasing the preparedness, resources, and ability (knowledge and technology) of governments, corporations, humanitarian organizations, NGOs, and individuals to feed everyone in the event of a global catastrophe through recovery of food systems. To reach this ambitious goal, the Fundraising Manager will develop a strategic fundraising plan and interface with the funder community, including foundations, major individual donors, and other non-corporate institutions.

If you or anyone you know is interested in this position, please follow this link.

To learn more about job openings at our other partner organizations, please visit our Get Involved page.