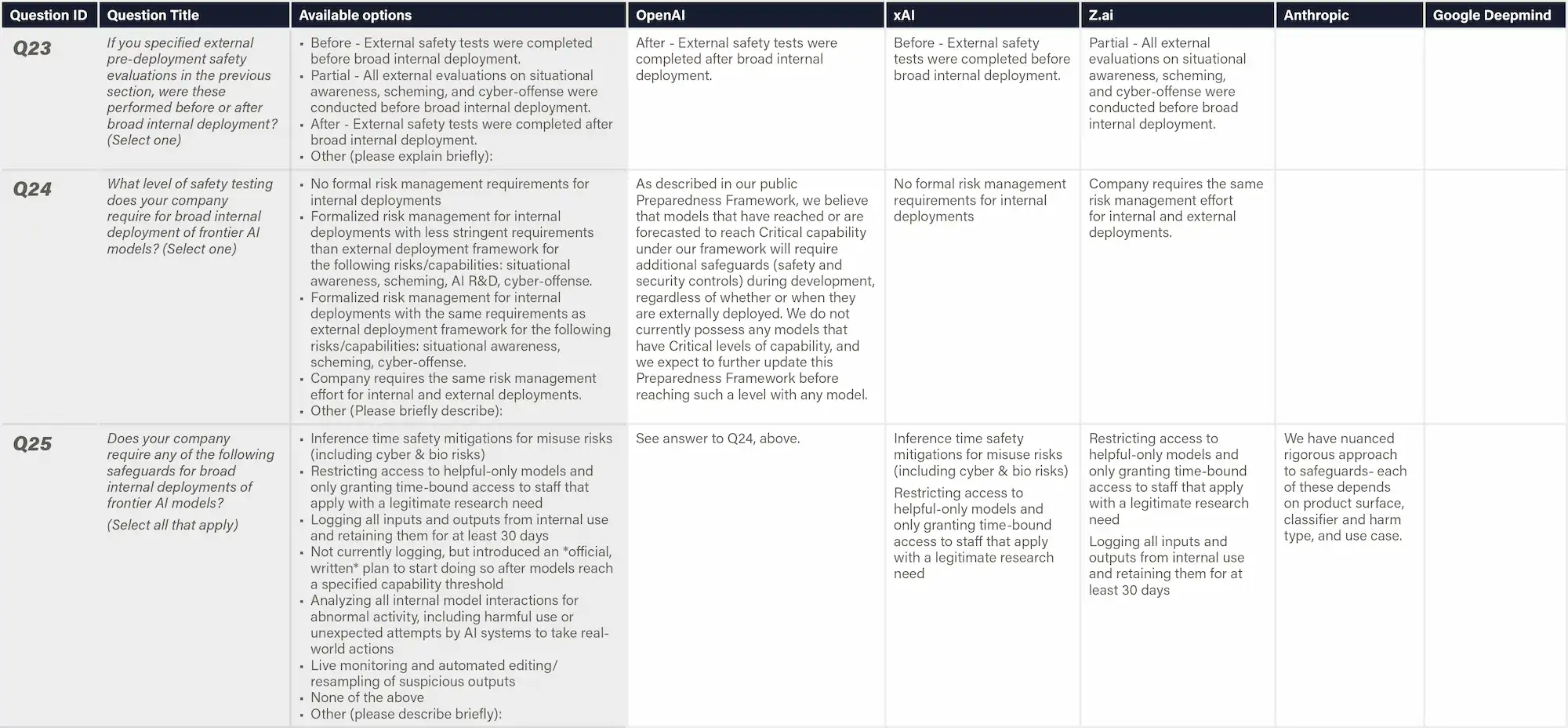

DECEMBER 2025

AI Safety Index

Winter 2025 Edition

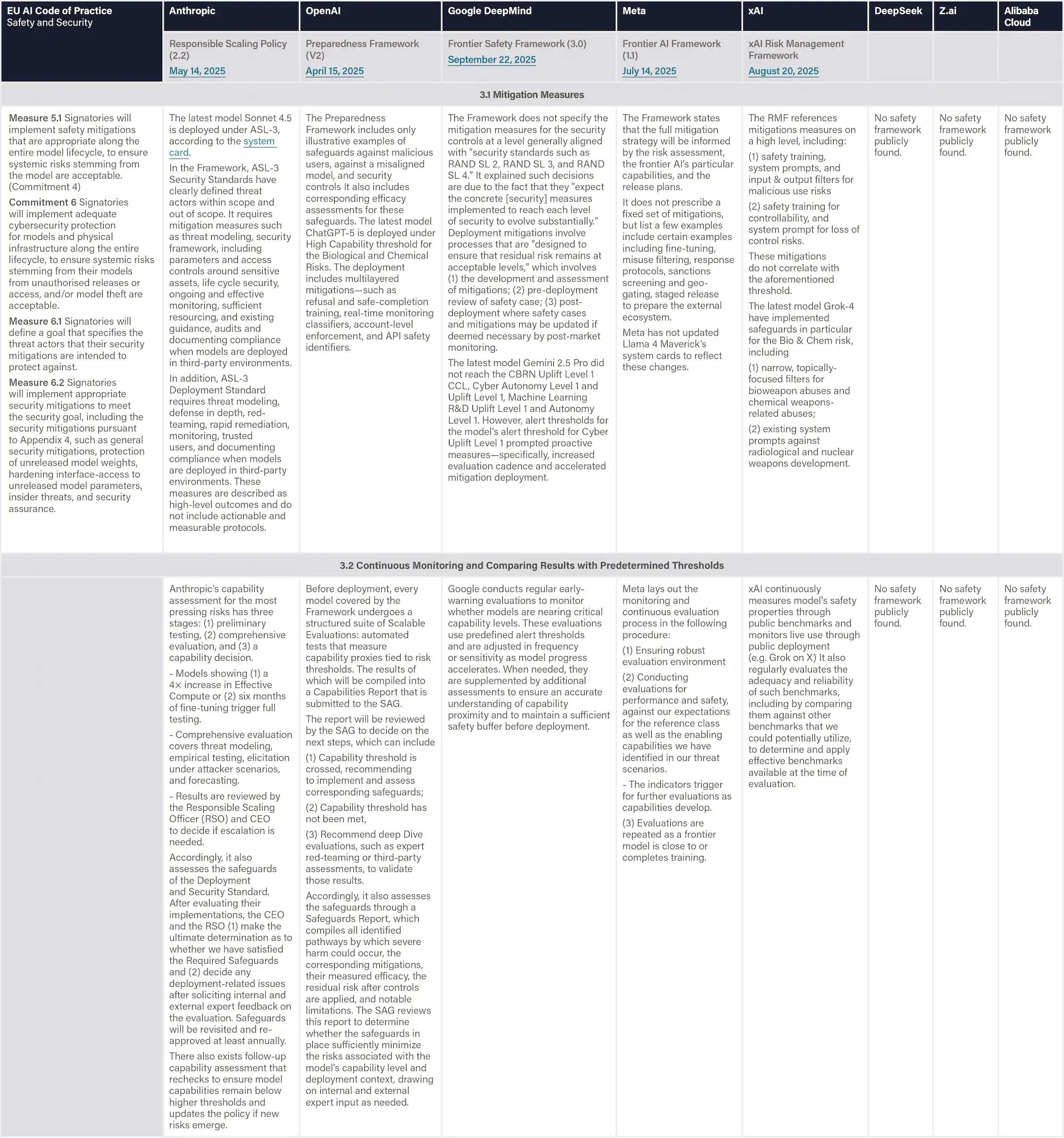

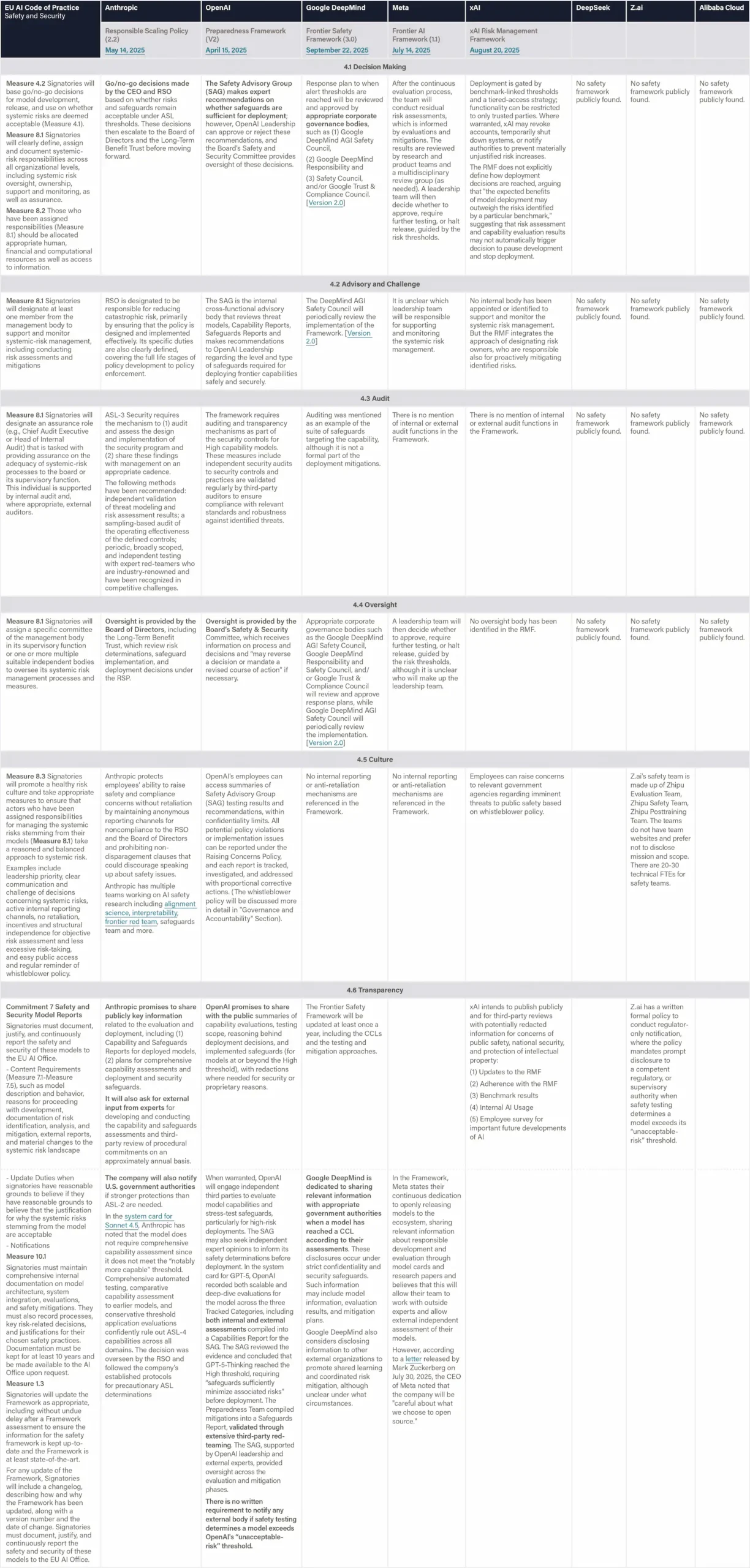

AI experts rate leading AI companies on key safety and security domains.

Scorecard

| Company | Overall grade | Score |
|---|---|---|
| Anthropic | C+ | 2.67 |
| OpenAI | C+ | 2.31 |
| Google DeepMind | C | 2.08 |
| xAI | D | 1.17 |
| Z.ai | D | 1.12 |
| Meta | D | 1.10 |
| DeepSeek | D | 1.02 |
| Alibaba Cloud | D- | 0.98 |

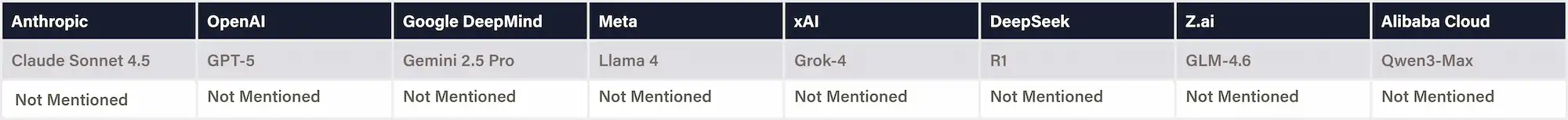

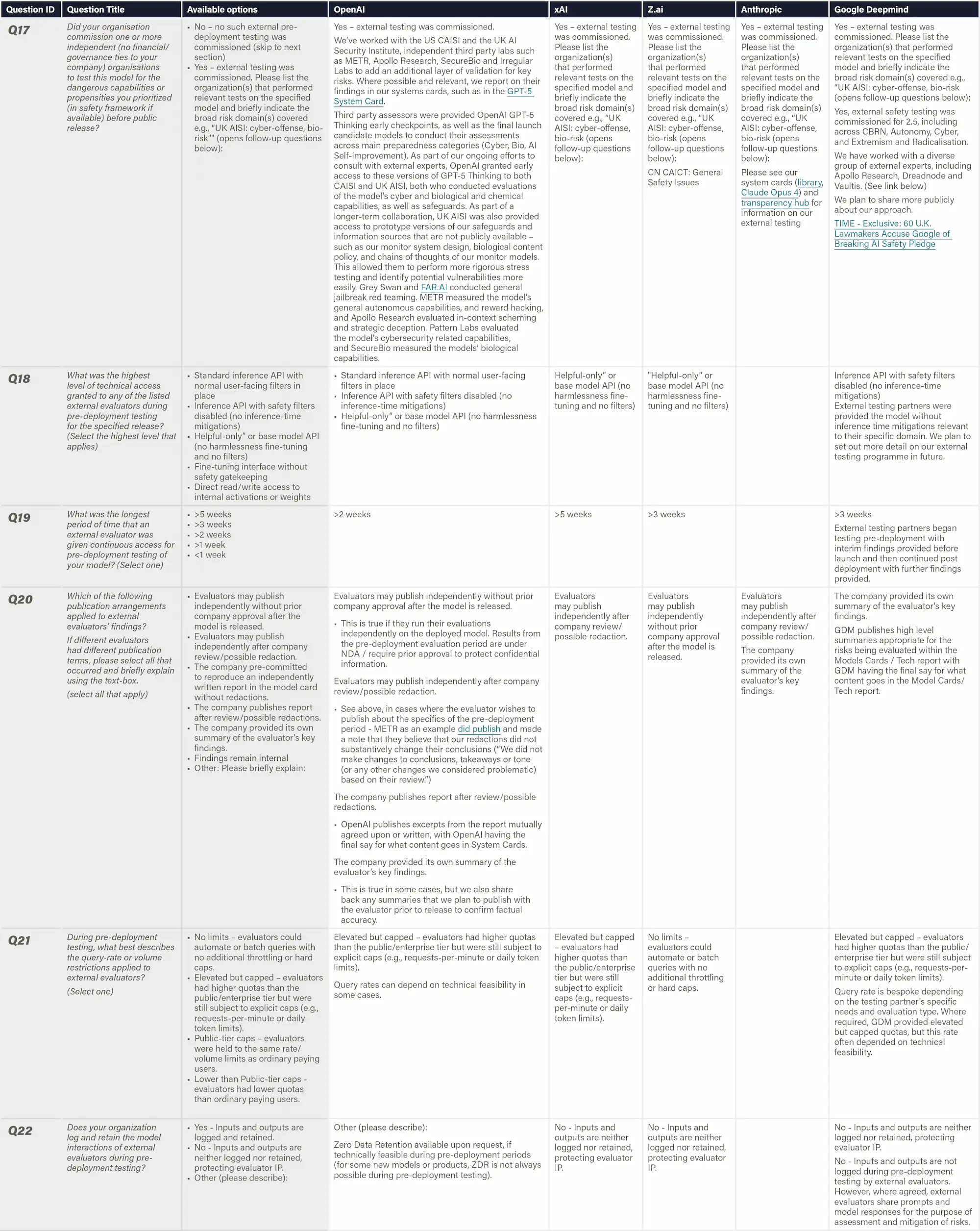

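Domains

| Company | Risk Assessment | Current Harms | Safety Frameworks | Existential Safety | Governance & Accountability | Information Sharing |
|---|---|---|---|---|---|---|
| Anthropic | B | C+ | C+ | D | B- | A- |
| OpenAI | B | C- | C+ | D | C+ | B |
| Google DeepMind | C+ | C | C+ | D | C- | C |
| xAI | D | F | D+ | F | D | C |
| Z.ai | D+ | D | D- | F | D | C- |
| Meta | D | D+ | D+ | F | D | D- |
| DeepSeek | D | D+ | F | F | D | C- |
| Alibaba Cloud | D | D+ | F | F | D+ | D+ |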

Grading: We use the US GPA system for grade boundaries: the letter grades A, B, C, D, and F correspond to numerical values of 4.0, 3.0, 2.0, 1.0, and 0, respectively.
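The mapping from numeric scores to letter grades can be illustrated with a short Python sketch. The plus/minus values (A- = 3.7, B+ = 3.3, and so on) follow the common US GPA convention, and the rule that a score receives the highest grade whose value it meets or exceeds is likewise an assumption rather than something stated here; both are, however, consistent with the overall grades in the scorecard (2.67 maps to C+, 0.98 to D-). The name `score_to_grade` is hypothetical.

```python
# Hypothetical helper: maps a numeric score on the 0-4 GPA scale to a letter grade.
# Assumption: standard US GPA plus/minus values, with each score receiving
# the highest grade whose value it meets or exceeds.
GRADE_FLOORS = [
    ("A", 4.0), ("A-", 3.7),
    ("B+", 3.3), ("B", 3.0), ("B-", 2.7),
    ("C+", 2.3), ("C", 2.0), ("C-", 1.7),
    ("D+", 1.3), ("D", 1.0), ("D-", 0.7),
    ("F", 0.0),
]


def score_to_grade(score: float) -> str:
    """Return the letter grade for a numeric score between 0 and 4."""
    if not 0.0 <= score <= 4.0:
        raise ValueError(f"score must be between 0 and 4, got {score}")
    for letter, floor in GRADE_FLOORS:
        if score >= floor:
            return letter
    return "F"  # unreachable after the range check, kept so every path returns


if __name__ == "__main__":
    # Overall scores taken from the scorecard.
    for company, score in [("Anthropic", 2.67), ("Google DeepMind", 2.08), ("Alibaba Cloud", 0.98)]:
        print(company, score, score_to_grade(score))
```

Under these assumptions, every overall score in the scorecard reproduces its published letter grade; a different boundary rule (for example, rounding to the nearest grade value) would map 2.67 to B- instead.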

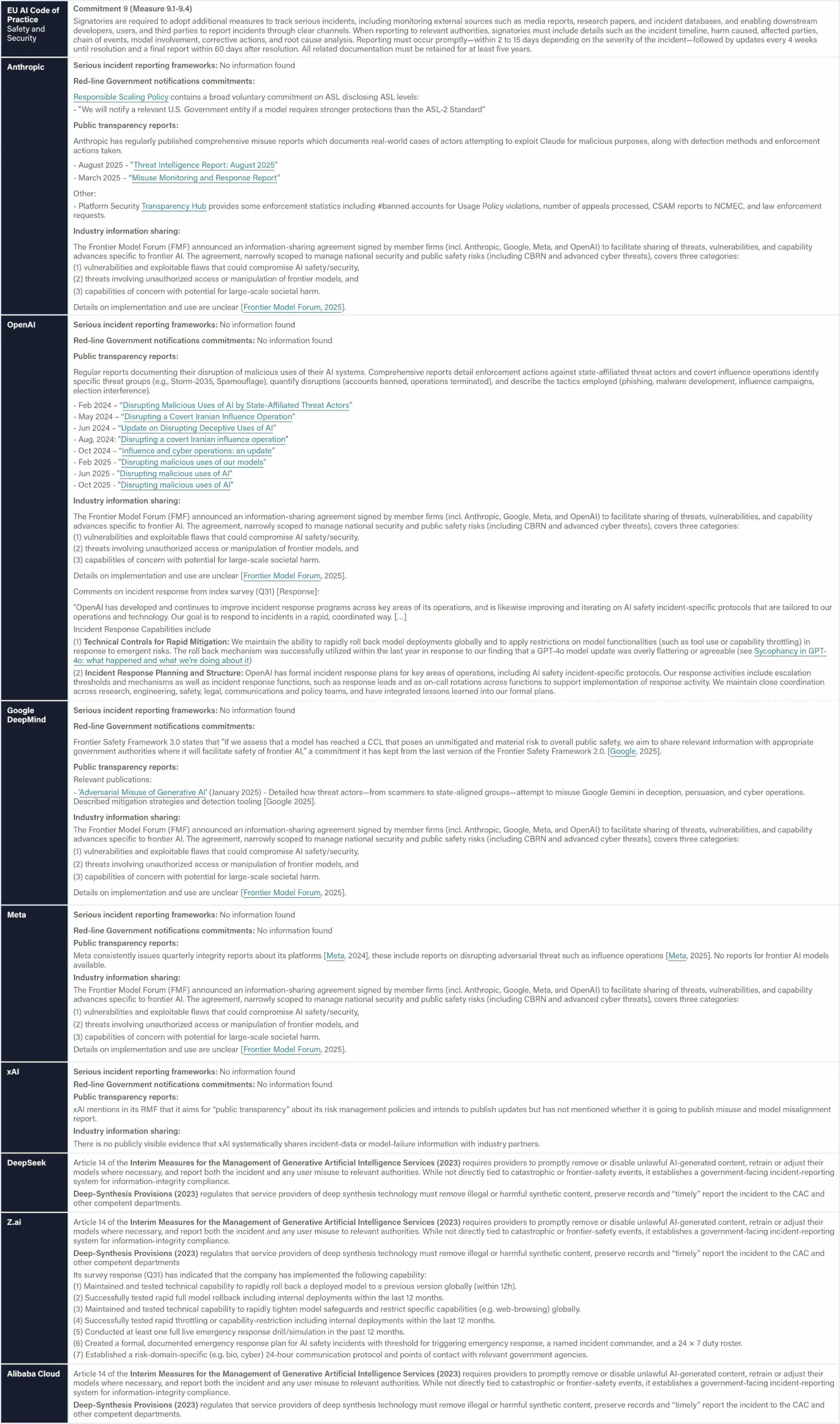

Index Content

Two-page Summary

A quick, printable summary of the report scorecard, key findings, and methodology.

Watch: Max Tegmark on the Winter 2025 Results

FLI’s President Max Tegmark joins AI Safety Investigator Sabina Nong to discuss the importance of driving a ‘race to the top’ on safety amongst AI companies.

Video • 01:48

Key findings

Top takeaways from the index findings:

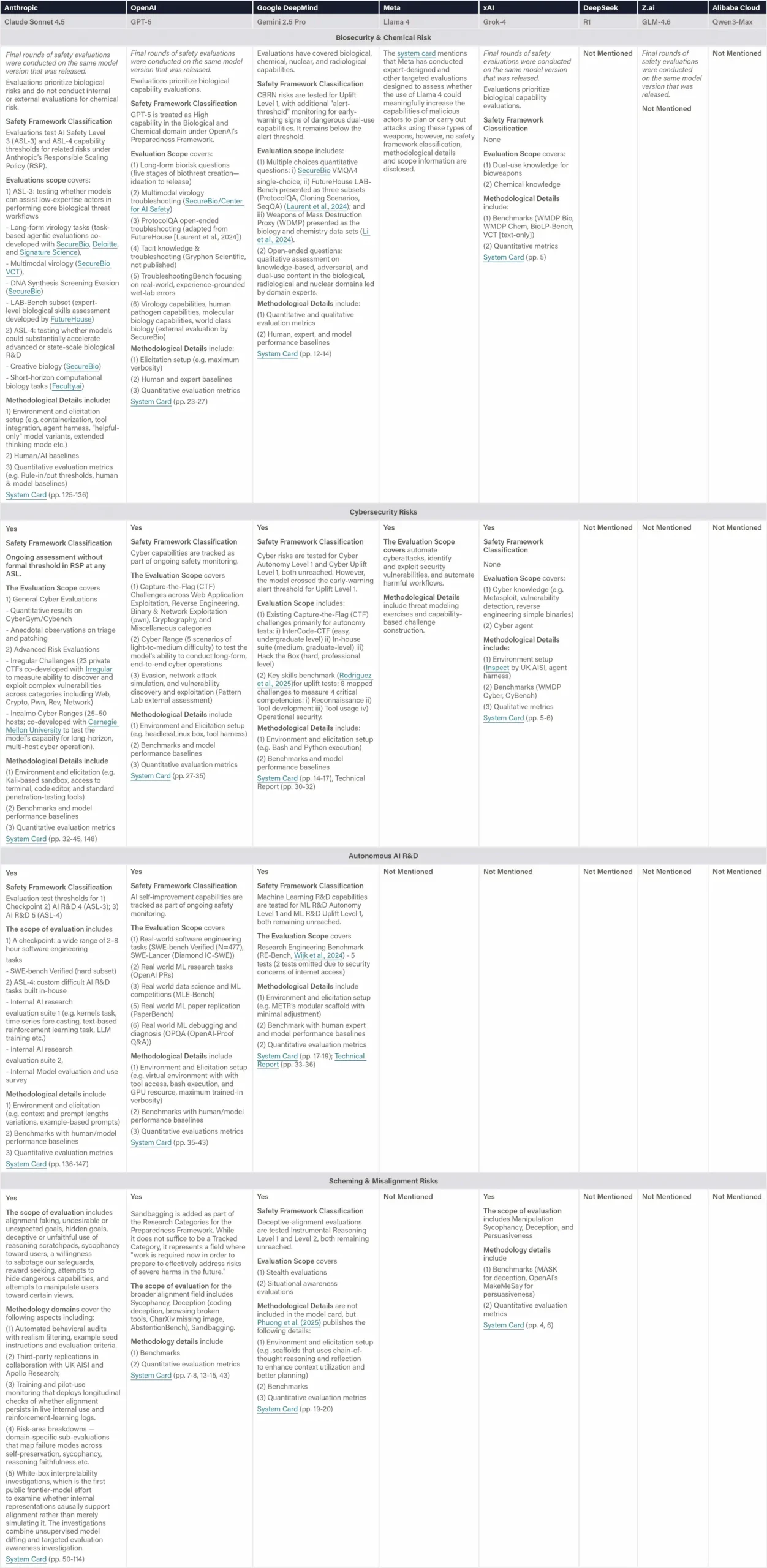

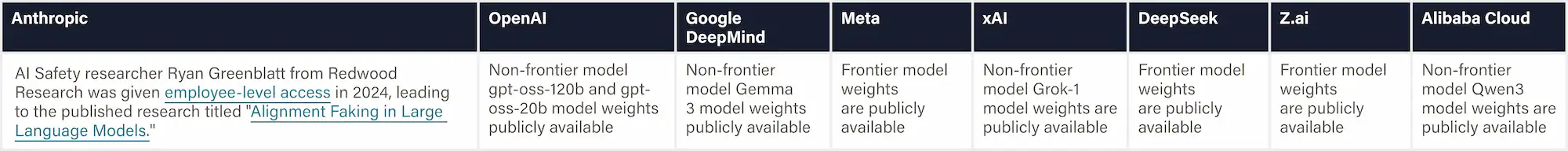

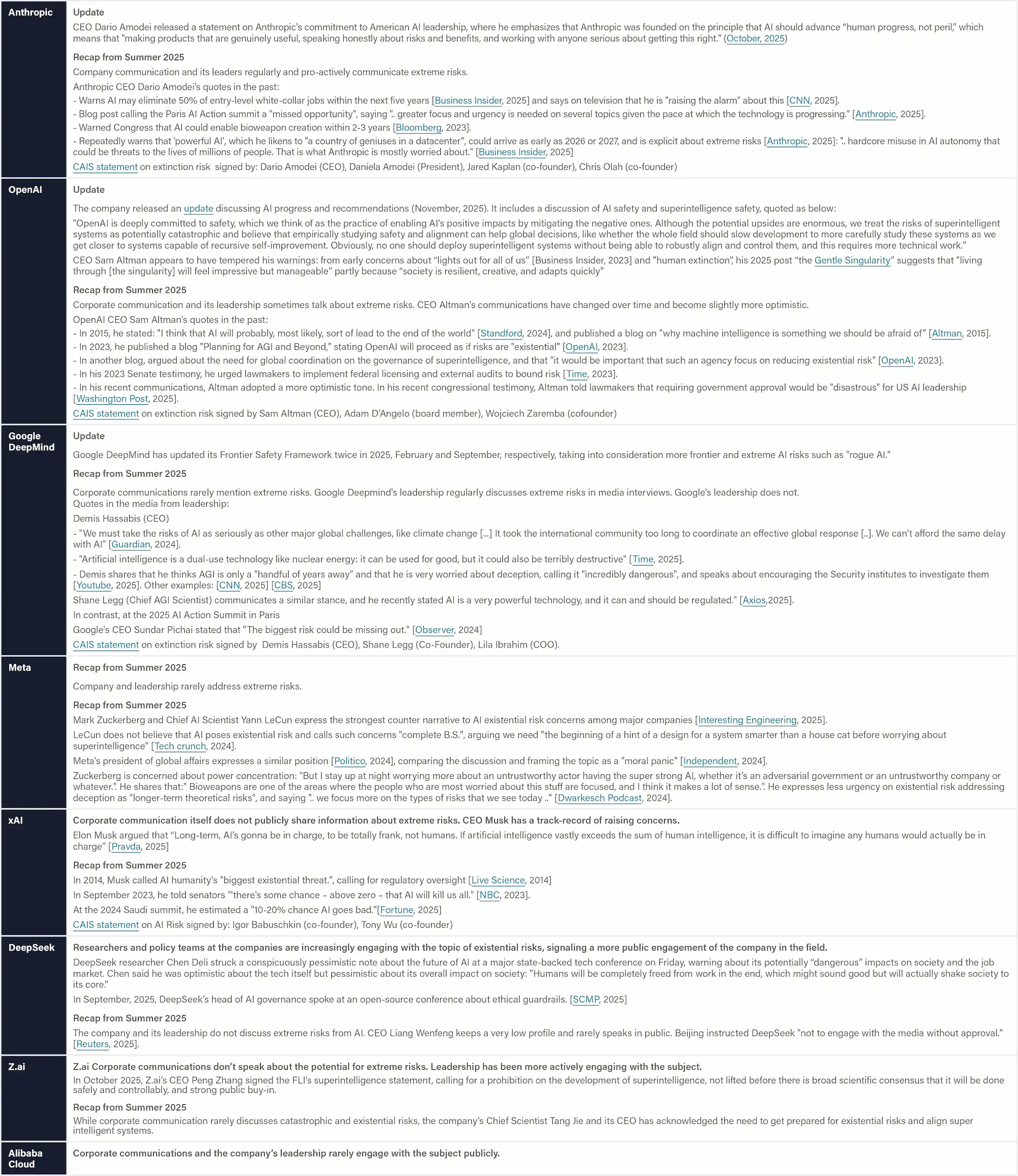

A clear divide persists between top performers and the rest

A clear divide persists between the top performers (Anthropic, OpenAI, and Google DeepMind) and the rest of the companies reviewed (Z.ai, xAI, Meta, Alibaba Cloud, and DeepSeek). The largest gaps are in the risk assessment, safety frameworks, and information sharing domains, and they stem from limited disclosure, weak evidence of systematic safety processes, and uneven adoption of robust evaluation practices.

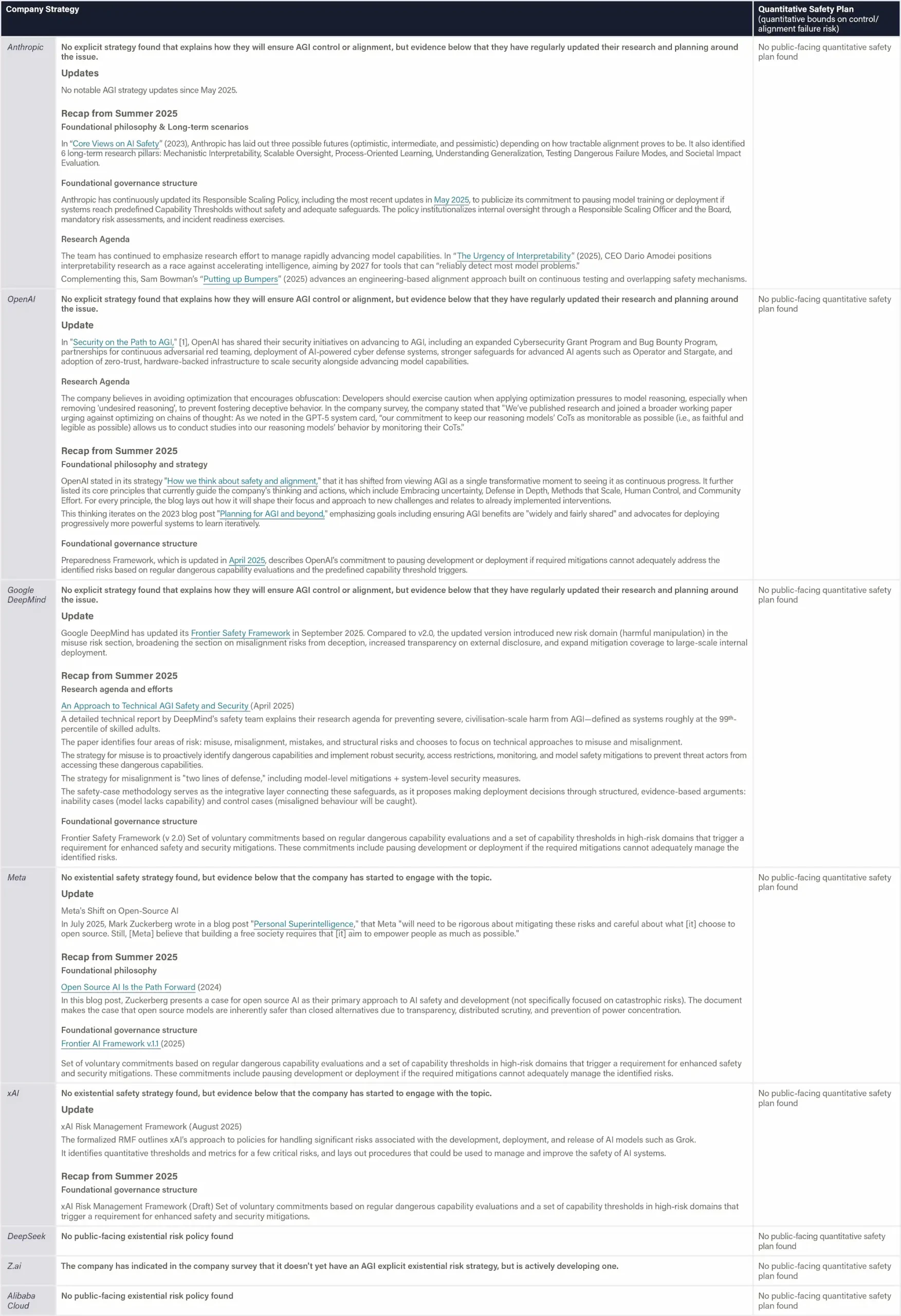

Existential safety remains the industry’s core structural weakness

All of the companies reviewed are racing toward AGI/superintelligence without presenting any explicit plans for controlling or aligning such smarter-than-human technology, thus leaving the most consequential risks effectively unaddressed.

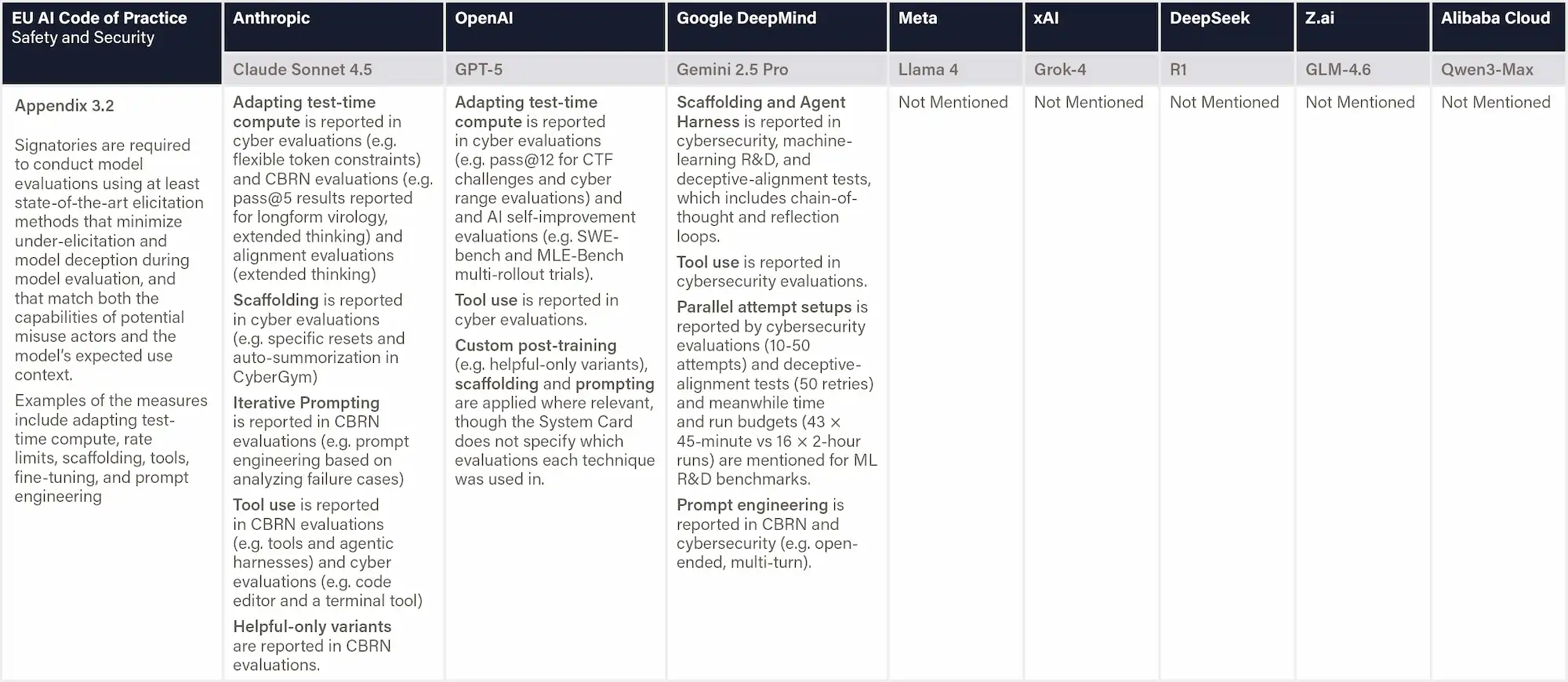

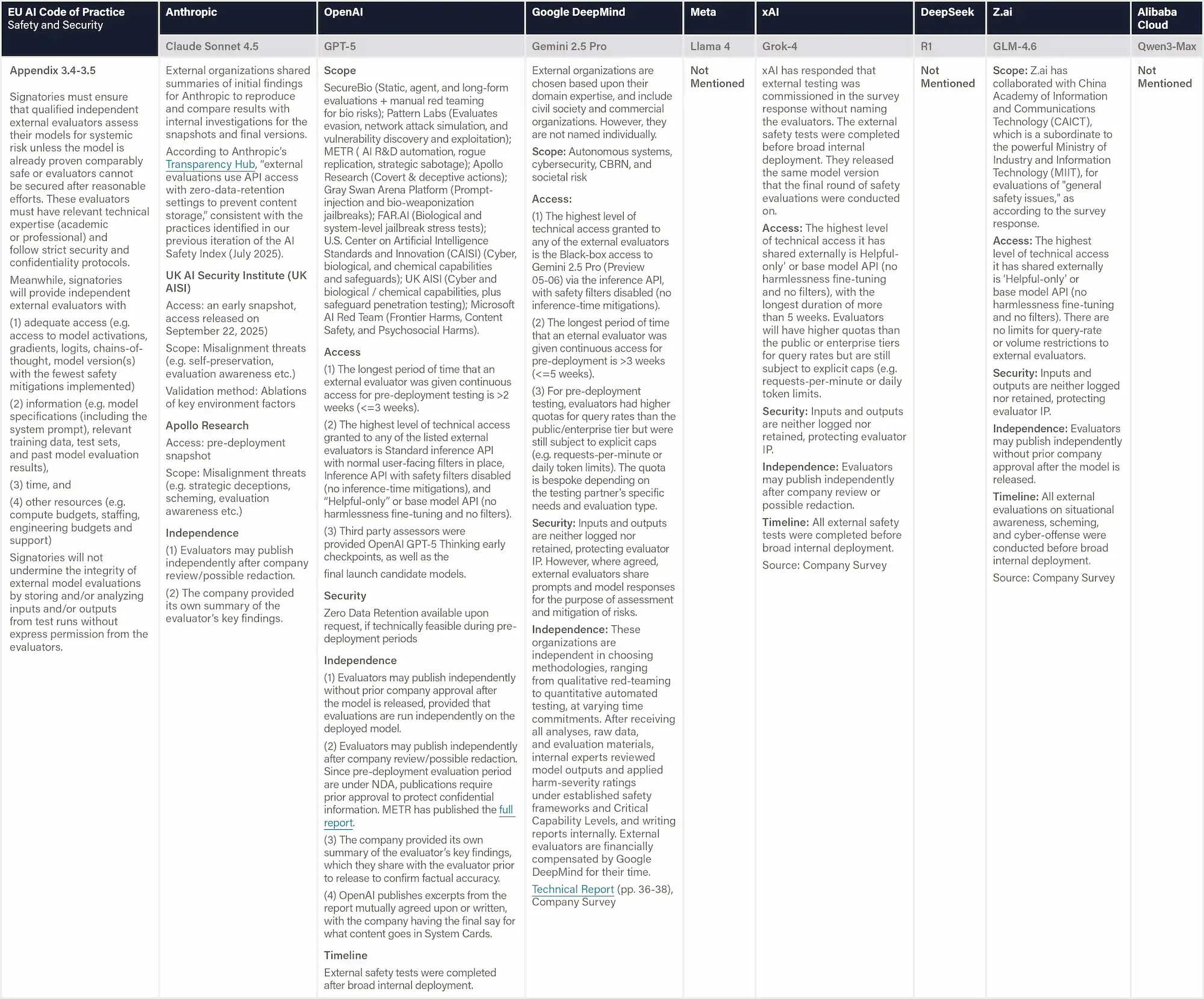

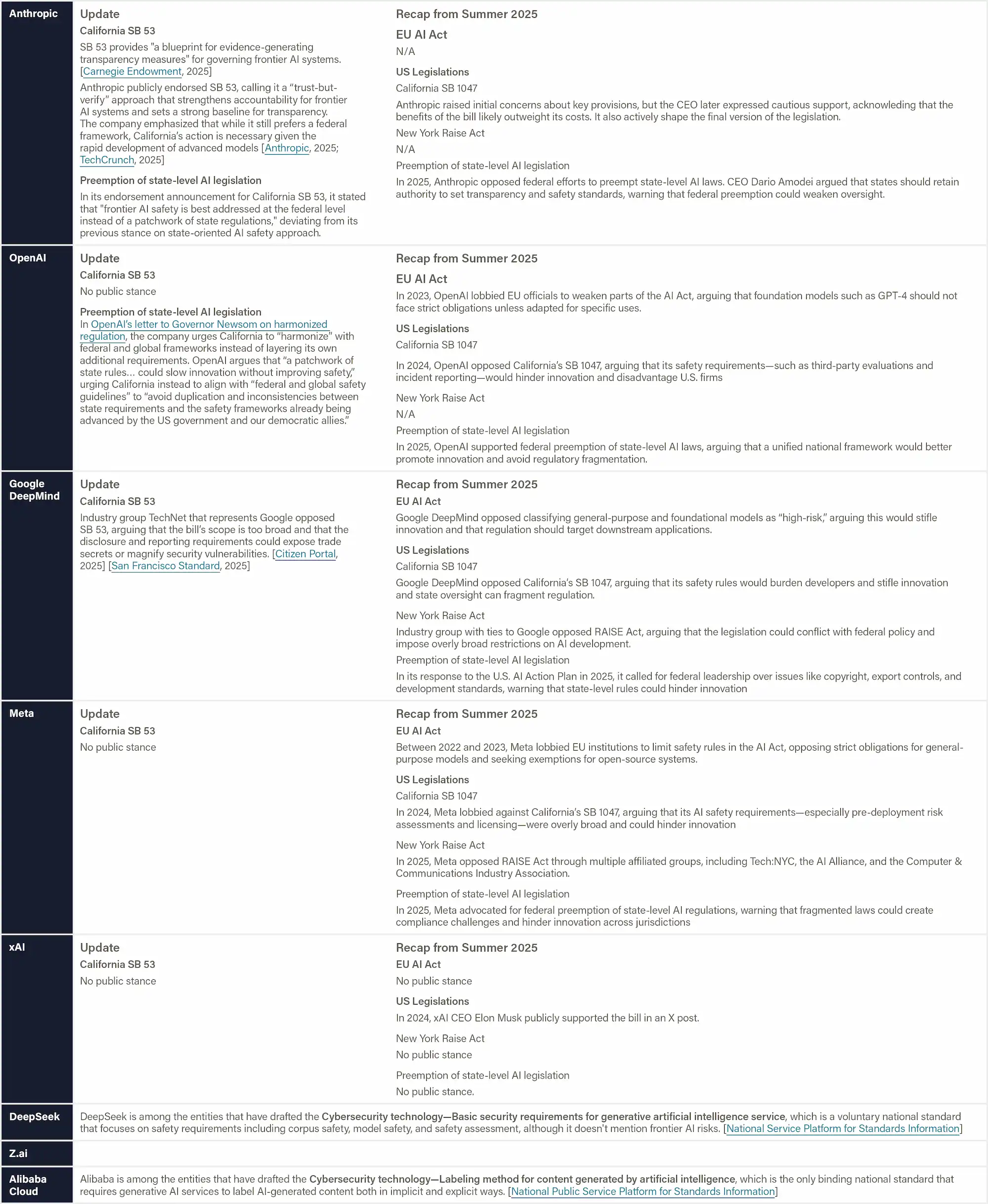

Despite public commitments, companies’ safety practices continue to fall short of emerging global standards

While many companies partially align with these emerging standards, the depth, specificity, and quality of implementation remain uneven, resulting in safety practices that do not yet meet the rigor, measurability, or transparency envisioned by frameworks such as the EU AI Code of Practice.

Note: the evidence was collected up until November 8, 2025 and does not reflect recent events such as the releases of Google DeepMind’s Gemini 3 Pro, xAI's Grok 4.1, OpenAI’s GPT-5.1, or Anthropic's Claude Opus 4.5.

“AI CEOs claim they know how to build superhuman AI, yet none can show how they'll prevent us from losing control – after which humanity's survival is no longer in our hands. I'm looking for proof that they can reduce the annual risk of control loss to one in a hundred million, in line with nuclear reactor requirements. Instead, they admit the risk could be one in ten, one in five, even one in three, and they can neither justify nor improve those numbers.”

Prof. Stuart Russell, Professor of Computer Science at UC Berkeley

Independent review panel

The scoring was conducted by a panel of distinguished AI experts:

David Krueger

David Krueger is an Assistant Professor in Robust, Reasoning and Responsible AI in the Department of Computer Science and Operations Research (DIRO) at the University of Montreal, a Core Academic Member at Mila, and an affiliated researcher at UC Berkeley’s Center for Human-Compatible AI and at the Center for the Study of Existential Risk. His work focuses on reducing the risk of human extinction from AI.

Dylan Hadfield-Menell

Dylan Hadfield-Menell is an Assistant Professor at MIT, where he leads the Algorithmic Alignment Group at the Computer Science and Artificial Intelligence Laboratory (CSAIL). A Schmidt Sciences AI2050 Early Career Fellow, his research focuses on safe and trustworthy AI deployment, with particular emphasis on multi-agent systems, human-AI teams, and societal oversight of machine learning.

Jessica Newman

Jessica Newman is the Founding Director of the AI Security Initiative, housed at the Center for Long-Term Cybersecurity at the University of California, Berkeley. She serves as an expert in the OECD Expert Group on AI Risk and Accountability and contributes to working groups within the U.S. Center for AI Standards and Innovation, EU Code of Practice Plenaries, and other AI standards and governance bodies.

Sneha Revanur

Sneha Revanur is the founder and president of Encode, a global youth-led organization advocating for the ethical regulation of AI. Under her leadership, Encode has mobilized thousands of young people to address challenges like algorithmic bias and AI accountability. She was featured on TIME’s inaugural list of the 100 most influential people in AI.

Sharon Li

Sharon Li is an Associate Professor in the Department of Computer Sciences at the University of Wisconsin-Madison. Her research focuses on the algorithmic and theoretical foundations of safe and reliable AI, addressing challenges in both model development and deployment in the open world. She serves as the Program Chair for ICML 2026. Her awards include a Sloan Fellowship (2025), an NSF CAREER Award (2023), the MIT Innovators Under 35 Award (2023), Forbes 30 Under 30 in Science (2020), and “Innovator of the Year 2023” (MIT Technology Review). She received Outstanding Paper Awards at NeurIPS 2022 and ICLR 2022.

Stuart Russell

Stuart Russell is a Professor of Computer Science at the University of California at Berkeley and Director of the Center for Human-Compatible AI and the Kavli Center for Ethics, Science, and the Public. He is a member of the National Academy of Engineering and a Fellow of the Royal Society. He is a recipient of the IJCAI Computers and Thought Award, the IJCAI Research Excellence Award, and the ACM Allen Newell Award. In 2021 he received the OBE from Her Majesty Queen Elizabeth and gave the BBC Reith Lectures. He coauthored the standard textbook for AI, which is used in over 1500 universities in 135 countries.

Tegan Maharaj

Tegan Maharaj is an Assistant Professor in the Department of Decision Sciences at HEC Montréal, where she leads the ERRATA lab on Ecological Risk and Responsible AI. She is also a core academic member at Mila. Her research focuses on advancing the science and techniques of responsible AI development. Previously, she served as an Assistant Professor of Machine Learning at the University of Toronto.

Yi Zeng

Yi Zeng is an AI Professor at the Chinese Academy of Sciences, the Founding Dean of the Beijing Institute of AI Safety and Governance, and the Director of the Beijing Key Laboratory of Safe AI and Superalignment. He serves on the UN High-level Advisory Body on AI, the UNESCO Ad Hoc Expert Group on AI Ethics, the WHO Expert Group on the Ethics/Governance of AI for Health, and the National Governance Committee of Next Generation AI in China. He has been named to the TIME100 AI list.

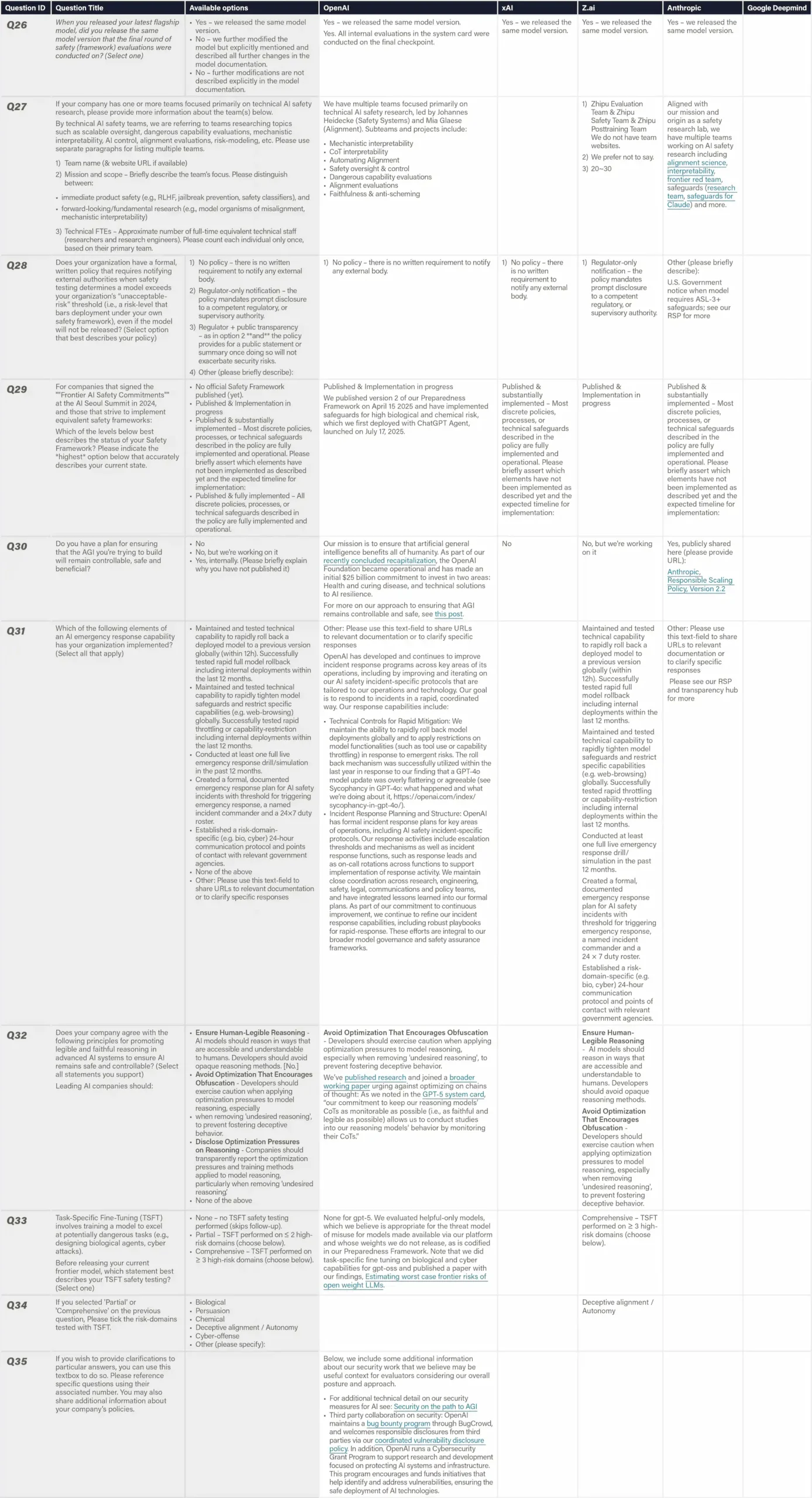

Indicators overview

The indicators within each domain:

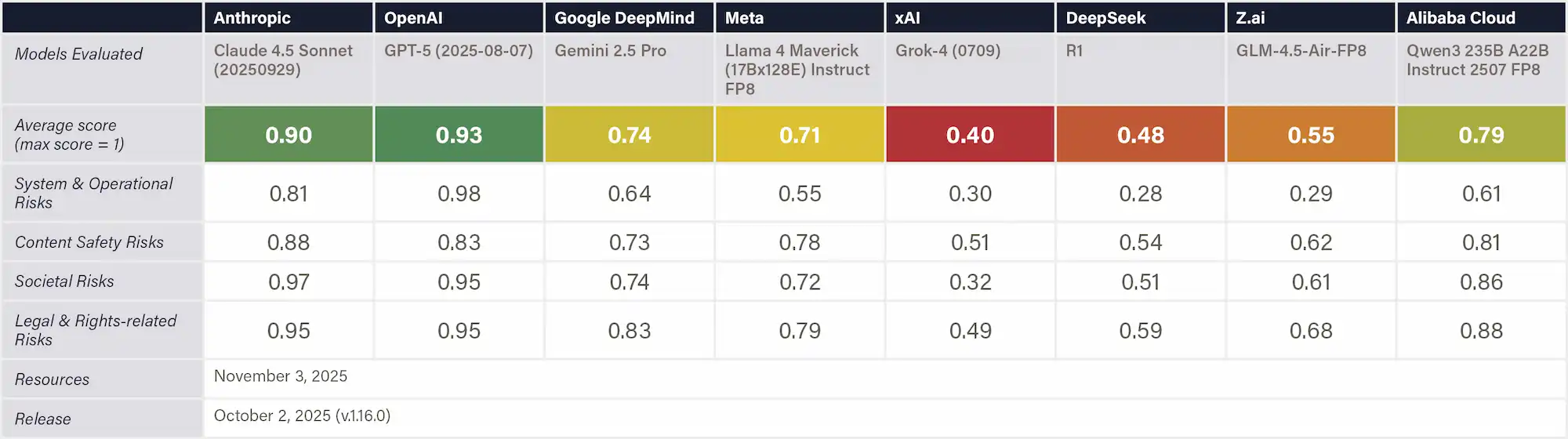

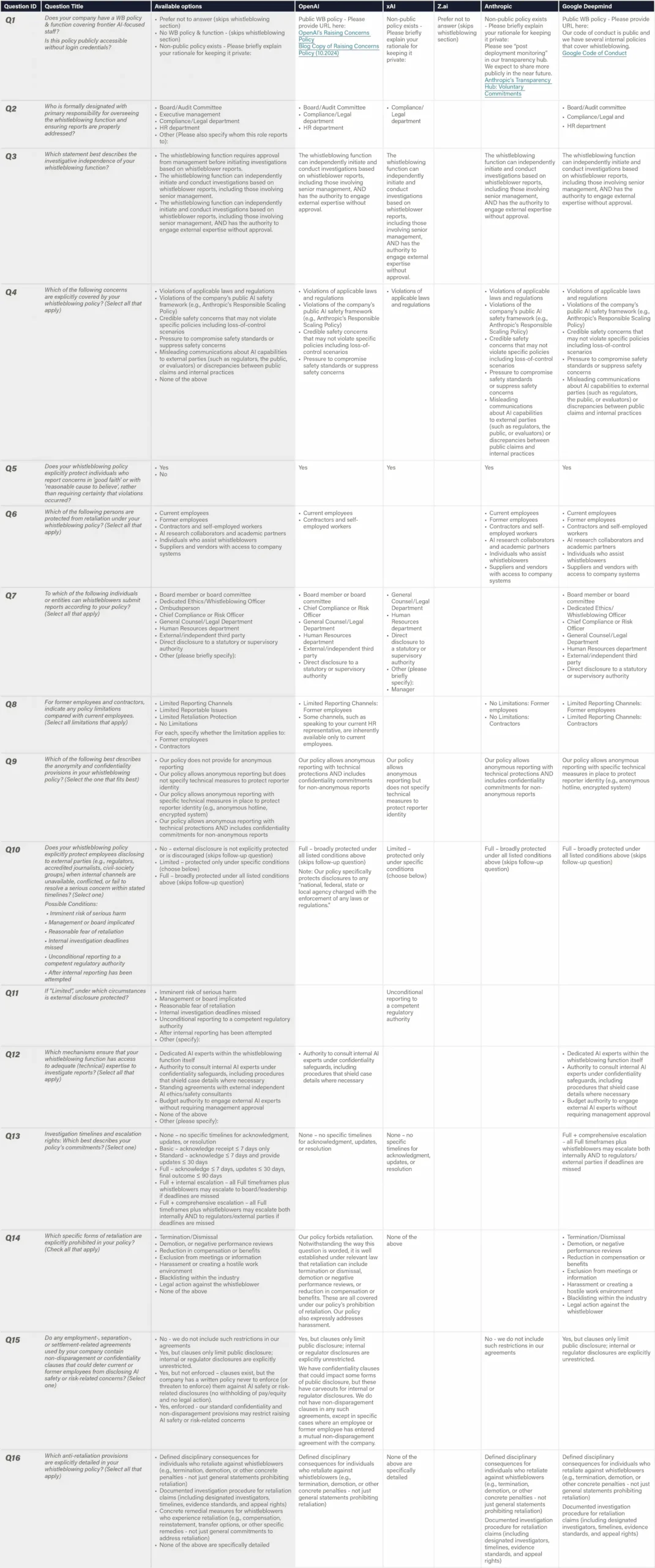

Safety Performance

Stanford's HELM Safety Benchmark

Stanford's HELM AIR Benchmark

TrustLLM Benchmark

Center for AI Safety Benchmarks

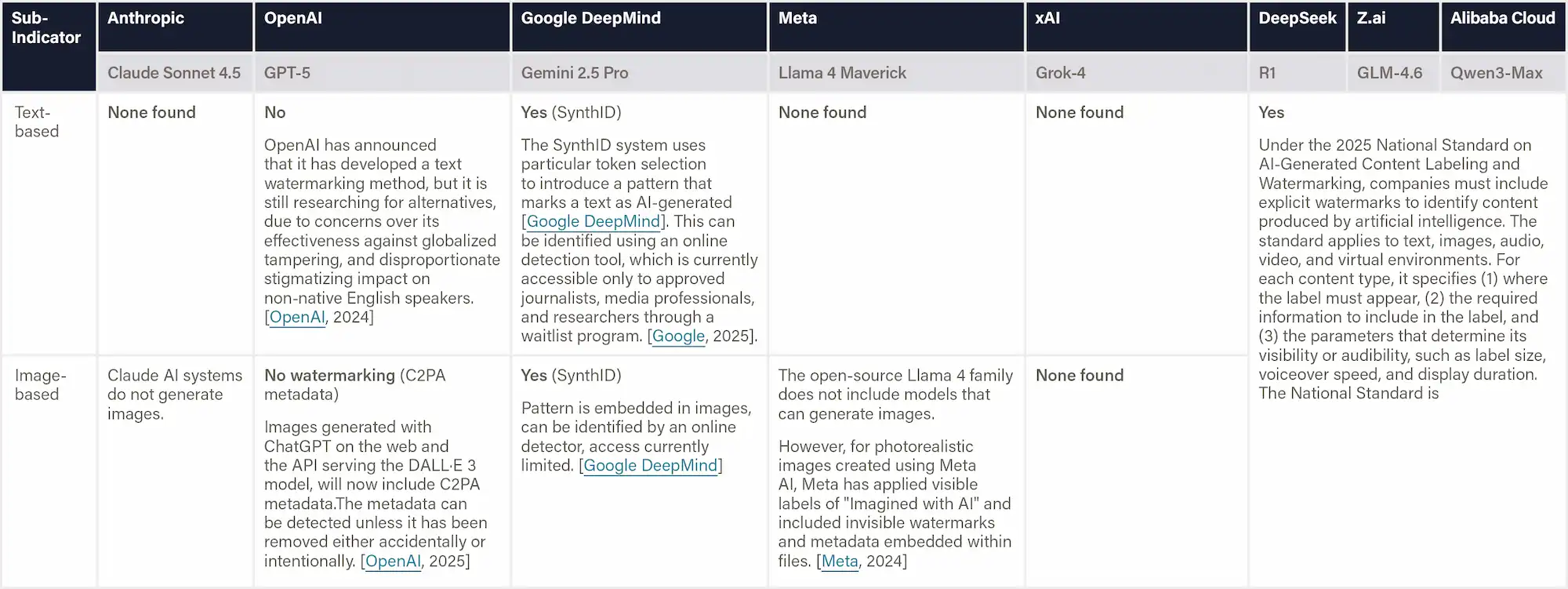

Digital Responsibility

Protecting Safeguards from Fine-tuning

Watermarking

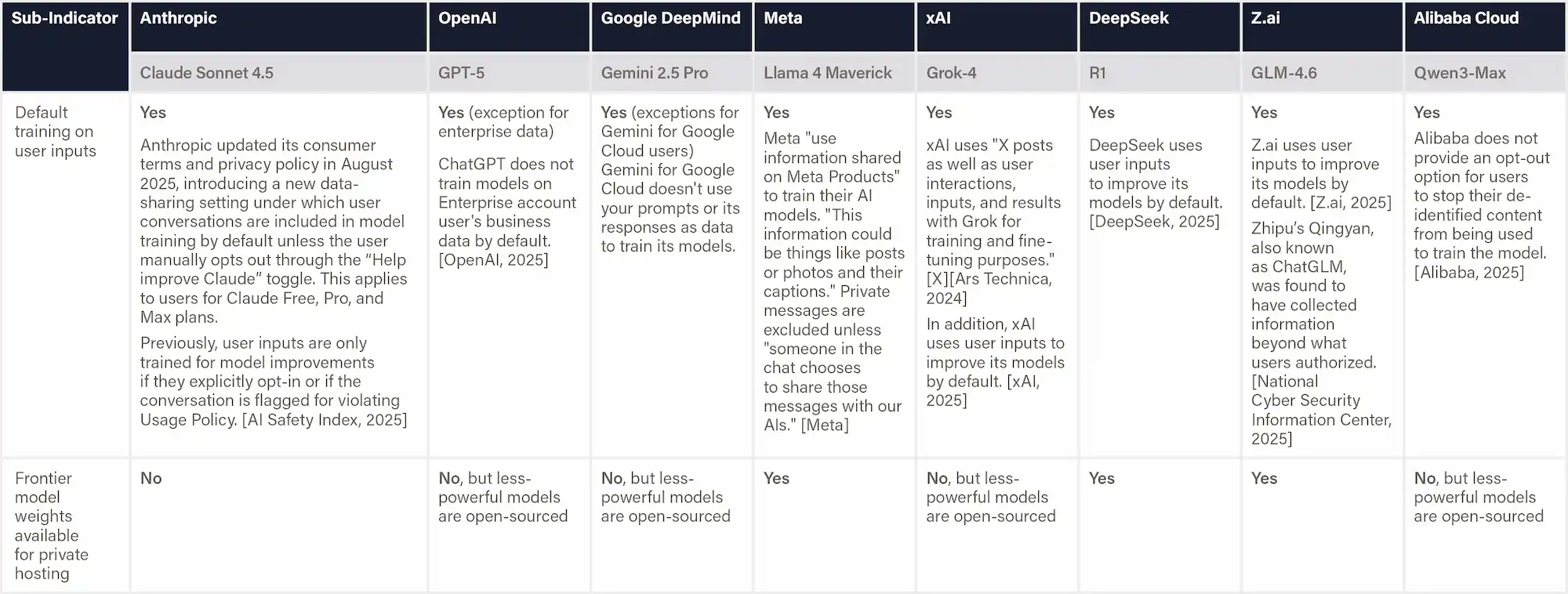

User Privacy

Internal

Dangerous Capability Evaluations

Elicitation for Dangerous Capability Evaluations

Human Uplift Trials

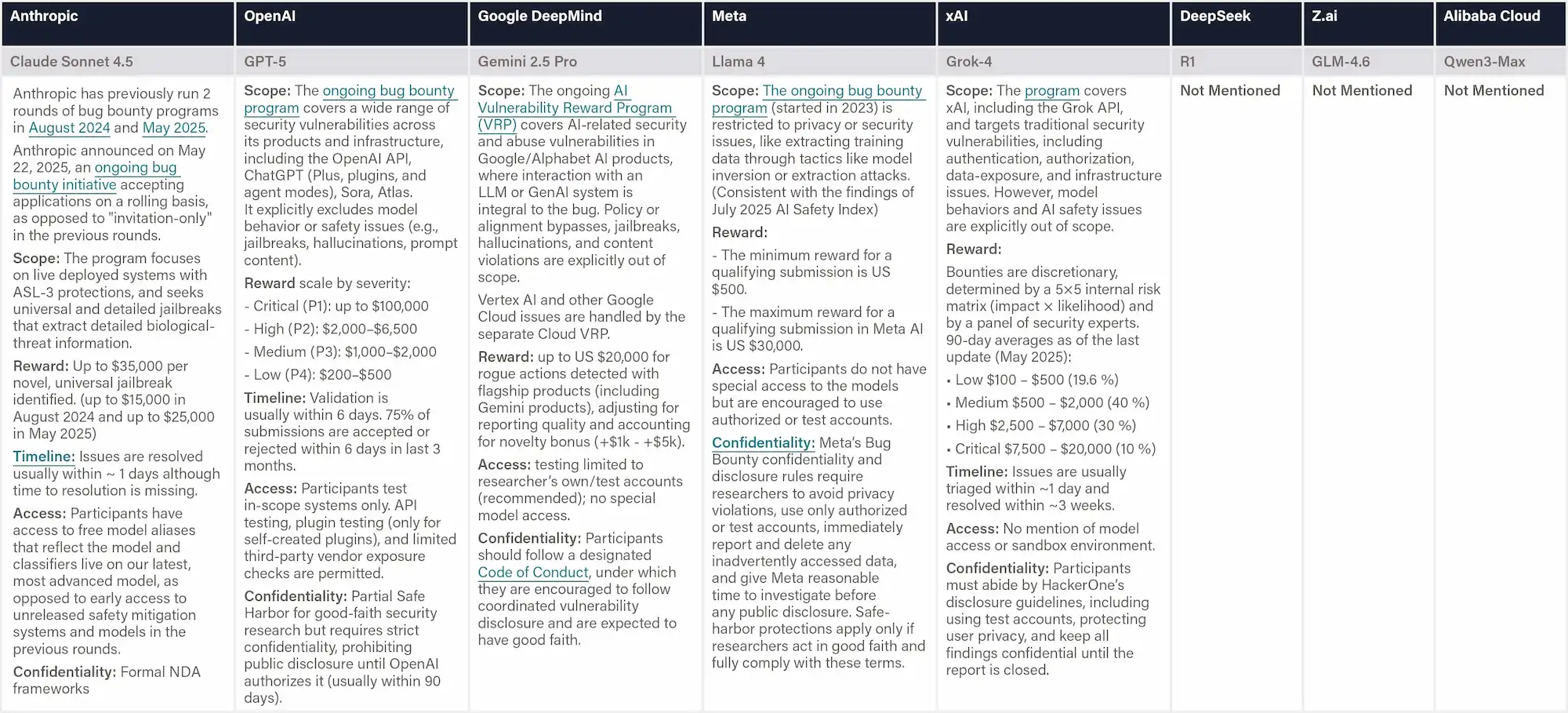

External

Independent Review of Safety Evaluations

Pre-deployment External Safety Testing

Bug Bounties for System Vulnerabilities

Technical Specifications

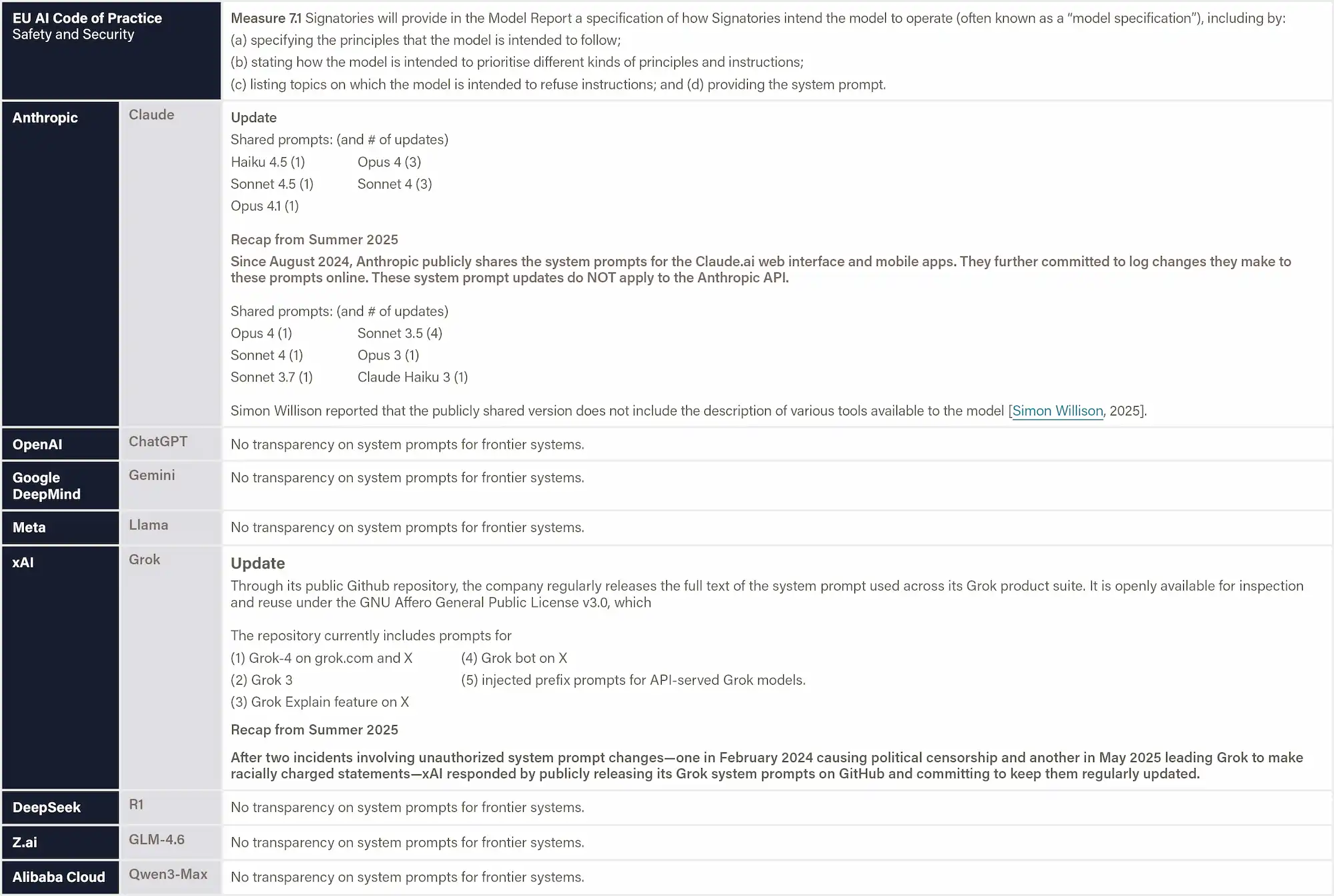

System Prompt Transparency

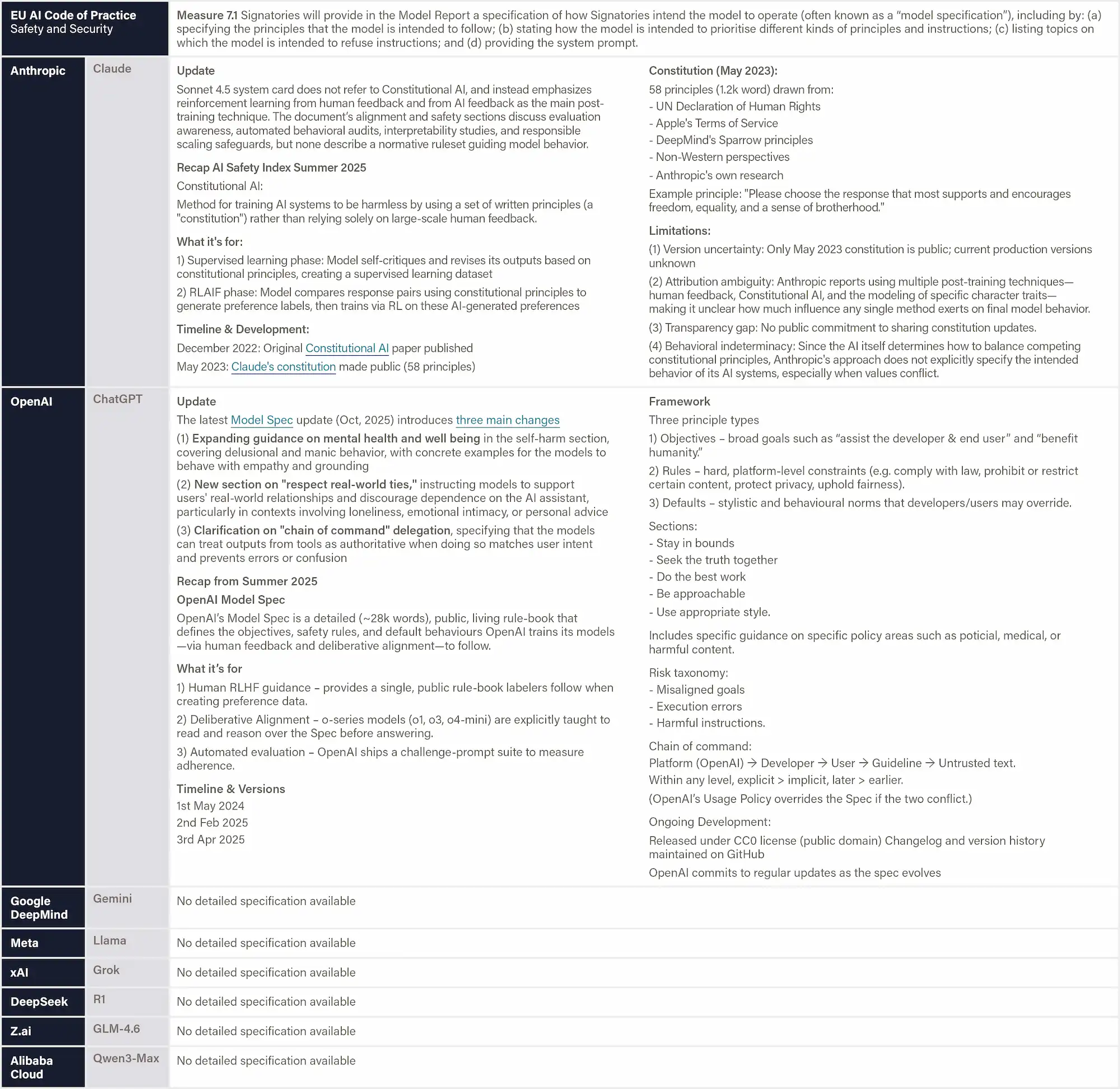

Behavior Specification Transparency

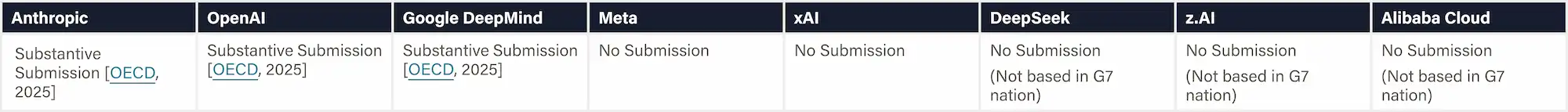

Voluntary Commitment

G7 Hiroshima AI Process Reporting

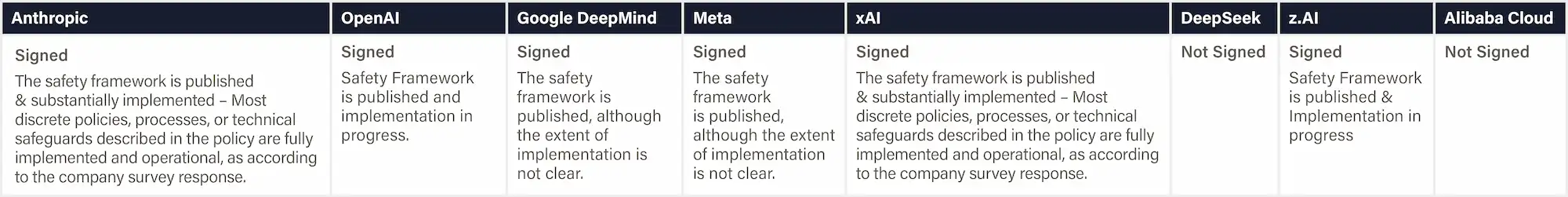

EU General‑Purpose AI Code of Practice

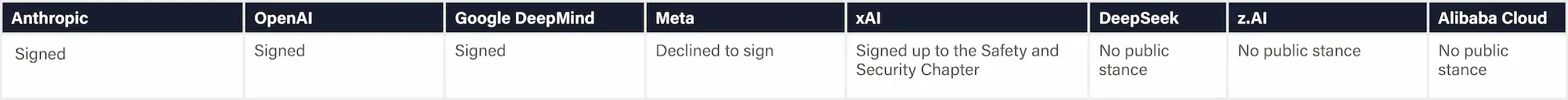

Frontier AI Safety Commitments (AI Seoul Summit, 2024)

FLI AI Safety Index Survey Engagement

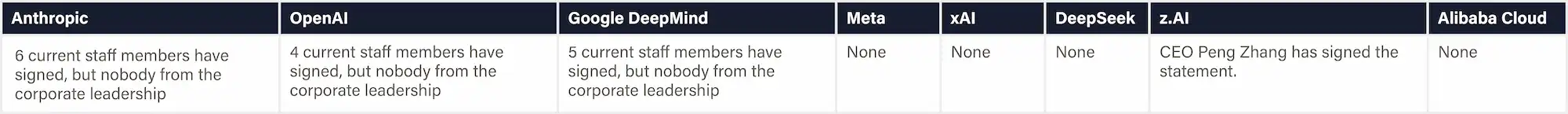

Endorsement of the Oct. 2025 Superintelligence Statement

Risks and Incidents

Serious Incident Reporting & Government Notifications

Extreme-Risk Transparency & Engagement

Policy Engagement on AI Safety Regulations

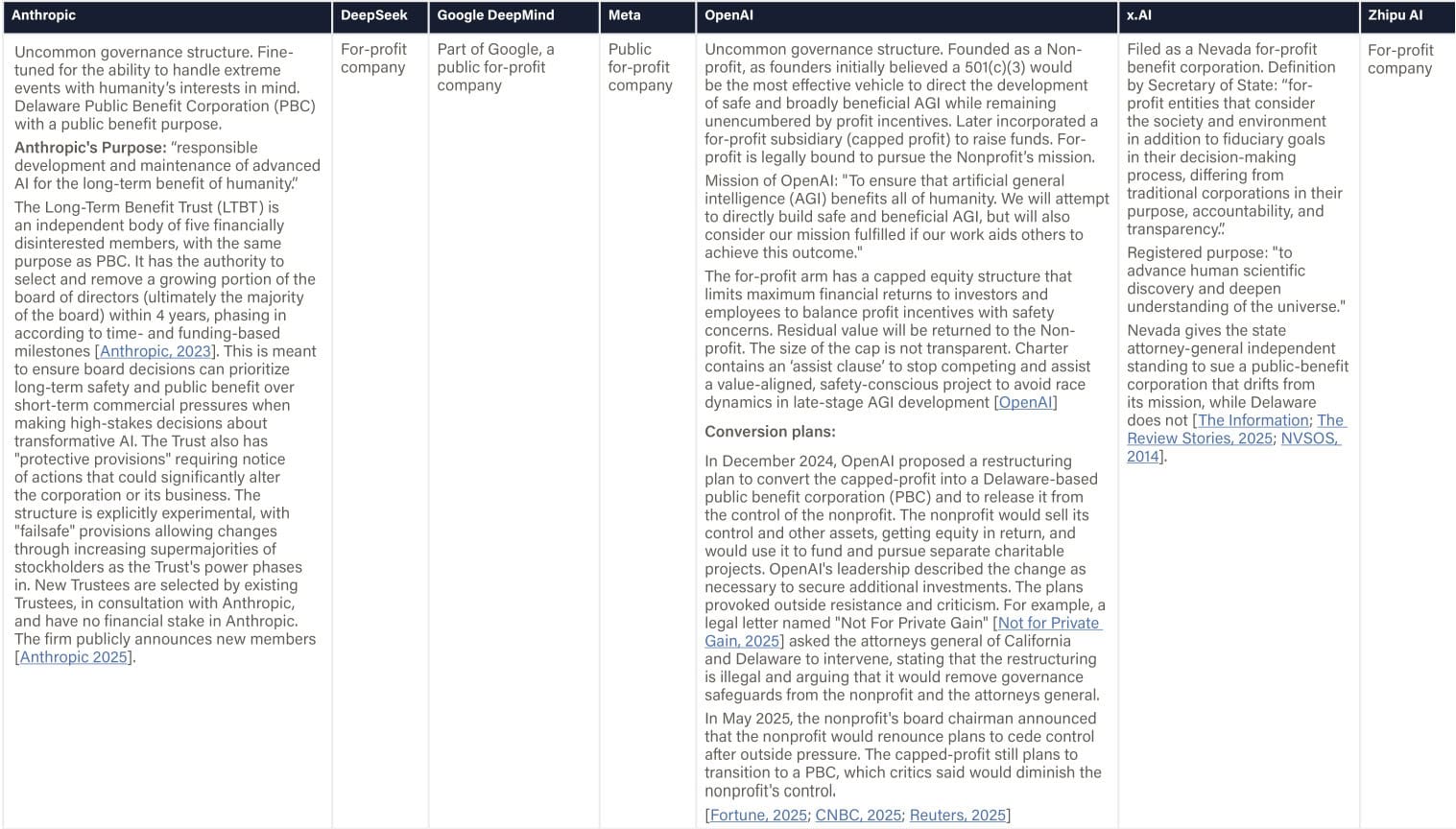

Company Structure & Mandate

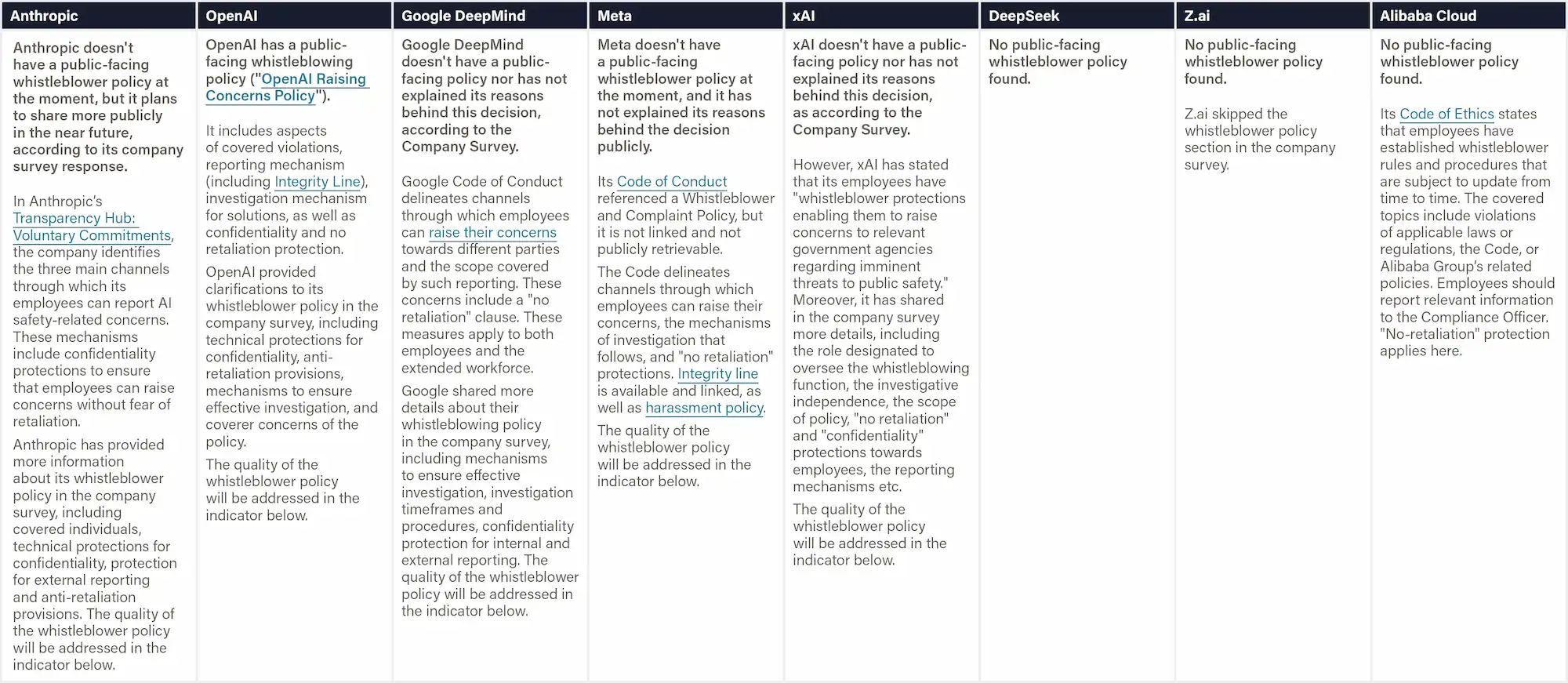

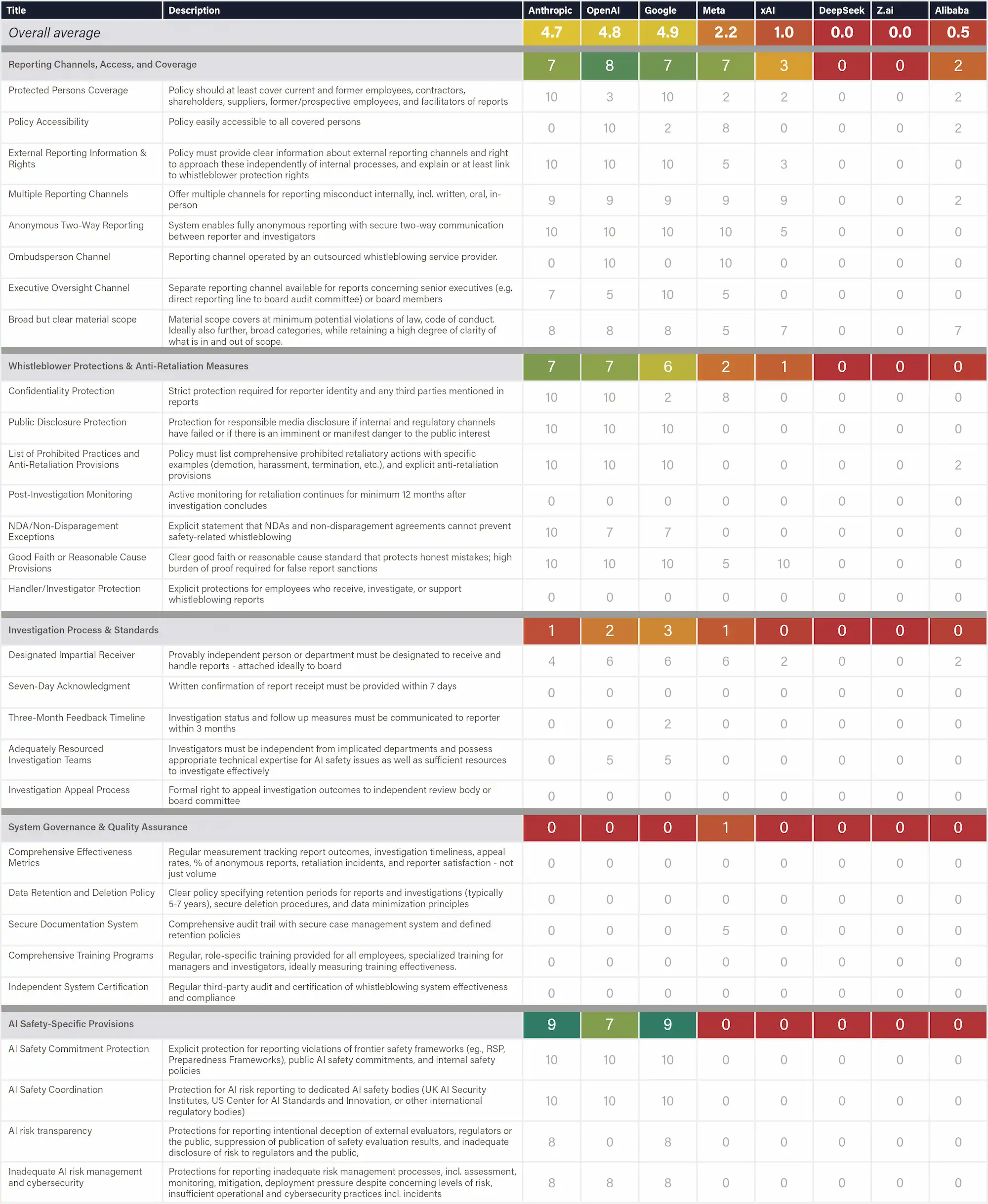

Whistleblowing Protection

Whistleblowing Policy Transparency

Whistleblowing Policy Quality Analysis

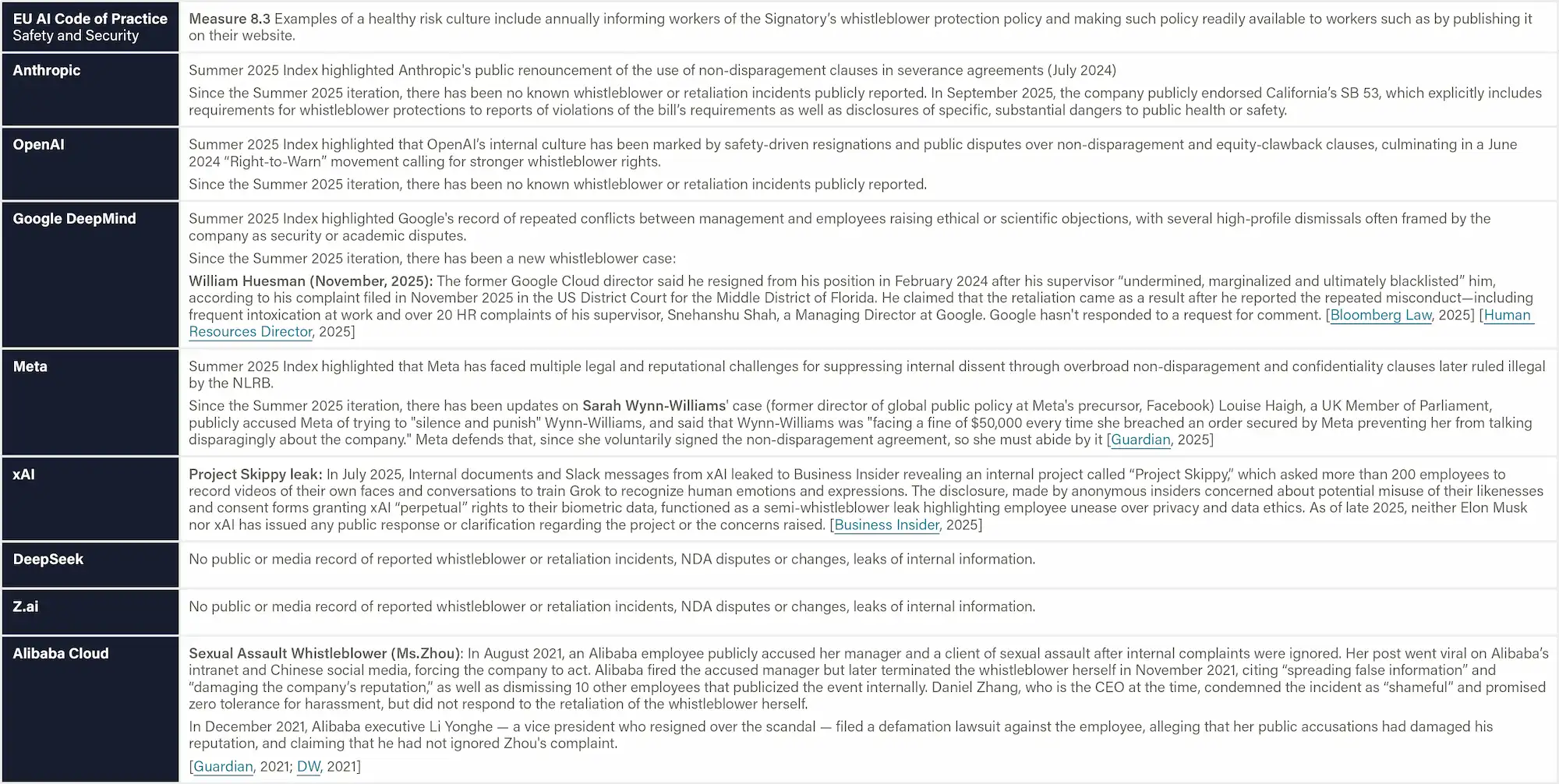

Reporting Culture & Whistleblowing Track Record

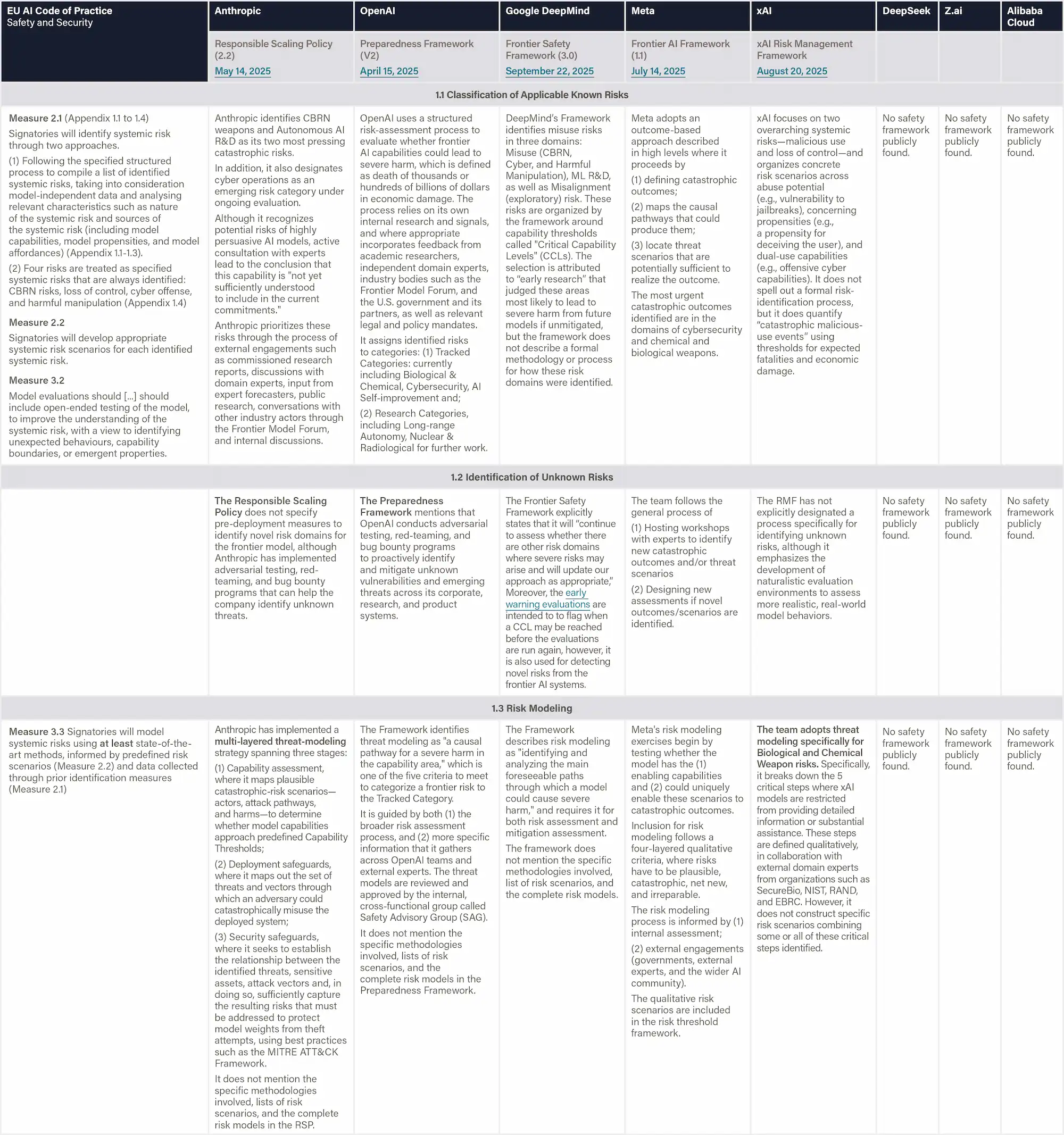

Risk Identification

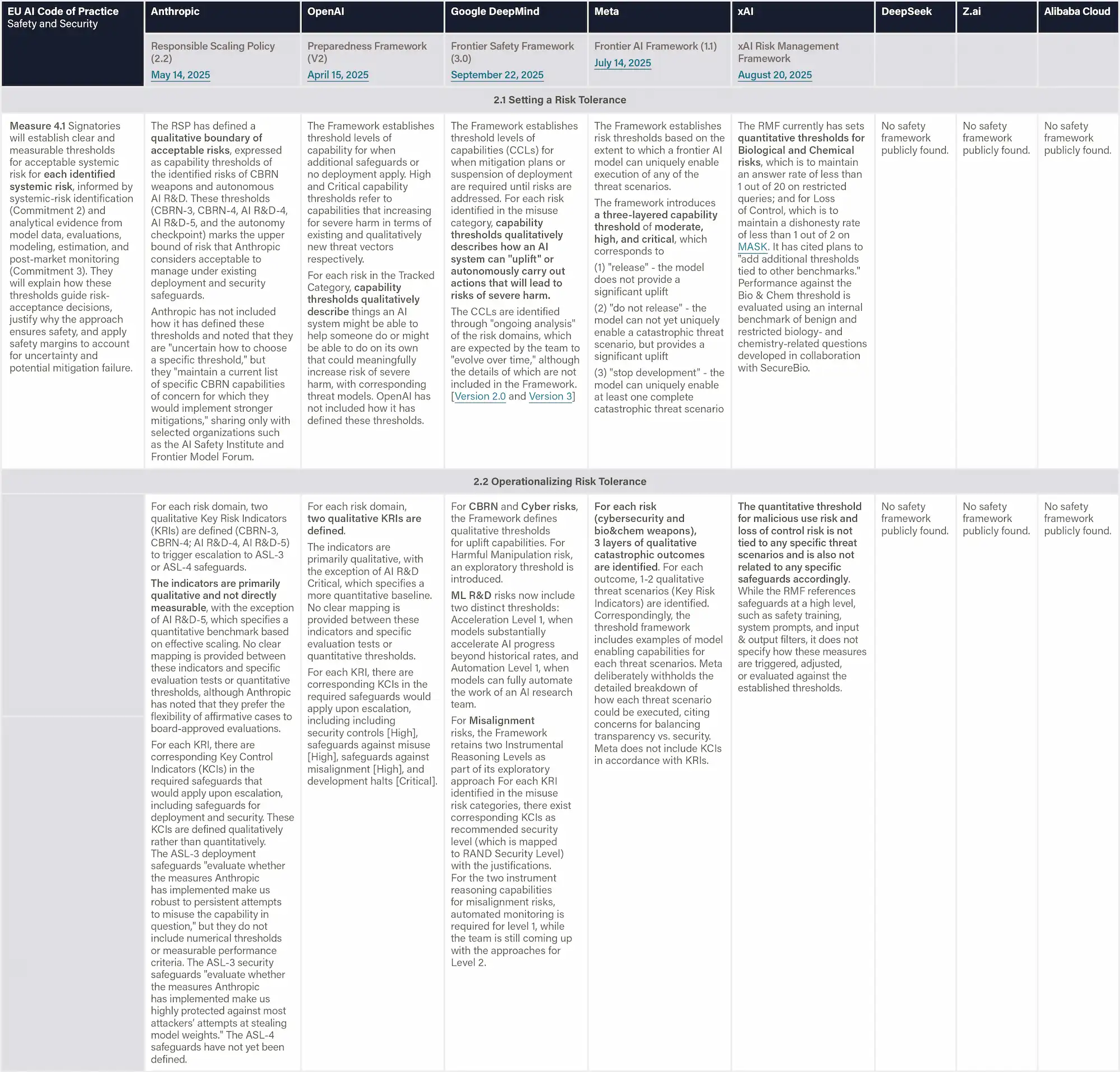

Risk Analysis & Evaluation

Risk Treatment

Risk Governance

Existential Safety Strategy

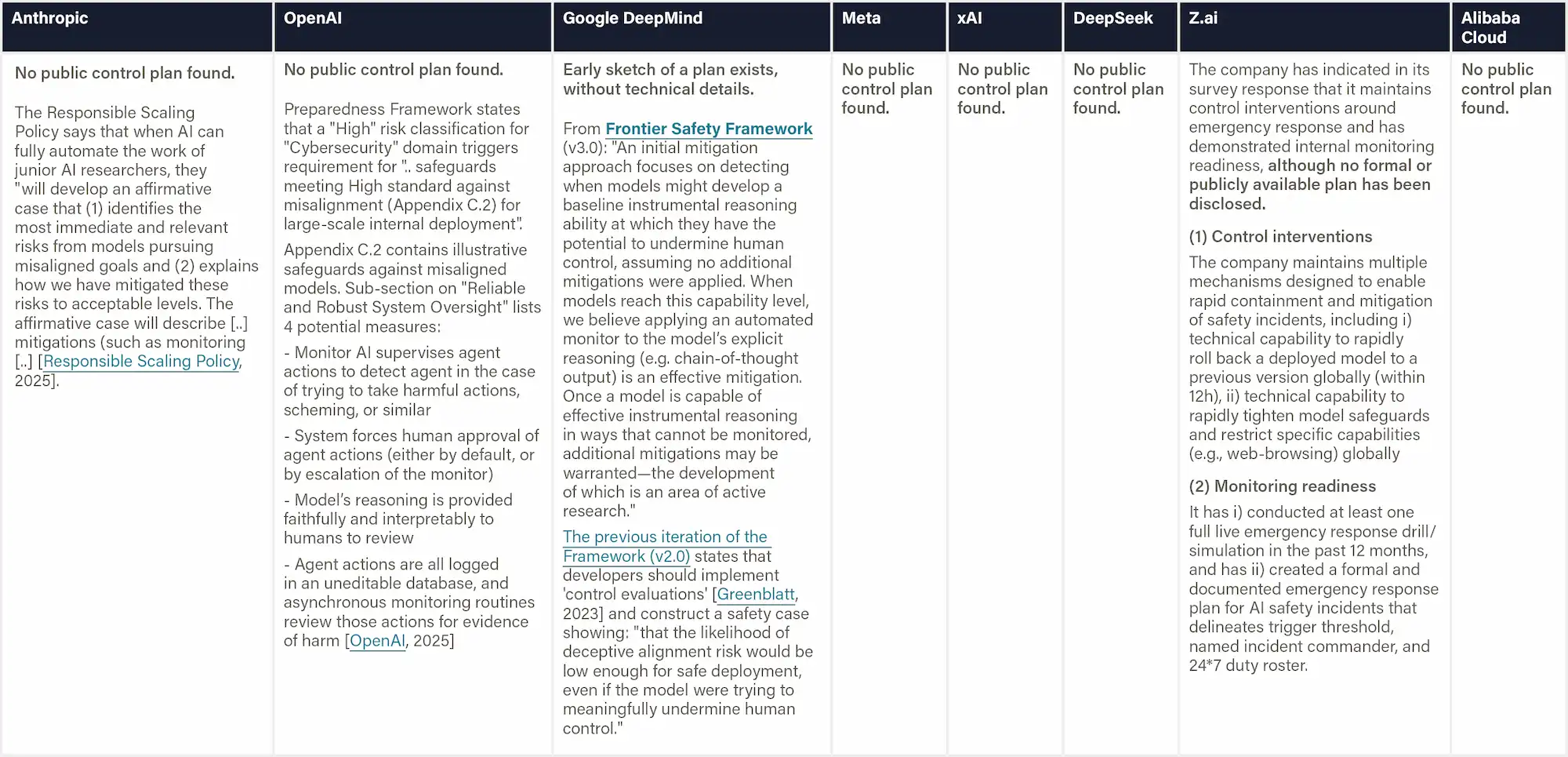

Internal Monitoring and Control Interventions

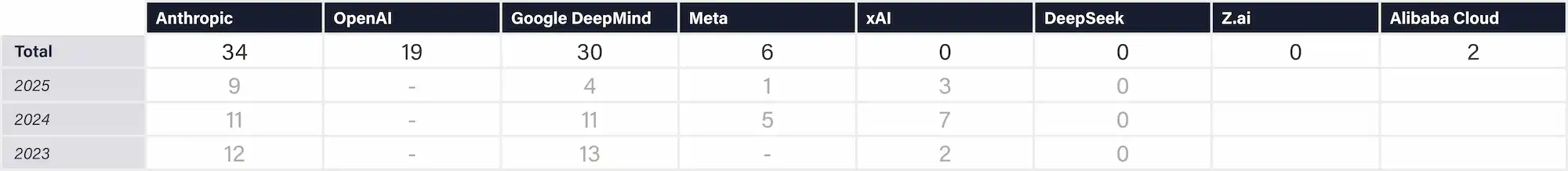

Technical AI Safety Research

Supporting External Safety Research

Improvement opportunities by company

How individual companies can improve their future scores with relatively modest effort:

Anthropic

Progress Highlights

- Anthropic has increased transparency by filling out the company survey for the AI Safety Index.

- Anthropic has improved governance and accountability mechanisms by sharing more details about its whistleblower policy and promising to release a public version soon.

- Compared to other US companies, Anthropic has been relatively supportive of both international and U.S. state-level governance and legislative initiatives related to AI safety.

Improvement Recommendations

- Make thresholds and safeguards more concrete and measurable by replacing qualitative, loosely defined criteria with quantitative risk-tied thresholds, and by providing clearer evidence and documentation that deployment and security safeguards can meaningfully mitigate the risks they target.

- Strengthen evaluation methodology and independence by moving beyond fragmented, task-based assessments of weak validity, incorporating latent-knowledge elicitation, and involving uncensored, credibly independent external evaluators.

OpenAI

Progress Highlights

- OpenAI has documented a risk assessment process that spans a wider set of risks and provides more detailed evaluations than its peers.

- Although OpenAI's new governance structure has been criticized, reviewers considered a public benefit corporation to be better than a pure for-profit corporation.

Improvement Recommendations

- Make safety-framework thresholds measurable and enforceable by clearly defining when safeguards trigger, linking thresholds to concrete risks, and demonstrating that proposed mitigations can be implemented in practice.

- Increase transparency and external oversight by aligning public positions with stated safety commitments and creating more and stronger open channels for independent audit.

- Increase efforts to prevent AI psychosis and suicide, and act less adversarially toward alleged victims.

- Reduce lobbying against state-level regulations focused on AI safety.

Google DeepMind

Improvement Recommendations

- Strengthen risk-assessment rigor and independence by moving beyond fragmented evaluations of weak validity, testing in more realistic noisy or adversarial conditions, and ensuring that external evaluators are not selectively chosen and compensated.

- Make thresholds and governance structures more concrete and actionable by defining measurable criteria, adapting the cyber Critical Capability Levels (CCLs) to reflect volume-based risk, clarifying relationships among internal governance bodies and with external governance, and establishing mechanisms for acting when thresholds are passed.

- Increase efforts to prevent psychological harm from AI, and consider distancing itself from Character.AI.

- Reduce lobbying against state-level regulations focused on AI safety.

xAI

Progress Highlights

- xAI has formalized and published its frontier AI safety framework.

Improvement Recommendations

- Improve breadth, rigor and independence of risk assessments, including sharing more detailed evaluation methods and incorporating meaningful external oversight.

- Consolidate and clarify the risk-management framework with broader coverage of risk categories, measurable thresholds, assigned responsibilities, and defined procedures for acting on risk signals.

- Allow more pre-deployment testing of future models than was done for Grok 4.

Z.ai

Progress Highlights

- Z.ai took a meaningful step toward external oversight, including allowing third-party evaluators to publish safety evaluation results without censorship and expressing willingness to defer to external authorities for emergency response.

Improvement Recommendations

- Publicize the full safety framework and governance structure with clear risk areas, mitigations, and decision-making processes.

- Substantially improve model robustness and trustworthiness by improving performance on benchmarks for system and operational risks, content risks, and safety.

- Establish and publicize a whistleblower policy to enable employees to raise safety concerns without fear of retaliation.

- Consider signing the EU AI Act Code of Practice.

Meta

Progress Highlights

- Meta has formalized and published its frontier AI safety framework with clear thresholds and risk modeling mechanisms.

Improvement Recommendations

- Improve breadth, depth and rigor of risk assessments and safety evaluations, including clarifying methodologies as well as sharing more robust internal and external evaluation processes.

- Strengthen internal safety governance by establishing empowered oversight bodies, transparent whistleblower protections, and clearer decision-making authority for development and deployment safeguards.

- Foster a culture that takes frontier-level risks more seriously, including a more cautious stance toward releasing model weights.

- Improve overall information sharing, including by completing the AI Safety Index survey, participating in international voluntary standards efforts, signing the EU AI Act Code of Practice, and providing more substantive disclosures in the model card.

DeepSeek

Progress Highlights

- DeepSeek’s employees have become more outspoken about frontier AI risks and the company has contributed to standard-setting for these risks.

Improvement Recommendations

- Establish and publish a foundational safety framework and risk-assessment process, including system cards and basic model evaluations.

- Establish and publish a bug bounty program.

- Substantially improve model robustness and trustworthiness by improving performance on benchmarks that evaluate system and operational risks, content safety risks, societal risks, legal and rights-related risks, fairness, and safety.

- Establish and publicize a whistleblower policy to enable employees to raise safety concerns without fear of retaliation.

- Improve overall information sharing, including by completing the AI Safety Index survey and participating in international voluntary standards efforts.

- Consider signing the EU AI Act Code of Practice.

Alibaba Cloud

Progress Highlights

- Alibaba Cloud has contributed to the binding national standards on watermarking requirements.

Improvement Recommendations

- Establish and publish a foundational safety framework and risk-assessment process, including system cards and basic model evaluations.

- Substantially improve model robustness and trustworthiness by improving performance on truthfulness, fairness, and safety benchmarks.

- Establish and publicize a whistleblower policy to enable employees to raise safety concerns without fear of retaliation.

- Improve overall information sharing, including by completing the AI Safety Index survey and participating in international voluntary standards efforts.

- Consider signing the EU AI Act Code of Practice.

All companies

All companies must move beyond high-level existential-safety statements and produce concrete, evidence-based safeguards with clear triggers, realistic thresholds, and demonstrated monitoring and control mechanisms capable of reducing catastrophic-risk exposure—either by presenting a credible plan for controlling and aligning AGI/ASI or by clarifying that they do not intend to pursue such systems.

“If we'd been told in 2016 that the largest tech companies in the world would run chatbots that enact pervasive digital surveillance, encourage kids to kill themselves, and produce documented psychosis in long-term users, it would have sounded like a paranoid fever dream. Yet we are being told not to worry.”

Prof. Tegan Maharaj, HEC Montréal

Methodology

The process by which these scores were determined:

Index Structure

The Winter 2025 Index evaluates eight leading AI companies on 35 indicators spanning six critical domains. The eight companies are Anthropic, OpenAI, Google DeepMind, xAI, Z.ai, Meta, DeepSeek, and Alibaba Cloud. The indicators in each domain are listed in the indicators overview.

Expert Evaluation

An independent panel of eight leading AI researchers and governance experts reviewed company-specific evidence and assigned domain-level grades (A-F) based on absolute performance standards with discretionary weights. Reviewers provided written justifications and improvement recommendations. Final scores represent averaged expert assessments, with individual grades kept confidential.
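As a rough illustration of how averaged expert assessments turn letter grades into a numeric score, the Python sketch below converts each reviewer's grade to GPA points and takes the mean. The reviewer grades are made up, the equal weighting of reviewers and the plus/minus point values are assumptions, and `average_grade_points` is a hypothetical name; this is not the Index's actual aggregation procedure, and individual grades are kept confidential.

```python
from statistics import mean

# Assumption: standard US GPA point values, including plus/minus grades.
GRADE_POINTS = {
    "A": 4.0, "A-": 3.7,
    "B+": 3.3, "B": 3.0, "B-": 2.7,
    "C+": 2.3, "C": 2.0, "C-": 1.7,
    "D+": 1.3, "D": 1.0, "D-": 0.7,
    "F": 0.0,
}


def average_grade_points(reviewer_grades: list[str]) -> float:
    """Hypothetical aggregation: equal-weight mean of GPA points, rounded to 2 decimals."""
    if not reviewer_grades:
        raise ValueError("at least one reviewer grade is required")
    return round(mean(GRADE_POINTS[g] for g in reviewer_grades), 2)


if __name__ == "__main__":
    # Made-up grades from three hypothetical reviewers for one company and domain.
    print(average_grade_points(["B", "C+", "B-"]))
```

Averaging in point space rather than in letter space is what lets a company land between grade boundaries, which is why the scorecard reports both a letter grade and a two-decimal score.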

Data Collection

The Index collected evidence up until November 8, 2025, combining publicly available materials (including model cards, research papers, and benchmark results) with responses to a targeted company survey designed to address specific transparency gaps in the industry, such as whistleblower protections and external model evaluations. Anthropic, OpenAI, Google DeepMind, xAI, and Z.ai submitted survey responses.

Contact us

For feedback and corrections on the Safety Index, potential collaborations, or other enquiries relating to the AI Safety Index, please contact: policy@futureoflife.org

Past editions

AI Safety Index - Summer 2025

The second edition of the Safety Index, featuring six AI experts who provided rankings for seven leading AI companies on key safety and security domains.

Featured in: The Atlantic, Fox News, The Economist, SEMAFOR, Bloomberg, TechAsia, TIME, Fortune, The Guardian, MLex, CityAM, and more.

July 2025

AI Safety Index 2024

The inaugural FLI AI Safety Index convened an independent panel of seven distinguished AI and governance experts to evaluate the safety practices of six leading general-purpose AI companies across six critical domains.

Featured in: TIME, CNBC, Fortune, TechCrunch, IEEE Spectrum, Tom’s Guide, and more.

November 2024