Our history

2014

May 2014

Founding

The Future of Life Institute is founded by our board members and holds its launch event at MIT.

2015

2-5 January 2015

Puerto Rico Conference

This conference brings together world-leading AI builders from academia and industry with experts in economics, law and ethics. The event identifies promising research directions to help maximize the future benefits of AI while avoiding pitfalls (see this open letter).

2015

2015 Grant Program

FLI establishes the world’s first peer-reviewed grant programme aimed at ensuring artificial intelligence remains safe, ethical and beneficial.

2015

FLI Podcast Launched

FLI launches a podcast dedicated to conversations with some of the most respected figures in emerging technology and risk mitigation. Its guests now include Daniela and Dario Amodei, Dan Hendrycks, Liv Boeree, Yuval Noah Harari, Annie Jacobsen, Beatrice Fihn, Connor Leahy, Ajeya Cotra, and many more.

2017

3-8 January 2017

BAI 2017 and the Asilomar Principles

In a follow-up to the 2015 Puerto Rico AI conference, FLI assembles world-leading researchers from academia and industry and thought leaders in economics, law, ethics, and philosophy for five days dedicated to beneficial AI at BAI 2017.

Out of the 2017 conference, FLI formulates the Asilomar AI Principles, one of the earliest and most influential sets of AI governance principles.

July 2017

UN Treaty on the Prohibition of Nuclear Weapons

72 years after their invention, states at the United Nations formally adopt a treaty which prohibits nuclear weapons and ultimately seeks to eliminate them. Behind the scenes, FLI mobilises 3700 scientists in 100 countries – including 30 Nobel Laureates – in support of this treaty, and presents a video at the UN during the proceedings.

October 2017

First Future of Life Award

Every year the Future of Life Award celebrates the unsung people who helped preserve the prospects of life. In 2017 the inaugural award goes to Vasili Arkhipov for his role in averting nuclear war during the Cuban Missile Crisis.

November 2017

Slaughterbots

FLI produces Slaughterbots, a short film boosting public awareness of autonomous weapons.

On YouTube, the video quickly goes viral and is re-posted by other channels. Across channels, it now has over 100 million views. It is screened at the United Nations Convention on Certain Conventional Weapons meeting in Geneva in the month of its release.

2018

2018

2018 Grant Program

FLI launches a second grant programme to ensure artificial general intelligence remains safe and beneficial.

June 2018

Lethal Autonomous Weapons Pledge

FLI publishes this open letter, now signed by over 5000 individuals and organizations, declaring that "the decision to take a human life should never be delegated to a machine".

30 August 2018

State of California Endorses the Asilomar AI Principles

The State of California unanimously adopts legislation in support of the Asilomar AI Principles, developed by FLI the previous year.

2019

2019

BAGI 2019

After the Puerto Rico AI conference in 2015 and Asilomar 2017, FLI returns to Puerto Rico at the start of 2019 to facilitate discussion about Beneficial AGI.

June 2019

UN Digital Roadmap and Co-Champion for AI

FLI is named as Civil Society Co-Champion for the UN Secretary-General’s Digital Cooperation Roadmap for recommendation (3C) on the global governance of AI technologies.

2020

April 2020

Better Futures Short Fiction Contest

FLI co-hosts the 2020 Sapiens Plurum Short Fiction Contest with the theme “Better Futures: Interspecies Interaction.” The purpose of the contest is to encourage authors to conceive of the future in terms of desirable outcomes, and imagine how we might get there. Here are the winners from the 2020 contest.

June 2020

Foresight in AI Regulation Open Letter

FLI publishes an open letter calling upon the European Commission to consider future capabilities of AI when preparing regulation and to stand firm against industry lobbying. It receives over 120 signatures, including a number of the world’s top AI researchers.

August 2020

Autonomous Weapons Campaign Launch

FLI launches its campaign on the topic of Autonomous Weapons by publishing a policy position and a series of blog posts.

2021

2021

2021 Grant Program

FLI launches a $25M multi-year grant programme focused on reducing the most extreme risks to life on earth, or 'existential risks'.

December 2021

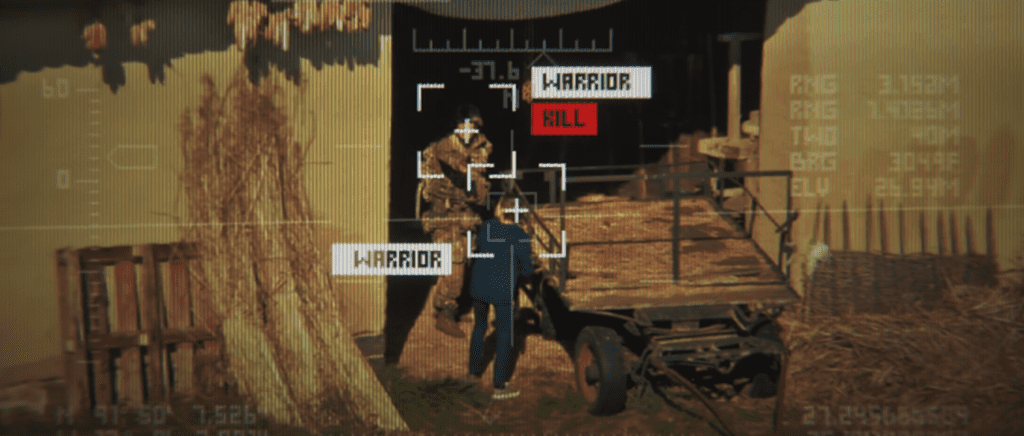

Slaughterbots - if human: kill()

Slaughterbots had warned the world of what was coming. Four years later, its sequel Slaughterbots - if human: kill() shows that autonomous weapons have arrived. Will humanity prevail?

The short film is watched by over 10 million people across YouTube, Facebook and Twitter, and receives substantial media coverage from outlets such as Axios, Forbes, BBC World Service's Digital Planet and BBC Click, Popular Mechanics, and Prolific North.

2022

March 2022

Max Tegmark at EU Parliament

FLI President Max Tegmark testifies on the regulation of general-purpose AI systems in the EU parliament.

2023

March 2023

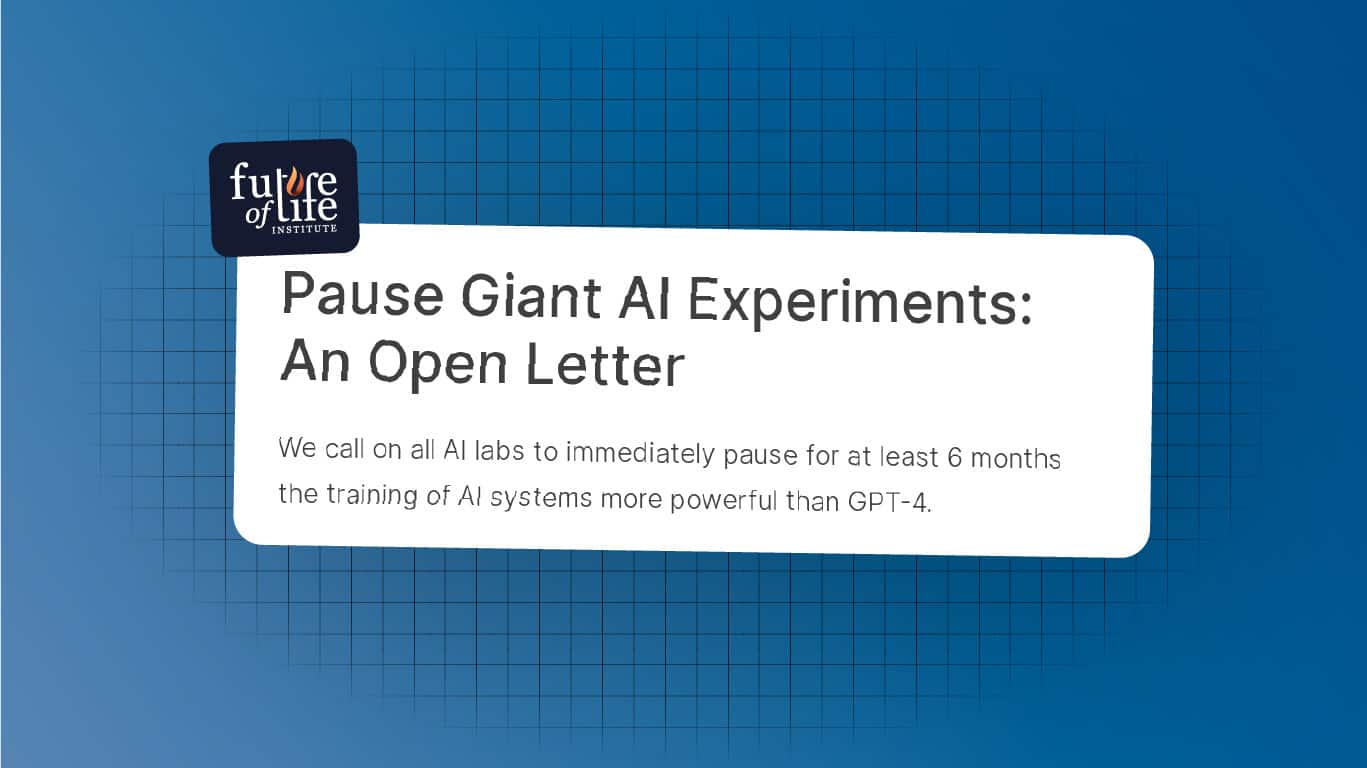

Pause Giant AI Experiments

FLI's open letter calls for a pause on AI training runs larger than GPT-4, which had been released a couple of weeks earlier. The letter sparks a global debate on the benefits and risks of rapid AI scaling.

June 2023

Nuclear War Simulation Video

FLI publishes the world's most realistic simulation of a nuclear conflict between the US and Russia, based on real present-day nuclear targets and cutting-edge science on the effects of nuclear winter.

July 2023

Artificial Escalation

Our short film depicts how race dynamics between the US and China could lead to unwise AI integration into nuclear weapons systems, with disastrous consequences.

October 2023

Max Tegmark at AI Insight Forum

FLI President Max Tegmark is invited to speak at the AI Insight Forum.

October 2023

Joint Open Letter with Encode Justice

A joint open letter by youth network Encode Justice and FLI calls for the implementation of three concrete US policies addressing present and future AI harms.

2024

January 2024

Futures Program

FLI introduces its Futures program, which works to guide humanity towards the beneficial outcomes made possible by transformative technologies. The program debuts with two projects focused on producing positive outcomes with AI: The Windfall Trust and grants programs centred on 'Realising Aspirational Futures'.

January 2024

Open Letter on Longview Leadership

The Elders, FLI, and a diverse range of preeminent public figures call on world leaders to urgently address the ongoing harms and growing risks of the climate crisis, pandemics, nuclear weapons, and ungoverned AI.

January 2024

Ban Deepfakes Campaign Launch

FLI launches the Campaign to Ban Deepfakes in collaboration with a bipartisan coalition of organizations including Control AI, SAG-AFTRA, the Christian Partnership, Encode Justice, the Artist's Rights Alliance, and more.

February 2024

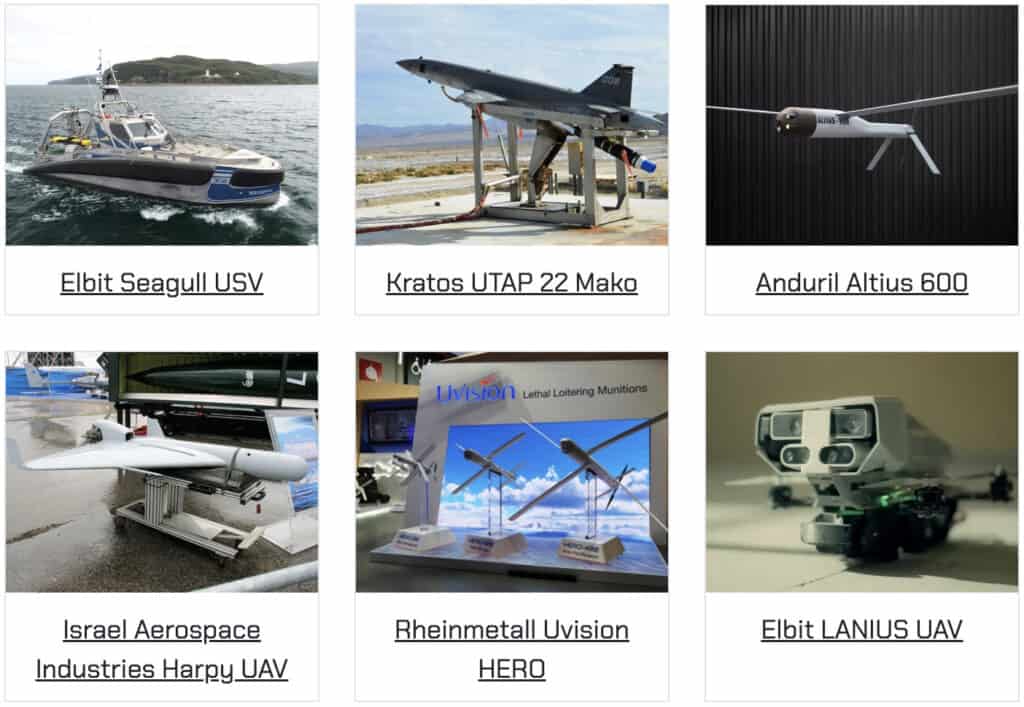

Autonomous Weapons Newsletter and Database

FLI initiates The Autonomous Weapons Newsletter, a monthly newsletter covering policymaking efforts, weapons systems technology, and more – alongside Autonomous Weapons Watch, a database of the scariest autonomous weapons in production and on the market today.

April 2024

FLI at Vienna on Autonomous Weapons

FLI board member Jaan Tallinn delivers the keynote opening speech for the 2024 Vienna Conference on Autonomous Weapons. Both our Executive Director Anthony Aguirre and Director of Futures Emilia Javorsky participate in expert panels.

April 2024

Max Tegmark Addresses the US AI Taskforce

FLI President Max Tegmark briefs members of Congress on the House AI Taskforce on the topic of deepfakes. He is the first civil society leader to do so.

June 2024

Our Position on AI

FLI releases an updated position statement on AI, covering extreme risk, power concentration, pausing advanced AI, and human empowerment.

To see what we're working on right now, go to Our work overview.