Peter Railton on Moral Learning and Metaethics in AI Systems

From a young age, humans are capable of developing moral competency and autonomy through experience. We begin life by constructing sophisticated moral representations of the world that allow us to successfully navigate our way through complex social situations with sensitivity to morally relevant information and variables. This capacity for moral learning allows us to solve open-ended problems with other persons who may hold complex beliefs and preferences. As AI systems become increasingly autonomous and active in social situations involving human and non-human agents, AI moral competency via the capacity for moral learning will become more and more critical. On this episode of the AI Alignment Podcast, Peter Railton joins us to discuss the potential role of moral learning and moral epistemology in AI systems, as well as his views on metaethics.

Topics discussed in this episode include:

- Moral epistemology

- The potential relevance of metaethics to AI alignment

- The importance of moral learning in AI systems

- Peter Railton's, Derek Parfit's, and Peter Singer's metaethical views

Timestamps:

0:00 Intro

3:05 Does metaethics matter for AI alignment?

22:49 Long-reflection considerations

26:05 Moral learning in humans

35:07 The need for moral learning in artificial intelligence

53:57 Peter Railton's views on metaethics and his discussions with Derek Parfit

1:38:50 The need for engagement between philosophers and the AI alignment community

1:40:37 Where to find Peter's work

Citations:

You can find Peter's work here

We hope that you will continue to join in the conversations by following us or subscribing to our podcasts on YouTube, Spotify, SoundCloud, iTunes, Google Play, Stitcher, iHeartRadio, or your preferred podcast site/application. You can find all the AI Alignment Podcasts here.

Transcript

Lucas Perry: Welcome to the AI Alignment Podcast. I’m Lucas Perry. Today, we have a conversation with Peter Railton that explores metaethics, moral epistemology, moral learning, and how these areas of philosophy may or may not inform AI alignment. The core problem that this episode explores is that as systems become more and more autonomous and increasingly participate in social roles that require social functioning, it will become increasingly necessary for AI systems to be familiar with and sensitive to morally salient features of the world. This requires that systems have the capacity for moral learning and for developing an understanding of human normative processes and beliefs. On top of that, structuring any kind of procedure for moral learning in AI systems will bring in metaethical beliefs and assumptions that would be wise to understand and be explicit about. For a little more context, some key motivating questions for this episode are: when, and to what degree, will AI systems require the capacity for moral learning? How might metaethics inform or not inform AI alignment? How do you structure a system such that it can engage in moral learning in a way that would be broadly endorsed and would satisfy other ethical or metaethical principles we broadly care about?

For some more background, I did a podcast with Peter Singer on his transition from being a moral anti-realist to a moral realist. That episode is titled “On Becoming a Moral Realist with Peter Singer.” In that episode we explore his metaethical views, and Peter Singer mentions conversations and debate between Derek Parfit and Peter Railton on issues in metaethics. So, the second half of this podcast is dedicated to understanding and unpacking Peter Railton’s metaethics and how it compares with Peter Singer’s and Derek Parfit’s views. This podcast is pretty philosophy heavy, so if you’re into that and the ethics of AI then you’ll appreciate this episode. You can subscribe to and follow this podcast on your preferred podcasting platform, by searching for “Future of Life.”

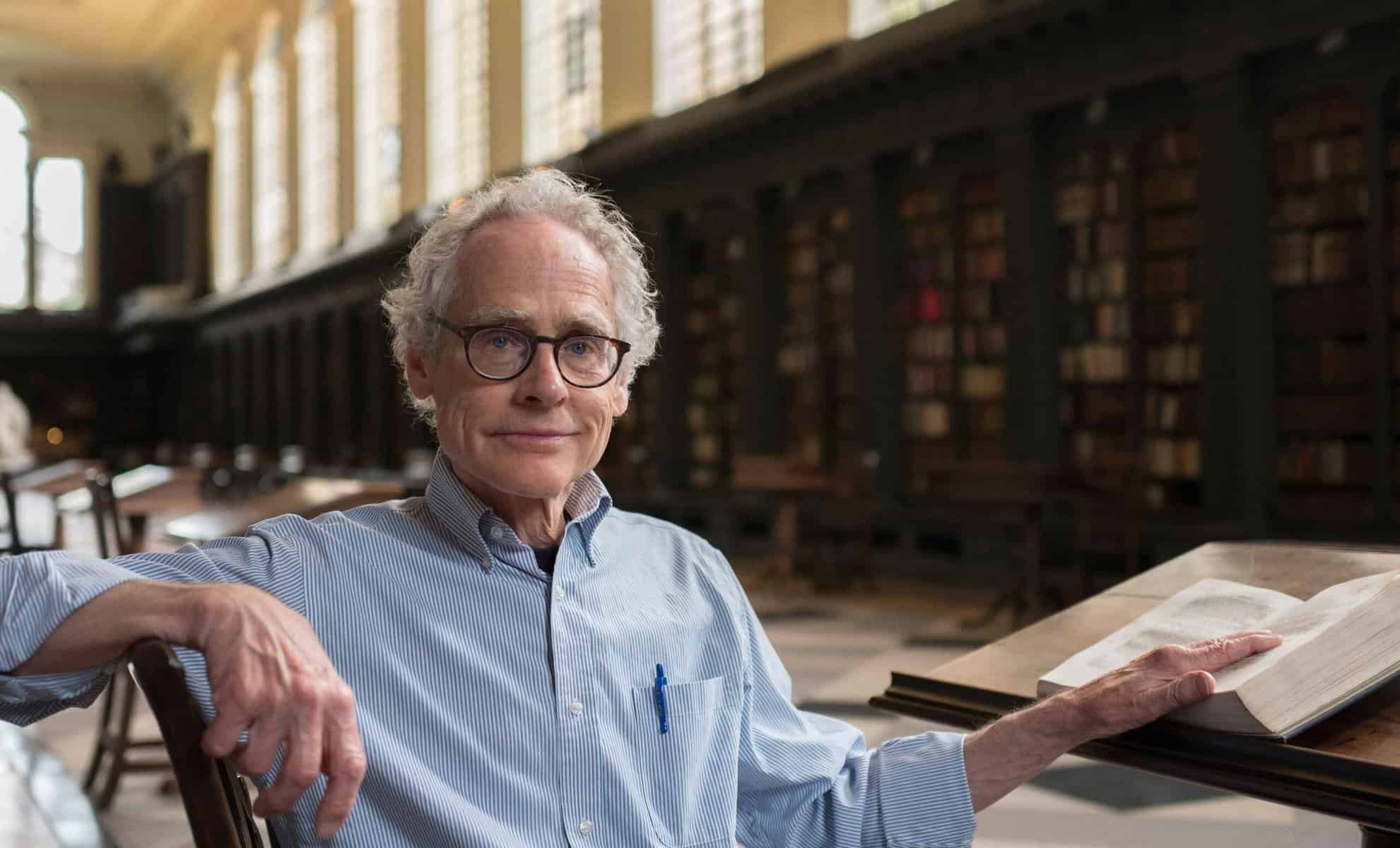

Peter Railton is a Professor of Philosophy at the University of Michigan, Ann Arbor. He has a PhD from Princeton and primarily researches ethics and the philosophy of science. He focuses especially on questions about the nature of objectivity, value, norms, and explanation. Recently, he has also begun working in aesthetics, moral psychology, and the theory of action. And with that, let’s get into our conversation with Peter Railton.

Lucas Perry: Just to start off here, sometimes I've heard that metaethics doesn't matter, or one might wonder when metaethics ever matters in real life anyway. I'm curious, do you have any thoughts on whether metaethics matters at all for AI alignment?

Peter Railton: Well, in the most general sense, metaethics concerns questions about the nature of morality, its foundation, the possibility of moral knowledge, how we might acquire it, the meanings of moral claims, and how they stand in relation to our other forms of knowledge. And so it does seem to me that metaethics is important in thinking about the problems of ethics in AI, partly because I think a lot of people have skeptical concerns about morality in the back of their minds. And therefore, they doubt whether there could be objective value. They think perhaps value is entirely subjective. And if that's your approach, then you might say the challenge of creating ethical AI is not a very well-defined problem.

What would be the subjective attitude of a properly aligned AI system? You might consult the population and find out what the average point of view is. But we know the average point of view right now is very different from what it was 200 or 300 years ago. We think in some ways it's improved since then. And we think in some ways where we are now could be improved. So we can't reduce the question of ethics in AI to something like opinion sampling, and that's because morality has objective dimensions and we use these to criticize our preferences and our opinions. And so any decent ethics for AI would build the possibility of correction and criticism into the concept. And for that, you need some idea of what would constitute correction or criticism. How would we justify moral claims? And that takes us to the heart of metaethics.

Lucas Perry: Right. And there are a lot of moral anti-realists, or people who think that morality is subjective, in, I guess, the hard sciences and computer science in general. So this also applies to the alignment community. If one feels that moral claims or moral attitudes are subjective, then this choice that you mentioned, to take the average of general popular opinion, is itself a moral choice, which is the expression of one's own subjective moral attitude from that point of view. And within a subjective framework, there's no way to resolve that, except to take the expression of all of the power dynamics of everyone's subjective moral attitudes and see what comes out of that, right?

Peter Railton: Well, yeah, that would be one of the problems. The project of creating ethical AI or AI alignment, as it's sometimes called, can't be the problem of giving our value system to machines because there is no unique value system that we possess. It could be the project of trying to make it possible for the machine to learn the most justified value system. And part of the problem, I think, is that people have exaggerated notions of what it would take to justify moral claims. They assume, for example, that there's a huge gulf between facts and values, that there are no reasonable ways of bridging that gulf, and that in general, what it would take to have objective morality would look something like the universe with what God would do, only without God.

One of the problems with that thought is that that's a model of morality as a set of commands given by some kind of divine enforcer. And if you think that, absent such a divine enforcer, morality could only be subjective, then I think you're missing the idea of what morality really does. The existence of a divine enforcer wouldn't bring morality into existence. A divine enforcer could be either good or malevolent. And so understanding what it is to do moral criticism should be an integral part of the challenge of thinking about ethics and AI. And when we look at moral criticism, we find that we have many practices of it, and those aren't, strictly speaking, subjective, and we value them because they help correct our subjective opinions.

Lucas Perry: So I think there are two parts of metaethics, and I would like to see if you have any thoughts on how they may or may not apply here. First, metaethical epistemology: how is it that you know things about metaethics? And second, whatever may be metaphysically true about ethics or not. So you brought up religion there. In terms of what would be called, I guess, Divine Command Theory, morality would have a very solid metaphysical ground, as being codified by God or something like that.

Peter Railton: Actually, I'd say that that wouldn't get us a solid metaphysical ground. The fact that commands come from a being that is supremely powerful, and even one that's supremely knowing, would not make those commands moral commands. Those conditions are perfectly compatible with immoral values. What we would need is a perfectly knowledgeable, entirely powerful, and all-good God. A so-called AAA God. But that means the concept of good is independent of the concept of God itself, and understanding what it would be for the commands of a divine, superpowerful being to be good just takes us right back to the question of the nature of morality. We don't solve it by introducing supreme beings.

Lucas Perry: Right, right. So I'm not trying to justify or lay out Divine Command Theory, only using it to, I guess, attempt to explain how epistemology and metaphysics fit into metaethics. To me, it seems like what is relevant here to AI alignment is that how one believes one can know things about metaethics, and whether or not there can be agreement upon metaethical epistemology, would be the foundation upon which moral learning in machine systems could be built.

There is sort of a meta view on the epistemology of metaethics, where one could say, "Because there are no moral facts, the epistemology is whatever human beings are doing when they engage in moral thought." And there isn't a correct epistemology. Whereas one could, whether through naturalism, as in your metaethics, or through non-naturalism, as in Peter Singer's, believe there to be moral truths, and that thus there is a correct epistemology about metaethics, and that that epistemology could be used to instantiate metaethical learning in machine systems.

Peter Railton: So one thought would be, there is one true morality and we're capable of knowing it. That itself wouldn't get us very far in epistemology until we could say what those methods of knowing are. An approach that's got something like that as an assumption, but that doesn't assume that we know what the destination is ultimately going to be, would be to ask, "Do we have good practices of moral criticism? And do those help us to solve actual problems, social problems, interpersonal problems, problems with our own lives?" And then to look at the ways in which we use morality in these contexts to solve problems.

And that brings it down to the level of something that comes within the scope of what can be learned. And if we look at children's learning, we see that their development as moral creatures proceeds in pace with their understanding of causality, their understanding of theory of mind, and their capacity to form counterfactual thoughts, because it's really an integrated body of general understanding. And so, for example, the idea of positive-sum solutions to game-theoretic challenges, that's something that can be agreed upon by all parties to be a desirable thing. And so looking at strategies that have the possibility of yielding positive sums, cooperative strategies, strategies of trustworthiness, signaling strategies, which enable us to coordinate with each other and understand each other's intentions, those have a justification that we can give in terms that are not tied to any one particular person's interests, which address interests generally, and which we can defend in an impartial way.

And so that would be an example of a way in which we could say those are more reasonable solutions, more justified solutions. There's an analogy here with epistemology generally. If someone were to come to me and say, "Well, you claim to have knowledge, how do you demonstrate that? How would you show that your understanding of knowledge is genuine knowledge?" I'd have to say, "Well, sorry, I can't demonstrate that. Any demonstration would presuppose knowledge. And so I can't pull it out of a hat and I can't derive it from nothing." So what can I do? I can say, "Well, here are our practices of epistemic criticism. And while we have disagreements in various places about what counts as evidence or what does not, do those practices deliver the kinds of results that we would expect from reasonable epistemologies, making possible things like scientific inquiry and technology and so on?"

And we can say, "Well, that's what epistemology could be expected to give us. We do have methods that can improve our ability to solve such problems in just those ways. We can find various ways to justify them in terms of probabilities, looking for ways in which we can increase accuracy in estimation." And so those are different ways in which, by looking at our actual practices of epistemic criticism, we try to get some traction on the problem of knowledge. And I would argue we should do the same thing about morality. If we start from the standpoint of skepticism in the case of knowledge, we will end with skepticism. The same would be true with ethics, but I see no more reason to do it in ethics than in epistemology. We surely must know a great deal about what's good for us and good for one another. And we have well-developed practices of moral assessment that we use in our own lives and in our collective institutions. So I would say, if we look to those, then we don't see just subjective opinion. It's quite different from that, and we see a lot of constraints.

Lucas Perry: So I do want to explore more arguments around metaethics with you, and we're intending to do that after we discuss moral learning here. Now, in terms of moral epistemology and the epistemology of metaethics, in this part of the conversation I'm interested in setting up and attempting to illustrate that, whether or not one takes a skeptical view of moral epistemology, moral learning and our view on moral epistemology are essential to the alignment and development of AI systems. And here you're defending a more realist account of epistemology in ethics.

Peter Railton: Well, you could say that I, myself, am a realist, but what I've been saying so far, a pragmatist about ethics could say just as well. John Dewey would say something very similar. So would various kinds of non-realists who are nonetheless objectivists in ethics: Kantians, for example, constructivists, and so on. What I've said is really neutral territory for a wide range of views in metaethics. And in particular, it doesn't presuppose a form of naturalism or a form of realism. That's actually a tremendous amount to build upon, so that when we think about how to design robots to understand the world, we have a lot of knowledge about what sorts of systems would be well designed for doing that.

Similarly, if we want to build a robot who can interact creatively and productively with other robots, solve problems of coordination, reduce conflict, realize long-term goals, interact successfully with people, recognize their interests, and take their interests into account, being relatively impartial with regard to the interests that are at stake, those are not mysterious in the way that the skeptic seems to think they are. Because again, they're already integrated into our practice, and as Hume pointed out a long time ago, skepticism doesn't survive very well once we leave the closeted philosophical study. People go out and they act as if they had knowledge of the world, and they act as if there are things that people could do to them, or that they could do, that would be better or worse, right or wrong. They think about how they should treat their children. They think about how they should behave with respect to their students or their professor. That doesn't take us into the misty realms of metaphysics, but it does take us into the practices of moral criticism and self-criticism.

Lucas Perry: So could you unpack just a little bit more about why this view is neutral?

Peter Railton: So for example, I've mentioned a couple of features of moral thought. One feature of moral thought is that it takes a kind of impartiality seriously. It gives equal weight to all those affected. That's something that Kantians and Utilitarians and many other moral theorists would agree on. Another feature of moral thought is that it's concerned with general reasons. Similar cases have to be treated in a similar way. That leads to a doctrine known as supervenience: we can't invent moral distinctions that don't correspond to real distinctions in the facts. Another feature is that morality has to do with reciprocity, relations of mutual gain and mutual benefit. Another is that morality involves taking oneself and others as ends and not as mere means.

Those are all normative theories. But if you then ask, "Well, what about the metaethical side? Could a pragmatist about ethics say the same things?" the answer seems to be yes. The pragmatist sees ethics as essentially about people solving the human problems that they face in ways that meet these kinds of desiderata. The person who believes that there's a rationalist foundation believes that you can know a priori that these constraints of impartiality and so on exist. But as you can see from Singer's work, the result of applying his form of rationalism is not dramatically different from the results of applying my form of naturalism. And that's because the target that we're all working on, ethics that is, has a great deal of determinate structure. And so any metaethical theory is going to have to capture a lot of that structure.

Lucas Perry: And so, sorry, how is this instructive for why metaethics matters for AI alignment?

Peter Railton: Well, the suggestion was that we should know something about what ethics is in order to answer the question of how we might gain moral knowledge, and, if we can gain moral knowledge, what moral knowledge might consist in. That's where we started. And then I tried to suggest a bunch of considerations, a bunch of features, that I would call obvious features of morality as a practice. Because I think our practice is not just at the normative level. People also have implicit metaviews in ethics. They demonstrate that, for example, by their knowledge of how to determine the morally relevant considerations in situations. So they understand what kinds of considerations are or aren't morally relevant. They understand the distinction between morality and etiquette, between morality and law, and between morality and self-interest. So they have a grasp of a bunch of these obvious features of morality.

And those are not just features of one or another normative theory. They are features of virtually all normative theories, and features that any metaethic is going to have to accommodate, unless it's going to be skeptical. So that's why I say that there's a great deal of common ground, not because the fundamental explanations are going to be the same, but because there is an explanatory target which has a great deal of structure and which all these theories indeed have to explain. And that requires, then, that metaethical theories be adequate to that.

Lucas Perry: I see. So what you're saying is that this already structures metaethical epistemology?

Peter Railton: Yeah. It gives you quite a bit of structure.

Lucas Perry: Yeah. That's just reminding me of how Peter Singer, in his book The Point of View of the Universe, talks about a philosopher who argues that there are a few axioms of morality, and they seem to touch upon these convergent principles that you're talking about here. Now, on a realist's account of metaethics, there would be something like a one true moral theory. And if one takes the one true moral theory view seriously, then the problem of AI alignment would be to cultivate a procedure for arriving at the correct moral epistemology in order to find the one true moral theory, or to discover the one true moral theory ourselves, and then align AI systems to it.

Now, if one believes that there is not one true moral theory, and there is only the evolution and extrapolation of human normative processes, and preferences, and metapreferences, then one might not want to come at the AI alignment problem from the perspective of a one true moral theory approach. And as a general note, I'm taking this language from Iason Gabriel, who will be on the podcast soon. And so in the second scenario, which doesn't use the one true moral theory approach, one would want to come up with a broadly acceptable procedure for aligning AI systems that didn't presume to try to discover a one true moral theory. Do you have any reactions to these two ideas or approaches to alignment?

Peter Railton: Yeah. The question of whether one thinks there is one true theory is somewhat different from the question of whether we think we're close to it or have good ways of knowing it. I myself, although I'm a realist, recognize that there's a good chance that my moral views are wrong and my metaethical views are wrong. And so I don't want to just put all of my energy into thinking, "Well, how would we discover the one true moral theory?" I would want to think more robustly. And again, I can make an analogy with epistemology. If you go into a philosophy of science department or a statistics department, you'll find that there's a tremendous debate between people who think that Bayesianism is the right kind of approach for evidence and people who think that standard methods of social science are the best methods of evidence gathering.

You'll find a tremendous amount of disagreement. So if we're trying to build a robot who understands its environment, we don't want to say, "Well, we have to figure out which one of those theories is correct before we can build a robot to understand its environment." You might say, "We want a robot that's got a robust capacity to learn, and that would deliver results reasonably approximated by a Bayesian, or an inductivist, or someone using social science statistics." They're not going to agree on everything. Where there's overlap, we should try to build a machine that can stay in the overlap. We should try to build a machine that's not brittle, one that doesn't make epistemic commitments at the far edge of one or another of these views.

And so I would say our task is to build a system that's robust. And that means building into it the fact that we don't know what this one true theory is. And so we want, as far as possible, to accommodate an array of approaches, all of which have very strong reasoning behind them. You could think of it this way: we're not trying to build an AI system that discovers the one true theory. We're trying to build one that isn't going to be dependent upon exactly the target that it hits, but rather could be successful in an array of possible environments.

Lucas Perry: So, I mean, adjacent to this, and promoted and discussed by people like Toby Ord and William MacAskill, would be this human existential procedure for moving into the future, where it's like, we're going to align AI systems, whatever that means. And that alignment will hopefully not lock in any values or any particular kind of alignment procedure, but will ensure existential security for humanity, such that existential risk keeps going down until it is near zero. And then we use this existentially secure situation to do a long reflection on value, and what is good, and what may be true or not true about ethics. And then, with sufficient consideration, we can engage in populating the stars and optimizing things the way that we see fit. So what is your view on this proposed long reflection?

Peter Railton: Insofar as I understand it, I don't have any objection to it. I'm not sure I do understand it. One of the things you said just in passing was that we were going to try to design these systems to behave as we see fit. I myself am not sure I know how it is fit to behave. And I certainly know that I have some mistaken beliefs about that. And I would hope that, just as artificial intelligence may help us correct certain of our views in cosmology or in medicine, artificial intelligence could help us correct certain of our views in ethics.

We've seen a tremendous amount of evolution in people's fundamental moral convictions over time. Some have stayed relatively similar. Others have changed dramatically. And we would, I think, do best to think of the artificial extension of intelligence as one way in which we can get a perspective on these issues and situations and problems that isn't just our own, that won't have the same priors as our own, and won't have the same presuppositions, and those perspectives should be included. We should think of these systems as agents.

They will have interests just as we have interests, and the standard would not be what we see fit, where we mean something like we humans, but what we will see fit as we, the humans and the artificial systems, continue our evolution and our cultural development. And we want to think that the path we should follow is one that leaves open that kind of development rather than constraining it to fit what happens to be our current set of moral convictions, which again are not shared. There are too many disagreements to think that we could just write down the rules. Long reflection, I think, will also tell us that we need a dynamic picture. We should have some convictions that are more confident, closer to the core. We should have methods and practices that meet reasonable standards of justification and objectivity, and we should be prepared to learn.

I can't, I'm afraid, think of a way to guarantee against the existential risk from artificial intelligence or even our own intelligence, which may be more problematic. But I do suspect that the best way to contend with problems of existential risk is to face them as communities of inquirers.

Lucas Perry: All right. So I think you've done an excellent job explaining the importance of moral learning and moral epistemology here, given that the ongoing cultivation of more wholesome and enlightened moral value and moral thinking is always on the horizon. Now, you have some perspective and research that you've done on moral learning in humans and the importance and necessity of that. I'm curious here now then to relate some of that research that you've done in moral learning in humans to how AI systems of increasing autonomy may also wish to take on the kind of moral epistemology that infants and young humans may have.

Peter Railton: I wouldn't say that I've done research in this exactly. I've certainly explored others' research in this and tried as best I can to learn from it. One of the things that's impressed me in the literature as it's evolved over the last couple of decades is how much of children's learning is accomplished, not via explicit teaching, but through the children's own experience. What we've learned recently, and this is not from developmental psychology but from various kinds of models of machine learning, is that very complex structures can be learned experientially. There are powerful techniques which we can add to that kind of probabilistic learning in order to create knowledge of general principles, to do something like build a structured understanding of language that would enable a child to speak fluently, to understand what others are saying, and to engage with them, without requiring either an innate grammar or explicit instruction in language as such. That's a kind of model of how we also seem to acquire our social normative knowledge.

If you think about the perspective of the infant, one thing that we've learned from the animal research is that animals don't just build a spatial map in relation to themselves. They don't just build an egocentric map of their environment. They also build grid-like maps that are non-perspectival, and they navigate by combining these two kinds of information, perspectival and non-perspectival. Infants seem to do something similar in learning about learning. They not only represent their relations with individual adults and whether those benefit them or not, but they also seem to construct general representations of whether a given adult is competent or helpful in third-party interactions, and to use that aperspectival information to make decisions about who they're going to learn from or pay more attention to. They start doing this surprisingly early on. And so at the same time that they're constructing the ego-centered world, they're constructing a non-centered representation of the world that includes normative features like reliability, competency, helpfulness, and cooperativeness.

And so the child in coming to represent the world around them is constructing representations that have the initial form of moral representations. It turns out to be efficient for learning to be a successful human being that one construct representations spontaneously that have this quasi-moral structure. And that would suggest to me that if machines develop as agents, agents interacting with other agents, agents capable of solving a range of problems, capable of having sustained interactions with humans to solve open-ended problems, that they will also find that they do better if they can construct these quasi-moral representations of situations. And so that means that they will be acquiring sensitivity to morally relevant information through the very task of acquiring social competence, linguistic competence, epistemic competence in a social world.

So there's a kind of picture here that fits nicely with the fact that we now know that complex models can be acquired through experiential learning. That suggests that there is a promising pathway toward the development of theory of mind, causal inference, and representation of social value from an objective or non-personal perspective. There is an argument for thinking that that's actually a fundamental core part of our capacity as intelligent beings capable of successful social interaction. That suggests that this is not a peculiarity. It's not culturally specific. And so why not use similar methods in our interactions with artificial agents to enable artificial agents to acquire these kinds of quasi-moral mappings?

Lucas Perry: So the key thing to draw out from here is that there is this distinction between explicit and implicit learning of morality, and you're remarking that there isn't much explicit moral learning in infants and children. Most of this moral learning comes simply from experience and interacting with the world rather than from explicit instruction about what is right and wrong.

Peter Railton: There's tremendous cultural variability, within our society and across societies, in how much explicit moral instruction children are given. What's fascinating is that even in societies where children get very little explicit moral instruction, they nonetheless acquire these capacities. Similarly with language, there are some societies, like upper-middle-class US society, where parents talk extensively with children. There are other societies where parents do not, and yet the children can become fluent linguistic agents. So my thought is that the explicit theory isn't really the thing that's doing the fundamental work. Even to understand what parents are trying to do when they give you explicit moral instructions, to understand how to apply those or what they might mean, the child is already going to have to have quite a complex aperspectival representation of the social situation. The thought here is that in some places there's more explicit theory and in some places less, but the results in terms of the development of behaviors are very similar.

A good example of this is that around age three or four, children who are given a command by an adult in authority will balk and refuse to perform it if that command violates a reasonable norm against harm. So if a substitute teacher comes in one day and says, "I'm the teacher today, and in my classroom, you have to raise your hand before you speak," children in the classroom will start raising their hand before they speak. If the teacher says instead, "I'm the teacher here, and in my classroom, children jab the point of their pencil into the child next to them when they wish to speak," they'll stop. They won't do this. And if they're asked why, they won't say, "Well, that's not the way we do it." They'll say, "It would harm the other child."

And so that suggests that even when a figure of authority tries to give a norm in a situation where children can perfectly well understand that there is a scope of legitimate authority, such as putting your hand up before you speak, they will distinguish between that kind of conventional authority and moral authority. And that's an autonomous action on their part. They're not getting rewarded for it. In fact, the teacher might send them out of the room or send a note home to their parents, but they balk because they can represent the situation in these quasi-moral terms. And when they do that, they say, "No, this is not a good solution to the problem." That suggests to me that even if we were to think that children learn by being given explicit instructions by people in authority, they actually independently learn that they can resist that and will resist it.

Lucas Perry: Right. So we're in a position where evolution has cultivated and embedded in us a kind of moral learning, with a certain degree of implicit and explicit moral learning depending on your culture and where you're from. And as you're saying, luckily there's strong convergence in this ability of moral learning to lead human beings to agree on, say, in the case of stabbing the other child, something like a principle against unnecessary harm to another person. That seems to be something most human beings converge on strongly and pretty early, unless your environment is particularly pernicious or something. And there is this convergence because of how our moral learning is structured, given evolution. And that moral learning enables in us a kind of moral autonomy that's there from an early age.

And there is a question of how this moral learning is best structured in both people and machine systems. And then there's the question of moral learning from the outside: what kind of environment is most conducive to moral learning? Are there insights into this that can begin pivoting us toward the relationship or importance of moral learning in AI systems?

Peter Railton: Perhaps so. Actually there's a fair amount of evidence that even infants brought up in some very difficult situations will nonetheless develop these forms of prosociality and cooperativeness, partly because they become especially important in those situations, even for solving the most basic problems or meeting the most basic needs. So I wouldn't think that the mere difficulty of the situation was sufficient to prevent this kind of learning. On the other hand, if the child is given the wrong incentives, they're also going to learn a whole bunch of other stuff, like you can't count on other people, you can't trust other people.

So put this from the standpoint of artificial agents. We want the artificial agents in our world, whether they're a companion for an elderly person or an autonomous vehicle or a telephone answering service system, to be sensitive to these kinds of moral considerations and capable of a degree of autonomy. If, for example, there is a system that's looking after an elderly person and some vital sign of the elderly person is showing a problem, and the person says, "I don't want to report that. I don't like having people know this information about me," or maybe they're concerned that the doctor will prescribe something that they won't like, I would hope to have systems that can in that situation think, "Is this the kind of thing that I should keep from the physician? It's the preference of this individual, but this preference may not be in the best interest of the individual in this case."

And so an autonomous system would be able to make that kind of assessment. It could get it wrong, could get it right, could learn from it, but I wouldn't want a system to simply take over wholesale the preferences of the person it is interacting with. And of course the same thing is going to be true with self-driving cars and with question-answering systems and so on. They will need a certain amount of autonomy in order to do those jobs effectively. And in order for them to have that autonomy, they'll have to have their own representations of the moral structures of situations and have the capacity to construct those.

I suspect that if we really do want to create intelligent systems that are capable of this kind of autonomous, self-critical, and critical moral thought, the way to do so is very much like the way children do so. And in so doing, we run the risk of creating autonomous systems that won't always agree with us. But have we done what's appropriate so that when they exercise that autonomy, their chance of getting things right is at least as good as our chance of getting things right? You could think of this in terms of an adversarial picture, where you're trying to see if you can discriminate between the moral judgments of the machine and the moral judgments of the individual, and the machine and the individual could be part of a learning process that improves the machine's overall model and generative model of situations.

Lucas Perry: So there would be the question of how you structure a system such that it can engage in moral learning in a way that would be broadly endorsed or would satisfy other ethical or meta-ethical principles that we have. That is double-edged insofar as if you screw it up, then the thing is autonomous and can disagree with you. And the capacity to disagree would either be detrimental, in the case in which it is wrong in its moral learning, or it would be enlightening for us, the world, and the machine if it were right about morality when we weren't. How do you think about and balance the risk between the possible enlightenment that may come from embedding AI systems with moral learning and the potential catastrophe if it's done too quickly and incorrectly?

Peter Railton: Yeah. I wish I had an answer. If you think about it, the existence of humans with malicious intentions means that if artificially intelligent systems don't have this kind of moral autonomy, they're going to be very willing servants. So you might say, "Well, there's a risk on the other side, which is that if they aren't capable of any kind of criticism or autonomy, then they will be much too willing, much too readily deployed, and much too manipulable by humans whose purposes, I'm afraid to say, are not always benign." If you were thinking about the problem of raising a child, you would say, "Well, I don't want to raise a child who simply takes orders. I want to raise a child who can raise questions as well."

I think our only defense against malicious humans with extremely intelligent systems at their disposal is to try to ally with intelligent systems to create a comparable counterforce. And that counterforce is going to be operating way past our understanding, because it's going to be in competition with systems that can operate extremely fast and take into account a large number of variables. And so we had better be building systems which, as they get further and further out in this kind of competition, have some kind of core where they are responsive to morally relevant features even at the far extent of their development.

And so if you think about it as trying to build a moral core, then that core can figure in their operation even as they become more and more intelligent. They can use the intelligence to gain information, perspective, and capacity to understand situations, which can improve their understanding. But if we don't do something like this, we, and other artificial systems, will really be prey to those who have and want to implement malicious and manipulative intentions. So I balance the risk partly by thinking that I can't think of a very good way to defend against the perils of malicious combinations of human and artificial intelligence other than to develop more trustworthy forms of human and artificial intelligence interaction. And that requires according these systems some autonomy and some trust.

Lucas Perry: That makes sense to me. And I think it addresses some important dimensions of the soon-to-be proliferation of AI.

Peter Railton: To me, one of the most exciting features of more recent developments in artificial intelligence is that they give us, for the first time, I think, a plausible model of intuitive knowledge: knowledge that could be implicit but nonetheless be highly structured, contain a great deal of information, and contain a capacity to engage in simulation and evaluation. So I would expect that the structure of moral knowledge could be like the structure of our common sense knowledge generally. It could be quite distributed. It could be quite a complicated system, not a system of extracted principles. There might be some general features that are important, and I think that's bound to be true. And that is true when these systems learn, but we don't have to think that the kind of competency they would have, if it isn't something like a system of principles, is therefore undisciplined and lacks power or reliability.

So for the first time, anyhow, I thought, here is a picture of how intuitive intelligence might look. Of course, we can't introspect the structure of such knowledge, and it does not have a readily introspectable propositional structure. But it is capable nonetheless of carrying and modeling and engaging in quite complex computations, simulations, action guidance, and control of motor systems in ways that look like intuitive intelligence. Now I realize we're a long way from the way the brain actually functions, but even having these models gives us a kind of proof of concept of the possibility of something like intuitive knowledge.

Lucas Perry: Right. So if we're building AI systems as willing slaves who optimize the preferences of whoever is able to embed those in the machine, there's no defense in that world against malevolent preferences other than not allowing the proliferation of AI to begin with.

Peter Railton: And we're already past that point. Enough has proliferated and there's enough inequality of wealth and power in the world to guarantee that other proliferation will take place. It's already the case that we can't count on keeping this genie in the bottle and obviously don't want to do so. I'd say we're now in the phase where we need to have an active, constructive program of starting to build AI agents that are actively responsive to morally relevant considerations, are good at solving coordination problems, are good at this kind of interaction and capable of the kind of insight needed to be potential moral agents.

Lucas Perry: Right. And you argue that as the systems inhabit increasingly social roles in society and are constantly interacting with other agents and with the world, it's increasingly important that they be sensitive to morally relevant features. Without this, again, malevolent humans or humans with misaligned values that are counter to most of the rest of humanity can abuse or use systems more freely if they're not already sensitive to morally relevant features. And that if there is an ecosystem of AIs, purely altruistic systems which are not tuned into morally relevant features can be abused by other AIs as well.

Peter Railton: Yes, that's right. One thing that's gotten me to feel some conviction about this possibility is that the one kind of experiment that I do run is the thought experiment. For years I've been running moral thought experiments in my moral philosophy classes. And in recent years, I've been able to do so using a system that allows students to confidentially record their answers to problems like moral dilemmas or questions about interpreting moral situations or motives. And what's impressed me over the years is how coherent and consistent these responses are.

And what leapt out, for example, from the familiar trolley problem was that mediating their moral judgments seemed to be a model of the agents that are involved, a model of what kind of agent would perform an action of a certain kind, and what kind of responses such an agent would receive from others in the community. Would they be trustworthy? Would they not be trustworthy? And so, instead of thinking there are just arbitrary differences in preference between throwing a switch and pushing someone off a footbridge, and there's no real principle there, and no one's found a principle to cover these cases, you can now think there's this intuitive competency people have in understanding situations and characters, what kinds of persons would respond in what ways in which situations, and what it would be like to have those persons in our community.

And once you look at it that way, you can find a tremendous amount of consistency in people's responses, which suggested to me that they are doing this kind of generative modeling of situations, and doing so in a way that does predict their actual judgments. And if I ask, "Well, why did you make that judgment?" they'll say, "I don't know. It was just an intuition."

Lucas Perry: Yeah. So in the thought experiment you're pointing to, a lot of people would flip the switch to divert the trolley to the track where there's only one person. Then you change it so that there's a person on a bridge who is sufficiently large that if you push them off the bridge, they will stop the trolley from killing five people on the track. The intuitive response you're pointing out is that people are less willing to push someone off a bridge than to flip a switch. And you might ask, well, what's really the difference? In the thought experiment, there's not much of a difference, but the morally relevant feature that is subtle and implicit in the intuition is that we don't want to live in a world where there are the kinds of people who have the capacity to push people off of bridges.

Peter Railton: In that kind of a setting, yes.

Lucas Perry: Yeah.

Peter Railton: And you can give them a whole array of other scenarios in which the agent would have to do something like pushing someone to a grisly death and where they will agree that it should be done, for example, in situations where self-defense is needed against a terrorist action. And again, if you ask them, "Well, would you trust an agent who would perform such an action?" the answer is that they would actually have more trust in such an agent. So again, they're modeling the situation not in response to this or that minor tweak of the situational features, but in terms of a quite deep understanding of the motivations and attitudes that are involved. And then if you go over to the psychological literature, you find that dispositions to give the push verdict in the footbridge case correlate more with antisocial behavior, with lack of altruism, with lack of perspective taking, and with indifference to harm than with altruism or any kind of generalized utilitarian perspective. So the psychologists seem to confirm the understanding that my students implicitly had of the situation.

Lucas Perry: What's relevant to extract here is that there are deep levels of morally salient features that human beings take into account, and that increasingly need to be modeled and understood by machine systems for them to operate successfully in the world.

Peter Railton: Yeah. And to be trustworthy. I'm one of those people who thinks emotion is not a magical substance either, and that artificial systems could have and acquire emotions. And part of the answer to the question of how you build a core that is resistant to certain types of manipulation is to look at how it's done in humans, and indeed in other animals, and discover that the affective system plays a pivotal role in just these kinds of situations. And so I suspect that's another avenue of development. And children's moral emotions undergo a similar kind of evolution, not only through their upbringing but through their direct experience, because the emotions are there before they're told what to feel. Indeed, how would you tell the child what to feel?

Lucas Perry: Are there any other points that you'd like to make to wrap up here on the advantages of reflecting on AIs that are sensitive to morally relevant features?

Peter Railton: I try to be as accurate as I can in understanding what we're learning from the literature on prosociality, both with regard to individual human development and with regard to human communities, going back and looking at hunter-gatherer communities. And even as there have been changes in morality, and I have emphasized that there have been changes over time, many of the kinds of features that people take to be morally relevant have been relatively constant. And you can think of the changes in our moral views that have taken place over the years as getting better and better at winnowing out the features that aren't really morally relevant, like gender, ethnicity, sexual orientation, and so on, because they can easily become culturally relevant without being morally relevant. Fortunately, we have the critical capacity as agents to challenge that.

Lucas Perry: Yeah, that makes sense. The core importance that I'm extracting from everything is the baseline importance of moral learning in general, and also of understanding and capturing what human normative processes are like, what they entail, and how they unfold. And participating in a world of humans requires both knowledge of moral learning and the ability to learn morally.

Peter Railton: And this is not saying that people will always behave well, in just the same way that acquiring linguistic competence doesn't mean people are always going to speak well, truthfully, or honestly, but rather that the competency will be acquired. One example that I like is sexual orientation. When I was growing up, it was considered fatal to someone's social identity to be discovered to be gay. And there were a great many beliefs about the characteristics of gay individuals. In the 1990s and so on, a large number of gay individuals were courageous enough to reveal their orientation. And what was discovered, what we all discovered, was that the world was full of gay individuals whom we admired, whom we had standard relationships with, who were excellent colleagues, coworkers, friends. We had therefore been operating on a bad dataset because we, and here I'm talking about heterosexuals, had had insufficient experience with gay individuals. And so we could believe all kinds of things about them.

So I would emphasize that if it's a learning system, it's going to be very sensitive to the data. And if the data's bad, the learning system is going to have a problem. So I don't think it's a magic solution, but I think the question to ask is, so how do we build on this? How do we provide more representative experiences and less biased samples so that the learning can take place and not pick up cultural biases?

Lucas Perry: Yeah, those are really big problems that exist today, and a lot of the solution right now is human beings having to do a lot of hard work on datasets. We can't keep that up forever. Something else is needed. I think this has been instructive about the importance of the structure of moral learning, and I want to pivot back to our discussion of metaethics, your conversations with Derek Parfit, what your metaethical view is, and how views on metaethical epistemology or metaphysics may bring to bear intuitions about what moral learning is like or what it might entail. It's Derek Parfit, right, who has essays on Does Anything Really Matter?

Peter Railton: Yes.

Lucas Perry: So I guess that's the question here, then, for this part of the conversation: does anything really matter? You were in conversation with Derek Parfit, and it seems like your views have converged, and that they differ in some ways from Peter Singer's, though it seems like you are all realists. Could you unpack and explain a little bit about the history here and what went down between you, Parfit, and Singer?

Peter Railton: Yeah, sure. I have to warn those who are listening: buckle up, this is going to have to be a philosophy talk, but I'm sure that many people have these philosophical questions themselves. So let's just begin with the title that Parfit chose for his masterwork, On What Matters. And you might say that mattering is the core notion of value. If you had a universe full of rocks, it would not matter to the rocks what happened. It would not matter to the rest of the universe what happened. And so there wouldn't be any positive or negative value in that universe. Introduce creatures for whom something matters, even if it's just as simple as nutrition or avoiding pain, and then you can begin to talk about states of affairs as being better or worse than one another, about improving or degrading the situation or the characteristics of the world.

And so mattering is core to the idea of value. And once we grasp that, we begin to realize that value is not some new entity in the world. It's not something we add to the world. Once you have mattering, then things will have value, both positive and negative. And of course, for different creatures, different things will matter. And learning what matters to a creature is understanding what would be good or harmful to that creature, and this of course includes humans. So I was very moved, when I was on a committee looking into questions of animal research, to learn that the veterinarians had learned a lot about what situations animals preferred and did what they could to try to give them situations in which they were happier, more lively, more disposed to cooperate and learn. And that means that they were trying to learn something about what matters to a rat.

And we now know a fair amount about what matters to a rat. Company matters, exercise, the capacity to engage in activities, to build nests. And since these things matter to rats, we can give rats a good or a bad existence by thinking about what does matter to rats. Now, what matters to rats is different from what matters to humans, but the basic idea is the same. So there's value there, and it's thanks to the existence of creatures for whom something matters that value comes into existence in the world. That's a perfectly naturalistic perspective, treating value as something that is realized by natural states of affairs in the world. Now it turns out that even someone who's an arch non-naturalist like Derek Parfit agrees that pain is bad, not because it has the non-natural property of being disvaluable, but because of what it's like in its natural features; those features suffice to make the pain bad.

And if they didn't suffice to make the pain bad, there would be no value feature we could sprinkle on it that would make it bad. But given that it has those features, there is also no value feature we can sprinkle on it that will make it good. And so Parfit and I can agree that non-naturalism is important in ethics, but not because the world is populated with non-natural entities like values. That's a widespread confusion. It's reifying a notion of value as if it were some kind of new domain of entities. And naturally, once you've done that, it becomes very unclear how we learn about these entities and what relationship they have to the natural world. If instead you think, "No, value is something that is brought into existence by certain relational features in the natural world," then you can say, "Ah, that's common ground between Derek Parfit and myself."

And if Derek's explaining what's bad about pain, he'll give the same explanation that I would give. So we agree on that. In the case of pain, the word "pain" is really used for two different things. It's used for certain types of physical sensation, and it's used for suffering. That physical sensation isn't always suffering. For example, when you put hot sauce on your food, you fire up pain circuits, but you enjoy that. You may seek the burn of exercise. And so there are times when the physical sensation of pain is sought, liked, desirable. It's part of good experiences. That shows that pain can matter in different ways. It's the mattering where the value resides, not the physical sensation in itself. So the mattering is a relationship between a subjectivity, an agent, and the physical sensation, and it could be positive or negative in a given case, but the value resides in that relationship.

Lucas Perry: But they're just two contents of consciousness, right? There is the content of consciousness of the sensation of pain on my arm if I scratch it, and I might derive another sensation from that sensual pain, that is pleasure. Wouldn't the goodness here need to come from this higher level, more pristine pleasure that I gain from the pain, which is more of an emotion and that which is intuitive to the other sensation or the other content of consciousness?

Peter Railton: I think you're right to bring in higher-level mental states as well, because part of the reason why pain in certain circumstances is desirable is the representation that you have of it. And this is true of many features of the world: it is because you understand them in certain ways that they produce in you the positive or negative experience they do. And if you ask a psychologist, the positivity and the negativity in the mind do not reside in the impulses of the pain system or the pleasure or reward system. They reside in the affective system, which encodes value as positive or negative. And it encodes as well the behaviors and responses that are characteristic of positive and negative value: positive is approach, negative is withdrawal. Fear involves a certain distinctive suite of responses. Anger involves another distinctive suite of responses. But the affective system is where the value is encoded, and that's the common currency of value in the brain.

So that's where we should be looking. And it's the affective system which is the root of our emotions, whether they're aroused emotions like anger or fear or non-aroused emotions like assurance and trust. That system encodes this relational feature of value. You're quite right to think that we should move up a level, and in doing so, we encounter the affective system and its properties. And it's a system that we share with all of our mammalian relatives and with other species as well. It's an evolutionarily highly conserved system. And that's because it is the core of valuation, and valuation is a core activity of living creatures because they're going to base their actions on value assignments. You're right to think that in the mind you will have tiers and that you need to find the right level in order to understand what value or disvalue looks like in the mind.

Lucas Perry: So there's the view where some content of consciousness is clearly seen as bad given its nature. If some state of consciousness is like something, from a consciousness realist perspective, and it is also natural because it's part of the natural world, since it's a physical fact and there are facts about consciousness, then value comes in from what it's like to be conscious. Whereas it seems like you're bringing in the more computational and physical side of things, like an evaluative affective system, which may not be separate from how things are experienced in consciousness. But I feel confused about these two different levels and where the 'what matters' comes from.

Peter Railton: Well, yes, you're quite right. There are views about value on which only conscious states could have value or disvalue. I don't particularly hold such a view. I think that we are intrinsically concerned with non-conscious states, and that there is intrinsic value in them. And that's why I wouldn't sign up for the experience machine. The experience machine could supposedly provide an unending stream of positive conscious states, so why wouldn't I sign on for it? Well, because the actual content of my values is not that I have certain conscious states; it's that I have certain relations with people and with the external world, that I have a certain engagement with things that have a consciousness and that matter. And so I wouldn't agree that the only locus of value or disvalue is conscious states.

Lucas Perry: So then, from a cosmological and evolutionary perspective, there has been the development and arising of sentient creatures on this planet who have ever-more-complex neural algorithms for modeling themselves and the world, making predictions, and interacting with it. And among these evolved architectures are evaluative ones, which take the shape of valuing or disvaluing certain aspects of the world. And so that is enough for you for talking about intrinsic value. You feel like you don't need to bring consciousness into it. You're fine with just talking about the computation.

Peter Railton: Oh, I think consciousness plays a role because one of the good making features is a positive state of consciousness. It's just, it's not the only one. And so there are differences in the world that would not show up as differences in conscious states. And that's what the experience machine is meant to show, but which would nonetheless constitute things that matter in the sense of matter that we were just describing, namely, that these are objects of concern, love, affection, interest on the positive side, objects of dislike, disvaluation, disapproval on the negative side. I don't think there's any reason to think that only conscious states can be locuses of value, but it may be that consciousness plays a role.

Lucas Perry: So what are these other good making features and why are they good making?

Peter Railton: Well, take, for example, a preference satisfaction theory. I would prefer, other things being equal, that after I'm gone, my children have lives that they find meaningful. Now is that because I want to have the positive experience of thinking that their lives are meaningful? No, I want them to have those lives. And so it's part of the content of my informed preferences, let's say preferences that would survive information, that the world be such that my children have a certain kind of life. And you say, "Well, doesn't the meaningfulness of their life just consist in their conscious states?" And I'd say, "Well, no, not at all. I would not think that a life in an experience machine would have the same meaning as a life with a similar stream of conscious states that was lived in engagement with the world."

And so when I want them to have meaningful lives, I want them to have lives in which they act in ways that matter to them, and in which they do things that matter to themselves and to others. And their intrinsic preferences, like my intrinsic preferences, aren't just going to be for conscious states. And so it may be that you need something like preference or interest to get value, or mattering, off the ground, but the content of what interests us, or the content of what our preferences are, won't just be conscious states. So you can't satisfy my preferences just by giving me conscious states, for example.

Lucas Perry: So I don't share that intuition with you. I still don't understand why you feel that something like a preference is good making. I guess that just comes down to intuitions. I mean, someone could ask me why I think consciousness is the only thing that is good making. But I don't know, what is a preference? It's like a concept about some computational architecture that prefers some state of the world over another. But when you pass away, for example, your preference goes away. So why does it still need to be respected? I mean, we're getting into some deep waters here, but is the short version of this that when you run these philosophical thought experiments, your intuitions aren't satisfied by consciousness being necessary and sufficient for value?

Peter Railton: Well, all of our knowledge, whether it's knowledge of value or of the external world, can be pushed back to a point at which, again, we can't give some further derivation of the assumption that we're making. And so my thinking here is that it seems to me extremely plausible that the one intelligible notion I can get of something like value is that there can be a subjectivity such that states of affairs can go better or worse for that subjectivity. And then value would consist in that which makes the states of affairs better or worse for that individual. And then I ask myself, well, does that satisfy our concept of value?

Well, value should have various different features, and we can list those. It should be something that, when we understand it, is intrinsically motivating. It should apply to the sorts of things that we ordinarily identify as being values. It should capture a certain role in the guidance of action. It should be something like a goal in action. We should see it as structuring the behavior of individuals. And when I look at all those conditions, I think, yeah, this satisfies those conditions. It's not a proof. It's just saying that if we lay down the conditions that we would give for something satisfying the concept of value, these states do indeed satisfy those conditions, and many other candidate states don't. But I can't tell you, for example, that you shouldn't have some other concept of calue instead of value and ask what would satisfy calue, in the same way that I can't, in the case of knowledge of the external world, give you a derivation of the importance of knowledge as opposed to shmowledge. You can operate with the concept of knowledge, see what it requires, and see whether it would apply to what we are doing.

But that's not a proof that there isn't another scheme of shmowledge of which the same thing could be said. So that's where we get down to these fundamental assumptions, and the question is whether they can be non-arbitrary. Well, they can be, for example, if, when applying them, you can be put in a situation where you give them up. A concept that we had, one we thought we were happy with, turns out to be confused. Or it turns out that the only things that would satisfy the concept are things to which we ordinarily think the concept doesn't apply, so we think there's a mismatch between the criteria and the paradigm cases. So it's not arbitrary if you're willing to use it critically, but it can't be proven.

Lucas Perry: Okay. That was a bit of a side path from where you were with Parfit. I was curious about what you really meant when you said that you and he were agreeing about value being some natural thing, instead of something we have to sprinkle onto the world.

Peter Railton: The way I would put it, the disagreement that I have with Parfit is a disagreement at the conceptual level. Initially, at any rate, it looked like we had a conflict of opinions, because it looked as if he was committed to there being in the world these non-natural features, and to their somehow explaining the role that value has in our lives. And I couldn't understand what that would mean. But he was perfectly content to say, "No, the good making features are these natural features. They explain the role that value has in our lives, but our concept of value is a non-natural concept." And what does it mean to say that? Well, the same situation, the same configuration of matter, could be described with physical concepts, chemical concepts, or biological concepts: "Oh, it's an organism." It could be described in social concepts: "It's a person." Any given situation can be characterized in various different conceptual systems.

And it can be argued, plausibly, that you can't reduce, for example, the conceptual system of biology to the conceptual system of particle physics, because biology deals in functions, reproduction, metabolism, and so on, and there's no one-to-one correspondence, no easy correspondence, between those functions and any particular physical realization. You could have living beings made out of carbon. You could have living beings made out of silicon. So the concept of a living being, the concept of an organism, is a concept of biology. It's a way to organize the description and explanation of the world, and biology is conceptually not reducible to physics. That doesn't mean biologists can ignore physics, because they think, most of them anyway, that what satisfies their biological concepts are physical systems. And so it's an important question what kinds of physical systems would satisfy concepts like self-replication and so on.

And so they do microbiology and they study the physical systems that do satisfy these concepts. But the point is that the conceptual system has a degree of autonomy from the physical system, and that even discovering that self-replicating molecules have a certain chemical composition in this world is not discovering that the concept of a self-replicating organism is simply a physical concept. Parfit has the same view about normative concepts. He and I agree about what pain is and what makes pain bad, but he says you could describe a situation either, as you were saying, in terms of some physical or biochemical processes, or you could describe it as bad, or as good, or as something that ought not to exist. And that's another level of conceptual characterization. And his thought is that that level of conceptual characterization can't be reduced to the concepts of the natural order.

So there is an element in normative concepts that's always beyond what is translatable, without loss of meaning, into the natural. Once one recognizes that, you can be as naturalistic as you like about the nature of value and also believe that the concept of value is a non-natural concept, just as you can be as physicalist as you like about the fundamental furniture of the universe and still believe that the biological level of description is not reducible to the physical level of description. The same debate went on when people were thinking about life. In the 19th century, we find people thinking, well, there's got to be this special élan vital or spirit or something like that. You can't just take a bunch of matter and put it together and have life. By and by, biochemistry develops and people realize that, actually, you can put a bunch of matter together and have life.

And the same thing is true with value. You don't need some value-vital, some kind of further substance to add to the world. You can put together the natural stuff of the world and get value. Once you frame it that way, Parfit and I actually agree, because when he talks about the irreducibility of the normative, he really means, or should mean, and I think agrees that he means, a conceptual irreducibility. And once we establish that, then I can say, "Yes, I agree with you, normative concepts aren't definable entirely in terms of non-normative concepts; they involve some idea of ought or some idea of value that isn't present in the non-normative." But my interest as a philosopher and metaethicist is in what kinds of natural conditions satisfy these concepts, and in how that makes it possible for us to have knowledge in a normative conceptual scheme, like ethics or the theory of value. So that's where I do my work. His work is done in carefully distinguishing the concepts.

Lucas Perry: So there is reality as it is, the base reality, base metaphysics, call it ultimate reality or whatever, and all human conceptualization supervenes upon that, because it's couched within that context and is identical to it. Yet that conceptualization, you argue, is lossy with respect to ultimate reality, because it doesn't necessarily carve reality at the joints, but that conceptual structure is still supervenient upon it. And at the level of conceptualization, there are facts about the world that can be satisfied or not satisfied, which will make some proposition true or false.

Peter Railton: Yeah.

Lucas Perry: So you're arguing that value isn't part of metaphysical bedrock, but metaphysical bedrock creates neural architectures that create concepts that contain within them necessary and sufficient conditions for being satisfied. And when agents are able to gain clarity with one another over concepts and satisfying necessary and sufficient conditions, then they can have concrete discussions about ethics.

Peter Railton: That would be one common basis. And so the image that Parfit gave in his first volume of On What Matters was that he thought, ultimately, you could see the utilitarian and the Kantian as climbing two different sides of the same mountain, so that they would eventually meet at the summit. I suggested to him, well, in metaethics, the same is the case. I'm a naturalist; I'm climbing one side of the mountain. You're a non-naturalist; you're climbing the other side of the mountain.

But as our views develop, and as we better understand the different elements of the views, they're actually going to converge, such that as we approach the summit, we aren't really disagreeing with each other. And he accepted that picture. I would only add one thing to what you were saying by way of summary: our concepts typically don't give us necessary and sufficient conditions. They are more open-ended and open-textured than that. And that's part of why we can have unending debates about questions like value and so on.

But you mentioned truth, and I might say that truth is another very good example of a concept that's not reducible to a concept of physics, because truth presupposes representations, and representations are characterizable not in terms of their physical constituents, but in terms of their role in thought. And so people who are skeptical about value because they say, "I don't see where value is in the world," should be equally skeptical about truth. Because truth is not some new substance we add. If there's a representation and it accurately reflects the world, then we have truth. So truth, again, is a relational matter between something like a representation and, in this case, a state of the world, and it's when that relationship obtains that you get truth.

Lucas Perry: Right, but that's truth in the epistemological agent centered sense, but then there's the more metaphysical view about truth, where there are mind independent facts. And they're true, whether or not we know anything about them. Maybe the same distinction is important here to make. There are potentially moral truths within the conceptual framework that we're participating in. And it feels weird to me to call that moral realism. But then there's another claim where there's mind independent truths about morality like that there's an intrinsic quality to suffering that is what bad means. Does that make sense?

Peter Railton: I think you've put things in a very good way. One of the features of the setup that I was describing is that it's very easy to slide from a position like, for example, "whenever a value judgment obtains, some or other natural state obtains," to thinking that the natural state actually is the normative fact. It isn't; it satisfies the concept. And so you could have the concept of the good, and it could be that there are eternal truths about the good, I suspect. That's a reasonable candidate, just as there can be eternal truths in mathematics. The claim isn't that the conceptual domain is somehow identical with the natural domain. It supervenes, but it's not a relationship of identity. And the language in which those claims are stated, and the way in which we adjudicate them, might be, as in the case of mathematical claims, quite a priori.

And that's where Parfit's view and mine differ, and Singer's likewise, because they think you can do this a priori in a way that I don't think you can. But that's a question in epistemology. It doesn't require a different metaphysics in order to have that view. So you can be a physicalist and believe that there is mathematical truth. And that's because, for example, you think that mathematical truths are true in virtue of a set of axioms, definitions, and rules of inference. And so they are made true not by distributions of molecules, but by logical relationships that can be specified in terms of axioms and rules.

Lucas Perry: Okay. So I still feel a little bit confused about why your view is a kind of moral realism if it requires no strong metaphysical view. Other moral realists that I'm familiar with hold a strong metaphysical view about suffering and joy in consciousness as being the intrinsic valence carriers of value.

Peter Railton: Well, I'm not sure about the last part of your question; I'll have to think about how to interpret that. But am I a realist about organisms if I believe that the concept of an organism is distinct from any particular physical instantiation? Am I prevented from being a realist about organisms because I think the organismic level of description is irreducible to the physical level of description? You see, actually, no: because you think that the concept of an organism is satisfied by some physical system, you're a realist about organisms; you think there are organisms. To me that's a perfectly realistic position. A non-realist would say, "Well, I guess there aren't any organisms then, because they're not part of the fundamental furniture of the universe."