FLI OCTOBER, 2018 NEWSLETTER

Three New FLI Podcasts

Peter Singer on moral realism, Martin Rees on the planet’s greatest threats, and Alexander Verbeek and John Moorhead on the recent IPCC report

AI Alignment Podcast: On Becoming A Moral Realist

with Peter Singer

Are there such things as moral facts? If so, how might we be able to access them? Peter Singer started his career as a preference utilitarian and a moral anti-realist, and then over time became a hedonic utilitarian and a moral realist. How does such a transition occur, and which positions are more defensible? How might objectivism in ethics affect AI alignment? What does this all mean for the future of AI?

Peter Singer is a world-renowned moral philosopher known for his work on animal ethics, utilitarianism, global poverty, and altruism. He’s a leading bioethicist, the founder of The Life You Can Save, and currently holds positions at both Princeton University and The University of Melbourne.

Topics discussed in this episode include:

- Peter’s transition from moral anti-realism to moral realism

- Why emotivism ultimately fails

- Parallels between mathematical/logical truth and moral truth

- Reason’s role in accessing logical spaces, and its limits

- Why Peter moved from preference utilitarianism to hedonic utilitarianism

- How objectivity in ethics might affect AI alignment

To listen to the podcast, click here, or find us on SoundCloud, iTunes, Google Play and Stitcher.

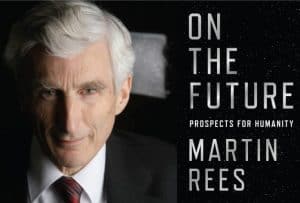

Martin Rees on the Prospects for Humanity: AI, Biotech, Climate Change, Overpopulation, Cryogenics, and More

How can humanity survive the next century of climate change, a growing population, and emerging technological threats? Where do we stand now, and what steps can we take to cooperate and address our greatest existential risks?

In this special podcast episode, Ariel speaks with Martin Rees about his new book, On the Future: Prospects for Humanity, which discusses humanity’s existential risks and the role that technology plays in determining our collective future. Martin is a cosmologist and space scientist based at the University of Cambridge.

Topics discussed in this episode include:

- Why growing inequality could be our most underappreciated global risk

- Earth’s carrying capacity and the dangers of overpopulation

- Space travel and why Martin is skeptical of Elon Musk’s plan to colonize Mars

- The ethics of artificial meat, life extension, and cryogenics

- How intelligent life could expand into the galaxy

- Why humans might be unable to answer fundamental questions about the universe

Can We Avoid the Worst of Climate Change?

with Alexander Verbeek and John Moorhead

“There are basically two choices. We’re going to massively change everything we are doing on this planet, the way we work together, the actions we take, the way we run our economy, and the way we behave towards each other and towards the planet and towards everything that lives on this planet. Or we sit back and relax and we just let the whole thing crash. The choice is so easy to make, even if you don’t care at all about nature or the lives of other people. Even if you just look at your own interests and look purely through an economical angle, it is just a good return on investment to take good care of this planet.” – Alexander Verbeek

Alexander Verbeek is a Dutch diplomat and former strategic policy advisor at the Netherlands Ministry of Foreign Affairs. He created the Planetary Security Initiative. John Moorhead is President of Drawdown Switzerland, an “act tank” that supports Project Drawdown and other science-based climate solutions that reverse global warming.

Topics discussed in this episode include:

- The crucial difference between 1.5°C and 2°C of global warming

- Why the economy needs to fundamentally change to save the planet

- Climate change’s relation to international security problems

- How we can avoid runaway climate change and a “Hothouse Earth”

- Drawdown’s 80 existing technologies and practices to solve climate change

- “Trickle up” climate solutions — why individual action is just as important as national and international action

- What all listeners can start doing today to address climate change

To listen to the podcast, click here, or find us on SoundCloud, iTunes, Google Play and Stitcher.

IPCC 2018 Special Report Paints Dire — But Not Completely Hopeless — Picture of Future

By Ariel Conn

The recent IPCC report concludes that if society continues on its current trajectory, even if the world abides by the Paris Climate Agreement, the planet will hit 1.5°C of warming within a matter of decades, possibly within the next 12 years. And every additional half degree of warming is expected to bring even more extreme effects.

By Kirsten Gronlund

There are already a number of do-it-yourself (DIY) genome editing kits on the market, and these relatively inexpensive kits allow anyone, anywhere, to edit DNA using CRISPR technology. Do these kits pose a real security threat? Millett explains that the level of risk can be assessed using two distinct criteria: likelihood and potential impact.

Trump To Pull US Out Of Nuclear Treaty

By Jolene Creighton

When the Intermediate-Range Nuclear Forces (INF) Treaty was signed in 1987 by U.S. President Ronald Reagan and Soviet leader Mikhail Gorbachev, it led to the elimination of nearly 2,700 short- and medium-range missiles. More significantly, it helped end a dangerous nuclear standoff between the two nations, and the trust it fostered played a critical part in defusing the Cold War.

What We’ve Been Up to This Month

Richard Mallah and Lucas Perry were interviewed by George Gantz and the Long Now Boston audience about FLI and the future of AI alignment.

Ariel Conn spoke about ethical issues in AI and machine learning with CODATA, CU Boulder’s team of data scientists, machine learners, and statisticians.

FLI in the News

VICE: The Dawn of Killer Robots

FORBES: Is Black Box Human Better Than Black Box AI?

GLOBAL TIMES: China’s AI involvement needed for better global collaboration: experts

If you’re interested in job openings, research positions, and volunteer opportunities at FLI and our partner organizations, please visit our Get Involved page.

Highlighted opportunity:

The Centre for the Study of Existential Risk (CSER) invites applications for an Academic Programme Manager.