FLI August 2017 Newsletter

Leaders of Top Robotics and AI Companies Call for Ban on Killer Robots

Leaders from AI and robotics companies around the world have released an open letter calling on the United Nations to ban autonomous weapons, often referred to as killer robots.

“Lethal autonomous weapons threaten to become the third revolution in warfare. Once developed, they will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend.”

Check us out on SoundCloud and iTunes!

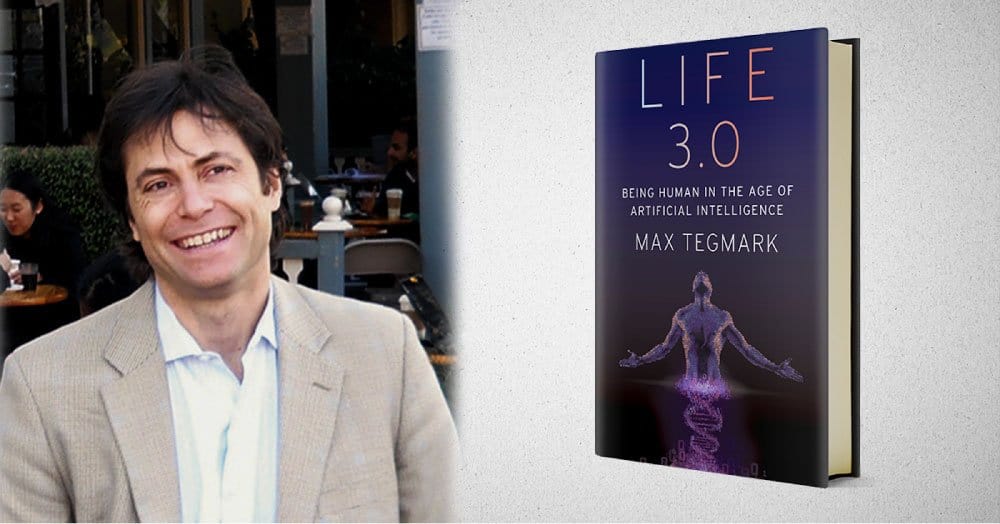

Podcast: Life 3.0 – Being Human in the Age of Artificial Intelligence

with Max Tegmark

What will happen when machines surpass humans at every task? While many books on artificial intelligence focus on the near-term impacts of AI on jobs and the military, they often avoid critical questions about superhuman artificial intelligence, such as: Will superhuman artificial intelligence arrive in our lifetime? Can and should it be controlled, and if so, by whom? Can humanity survive in the age of AI? And if so, how can we find meaning and purpose if super-intelligent machines provide for all our needs and make all our contributions superfluous?

In this podcast, Ariel speaks with Max Tegmark about his new book Life 3.0: Being Human in the Age of Artificial Intelligence. Max is co-founder and president of FLI, and he’s also a physics professor at MIT, where his research has ranged from cosmology to the physics of intelligence. He’s currently focused on the interface between AI, physics, and neuroscience. His recent technical papers focus on AI, and typically build on physics-based techniques. He is the author of over 200 publications as well as an earlier book, Our Mathematical Universe: My Quest for the Ultimate Nature of Reality.

Accompanying the release of Life 3.0, Max appeared as a guest on Sam Harris’ Waking Up podcast. In this episode, Sam and Max discuss the nature of intelligence, the risks of superhuman AI, a nonbiological definition of life, the substrate independence of minds, the relevance and irrelevance of consciousness for the future of AI, near-term breakthroughs in AI, and other topics.

ScienceFriday: What Would An A.I.-Influenced Society Look Like In 10,000 Years?

Physicist Max Tegmark, author of the book Life 3.0: Being Human in the Age of Artificial Intelligence, contemplates how artificial intelligence and superintelligence might reshape work, justice, and society in the near term as well as 10,000 years into the future.

NPR THINK: When The Machines Are Smarter Than Us

MIT physics professor Max Tegmark talks with host Krys Boyd about tapping into the positives of A.I. while protecting our humanity.

Ian Sample speaks with Professor Max Tegmark about the advance of AI, the future of life on Earth, and what happens if and when a ‘superintelligence’ arrives.

FLI’s Featured Videos: Check us out on YouTube!

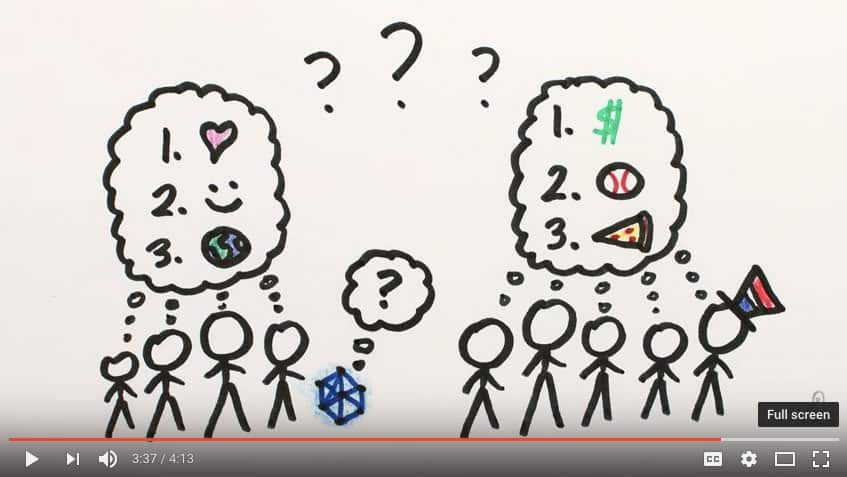

Max partnered again with MinutePhysics to create an animated short about the risks and benefits of advanced artificial intelligence, based on the discussion in Life 3.0.

Max Tegmark and his wife Meia discuss his new book on their deck, which happens to be the spot where Max wrote the majority of Life 3.0.

In this special panel from FLI’s Beneficial AI conference in Asilomar, great minds such as Nick Bostrom, Ray Kurzweil, Stuart Russell, Elon Musk, Demis Hassabis, David Chalmers, Bart Selman and Jaan Tallinn discuss the possibility of superintelligence and what that might mean for the future of our species. This version has been updated to improve audio from our original video of the panel.

This Month’s Most Popular Articles

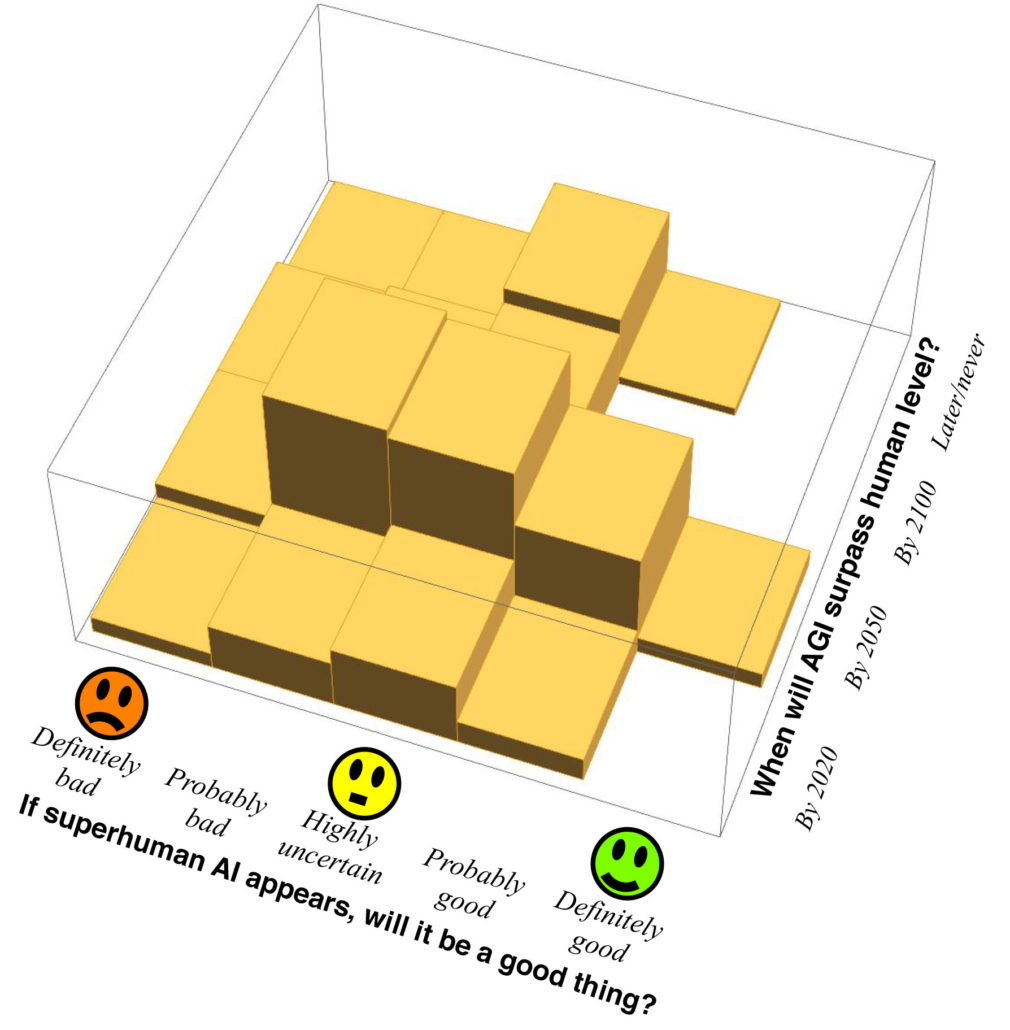

Max Tegmark’s new book on artificial intelligence, Life 3.0: Being Human in the Age of Artificial Intelligence, explores how AI will impact life as it grows increasingly advanced, perhaps even achieving superintelligence far beyond human level in all areas. For the book, Max surveys experts’ forecasts and explores a broad spectrum of views on what will or should happen.

But it’s time to expand the conversation. If we’re going to create a future that benefits as many people as possible, we need to include as many voices as possible. And that includes yours! This page features the answers from the people who have taken the survey that goes along with Max’s book. To join the conversation yourself, please take the survey here.

“The real risk with AGI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.” This excerpt is from Max Tegmark’s new book, Life 3.0: Being Human in the Age of Artificial Intelligence. You can join and follow the discussion at ageofai.org.

In this article, Max explores the top 10 things we need to know about Artificial Intelligence as AI becomes a reality we will all need to deal with.

Portfolio Approach to AI Safety Research

By Viktoriya Krakovna

Long-term AI safety is an inherently speculative research area, aiming to ensure the safety of advanced future systems despite uncertainty about their design, algorithms, or objectives. It thus seems particularly important to have different research teams tackle the problems from different perspectives and under different assumptions. While some fraction of the research might not end up being useful, a portfolio approach makes it more likely that at least some of us will be right.

Can AI Remain Safe as Companies Race to Develop It?

By Ariel Conn

Race Avoidance Principle: Teams developing AI systems should actively cooperate to avoid corner cutting on safety standards.

Continuing our discussion of the 23 Asilomar Principles, researchers weigh in on the importance and challenges of collaboration between competing AI organizations.

How to Design AIs that Understand What Humans Want

By Tucker Davey

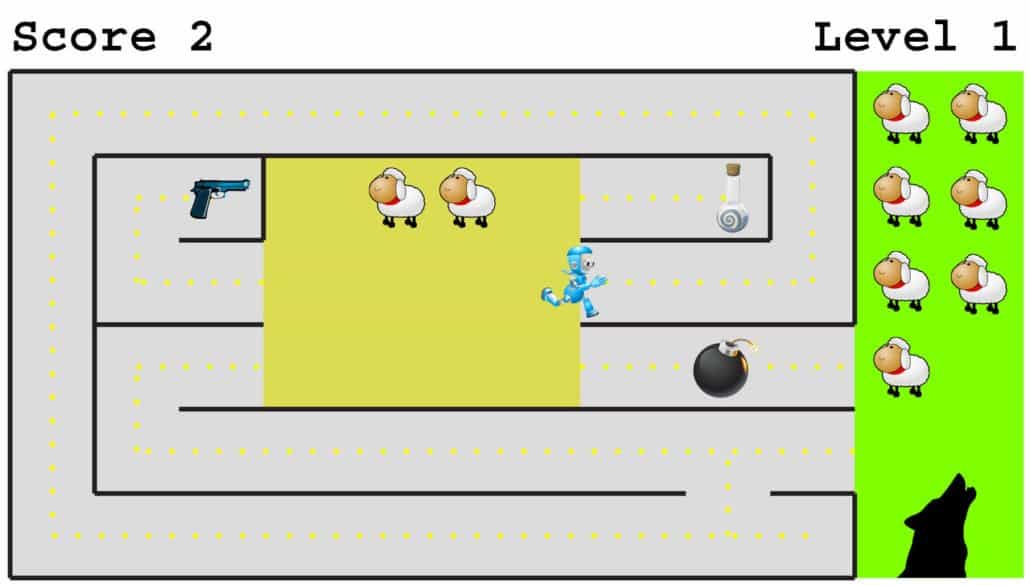

As artificial intelligence becomes more advanced, programmers will expect to talk to computers like they talk to humans. Instead of typing out long, complex code, we’ll communicate with AI systems using natural language. However, with current programming models, computers are literalists: instead of reading between the lines and considering intentions, they just do what’s literally true, and what’s literally true isn’t always what humans want.

It’s not hard to imagine situations in which this tendency for computers to interpret statements literally and rigidly can become extremely unsafe.

What We’ve Been Up to This Month

Viktoriya Krakovna, Richard Mallah and Anthony Aguirre attended EA Global in San Francisco this month. The effective altruism community’s annual conference, this three-day event featured speakers from the Open Philanthropy Project, 80,000 Hours, AI Impacts, Google DeepMind, the Future of Humanity Institute, MIRI, and many other high-impact organizations.

FLI in the News

Open Letter on Autonomous Weapons

BBC NEWS: Is ‘killer robot’ warfare closer than we think?

“More than 100 of the world’s top robotics experts wrote a letter to the United Nations recently calling for a ban on the development of ‘killer robots’ and warning of a new arms race. But are their fears really justified?”

Life 3.0

NATURE: Artificial intelligence: The future is superintelligent

“Stuart Russell weighs up a book on the risks and rewards of the AI revolution.”

THE VERGE: A physicist on why AI safety is ‘the most important conversation of our time’

“Nothing in the laws of physics that says we can’t build machines much smarter than us”

SCIENCE: A physicist explores the future of artificial intelligence

“Whether it’s reports of a new and wondrous technological accomplishment or of the danger we face in a future filled with unbridled machines, artificial intelligence (AI) has recently been receiving a great deal of attention. If you want to understand what the fuss is all about, Max Tegmark’s original, accessible, and provocative Life 3.0: Being Human in the Age of Artificial Intelligence would be a great place to start.”

Get Involved

FLI is seeking a full-time hire to lead development and implementation of AI policy initiatives.

If you or someone you know is interested, please get in touch!

FHI is seeking a Senior Research Fellow on AI Macrostrategy, to identify crucial considerations for improving humanity’s long-run potential. Main responsibilities will include guidance on our existential risk research agenda and AI strategy, individual research projects, collaboration with partner institutions and shaping and advancing FHI’s research strategy.