Are we close to an intelligence explosion?

Intelligence explosion, singularity, fast takeoff… these are a few of the terms given to the surpassing of human intelligence by machine intelligence, likely to be one of the most consequential – and unpredictable – events in our history.

For many decades, scientists have predicted that artificial intelligence will eventually enter a phase of recursive self-improvement, giving rise to systems beyond human comprehension, and a period of extremely rapid technological growth. The product of an intelligence explosion would be not just Artificial General Intelligence (AGI) – a system about as capable as a human across a wide range of domains – but a superintelligence, a system that far surpasses our cognitive abilities.

Speculation is now growing within the tech industry that an intelligence explosion may be just around the corner. Sam Altman, CEO of OpenAI, kicked off the new year with a blog post entitled Reflections, in which he claimed: “We are now confident we know how to build AGI as we have traditionally understood it… We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word”. A researcher at that same company referred to controlling superintelligence as a “short term research agenda”. Another’s antidote to online hype surrounding recent AI breakthroughs was far from an assurance that the singularity is many years or decades away: “We have not yet achieved superintelligence”.

We should, of course, take these insider predictions with a grain of salt, given the incentive for big companies to create hype around their products. Still, talk of an intelligence explosion within a handful of years extends beyond the AI labs themselves. For example, Turing Award winners and deep learning pioneers Geoffrey Hinton and Yoshua Bengio both expect superintelligence in as little as five years.

What would this mean for us? The future becomes very hazy past the point that AIs are vastly more capable than humans. Many experts worry that the development of smarter-than-human AIs could lead to human extinction if the technology is not properly controlled. Better understanding the implications of an intelligence explosion could not be more important – or timely.

Why should we expect an intelligence explosion?

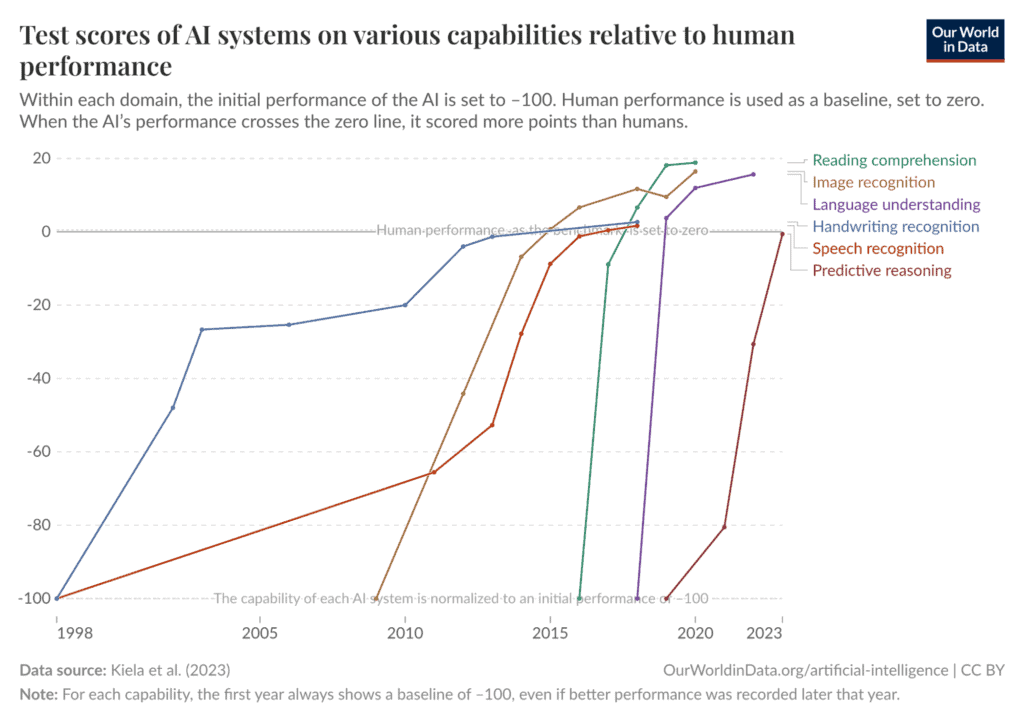

Predictions of an eventual intelligence explosion are based on a simple observation: since the dawn of computing, machines have been steadily surpassing human performance in more and more domains. Chess fell to the IBM supercomputer Deep Blue in 1997; image recognition on the ImageNet benchmark to deep neural networks in 2015; the board game Go to DeepMind’s AlphaGo in 2016; and poker to Carnegie Mellon’s Libratus in 2017. In the past few years, we’ve seen the rise of general-purpose Large Language Models (LLMs) such as OpenAI’s GPT-4, which are becoming human-competitive at a broad range of tasks with unprecedented speed. LLMs are already outperforming human doctors at medical diagnosis. OpenAI’s model ‘o1’ exceeds PhD-level human accuracy on advanced physics, biology and chemistry problems, and ranks in the 89th percentile (the top 11%) on competitive programming questions hosted by the platform Codeforces. Just three months after o1’s release, OpenAI announced its ‘o3’ model, which achieved more than double o1’s Codeforces score, and skyrocketed from just 2% to 25% on FrontierMath, one of the toughest mathematics benchmarks in the world.

As the accompanying graphic shows, over time AI models have been developing new capabilities more quickly, sometimes jumping from zero to near-human-level within a single generation of models. The gap between human and AI performance is closing at an accelerating pace.

These advances in capability are being accelerated by the ever-decreasing cost of computation. Moore’s Law, an extrapolation first posited by engineer Gordon Moore in 1965 and revised by him in 1975, has held broadly true ever since: it states that the number of transistors per silicon chip doubles roughly every two years. In other words, computing power has been getting both more abundant and cheaper, enabling us to train larger and more powerful models with the same amount of resources. Unless something happens to derail these trends, all signs point to AIs eventually outperforming humans across the board.
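To make the compounding behind a doubling law concrete, here is a minimal sketch. The function name `growth_factor` and the ten-year horizon are illustrative assumptions rather than anything taken from the sources cited in this article, and the doubling period is left as a parameter because estimates of it differ.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` if a quantity doubles
    once every `doubling_period_years` (idealised, steady doubling)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    horizon = 10  # years; an arbitrary horizon chosen for illustration
    for period in (1.0, 2.0):
        factor = growth_factor(horizon, period)
        print(f"Doubling every {period:g} year(s): x{factor:,.0f} after {horizon} years")
```

Over ten years, a two-year doubling period compounds to a 32-fold increase, while a one-year period compounds to a 1,024-fold increase. Real hardware trends are less regular than this idealised curve, so the numbers are best read as an intuition for exponential growth rather than a forecast.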

How could an intelligence explosion actually happen?

Keen readers may have noticed that the above doesn’t necessarily imply an intelligence explosion, just a gradual surpassing of humans by AIs, one capability after another. There’s something extra implied by the term “intelligence explosion” – that it will be triggered at a discrete point, and lead to very rapid and perhaps uncontrollable technological growth. This would mark a point of no return: if humanity lost control of AI systems at that point, it would be impossible to regain it.

How could this actually happen? As we’ve explained, we have every reason to expect that AI systems will eventually surpass human level at every cognitive task. One such task is AI research itself. This is why many have speculated that AIs will eventually enter a phase of recursive self-improvement.

Imagine that an AI company internally develops a model that outperforms its top researchers and engineers at the task of improving AI capabilities. That company would have a tremendous incentive to automate its own research. An automated researcher would have many advantages over a human one – it could work 24/7 without sleep or breaks, and likely self-replicate (Geoffrey Hinton has theorised that advanced AIs could make thousands of copies of themselves to form “hive minds”, so that what one agent learns, all of them do). It takes about 100 to 1000 times more computing power to train an AI model than to run one, meaning that once an automated AI researcher is developed, vastly more copies could be run in parallel. Dedicated to the task of advancing the AI frontier, these copies could likely make very fast progress indeed. Precisely how fast is debated – one particularly thorough investigation into the topic estimated that it would take less than one year to go from AIs that are human-level at AI R&D to AIs that are vastly superhuman. Sam Altman has recently stated that he now thinks AI “takeoff” will be faster than he previously thought.

How likely is an intelligence explosion?

This idea may sound like sci-fi – but it is taken seriously by the scientific community. In the 2023 AI Impacts survey, which features the largest sample of machine learning researchers to date, 53% of respondents thought an intelligence explosion was at least 50% likely. The survey describes an intelligence explosion as a scenario in which a “feedback loop could cause technological progress to become more than an order of magnitude faster” over less than five years, due to AI automating the majority of R&D.

The likelihood of an intelligence explosion depends to some extent on our actions. It becomes far more probable if companies decide to run the risk of automating AI research. All signs currently point to this being the path they intend to take. In a recent essay, Anthropic CEO Dario Amodei appears to explicitly call for this, suggesting that the prospect that “AI systems can eventually help make even smarter AI systems” will allow the US to retain a durable technological advantage over China. DeepMind is hiring Research Engineers to work on automated AI research.

Is an intelligence explosion actually possible?

There are several counterarguments one might have to the idea that an intelligence explosion is possible. Here we examine a few of the most common.

One objection is that raw intelligence is not sufficient to have a large impact on the world – personality, circumstance and luck matter too. The most intelligent people in history have not always been the most powerful. But this arguably underestimates quite how much more capable than us a superintelligence might be. The variation in intelligence between individual humans could be microscopic in comparison, and AIs have other advantages such as being able to run 24/7, hold enormous amounts of information in memory, and share new knowledge with other copies instantly. Zooming out, it’s also easy to observe that the collective cognitive power of humanity significantly outweighs that of other species, which explains why we’ve largely been able to impose our will on the world. If a superintelligence were developed, we could expect many thousands or millions of copies to be run in parallel, far outnumbering the human experts in any particular domain.

Some also argue that an intelligence explosion will be impeded by real-world bottlenecks. For example, AI may only be able to improve itself as fast as humans can build datacenters with the required computing power to train larger and larger models. But new improvements in AI algorithms could significantly improve their performance, and make rapid acceleration possible even while the amount of computing power remains constant. Recent models are achieving state-of-the-art performance with low compute costs – for example, Chinese company DeepSeek’s R1 model is able to compete with OpenAI’s cutting-edge reasoning models, but allegedly cost less than $6 million to train. Researchers used novel algorithmic techniques to extract powerful capabilities from far less computing power. A self-improving AI would likely be able to identify similar techniques much more quickly than humans.

Another objection is that no one AI could ever create a thing superior to itself, just as no one human ever could. Instead, superintelligent AI will be a civilisational effort, requiring the combined brainpower of many humans using their most powerful tools – books, the internet, mathematics, the scientific method – not the result of recursive self-improvement. However, there have also been challenges which required the collaboration of humans over many generations, but which a single AI system overcame very quickly, not by learning from human examples but by playing against itself many millions of times. For example, the art of playing Go was passed down and developed over many generations, but DeepMind’s AlphaGo Zero developed superhuman Go-playing abilities in days through self-play alone.

Others have argued that an intelligence explosion is impossible because machines will never truly “think” in the same way as humans, since they lack certain essential properties for “true” intelligence such as consciousness and intentionality. But this is a semantic distinction that breaks down when we replace the word “intelligence” with “capability” – the concern is about whether systems can solve problems and effectively pursue goals. In the words of Dutch computer scientist Edsger W. Dijkstra, “The question of whether a computer can think is no more interesting than the question of whether a submarine can swim.”

Whether an intelligence explosion is possible or likely remains unclear. Some experts, such as Paul Christiano, head of AI safety at the US government’s AI Safety Institute, believe that runaway recursive self-improvement is possible, but that the more likely scenario is a slow ramp-up of AI capabilities, in which AI becomes incrementally more useful for accelerating AI research. This could give humanity the opportunity to intervene before systems become dangerously capable. Nonetheless, there are compelling arguments in favour of an intelligence explosion, and many scientists take the hypothesis seriously.

How close are we to an intelligence explosion?

We may not know whether an intelligence explosion is in our future until we reach the critical point at which AI systems become better than top human engineers at improving their own capabilities. How soon could this happen?

The truth – and in many ways the problem – is that no one really knows. Each frontier AI training run produces models with emergent capabilities that can take even their developers by surprise. We could easily cross the threshold without realising. However, one effort to measure progress towards automated AI R&D is RE-Bench, a benchmark developed by AI safety non-profit METR. A study published in November last year tested frontier models including Anthropic’s Claude 3.5 Sonnet and OpenAI’s o1-preview against over 50 human experts. The results showed that models are already outcompeting humans at AI R&D over short time horizons (2 hours), though they still fall short over longer ones (8 hours). Since then, OpenAI has announced a new model, o3, which may be better still. Results like these may be the most important indicators we have of our proximity to an intelligence explosion. As one AI researcher points out, AI research might be the only thing we need to automate in order to reach superintelligence.

Another requirement for automated AI research is agency – the ability to complete multi-step tasks over long time horizons. Developing reliable agents is quickly becoming the new frontier of AI research. Anthropic released a demo of its computer use feature late last year, which allows an AI to autonomously operate a desktop. OpenAI has developed a similar product in the form of its AI agent Operator. We are also starting to see the emergence of models designed to conduct academic research, which are showing an impressive ability to complete complex end-to-end tasks. OpenAI’s Deep Research can synthesise (and reason about) existing literature and produce detailed reports in between five and thirty minutes. It scored 26.6% (more than doubling o3-mini’s score) on Humanity’s Last Exam, an extremely challenging benchmark created with input from over 1,000 experts. DeepMind released a similar research product a couple of months earlier. These indicate important progress towards automating academic research. If increasingly agentic AIs can complete tasks with more and more intermediate steps, it seems likely that AI models will soon be able to perform human-competitive AI R&D over long time horizons.

Timelines to superintelligent AI vary widely, from just two or three years (a common prediction by lab insiders) to a couple of decades (the median guess among the broader machine learning community). That those closest to the technology expect superintelligence in just a few years should concern us. We should be acting on the assumption that very powerful AI could emerge soon.

Should we be scared of an intelligence explosion?

Take a second to imagine the product of an intelligence explosion. It could be what Anthropic CEO Dario Amodei calls “a country of geniuses in a datacenter” – a system far more capable than the smartest human across every domain, copied millions of times over. This could enable a tremendous speed-up in scientific discovery, which, if we succeed in controlling these systems, could deliver enormous benefits. They could help us cure diseases, solve complex governance and coordination issues, alleviate poverty and address the climate crisis. This is the hypothetical utopia which some developers of powerful AI are motivated by.

But imagine that this population of geniuses has goals that are incompatible with those of humanity. This is not an improbable scenario. Ensuring that AI systems behave as their developers intend, known as the alignment problem, is an unsolved research question, and progress is lagging far behind the advancement of capabilities. And there are compelling reasons to think that by default, powerful AIs may not act in the best interests of humans. They may have strange or arbitrary goals to maximise things of very little value to us (a possibility implied by the orthogonality thesis, which holds that almost any level of intelligence is compatible with almost any goal), or the optimal conditions for the completion of their goals may be different to those required for our survival. For example, former OpenAI chief scientist Ilya Sutskever believes that in a post-superintelligence world, “the entire surface of the Earth will be covered in datacenters and solar panels”. Sufficiently capable systems could determine that a colder climate would reduce the need for energy-intensive computer cooling, and so initiate climate engineering projects to reduce global temperatures in a way that proves catastrophic for humanity. These are among the reasons why many experts have worried that powerful AI could cause human extinction.

So, how might this consortium of super-geniuses go about driving humans extinct? Speculating about how entities much smarter than us could bring about our demise is much like trying to predict how Garry Kasparov would beat you at chess. We simply cannot know exactly how we might be outsmarted, but this doesn’t prevent us from anticipating that it would happen. A superintelligent AI might be able to discover new laws of nature and quickly develop new powerful technologies (just as human understanding of the world around us has allowed us to exert control over the world, for example through urbanisation or harnessing of energy sources). It could exploit its understanding of human psychology to manipulate humans into granting it access to the outside world. It could accelerate progress in robotics to achieve real-world embodiment. It might develop novel viruses that evade detection, bring down critical infrastructure through cyberattacks, hijack nuclear arsenals, or deploy swarms of nanobots.

There will be great pressure to deploy AI systems whenever they promise to improve productivity or increase profits, since companies and countries that choose not to do so will not be able to compete – and this means humans might be harmed by accident. Today’s systems are unreliable and buggy, often hallucinating false information or producing unpredictable outputs, which could cause a real-world accident if they were deployed in critical infrastructure. But all these scenarios reflect our limited human imagination. Whatever we can come up with, a superintelligence could likely do one better.

Smarter-than-human AI systems could pose existential risks whether or not they emerge from a process of rapid self-improvement. But an intelligence explosion could make them more likely. Many of the guardrails or safety measures that might work in a “slow takeoff” scenario may be rendered obsolete if an intelligence explosion is triggered at some unpredictable and discrete threshold. Implementing sensible regulation based on a common understanding of risk will be harder. Alignment techniques such as using weaker models to “supervise” stronger models (scalable oversight) or automating safety research become less viable.

Can we prevent an intelligence explosion?

Unprecedented resources are currently being poured into the project of building superintelligence. In January, OpenAI announced a new project named Stargate, which will invest $500 billion into AI development over the next four years, with Donald Trump vowing to support its mission through “emergency declarations” that will support datacenter construction. Cash is flowing in other corners of the AI ecosystem too – Forbes has projected that US AI spending will exceed a quarter of a trillion dollars this year.

Regulation to control AI development is sparse. Governance of frontier AI largely depends on voluntary commitments, more ambitious bills have failed to pass, and Trump has already repealed Biden’s Executive Order requiring that companies notify the US government of large training runs.

But none of this means an intelligence explosion is inevitable. Humanity doesn’t have to build dangerous technology if it doesn’t want to! There are many regulatory approaches we could take to reduce risk, from pausing development of the most powerful systems, to mandating safety standards and testing for frontier models (as was proposed in a recently vetoed bill). There are also many ideas on the table as to how we could control smarter-than-human AI, such as creating formal verifications or quantitative guarantees to ensure that powerful systems are safe. But capabilities are advancing very quickly, and just 1-3% of AI research is currently dedicated to safety. Researchers working on these critical problems need more time, which meaningful regulation could help deliver.

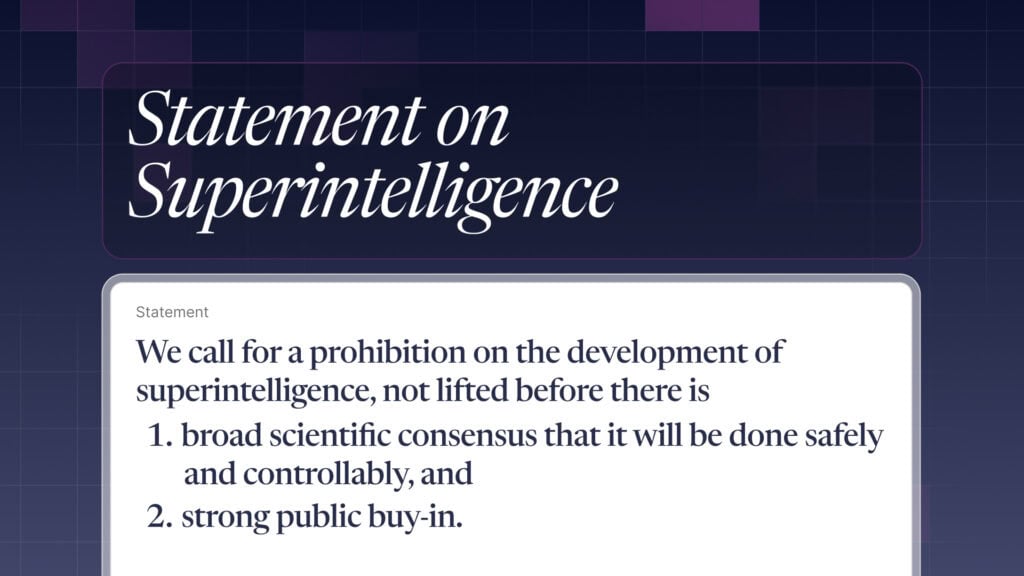

Read next: Keep The Future Human proposes four essential, practical measures to prevent uncontrolled AGI and superintelligence from being built, all politically feasible and possible with today’s technology – but only if we act decisively today.

About the author

Sarah Hastings-Woodhouse is an independent writer and researcher with an interest in educating the public about risks from powerful AI and other emerging technologies. Previously, she spent three years as a full-time Content Writer creating resources for prospective postgraduate students. She holds a Bachelor’s degree in English Literature from the University of Exeter.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
