FLI April, 2018 Newsletter

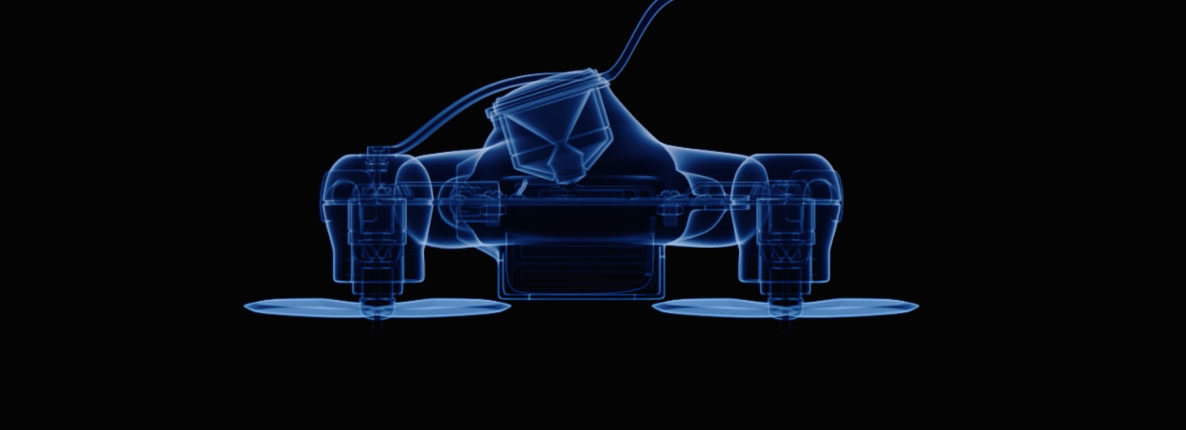

Can We Stop Lethal Autonomous Weapons?

UN CCW Meets to Discuss Lethal Autonomous Weapons

From April 9-13, the United Nations Convention on Conventional Weapons met for the second time to work towards a treaty on lethal autonomous weapons systems (LAWS). Throughout the week, states and civil society groups gave statements about their stances on LAWS, and several, including China and the US, presented working papers. Nearly every nation agreed that it is important to retain human control over autonomous weapons, but many disagreed about the definition of “meaningful human control.”

During the week, the group of nations that explicitly endorse banning LAWS expanded to 26, as China, Austria, Colombia, and Djibouti joined during the negotiations. However, five states explicitly rejected moving to negotiate new international law on fully autonomous weapons: France, Israel, Russia, the United Kingdom, and the United States.

The UN CCW is slated to resume discussions in August 2018. However, given the speed with which autonomous weaponry is advancing, many advocates worry that the talks are moving too slowly.

You can find written and video recaps from each day of the UN CCW meeting here, written by Reaching Critical Will. And if you want to learn more about the technological, political, and social developments of autonomous weapons, check out the Research & Reports page on autonomousweapons.org. You can find relevant news stories and updates at @AIweapons on Twitter and autonomousweapons on Facebook.

South Korea Tech Institute Backtracks on AI Weapons after Researcher Boycott

After learning that the Korea Advanced Institute of Science and Technology (KAIST) planned to open an AI weapons lab in collaboration with a major arms company, AI researcher Toby Walsh led an academic boycott of the university. Over 50 of the world’s leading AI and robotics researchers from 30 countries joined the boycott, and after less than a week, KAIST backtracked and agreed to “not conduct any research activities counter to human dignity including autonomous weapons lacking meaningful human control.” The boycott was covered by CNN and The Guardian.

Can We Rewrite Our Children’s History?

FLI is partnering with Sapiens Plurum on their newest short fiction contest (1st place prize $1000), which encourages writers to imagine an alternative future. How might the future be different if some older or current technology could be reimagined and/or reintroduced? If ethical and safety implications had been understood and considered sooner, would today’s world be different? What might that mean for the future? We encourage everyone to get creative and submit a story — we’re looking for new voices to consider these possibilities, so even if short fiction isn’t your forte, consider submitting a story anyway!

Check us out on SoundCloud and iTunes!

Podcast: What Are the Odds of Nuclear War?

with Seth Baum and Robert de Neufville

What are the odds of a nuclear war happening this century? And how close have we been to nuclear war in the past? Few academics focus on the probability of nuclear war, but many leading voices, like former US Secretary of Defense William Perry, argue that the threat of nuclear conflict is growing.

On this month’s podcast, Ariel spoke with Seth Baum and Robert de Neufville from the Global Catastrophic Risk Institute (GCRI), who recently coauthored a report titled A Model for the Probability of Nuclear War. The report examines 60 historical incidents that could have escalated to nuclear war and presents a model for determining the odds that we could have some type of nuclear war in the future.

Topics discussed in this episode include:

- The most hair-raising nuclear close calls in history

- Whether we face a greater risk from accidental or intentional nuclear war

- China’s secrecy vs the United States’ transparency about nuclear weapons

- Robert’s first-hand experience with the false missile alert in Hawaii

- How researchers can help us understand nuclear war and craft better policy

You can listen to this podcast here.

Podcast: Inverse Reinforcement Learning and Inferring Human Preferences

with Dylan Hadfield-Menell

To kick off our new podcast series on AI value alignment, Lucas Perry speaks with Dylan Hadfield-Menell about designing algorithms that can learn about and pursue the intended goal of their users, designers, and society in general. Dylan’s recent work primarily focuses on algorithms for human-robot interaction with unknown preferences and reliability engineering for learning systems.

Topics discussed in this episode include:

- Goodhart’s Law and its relation to value alignment

- Corrigibility and obedience in AI systems

- IRL and the evolution of human values

- Ethics and moral psychology in AI alignment

- Human preference aggregation

News from our friends

Envision Conference: Looking for new leadership

This conference provides a focal point for critical thinking about the far future for promising college students and recent graduates. By focusing on future domain leaders and emphasizing existential risk without exclusively focusing on it, Envision’s goal is to build long-term capacity, increase critical thinking, and heighten awareness of existential and catastrophic risks among domain leaders. For more information, see Envision’s State of Affairs and their website.

What We’ve Been Up to This Month

Max Tegmark gave a TED talk on AI safety this month. The video is coming soon. Max also spoke about AI & unemployment at the International Monetary Fund in Washington, D.C. this month.

Max Tegmark, Richard Mallah and Lucas Perry attended and participated in EAGx Boston this month. Max gave a talk titled “The World We Want AGI To Make For Us” and participated in an anti-debate there on nuclear disarmament, while Richard and Lucas co-hosted a brief workshop on x-risk related skills and considerations.

Ariel Conn attended CSER’s workshop on existential risk, which included four areas of consideration: challenges of evaluation and impact, challenges of evidence for existential risks, challenges of scope and focus (what might we be missing/neglecting?), and challenges in communication (communicating risk responsibly).

She also visited the Trinity Test Site with ICAN President and one of this year’s Nobel Laureates, Susi Snyder (see image).

Lastly, Ariel helped organize some of the panels at this year’s Conference on World Affairs at CU Boulder, which featured speakers who have collaborated with FLI in the past, including Susi Snyder, Heather Roff, Charlie Oliver, and Joe Cirincione.

Viktoriya Krakovna attended a workshop on AI safety at the Center for Human-Compatible AI (CHAI) at UC Berkeley.

Richard Mallah participated in the inaugural in-person session of the Partnership on AI’s working group on AI, Labor, and the Economy in NYC this month.

FLI in the News

CNN: Scientists call for boycott of South Korean university over killer robot fears

“Some weapons are better to be kept out of the battlefield,” Walsh told CNN. “We decided that with biological weapons, chemical weapons and nuclear weapons and use the same technology for better, peaceful purposes, and that’s what we hope could happen here.”

THE EXPRESS TRIBUNE: Ready to lose your job?

“As Max Tegmark has demonstrated in his book Life 3.0, unlike previous versions of intelligence on earth, AI has the capacity to redesign its software as well as hardware. And here is the kicker. Unlike the cost of human labour, information technology gets cheaper every day.”

PAPER MAGAZINE: How Afraid of AI Should We Be?

“One thing most top researchers seem to agree on is that the main concern regarding AI is not with malevolent robots, but, as the Future of Life Institute explains, ‘with intelligence itself: specifically, intelligence whose goals are misaligned with ours.’ The institute gives several examples of ways AI with goals ‘misaligned with ours’ could operate to hurt society: ‘outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand.’”

CLEAN TECHNICA: “Open the Pod Bay Doors, HAL.” Chris Paine’s New Film On AI Explains Why The Dire Warnings Echoed In Sci-Fi Movies May Be Valid

“‘The major cause of the recent AI breakthrough isn’t that some dude had a brilliant insight all of a sudden, but simply that we have much bigger data to train them on, and vastly better computers,’ says Max Tegmark.”

If you’re interested in job openings, research positions, and volunteer opportunities at FLI and our partner organizations, please visit our Get Involved page.