FLI September, 2017 Newsletter

Countries Sign UN Treaty to Outlaw Nuclear Weapons

On September 20th, 50 countries took an important step toward a nuclear-free world by signing the United Nations Treaty on the Prohibition of Nuclear Weapons. This is the first treaty to legally ban nuclear weapons, just as we’ve seen done previously with chemical and biological weapons.

The Treaty on the Prohibition of Nuclear Weapons was adopted on July 7, with a vote of approval from 122 countries. As part of the treaty, the states that sign agree that they will never “[d]evelop, test, produce, manufacture, otherwise acquire, possess or stockpile nuclear weapons or other nuclear explosive devices.” Signatories also promise not to assist other countries with such efforts, and no signatory will “[a]llow any stationing, installation or deployment of any nuclear weapons or other nuclear explosive devices in its territory or at any place under its jurisdiction or control.”

Not only had 50 countries signed the treaty at the time this article was written, but 3 of them had also already ratified it. The treaty will enter into force 90 days after it’s ratified by 50 countries.

Stanislav Petrov, the Man Who Saved the World, Has Died

By Ariel Conn

All of us at FLI were saddened to learn that Stanislav Petrov passed away this past May. News of his death was announced earlier this month. Petrov is widely credited with having saved millions, if not billions, of people with his decision to ignore satellite reports, preventing accidental escalation into what could have become a full-scale nuclear war. This event was turned into the movie “The Man Who Saved the World,” and Petrov was honored at the United Nations and given the World Citizen Award.

Check us out on SoundCloud and iTunes!

Podcast: Choosing a Career to Tackle the World’s Biggest Problems

with Rob Wiblin and Brenton Mayer

If you want to improve the world as much as possible, what should you do with your career? Should you become a doctor, an engineer or a politician? Should you try to end global poverty, climate change, or international conflict? These are the questions that the research group 80,000 Hours tries to answer. They try to figure out how individuals can set themselves up to help as many people as possible in as big a way as possible.

To learn more about their research, Ariel invited Rob Wiblin and Brenton Mayer of 80,000 Hours to the FLI podcast. In this podcast we discuss “earning to give,” building career capital, and the most effective ways for individuals to help solve the world’s most pressing problems – including artificial intelligence, nuclear weapons, biotechnology and climate change. If you’re interested in tackling these problems, or simply want to learn more about them, this podcast is the perfect place to start.

ICYMI: This Month’s Most Popular Articles

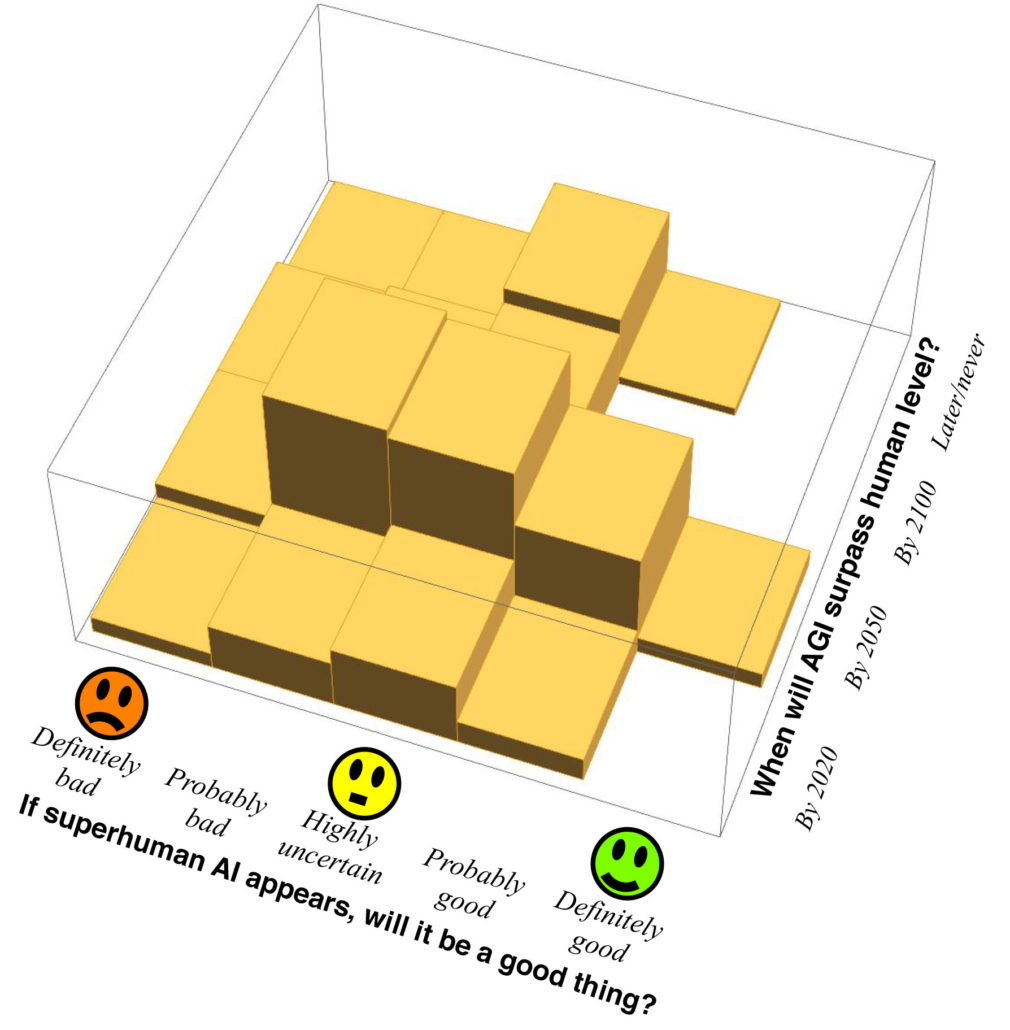

The Future of AI: What Do You Think?

Max Tegmark’s new book on artificial intelligence, Life 3.0: Being Human in the Age of Artificial Intelligence, explores how AI will impact life as it grows increasingly advanced, perhaps even achieving superintelligence far beyond human level in all areas. For the book, Max surveys experts’ forecasts, and explores a broad spectrum of views on what will/should happen.

But it’s time to expand the conversation. If we’re going to create a future that benefits as many people as possible, we need to include as many voices as possible. And that includes yours! This page features the answers from the people who have taken the survey that goes along with Max’s book. To join the conversation yourself, please take the survey here.

By Ariel Conn

Safety Principle: AI systems should be safe and secure throughout their operational lifetime and verifiably so where applicable and feasible.

Continuing our discussion of the 23 Asilomar AI principles, researchers weigh in on safety issues. This principle gets to the heart of the AI safety research initiative: how can we ensure safety for a technology that is designed to learn how to modify its own behavior?

According to computer scientist Dan Weld, understanding and trusting machines is “the key problem to solve” in AI safety, and it’s necessary today. He explains, “Since machine learning is at the core of pretty much every AI success story, it’s really important for us to be able to understand what it is that the machine learned.”

Understanding the Risks and Limitations of North Korea’s Nuclear Program

By Kirsten Gronlund

Pyongyang has now successfully detonated nuclear weapons in six underground tests, but these tests have been carried out in ideal conditions, far from the reality of a ballistic launch. Wright and others believe that North Korea likely has warheads that can be delivered via short-range missiles that can reach South Korea or Japan. North Korea has deployed such missiles for years. But it remains unclear whether North Korean warheads would be deliverable via long-range missiles.

START from the Beginning: 25 Years of US-Russian Nuclear Weapons Reductions

By Eryn MacDonald

For the past 25 years, a series of treaties have allowed the US and Russia to greatly reduce their nuclear arsenals—from well over 10,000 each to fewer than 2,000 deployed long-range weapons each. These Strategic Arms Reduction Treaties (START) have enhanced US security by reducing the nuclear threat, providing valuable information about Russia’s nuclear arsenal, and improving predictability and stability in the US-Russia strategic relationship.

With increased tensions between the US and Russia and an expanded range of security threats for the US to worry about, this longstanding foundation is now more valuable than ever.

News from Partner Organizations

Existential Risk and Cost-Effective Biosecurity

By Piers Millett & Andrew Snyder-Beattie (Future of Humanity Institute)

This paper provides an overview of biotechnological extinction risk, makes some rough initial estimates for how severe the risks might be, and compares the cost-effectiveness of reducing these extinction level risks with existing biosecurity work. The authors find that reducing human extinction risk can be more cost-effective than reducing smaller-scale risks, even when using conservative estimates. This suggests that the risks are not low enough to ignore and that more ought to be done to prevent the worst-case scenarios.

Pricing Externalities to Balance Public Risks and Benefits of Research

By Sebastian Farquhar, Owen Cotton-Barratt & Andrew Snyder-Beattie (FHI)

Certain types of flu experiments in the past decade have produced viruses that could potentially cause a global pandemic. This led to fierce debates over how to balance the risks and benefits of this research. This paper advances a policy that tackles this issue by either requiring labs to purchase liability insurance for their research or by having the risks specifically incorporated into the cost of the research proposal. If properly implemented, this policy could reduce unwarranted risks from dual-use research.

Human Agency and Global Catastrophic Biorisk

By Piers Millett & Andrew Snyder-Beattie (FHI)

In this essay, FHI researchers Millett and Snyder-Beattie present arguments for why a global biological catastrophe is more likely to come from human technology than from a natural pandemic. The authors discuss the implications this has for prioritising risks and encourage further research collaboration between health and security communities.

By Simon Beard (Centre for the Study of Existential Risk)

Dr Simon Beard has written a solid piece featured in the Huffington Post answering the question “Should We Care About the Worst-Case Scenario When it Comes to Climate Change?” This piece draws on themes from an ESRC-funded workshop on Risk, Uncertainty and Catastrophe Scenarios convened by Simon and Dr Kai Spiekermann. The report from the workshop can be found here.

What We’ve Been Up to This Month

With the launch of Life 3.0, Max Tegmark has been traveling the country on a book tour. After giving talks on the US West Coast, including at Microsoft and Google headquarters, Max will return to speak in the Boston area, Toronto, and then London and Stockholm. You can see the full schedule here.

FLI in the News

Open Letter on Autonomous Weapons

TIME: Why We Must Not Build Automated Weapons of War

“Over 100 CEOs of artificial intelligence and robotics firms recently signed an open letter warning that their work could be repurposed to build lethal autonomous weapons – ‘killer robots.’ They argued that to build such weapons would be to open a ‘Pandora’s Box.’ This could forever alter war.”

THE GUARDIAN: Humans will always control killer drones, says ministry of defence

“New doctrine issued in response to expert letter citing fears over artificial intelligence in remote weapons.”

Life 3.0

THE GUARDIAN: Max Tegmark: ‘Machines taking control doesn’t have to be a bad thing’

“The artificial intelligence expert’s new book, Life 3.0, urges us to act now to decide our future, rather than risk it being decided for us.”

GEEKWIRE: ‘Life 3.0’ gives you a user’s guide for superintelligent AI systems to come

“In his newly published book, ‘Life 3.0: Being Human in the Age of Artificial Intelligence,’ Tegmark lays out a case for what he calls ‘mindful optimism’ about beneficial AI — artificial intelligence that will make life dramatically better for humans rather than going off in unintended directions.”

FLI

FORBES: In Praise Of Dystopias: ‘Black Mirror,’ ‘1984,’ ‘Brave New World’ And Our Technology-Defined Future

“Great thinking exists, but we need more of it and more people involved. MIT cosmologist Max Tegmark’s Future of Life Institute, philosopher Nick Bostrom’s Future of Humanity Institute at Oxford University and Singularity University (SU) in Silicon Valley offer a few places to start.”

Get Involved

FHI: AI Policy and Governance Internship

The Future of Humanity Institute at the University of Oxford seeks interns to contribute to our work in the areas of AI policy, AI governance, and AI strategy. Our work in this area touches on a range of topics and areas of expertise, including international relations, international institutions and global cooperation, international law, international political economy, game theory and mathematical modelling, and survey design and statistical analysis. Previous interns at FHI have worked on issues of public opinion, technology race modelling, the bridge between short-term and long-term AI policy, the development of AI and AI policy in China, case studies in comparisons with related technologies, and many other topics.

If you or anyone you know is interested in this position, please follow this link.

FHI: AI Safety and Reinforcement Learning Internship

The Future of Humanity Institute at the University of Oxford seeks interns to contribute to our work in the area of technical AI safety. Examples of this type of work include Cooperative Inverse Reinforcement Learning, Learning the Preferences of Ignorant, Inconsistent Agents, Learning the Preferences of Bounded Agents, and Safely Interruptible Agents. The internship will give the opportunity to work on a specific project. The ideal candidate will have a background in machine learning, computer science, statistics, mathematics, or another related field. This is a paid internship. Candidates from underrepresented demographic groups are especially encouraged to apply.

If you or anyone you know is interested in this position, please follow this link.

The Fundraising Manager will have a key role in securing funding to enable us to meet our aim of increasing the preparedness, resources & ability (knowledge & technology) of governments / corporations / humanitarian organizations / NGOs / people to be able to feed everyone in the event of a global catastrophe through recovery of food systems. To reach this ambitious goal, the Fundraising Manager will develop a strategic fundraising plan, and interface with the funder community, including foundations, major individual donors, and other non-corporate institutions.

If you or anyone you know is interested in this position, please follow this link.

To learn more about job openings at our other partner organizations, please visit our Get Involved page.