FLI July, 2017 Newsletter

United Nations Adopts Ban on Nuclear Weapons

This month, 72 years after the invention of nuclear weapons, states at the United Nations formally adopted a treaty that categorically prohibits them.

With 122 votes in favor, one vote against, and one country abstaining, the “Treaty on the Prohibition of Nuclear Weapons” was adopted Friday morning and will open for signature by states at the United Nations in New York on September 20, 2017. Civil society organizations and more than 140 states have participated throughout negotiations.

On adoption of the treaty, ICAN Executive Director Beatrice Fihn said:

“We hope that today marks the beginning of the end of the nuclear age. It is beyond question that nuclear weapons violate the laws of war and pose a clear danger to global security. No one believes that indiscriminately killing millions of civilians is acceptable – no matter the circumstance – yet that is what nuclear weapons are designed to do.”

In a public statement, former Secretary of Defense William Perry said:

“The new UN Treaty on the Prohibition of Nuclear Weapons is an important step towards delegitimizing nuclear war as an acceptable risk of modern civilization. Though the treaty will not have the power to eliminate existing nuclear weapons, it provides a vision of a safer world, one that will require great purpose, persistence, and patience to make a reality. Nuclear catastrophe is one of the greatest existential threats facing society today, and we must dream in equal measure in order to imagine a world without these terrible weapons.”

Read more about the effort to ban nuclear weapons and what the treaty will encompass.

Check us out on SoundCloud and iTunes!

Podcast: The Art of Predicting

with Anthony Aguirre and Andrew Critch

How well can we predict the future? In this podcast, Ariel speaks with Anthony Aguirre and Andrew Critch about the art of predicting the future, what constitutes a good prediction, and how we can better predict the advancement of artificial intelligence. They also touch on the difference between predicting a solar eclipse and predicting the weather, what it takes to make money on the stock market, and the bystander effect regarding existential risks.

Anthony is a professor of physics at the University of California at Santa Cruz. He’s one of the founders of the Future of Life Institute, of the Foundational Questions Institute, and most recently of Metaculus, which is an online effort to crowdsource predictions about the future of science and technology. Andrew is on a two-year leave of absence from MIRI to work with UC Berkeley’s Center for Human-Compatible AI. He cofounded the Center for Applied Rationality, and previously worked as an algorithmic stock trader at Jane Street Capital.

This Month’s Most Popular Articles

Op-ed: Should Artificial Intelligence Be Regulated?

By Anthony Aguirre, Ariel Conn and Max Tegmark

Should artificial intelligence be regulated? Can it be regulated? And if so, what should those regulations look like?

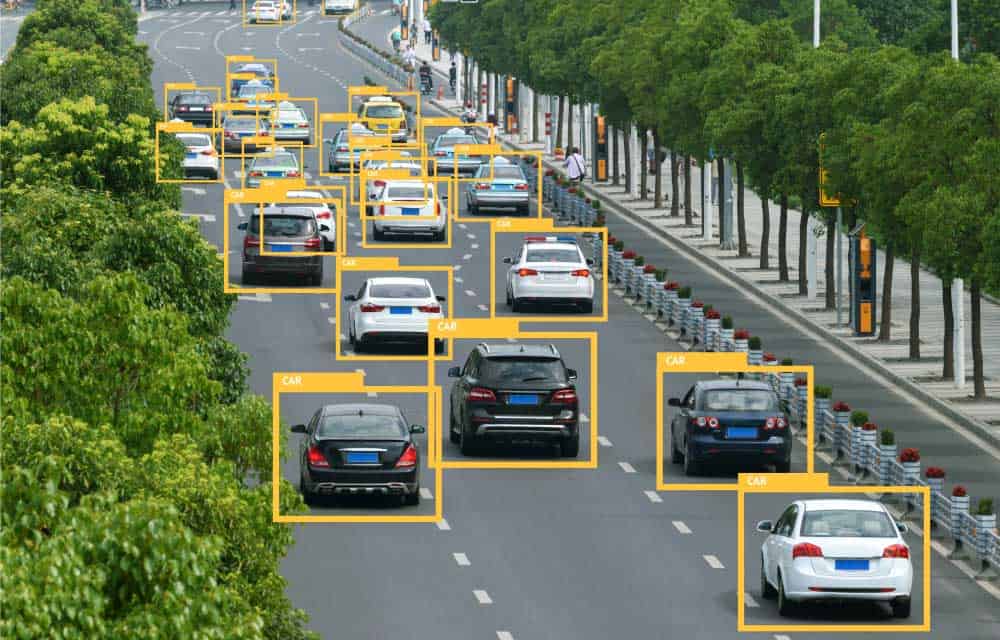

These are difficult questions to answer for any technology still in the development stages. Regulations, like those on the food, pharmaceutical, automobile and airline industries, are typically applied after something bad has happened, not in anticipation of a technology becoming dangerous. But AI has been evolving so quickly, and its potential impact is so great, that many prefer not to wait and learn from mistakes, but to plan ahead and regulate proactively.

Aligning Superintelligence With Human Interests

By Sarah Marquart

The trait that currently gives humans a dominant advantage over other species is intelligence. Human advantages in reasoning and resourcefulness have allowed us to thrive. However, this may not always be the case.

Although superintelligent AI systems may be decades away, Benya Fallenstein – a research fellow at the Machine Intelligence Research Institute – believes “it is prudent to begin investigations into this technology now.” The more time scientists and researchers have to prepare for a system that could eventually be smarter than us, the better.

Towards a Code of Ethics in Artificial Intelligence

By Tucker Davey

AI promises a smarter world – a world where finance algorithms analyze data better than humans, self-driving cars save millions of lives from accidents, and medical robots eradicate disease. But machines aren’t perfect. Whether an automated trading agent buys the wrong stock, a self-driving car hits a pedestrian, or a medical robot misses a cancerous tumor – machines will make mistakes that severely impact human lives.

Safe Artificial Intelligence May Start with Collaboration

By Ariel Conn

Research Culture Principle: A culture of cooperation, trust, and transparency should be fostered among researchers and developers of AI.

Continuing our discussion of the 23 Asilomar Principles, researchers weigh in on the importance and challenges of collaboration between competing AI organizations.

Susan Craw Interview on Asilomar AI Principles

By Ariel Conn

Craw is a Research Professor at Robert Gordon University in Aberdeen, Scotland. Her research in artificial intelligence develops innovative data/text/web mining technologies to discover knowledge to embed in case-based reasoning systems, recommender systems, and other intelligent information systems.

Joshua Greene Interview on Asilomar AI Principles

By Ariel Conn

Greene is an experimental psychologist, neuroscientist, and philosopher. He studies moral judgment and decision-making, primarily using behavioral experiments and functional neuroimaging (fMRI). Other interests include religion, cooperation, and the capacity for complex thought. He is the author of Moral Tribes: Emotion, Reason, and the Gap Between Us and Them.

What We’ve Been Up to This Month

Viktoriya Krakovna attended a conference at the Leverhulme Centre for the Future of Intelligence on July 13–14, where speakers discussed the development and future of artificial intelligence. Topics included visions for the future, how humans perceive intelligent robots, trust and understanding with AIs, and the ethical and legal complications arising from AI.

FLI in the News

REUTERS: Futureproof

Jaan Tallinn was recently featured in a video interview about the risks posed by artificial intelligence.

FUTURISM: Experts Want Robots to Have an “Ethical Black Box” That Explains Their Decision-Making

Divestment Luncheon on August 6th

Do you live in the Boston area? Join us for a nuclear weapons divestment lunch on August 6th! Steven Dray, a portfolio manager from Zevin Asset Management, will be on hand to help anyone interested in divesting their money from nuclear weapons producers. Steven has years of experience in socially responsible investing and is certified as both a financial planner and a chartered financial analyst. The location of the event is still TBD, but you can expect a free lunch and food for thought. Space is very limited, so this will be first come, first served. If you are interested, please fill out this application form.

Job Openings at the Future of Humanity Institute

FHI is seeking a Senior Research Fellow on AI Macrostrategy to identify crucial considerations for improving humanity’s long-run potential. Main responsibilities will include guidance on FHI’s existential risk research agenda and AI strategy, individual research projects, collaboration with partner institutions, and shaping and advancing FHI’s research strategy.

FHI is also seeking a Research Fellow for its Macrostrategy team. Reporting to the Director of Research at the Future of Humanity Institute, the Research Fellow will evaluate strategies that could reduce existential risk, particularly with respect to the long-term outcomes of technologies such as artificial intelligence.

To learn more about job openings at our other partner organizations, please visit our Get Involved page.