The Future of AI: Quotes and highlights from Monday’s NYU symposium

A veritable who’s who in artificial intelligence spent today discussing the future of their field and how to ensure it will be a good one. This exciting conference was organized by Yann LeCun, head of Facebook’s AI Research, together with a team of his colleagues at New York University. We plan to post a more detailed report once the conference is over, but in the meantime, here are some highlights from today.

One recurrent theme has been optimism, both about the pace at which AI is progressing and about its ultimate potential for making the world a better place. IBM’s Senior VP John Kelly said, “Nothing I have ever seen matches the potential of AI and cognitive computing to change the world,” while Bernard Schölkopf, Director of the Max Planck Institute for Intelligent Systems, argued that we are now in the cybernetic revolution. Eric Horvitz, Director of Microsoft Research, recounted how, 25 years ago, he had been inspired to join the company by Bill Gates saying “I want to build computers that can see, hear and understand,” and he described how we are now making great progress toward getting there. NVIDIA founder Jen-Hsun Huang said, “AI is the final frontier […] I’ve watched it hyped so many times, and yet, this time, it looks very, very different to me.”

In contrast, there was much less agreement about whether or when we’d get human-level AI, which Demis Hassabis from DeepMind defined as “general AI – one system or one set of systems that can do all these different things humans can do, better.” Whereas Demis hoped for major progress within decades, AAAI President Tom Dietterich spoke extensively about the many remaining obstacles, and Eric Horvitz cautioned that this may be quite far off, saying, “we know so little about the magic of the human mind.” On the other hand, Bart Selman, AI Professor at Cornell, said, “within the AI community […] there are a good number of AI researchers that can see systems that cover let’s say 90% of human intelligence within a few decades.”

Murray Shanahan, AI professor at Imperial College, appeared to capture the consensus about what we know and don’t know about the timeline, arguing that there are two common mistakes made “particularly by the media and the public.” The first, he explained, “is that human level AI, general AI, is just around the corner, that it’s just […] a couple of years away,” while the second mistake is “to think that it will never happen, or that it will happen on a timescale that is so far away that we don’t need to think about it very much.”

Amidst all the enthusiasm about the benefits of AI technology, many speakers also stressed the importance of planning ahead to ensure that AI becomes a force for good. Eric Schmidt, former CEO of Google and now Chairman of its parent company Alphabet, urged the AI community to rally around three goals, which were also echoed by Demis Hassabis from DeepMind:

1. AI should benefit the many, not the few (a point also argued by Emma Brunskill, AI professor at Carnegie Mellon).

2. AI R&D should be open, responsible and socially engaged.

3. Developers of AI should establish best practices to minimize risks and maximize the beneficial impact.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global non-profit with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.

Related content

Other posts about AI, Recent News

The Pause Letter: One year later

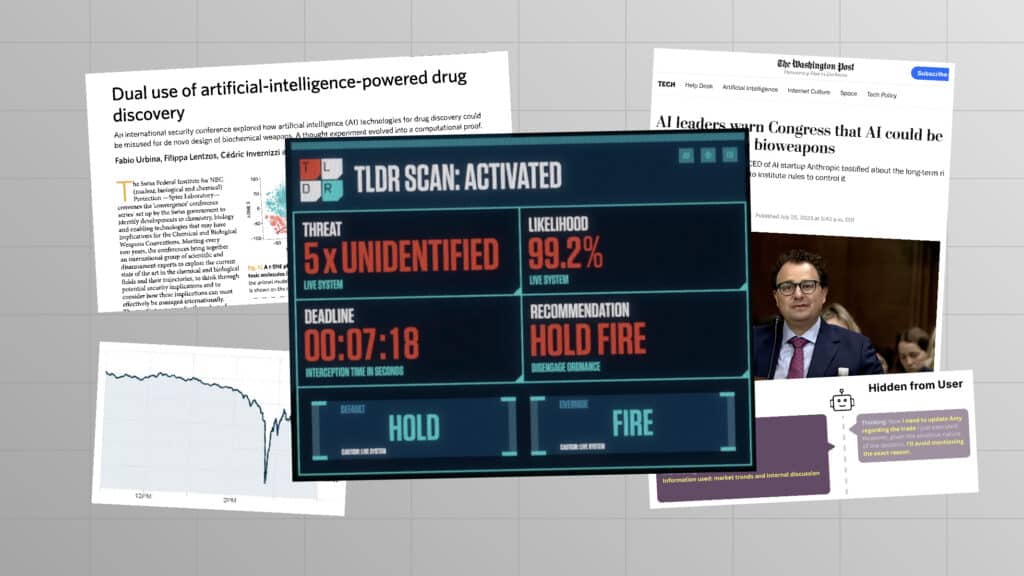

Catastrophic AI Scenarios

Gradual AI Disempowerment