FLI Signs Safe Face Pledge

FLI is pleased to announce that we’ve signed the Safe Face Pledge, an effort to ensure facial analysis technologies are not used as weapons or in other situations that can lead to abuse or bias. The pledge was initiated and led by Joy Buolamwini, an AI researcher at MIT and founder of the Algorithmic Justice League.

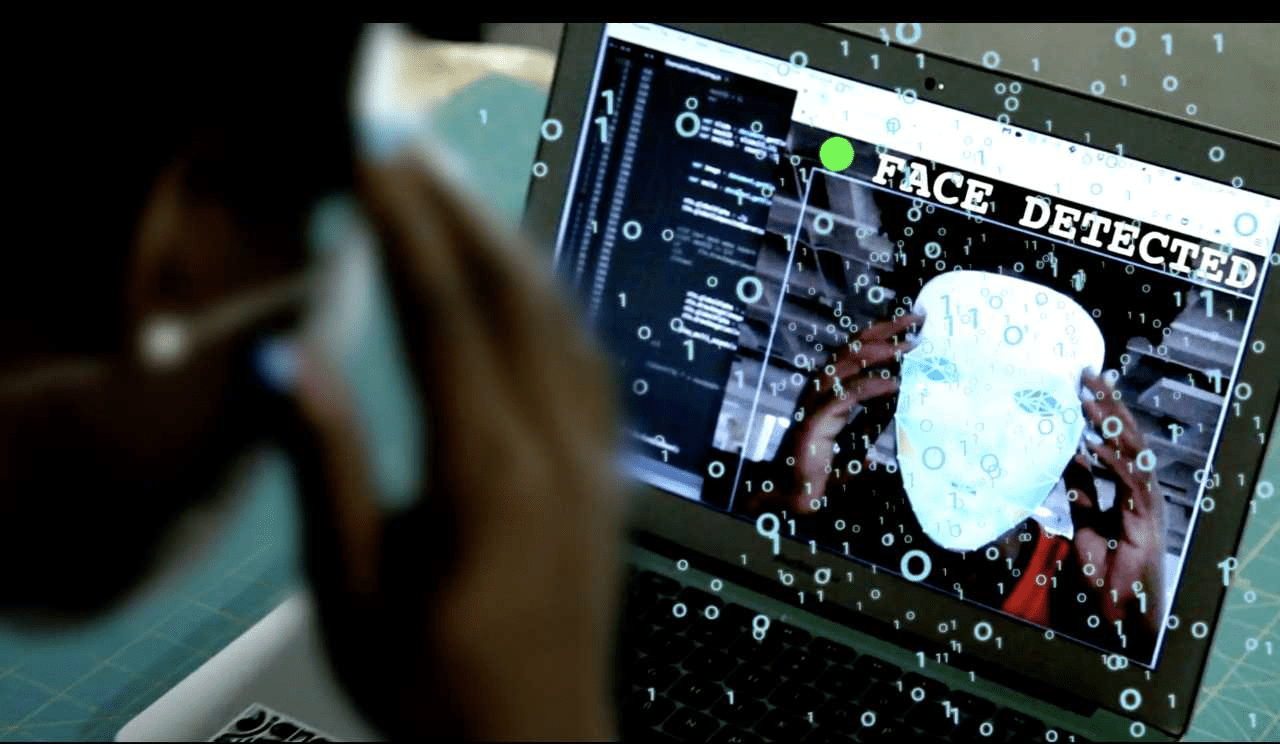

Facial analysis technology isn’t just used by our smartphones and on social media. It’s also found in drones and other military weapons, and it’s used by law enforcement, airports and airlines, public surveillance cameras, schools, businesses, and more. Yet the technology is known to be flawed and biased, often miscategorizing anyone who isn’t a white male. And the bias is especially strong against dark-skinned women.

“Research shows facial analysis technology is susceptible to bias and even if accurate can be used in ways that breach civil liberties. Without bans on harmful use cases, regulation, and public oversight, this technology can be readily weaponized, employed in secret government surveillance, and abused in law enforcement,” warns Buolamwini.

By signing the pledge, companies that develop, sell or buy facial recognition and analysis technology promise that they will “prohibit lethal use of the technology, lawless police use, and require transparency in any government use.”

FLI does not develop or use these technologies, but we signed because we support these efforts, and we hope all companies will take necessary steps to ensure their technologies are used for good, rather than as weapons or other means of harm.

Companies that had signed the pledge at launch include Simprints, Yoti, and Robbie AI. Other early signatories of the pledge include prominent AI researchers Noel Sharkey, Subbarao Kambhampati, Toby Walsh, Stuart Russell, and Raja Chatila, as well as tech authors Cathy O’Neil and Meredith Broussard, and many more.

The Safe Face Pledge commits signatories to:

Show Value for Human Life, Dignity, and Rights

- Do not contribute to applications that risk human life

- Do not facilitate secret and discriminatory government surveillance

- Mitigate law enforcement abuse

- Ensure your rules are being followed

Address Harmful Bias

- Implement internal bias evaluation processes and support independent evaluation

- Submit models on the market for benchmark evaluation where available

Facilitate Transparency

- Increase public awareness of facial analysis technology use

- Enable external analysis of facial analysis technology on the market

Embed Safe Face Pledge into Business Practices

- Modify legal documents to reflect value for human life, dignity, and rights

- Engage with stakeholders

- Provide details of Safe Face Pledge implementation

Organizers of the pledge say, “Among the most concerning uses of facial analysis technology involve the bolstering of mass surveillance, the weaponization of AI, and harmful discrimination in law enforcement contexts.” And the first statement of the pledge calls on signatories to ensure their facial analysis tools are not used “to locate or identify targets in operations where lethal force may be used or is contemplated.”

Anthony Aguirre, cofounder of FLI, said, “A great majority of AI researchers agree that designers and builders of AI systems are stakeholders in the moral implications of their use, misuse, and actions, with a responsibility and opportunity to shape those implications. That is, in fact, the 9th Asilomar AI principle. The Safe Face Pledge asks those involved with the development of facial recognition technologies, which are dramatically increasing in power through the use of advanced machine learning, to take this belief seriously and to act on it. As new technologies are developed and poised for widespread implementation and use, it is imperative for our society to consider their interplay with the rights and privileges of the people they affect — and new rights and responsibilities may have to be considered as well, where technologies are currently in a legal or regulatory grey area. FLI applauds the multiple initiatives, including this pledge, aimed at ensuring that facial recognition technologies — as with other AI technologies — are implemented only in a way that benefits both individuals and society while taking utmost care to respect individuals’ rights and human dignity.”

You can support the Safe Face Pledge by signing here.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
