How Could a Failed Computer Chip Lead to Nuclear War?


The US early warning system is on watch 24/7, looking for signs of a nuclear missile launched at the United States. As a highly complex system with links to sensors around the globe and in space, it relies heavily on computers to do its job. So, what happens if there is a glitch in the computers?

Between November 1979 and June 1980, those computers led to several false warnings of all-out nuclear attack by the Soviet Union—and a heart-stopping middle-of-the-night telephone call.

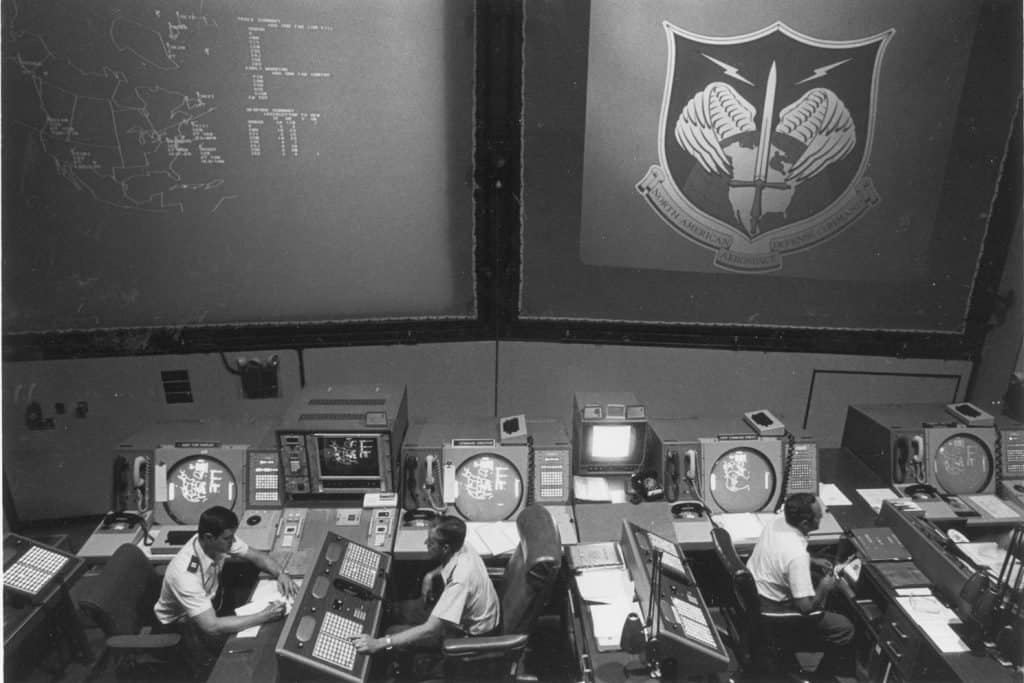

NORAD command post, c. 1982. (Source: US National Archives)

I described one of these glitches previously. That one, in 1979, was actually caused by human and systems errors: a technician put a training tape in a computer that then, inexplicably, routed the information to the main US warning centers. The Pentagon’s investigator stated that they were never able to replicate the failure mode to figure out what happened.

Just months later, one of the millions of computer chips in the early warning system went haywire, leading to incidents on May 28, June 3, and June 6, 1980.

The June 3 “attack”

By far the most serious of the computer chip problems occurred early on June 3, when the main US warning centers all received notification of a large incoming nuclear strike. The president’s National Security Advisor, Zbigniew Brzezinski, was woken at 3 am by a phone call telling him that a large nuclear attack on the United States was underway and that he should prepare to call the president. He later said he had not woken his wife, assuming they would all be dead in 30 minutes.

Like the November 1979 glitch, this one led NORAD to convene a high-level “Threat Assessment Conference,” which includes the Chair of the Joint Chiefs of Staff and is just below the level that involves the president. Taking this step sets a range of measures in motion to increase the survivability of US strategic forces and command and control systems. Air Force bomber crews at bases around the US got into their planes and started the engines, ready for takeoff. Missile launch officers were told to stand by for launch orders. The Pacific Command’s Airborne Command Post took off from Hawaii. The National Emergency Airborne Command Post at Andrews Air Force Base taxied into position for a rapid takeoff.

By comparing the warning signals they were getting from several different sources, the warning centers were able to determine within a few minutes that they were seeing a false alarm, likely due to a computer glitch. The specific cause wasn’t identified until much later. At that point, a Pentagon document matter-of-factly stated that a 46-cent computer chip “simply wore out.”

Short decision times increase nuclear risks

As you’d hope, the warning system has checks built into it. So despite the glitches that caused false readings, the warning officers were able to catch the error in the short time available before the president would have to make a launch decision.

We know these checks are pretty good because there have been a surprising number of incidents like these, and so far none have led to nuclear war.

But we also know they are not foolproof.

The risk is compounded by the US policy of keeping its missiles on hair-trigger alert, poised to be launched before an incoming attack could land. Maintaining the option to launch quickly on warning of an attack leaves very little time to sort out confusing signals and avoid a mistaken launch.

These and other unexpected incidents have caused considerable confusion among the operators. What if the confusion had persisted longer? What might have happened if something else had been going on that suggested the warning was real? In his book My Journey at the Nuclear Brink, former Secretary of Defense William Perry asks what might have happened if these glitches “had occurred during the Cuban Missile Crisis, or a Mideast war?”

There might also be unexpected coincidences. What if, for example, US sensors had detected an actual Soviet missile launch around the same time? In the early 1980s the Soviets were test launching 50 to 60 missiles per year, about one per week. Indeed, US detection of the test of a Soviet submarine-launched missile had led to a Threat Assessment Conference just weeks before this event.

Given enough time to analyze the data, warning officers on duty would be able to sort out most false alarms. But the current system puts incredible time pressure on the decision process, giving warning officers and then more senior officials only a few minutes to assess the situation. If they decide the warning looks real, they would alert the president, who would have perhaps 10 minutes to decide whether to launch.

Keeping missiles on hair-trigger alert and requiring a launch decision within minutes is something like tailgating on the freeway. Leaving only a small gap between you and the car in front of you reduces the time you have to react. You may be able to get away with it for a while, but the longer you put yourself in that situation, the greater the chance that some unforeseen event, or combination of events, will lead to disaster.

In his book, William Perry makes a passionate case for taking missiles off alert:

“These stories of false alarms have focused a searing awareness of the immense peril we face when in mere minutes our leaders must make life-and-death decisions affecting the whole planet. Arguably, short decision times for response were necessary during the Cold War, but clearly those arguments do not apply today; yet we are still operating with an outdated system fashioned for Cold War exigencies.

“It is time for the United States to make clear the goal of removing all nuclear weapons everywhere from the prompt-launch status in which nuclear-armed ballistic missiles are ready to be launched in minutes.”

To see what other incidents have increased the risks posed by nuclear weapons over the years, visit our new Wheel of Near Misfortune.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about Nuclear, Recent News

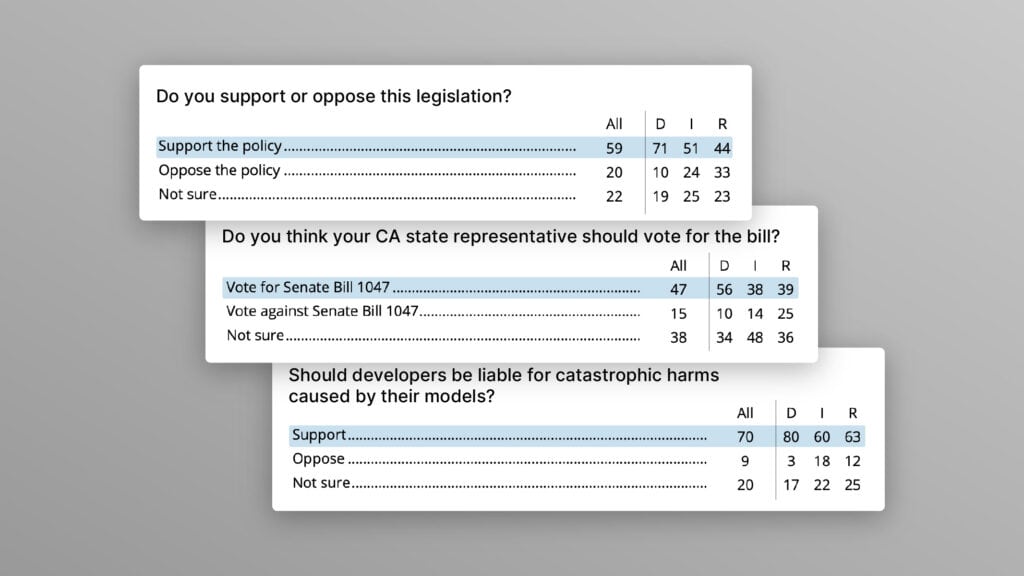

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI

Poll Shows Broad Popularity of CA SB1047 to Regulate AI