FLI January 2021 Newsletter

Reflections on 2020 from FLI’s President

2020 reminded us that our civilization is vulnerable. Will we humans wisely use our ever more powerful technology to end disease and poverty and create a truly inspiring future, or will we sloppily use it to drive ever more species extinct, including our own? We’re rapidly approaching this fork in the road: the past year saw the power of our technology grow rapidly, exemplified by GPT-3, MuZero, AlphaFold 2 and dancing robots, while the wisdom with which we manage our technology remained far from spectacular: the Open Skies Treaty collapsed, the only remaining US-Russia nuclear treaty (New START) is due to expire next month, meaningful regulation of harmful AI remains absent, AI-fuelled filter bubbles polarize the world, and an arms race in lethal autonomous weapons is ramping up.

It’s been a great honor for me to get to work with such a talented and idealistic team at our institute to make tomorrow’s technology help humanity flourish rather than flounder. With Bill Gates, Tony Fauci & Jennifer Doudna, we honored the heroes who helped save 200 million lives by eradicating smallpox. As other examples of tech-for-good, FLI members researched how machine-learning can help with the UN Sustainable Development Goals and developed free online tools for better predictions and helping people break out of their filter bubbles.

On the AI policy front, FLI was the civil society co-champion for the UN Secretary General’s Roadmap for Digital Cooperation: Recommendation 3C on Artificial Intelligence alongside Finland, France and two UN organizations, whose final recommendations included “life and death decisions should not be delegated to machines”. FLI also produced formal and informal advice on AI risk management to the U.S. Government, the European Union, and other policymaking fora, resulting in a series of high-value successes. On the nuclear disarmament front, we previously organized an open letter signed by 30 Nobel Laureates and thousands of other scientists from 100 countries in support of the UN Treaty on the Prohibition of Nuclear Weapons. This treaty has now gathered enough signatures to enter into force on January 22, 2021, which will help stigmatize the new nuclear arms race and pressure the nations driving it to reduce their arsenals down towards the minimum levels needed for deterrence.

On the outreach front, our FLI podcast grew 23% in 2020, with about 300,000 listens to fascinating conversations about existential risk and related topics, with guests including Yuval Noah Harari, Sam Harris, Steven Pinker, Stuart Russell, George Church and thinkers from OpenAI, DeepMind and MIRI. They reminded us that even seemingly insurmountable challenges can be overcome with creativity, willpower and sustained effort. Technology is giving life the potential to flourish like never before, so let’s seize this opportunity together!

Max Tegmark

Policy & Advocacy Efforts

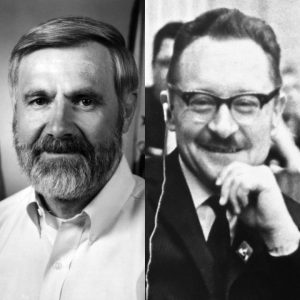

On 9th December 2020, the Future of Life Award was bestowed upon Dr. William Foege and Dr. Viktor Zhdanov for their critical contributions towards the eradication of smallpox. The $100,000 award was presented by FLI’s co-founder Max Tegmark in an online ceremony attended by Bill Gates, Dr. Anthony Fauci, freshly minted Nobel Laureate Dr. Jennifer Doudna and Dr. Matthew Meselson, winner of the 2019 Future of Life Award. Since Dr. Viktor Zhdanov passed away in 1987, his sons Viktor and Michael accepted the award on his behalf.

The lessons learned from overcoming smallpox remain highly relevant to public health and, in particular, the COVID-19 pandemic. “In selecting Bill Foege and Viktor Zhdanov as recipients of its prestigious 2020 award, the Future of Life Institute reminds us that seemingly impossible problems can be solved when science is respected, international collaboration is fostered, and goals are boldly defined. As we celebrate this achievement quarantined in our homes and masked outdoors, what message could be more obvious or more audacious?” says Dr. Rachel Bronson, President and CEO of the Bulletin of the Atomic Scientists.

The National Artificial Intelligence Initiative Act Passes Into US Law

Throughout 2020, FLI actively supported and advocated for the National AI Initiative Act (NAIIA) in the U.S. The Act authorises nearly $6.5 billion across the next five years for AI research and development.

The new law is likely to result in significant improvements to federal support for safety-related AI research, an outcome FLI will continue to advocate for in the coming year. For instance, it authorises $390 million for the National Institute of Standards and Technology to support the development of a risk-mitigation framework for AI systems as well as guidelines to promote trustworthy AI systems.

FLI’s President Max Tegmark Spoke at a Symposium on Responsible AI

On 16th December 2020, Max Tegmark spoke at a European symposium on “Responsible Artificial Intelligence” hosted by NORSUS Norwegian Institute for Sustainable Research and INSCICO. Accompanied by a distinguished panel of key actors in the fields of ethics and AI, Max presented on “Getting AI to work for democracy, not against it.”

New Podcast Episodes

The Future of Life Award 2020 Podcast; Saving 200,000,000 Lives by Eradicating Smallpox

In its last century, smallpox killed around 500 million people. Its eradication in 1980, due in large part to the efforts of William Foege and Viktor Zhdanov, is estimated to have saved 200 million lives – so far. In the Future of Life Award 2020 podcast, we are joined by William Foege and Viktor Zhdanov’s sons, Viktor and Michael, to discuss Foege’s and Zhdanov’s personal background, their contributions to the global efforts to eradicate smallpox, the history of smallpox itself and, more generally, issues of biology in the 21st century, including COVID-19, bioterrorism and synthetic pandemics.

Sean Carroll on Consciousness, Physicalism, and the History of Intellectual Progress

In this episode of the Future of Life Podcast, theoretical physicist Sean Carroll joins us to discuss the intellectual movements that have at various points changed the course of human progress, including the Age of Enlightenment and Scientific Revolution, the metaphysical theses that have arisen from these movements, including spacetime substantivalism and physicalism, and how these theses bear on our understanding of free will and consciousness. The conversation also touches on the roles of intuition and data in our moral and epistemological frameworks, the Many-Worlds interpretation of quantum mechanics and the importance of epistemic charity in conversational settings.

News & Opportunities

Are you a Superforecaster? Register for Metaculus’ AI Prediction Tournament

Metaculus, a community dedicated to generating accurate predictions about real-world future events by collecting and aggregating the collective wisdom and intelligence of its participants, has launched a large-scale, comprehensive forecasting tournament dedicated to predicting advances in artificial intelligence.

The tournament is sponsored by Open Philanthropy. Metaculus’ aim is to build an accurate map of the future of AI by collecting a massive dataset of AI forecasts over a range of time-frames and then training models to aggregate those forecasts. In addition to contributing insights about the development of AI, participants put themselves in the running for cash prizes that total $50,000 in value.

Round One is now open. Register here.

With GPT-3, OpenAI showed that a single deep-learning model could be trained to use language – to produce sonnets or code – by providing it with masses of text. It went on to show that by substituting text with pixels, AI could be trained to complete unfinished images.

Now, they’ve announced DALL·E, a 12-billion-parameter version of GPT-3 trained to generate images from text descriptions (e.g. “an armchair in the shape of an avocado”), using a dataset of text–image pairs. DALL·E does not appear to be simply regurgitating images, as some might have worried, since its responses to unusual prompts (“a snail made of a harp”) are as impressive as its responses to rather ordinary ones. Indeed, its ability to manage bizarre prompts is suggestive of its ability to perform zero-shot visual reasoning.

The function of a protein is closely linked with its unique 3D shape. The protein folding problem in biology is the challenge of trying to predict a protein’s shape. If biologists were able to figure out proteins’ shape, this would pave the way for a multitude of breakthroughs, including the development of treatments for diseases.

And now, the Critical Assessment of Protein Structure Prediction, a biennial blind assessment intended to identify state-of-the-art technology in protein structure prediction, has recognised DeepMind’s AI system AlphaFold as the solution to the protein folding problem. It has been called a ‘once in a generation advance’ by Calico’s Founder and CEO, Arthur Levinson, and is indicative of ‘how computational methods are poised to transform research in biology.’