The Impact of AI in Education: Navigating the Imminent Future


This post comes from a member of our AI Existential Safety Community.

Artificial Intelligence (AI) is rapidly transforming many sectors, and education is no exception. As AI technologies become more integrated into educational systems, they offer both opportunities and challenges. This article focuses on the strategic decisions that must be made about this technology in educational settings, and on the impact it is having on children. That impact includes both what we should be teaching in a future where AI is embedded throughout society, and the shorter-term risks of students using tools such as Snapchat’s ‘My AI’ without understanding the data and privacy risks.

This blog post explores the impact of AI in education, drawing insights from recent conferences and my experiences as an AI in Education consultant in the UK.

AI in Education: A Global Perspective

In early September, I attended the UN Education Conference in Paris. The summit highlighted both the positive and negative effects of AI and discussed ways to mitigate its harms and support its benefits.

Countries such as Australia and South Korea have been particularly successful at integrating AI technologies into real-world applications. For instance, many Australian states have developed large-scale Copilots built on Azure data sets, which provide strategic support for learners while collecting new data as they use educational chatbots (a step towards “personalized” learning). This is an important stepping stone for education: in the past, data sets were held by individual schools, whereas this collective approach to holding and using data at a strategic level opens up more opportunities for targeted applications. However, it also increases vulnerability to cyber threats and to the broader impacts of AI misalignment if it is not monitored thoroughly.

During the conference, Mistral AI and Apple highlighted the major differences between AI models that arise from how they are trained. Mistral emphasized that its model was trained to be multilingual, rather than trained in English and then translated. This has a significant impact on the learning interface for students and underscores how subtle differences in training data or techniques can have major effects on educational outcomes. Educational institutions across the world must consider which tools best align with their students’ outcomes and unique learning needs, rather than rushing to acquire new technologies that are misaligned with their values.

Collaboration Between Educators and Policymakers to Shape the Future of Education

Although UNESCO’s guidance on AI in education is brilliant, there is a disconnect between those who write policy for the education sector and those who actually work in education. During my time in Paris, I observed that many educators are already well-versed in using AI tools, while policymakers were in awe of basic custom chatbots such as those built with Microsoft Copilot Studio, Custom GPTs, or Google Gems. This concerns me because the technical and pedagogical understanding of AI’s potential in education is held mostly by those working in schools, a sector where technology is often poorly funded, rather than by those shaping policy.

There must be more opportunities for alignment between educators and government going forward. Stakeholder meetings that involve education experts should not be limited to university researchers but should also include teachers who use these tools regularly. Without this, governance will continue to miss out on the detailed knowledge of those working in the education sector and will rely simply on statistical research or researchers’ interpretations.

Deepfakes

Another concern highlighted in Paris was the rise of deepfakes. I have seen their impact in schools in South Korea and the UK, where students can easily generate deepfakes of their peers and teachers. This is an area that policymakers must address. Many schools are trying to tackle the problem “in house” through wellbeing programs, while others have taken a more innovative approach. In China, for example, some schools have created deepfakes of their headteachers to help students understand the potential risks and harms of the technology. Google DeepMind’s SynthID, a watermarking technology for identifying AI-generated content that has been featured in the journal Nature, may help increase the transparency of such content.

Innovative Tools and Ethical Considerations

Whilst serving on an advisory panel with Microsoft, helping to develop AI tools that are grounded in pedagogy and centered on user safety, I have seen a range of innovative tools that will transform the education landscape. One of the most significant developments is Microsoft 365 Copilot, which is set to revolutionize teaching practices. This product can mark assignments, create lesson resources, and conduct data analytics within the Microsoft 365 suite, which many schools already use. I believe it has the potential to solve the global teaching shortage by streamlining the administrative side of education, allowing teachers to focus solely on pedagogy and pastoral care.

However, there are concerns about the dominance of Microsoft and Google in the education space. Although both companies draw on extensive support and input from educators, there are questions about transparency and ethics in the design of their products. Additionally, many student-facing chatbots are designed for rote learning, yet we live in a time when creativity and critical thinking are more important than ever. This highlights the need for more governance and oversight of AI in education.

Data and Cybersecurity

Data and cybersecurity are significant concerns in education. Although many schools have moved to cloud computing to make systems more secure, the centralization of data by governments and councils, such as in Australia, creates enticing targets and vulnerabilities for hackers.

A further concern about this data, which includes emotional, social, and medical information, is how it is used by companies and by the AI tools themselves. Microsoft explicitly states that this data is not used by Microsoft and is not shared with OpenAI, a company that has been slow to report its own data breaches. Google does not provide a clear explanation of how its education data is held.

We must also ask how we can ensure that this information does not produce biased outputs. Could the echo chamber of a student’s “personalized” chatbot actually alter their worldview in detrimental ways? The more personalized these models become for students, the higher the risk.

Although companies such as Microsoft and OpenAI have measured AI usage among adults well, how young people use generative AI is not well understood globally. Snapchat’s My AI has been used by more than 150 million users so far, the majority of whom are young people in the USA and UK. Some education studies also show widespread use of ChatGPT by young people. Because young people often use these tools without the commercial data protection offered by certain organizations, and given Snapchat’s lack of adequate privacy controls, data protection, and content filters, there is growing concern in the education community about the impact this could have.

To address this, we need parent consultation and digital safety education. These companies must also be held accountable for allowing children to access AI tools without suitable privacy and safety controls. So far, this has not been a priority for all governments, companies, and policymakers involved in AI development, with economic growth being prioritized over children’s safety. This is illustrated by the veto of SB 1047, a California bill that aimed to regulate advanced AI systems to prevent catastrophic harms. It would have done this by requiring developers to conduct risk assessments and implement emergency shutdown mechanisms, and by providing whistleblower protections. California Governor Gavin Newsom vetoed the bill on September 29, 2024, arguing that by focusing only on large-scale models it might give a false sense of security, and that it could stifle innovation. This veto highlights that children’s digital safety is not a priority for those in governance, so it must become a greater priority for those working with young people.

A Curriculum for the Future

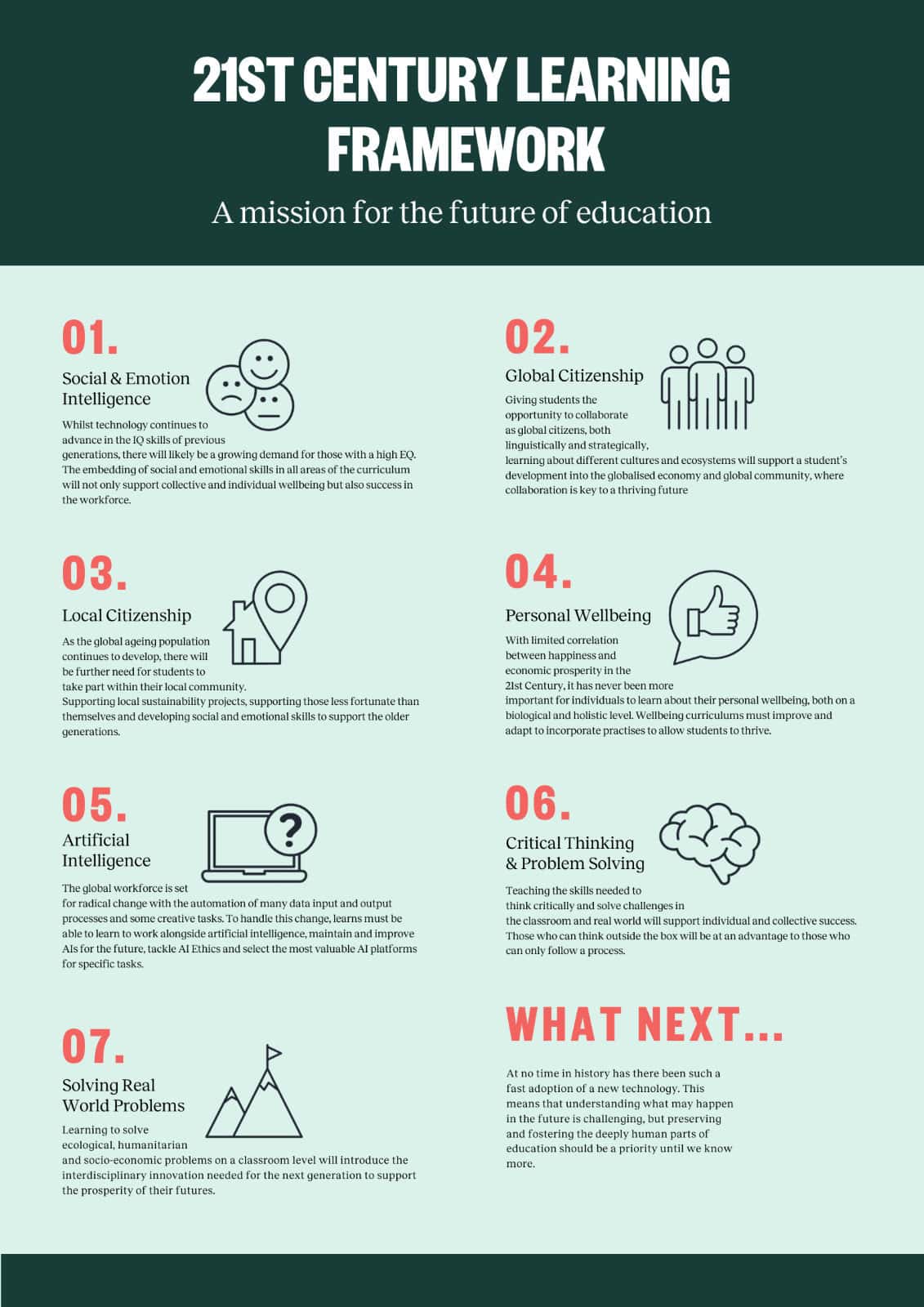

Beyond the practical deployment of AI in education lies the question of what we should be teaching young people today. Many have raised this question, with answers provided by The Economist, the World Economic Forum, and Microsoft. However, education must move beyond the economic focus the sector has today, with curricula centered on economic growth, and instead educate children holistically in a way that will enable them to thrive in the future. UNESCO provides an outstanding guideline for this, as shown in the image above.

Clifton High School in the UK has launched a course designed to implement this framework, with students learning fundamental skills such as emotional intelligence, citizenship, innovation, and collaboration across cultures, as well as learning about AI. This “Skills for Tomorrow” course aims to provide a proof of concept for schools across the world to replicate, offering an education that complements the changes we are seeing in the 21st century. It is vital that governments and policymakers take action to reduce the risk of the next generation being left with redundant skills.

Access and Socio-Economic Divides

Access is a global issue in education that exacerbates socio-economic divides. With 2.9 billion people still offline and major technological divides within countries, those who are able to learn how to use AI in school will benefit from these tools, while those who cannot will be left behind. During my time at the UN, however, I learned about promising projects in this area. One example is the AU Continental Strategy for Africa (AUCSA), which aims to focus education in African countries on capturing the advantages of artificial intelligence. It hopes to harness the adaptability of Africa’s young population, with 70% of African countries having an average age below 30. I also saw AI being used to support social and emotional learning in Sweden and Norway.

However, Everisto Benyera argues in “The Fourth Industrial Revolution and the Recolonisation of Africa” that strategies such as the AUCSA could be hijacked by technology companies and lead to data and employment colonization. This would create asymmetrical power relations (an uneven distribution of resources and knowledge) and further challenges for global equality in the education sector.

Conclusion

There is a lot to be optimistic about as AI becomes integrated into education, but the pace of that integration could lead to detrimental outcomes because of inadequate governance, outdated national curricula, and teachers who are not yet prepared. Governments must act, not simply theorize, to prepare the education sector globally for this technology. Its rapid development has left even edtech experts chasing a train of innovation.

Positive legislative efforts, such as the EU AI Act, which builds on the EU’s General Data Protection Regulation (GDPR), will put safety at the forefront of further AI testing and ensure that steps are taken to prevent children’s data from being misused. While companies that abide by such laws should be praised, those designing education tools with safety as a secondary objective must realign their values.

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014.
