FLI August, 2018 Newsletter

State of California Endorses Asilomar AI Principles

Our August newsletter is coming to you a bit later than normal because we had some big news come through at the end of the month:

On August 30, the State of California unanimously adopted legislation in support of the Future of Life Institute’s Asilomar AI Principles.

The Asilomar AI Principles are a set of 23 principles intended to promote the safe and beneficial development of artificial intelligence. The principles, which cover research issues, ethics and values, and longer-term issues, have been endorsed by AI research leaders at Google DeepMind, Google Brain, Facebook, Apple, and OpenAI. Signatories include Demis Hassabis, Yoshua Bengio, Elon Musk, Ray Kurzweil, the late Stephen Hawking, Tasha McCauley, Joseph Gordon-Levitt, Jeff Dean, Tom Gruber, Anthony Romero, Stuart Russell, and more than 3,800 other AI researchers and experts.

With ACR 215 passing the State Senate with unanimous support, the California Legislature has now been added to that list.

Assemblyman Kevin Kiley, who led the effort, said, “By endorsing the Asilomar Principles, the State Legislature joins in the recognition of shared values that can be applied to AI research, development, and long-term planning, helping to reinforce California’s competitive edge in the field of artificial intelligence, while assuring that its benefits are manifold and widespread.”

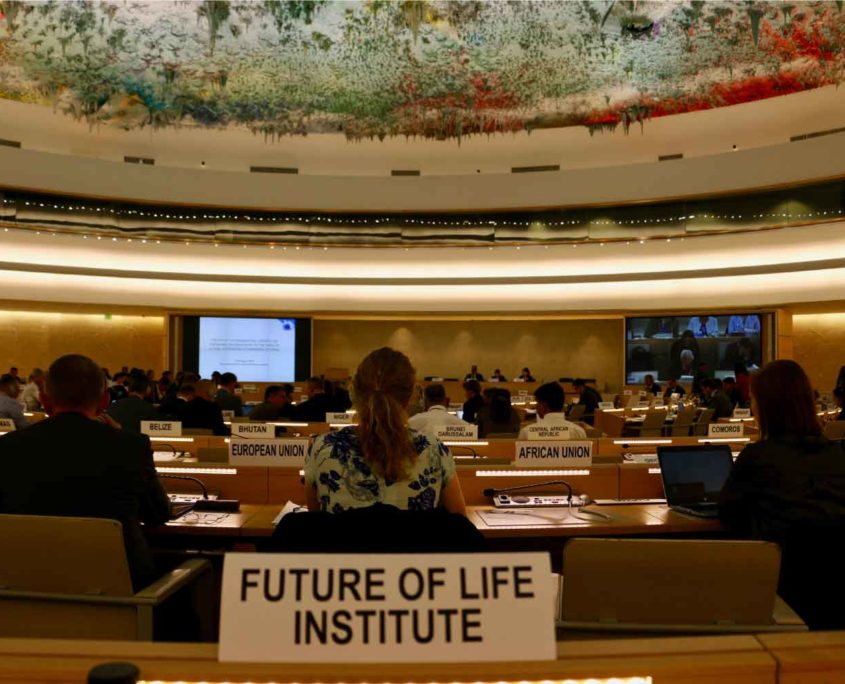

FLI at the United Nations

During the last week of August, the Convention on Certain Conventional Weapons (CCW) Group of Governmental Experts (GGE) on lethal autonomous weapons systems (LAWS) met at the United Nations in Geneva to discuss, essentially, the future of its own discussions. This was the second of two meetings scheduled for the CCW GGE this year; the first took place in April.

The group considered three options for next year: negotiating a ban on LAWS, working toward a political declaration, or continuing to talk in the same format as this year.

FLI participated in the discussions this year, delivering a statement on the floor of the UN on behalf of all the scientists who have signed our Pledge and Open Letter against LAWS.

The CCW acts only by consensus. So although the majority of countries have now called for discussions to move forward to consider a ban on LAWS, a small handful of countries have been able to obstruct the process and prevent progress. At the close of this meeting, it appeared that the CCW GGE would continue the same discussions next year. The group will meet again briefly in November to confirm its plans for 2019.

Learn more:

- about the risks of LAWS, in this piece Ariel wrote for Metro.

- about last week’s CCW meeting, in the daily updates compiled by Reaching Critical Will.

- about LAWS, their history, and their ethics, in this recent FLI podcast: Six Experts Explain the Killer Robots Debate.

WINNERS of the 2018 Earth Day Short Fiction Contest

Sponsored by Sapiens Plurum and the Future of Life Institute

Can we rewrite our children’s history? This year, Sapiens Plurum and FLI co-hosted a short fiction contest about the future of life on Earth. You can see the first- and second-place winners here!

Listen: Podcasts on AI Alignment & AI Policy

The Metaethics of Joy, Suffering, and Artificial Intelligence

with Brian Tomasik and David Pearce

What role does metaethics play in AI alignment and safety? How do issues in moral epistemology, motivation, and justification affect value alignment? What might be the metaphysical status of suffering and pleasure?

Topics discussed in this episode include:

- Moral realism vs antirealism

- Emotivism

- Moral status of hedonic tones vs preferences

- Moving forward given moral uncertainty

Artificial Intelligence – Global Governance, National Policies, and Public Trust

with Allan Dafoe and Jessica Cussins

Some experts predict that artificial intelligence could become the most transformative innovation in history, eclipsing both the development of agriculture and the industrial revolution. How can local, national, and international governments prepare for such dramatic changes and help steer AI research and use in a more beneficial direction?

On this month’s podcast, Ariel spoke with Allan Dafoe and Jessica Cussins. Allan is the Director of the Governance of AI Program at the Future of Humanity Institute, and Jessica is an AI Policy Specialist with the Future of Life Institute.

Topics discussed in this episode include:

- Three lenses through which to view AI’s transformative power

- Emerging international and national AI governance strategies

- The importance of public trust in AI systems

- The dangers of an AI race

If you want to learn more about emerging national and international AI strategies, policy recommendations and principles for AI, and suggested reading materials, check out FLI’s AI policy resource, compiled by Jessica Cussins.

AI Safety Research Highlights

Governing AI: An Inside Look at the Quest to Regulate Artificial Intelligence

By Jolene Creighton

Governments and policy experts have begun to realize that we need to start planning today for the arrival of advanced AI systems. Nick Bostrom’s Strategic Artificial Intelligence Research Center aims to help by understanding, and ultimately shaping, the strategic landscape of long-term AI development on a global scale.

What We’ve Been Up to This Month

Ariel Conn participated in the UN CCW meeting in Geneva at the end of the month. She was a panelist during a side event hosted by the Campaign to Stop Killer Robots, where she spoke about scientists’ support for a ban. Later in the week, she read a statement on the floor of the UN on behalf of FLI.

Earlier in the month, Ariel also helped organize and moderate a two-day meeting in Pretoria, South Africa, for local and regional UN delegates on the nuclear ban treaty and lethal autonomous weapons systems (LAWS). She also gave the delegates a talk on nuclear winter.

Jessica Cussins participated in the second meeting of the Partnership on AI’s Working Group on Fair, Transparent, and Accountable AI, held in Princeton, New Jersey. She also testified on behalf of FLI at a State Senate committee hearing in Sacramento, California.

FLI in the News

THE VERGE: Inside the United Nations’ Effort to Regulate Autonomous Killer Robots

ZDNET: What is artificial general intelligence?

GOVERNMENT CIO MEDIA: No Killer Robots, OK, But What Else Should AI Promise Not to Do?

BULLETIN OF THE ATOMIC SCIENTISTS: JAIC: Pentagon debuts artificial intelligence hub

If you’re interested in job openings, research positions, and volunteer opportunities at FLI and our partner organizations, please visit our Get Involved page.