
U.S. Mayors Support Cambridge Nuclear Divestment

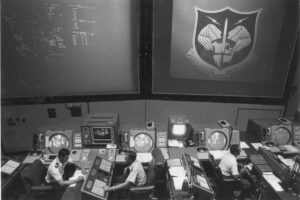

Cambridge Mayor Denise Simmons announced the city’s $1 billion divestment from nuclear weapons at the MIT nuclear conference in April.

On June 28th, the United States Conference of Mayors unanimously adopted a resolution that calls on the next U.S. President to “pursue diplomacy with other nuclear-armed states,” to “participate in negotiations for the elimination of nuclear weapons,” and to “cut nuclear weapons spending and redirect funds to meet the needs of cities.” In the resolution, they officially commended “Mayor Denise Simmons and the Cambridge City Council for demonstrating bold leadership at the municipal level by unanimously deciding on April 2, 2016 to divest their one-billion-dollar city pension fund from all companies involved in production of nuclear weapons systems and in entities investing in such companies.”

In response to this news, Mayor Simmons told FLI, “I am honored to receive such commendation from the USCM, and I hope this is a sign that nuclear divestment is just getting started in the United States. Divestment is an important tool that politicians and citizens alike can use to send a powerful message that we want a world safe from nuclear weapons.”

Read more about the USCM resolution.

Some of the greatest minds in science and policy also spoke at the MIT conference.

Their videos can all be seen on the FLI YouTube channel, including:

William Perry’s Nuclear Nightmare

Former Secretary of Defense William Perry presents his nuclear nightmare and discusses why he believes the risk of nuclear catastrophe is greater now than it was at any time during the Cold War.

Joe Cirincione: Current Nuclear Policy

Joe Cirincione talks about how and why Obama’s nuclear policy failed and what we need to do to move forward more successfully in the future.

Max Tegmark: Accidental Nuclear War

Max Tegmark uses visual data to show how many nuclear weapons we have today, how damaging they can be, and how close we are to launching an accidental nuclear war.

ICYMI: This Month’s Most Popular Articles

The White House Considers the Future of AI

By Ariel Conn

Artificial intelligence may be on the verge of changing the world forever, but our current federal system is woefully unprepared to deal with both the benefits and the challenges these advances will bring. To address these concerns, the White House is hosting four conferences about AI. The first two are discussed here.

The Collective Intelligence of Women Could Save the World

By Phil Torres

Today’s greatest existential risks stem from advanced technologies like nuclear weapons, biotechnology, synthetic biology, nanotechnology, and even artificial superintelligence. These are all problems that can be solved, but according to a paper in Science, we’ll increase our odds of survival if we get more women involved.

Will We Use the New Nuclear Weapons?

By Annie Mehl

The B61-12 is part of the government’s $1 trillion plan to redesign older nuclear weapons to be both more accurate and more lethal. The weapon uses dial-a-yield technology, which lets it be set to a range of explosive yields, including one nearly 50 times smaller than the Hiroshima bomb. Experts believe this low-yield option may make us more likely to use the new nukes.

The Trillion Dollar Question Obama Left Unanswered in Hiroshima

By Max Tegmark and Frank Wilczek

Should we spend a trillion dollars to replace each of our thousands of nuclear warheads with a more sophisticated substitute attached to a more lethal delivery system? Or should we keep only as many nuclear weapons as are needed for a devastatingly effective deterrent against any nuclear aggressor, and invest the money saved in other means of making our nation more secure?

Digital Analogues (Intro): Artificial Intelligence Systems Should Be Treated Like…

By Matt Scherer

Because autonomous A.I. systems operate with less human supervision and control than earlier technologies, their rising prevalence raises the question of how legal systems can ensure that victims receive compensation if (read: when) an A.I. system causes physical or economic harm during the course of its operations.

New AI Safety Research Agenda From Google Brain

By Viktoriya Krakovna

Google Brain just released an inspiring research agenda, Concrete Problems in AI Safety, co-authored by researchers from OpenAI, Berkeley and Stanford. This document is a milestone in setting concrete research objectives for keeping reinforcement learning agents and other AI systems robust and beneficial.