Poll Shows Broad Popularity of CA SB1047 to Regulate AI

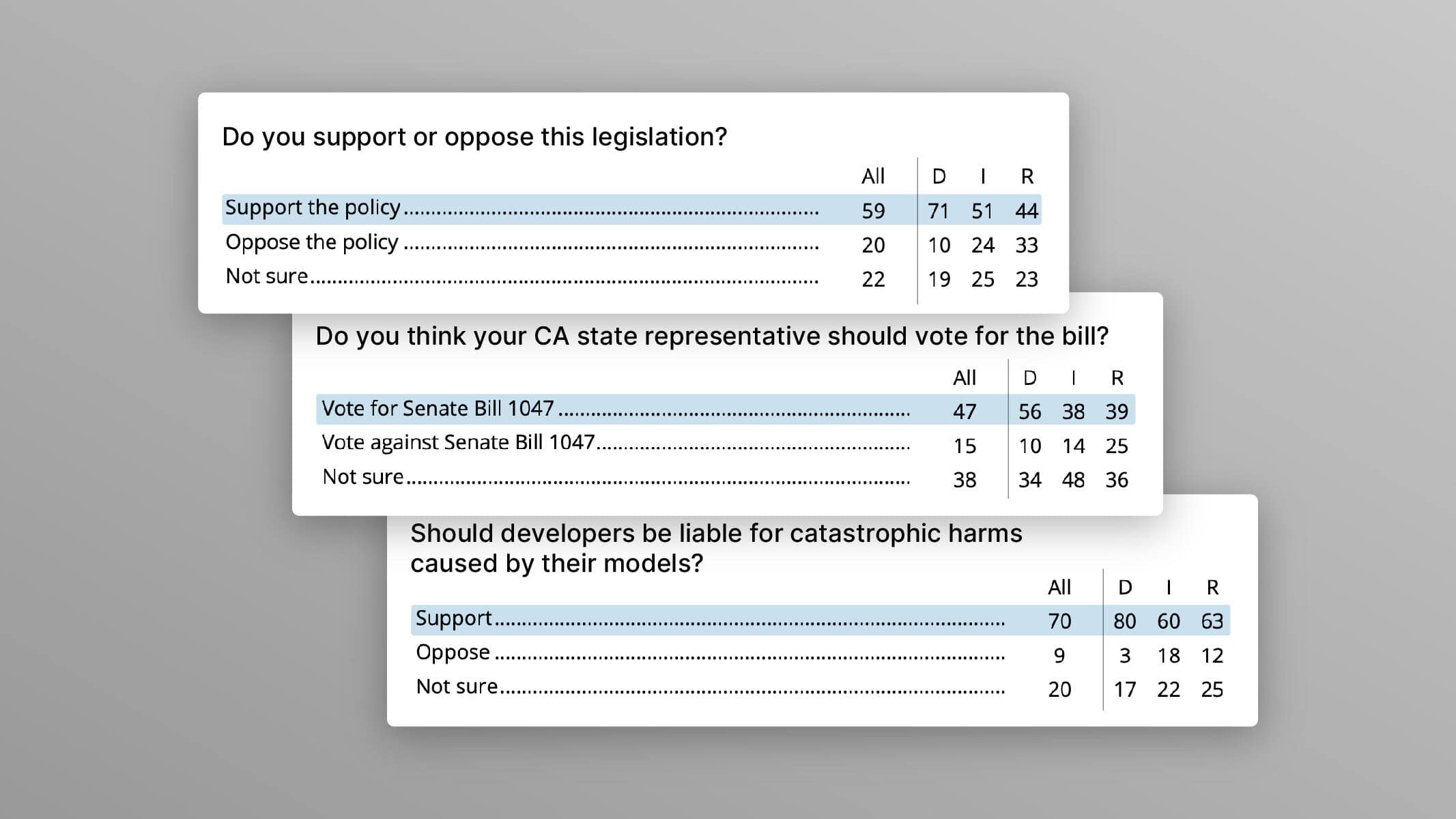

We are releasing a new poll from the AI Policy Institute (view the executive summary and full survey results) showing broad and overwhelming support for SB1047, Sen. Scott Wiener’s bill to evaluate whether the largest new AI models create a risk of catastrophic harm, which is currently moving through the California state house. The poll shows that 59% of California voters support SB1047, while only 20% oppose it. Notably, 64% of respondents who work in the tech industry support the policy, compared to just 17% who oppose it.

Recently, Sen. Wiener sent an open letter to Andreessen Horowitz and Y Combinator dispelling misinformation that has been spread about SB1047, including that it would send model developers to jail for failing to anticipate misuse and that it would stifle innovation. The letter points out that the “bill protects and encourages innovation by reducing the risk of critical harms to society that would also place in jeopardy public trust in emerging technology.” Read Sen. Wiener’s letter in full here.

Anthony Aguirre, Executive Director of the Future of Life Institute:

“This poll is yet another example of what we’ve long known: the vast majority of the public support commonsense regulations to ensure safe AI development and strong accountability measures for the corporations and billionaires developing this technology. It is abundantly clear that there is a massive, ongoing disinformation effort to undermine public support and block this critical legislation being led by individuals and companies with a strong financial interest in ensuring there is no regulation of AI technology. However, today’s data confirms, once again, how little impact their efforts to discredit extremely popular measures have been, and how united voters–including tech workers–and policymakers are in supporting SB1047 and in fighting to ensure AI technology is developed to benefit humanity.”

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.
