Contents

Welcome to the Future of Life Institute newsletter. Every month, we bring 27,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

If you’ve found this newsletter helpful, why not tell your friends, family and colleagues to subscribe here?

Today’s newsletter is a 5-minute read. We cover:

- The continuing impact of our open letter advocating for a pause on training large models

- Why we’re living in the movie Don’t Look Up

- The AI Now Institute 2023 Annual Report

- How Dr. Matthew Meselson helped ban biological weapons

- The NLP community’s thoughts about AI existential risk

Open Letter Impact!

FLI’s open letter – calling on AI labs to temporarily pause training AI models more powerful than GPT-4 – continues to have a policy and cultural impact.

▶ Members of the European Parliament respond: Spurred on by FLI’s open letter, a group of twelve European lawmakers are calling for tailored rules for foundation models in the EU AI Act, a high-level global summit on AI, more democratic oversight and international cooperation, and responsible corporate behaviour.

▶ International standards organisations call for dialogue: Leaders of the ISO, the IEC, and the ITU – three leading standard-setting organisations – cited FLI’s letter in their call for industry and civil society stakeholders to engage with technical standards as a means of mitigating AI harms.

▶ The US Senate convenes experts: Members of the US Senate Subcommittee on Cybersecurity and expert witnesses discussed FLI’s open letter during a hearing on the use of AI and Machine Learning in defence.

▶ AI safety goes mainstream: Our letter has led to intense media coverage and debate about AI safety in publications like the New York Times, Fox, Time, NBC, Vox, The Financial Times, and The Economist.

If you’re interested in a longer discussion about the open letter, watch FLI President Max Tegmark on the Lex Fridman podcast:

Governance and Policy Updates

AI policy:

▶ The US Department of Commerce is inviting public comments on how to make AI safer and more transparent.

▶ The European Data Protection Board created a ‘GPT Task Force’.

▶ China’s cyberspace regulator unveiled new draft rules to regulate generative AI systems.

Nuclear security:

▶ The G7 Foreign Ministers Communique called for ‘the immediate commencement of long-overdue negotiations of a treaty banning the production of fissile material for nuclear weapons or other nuclear explosive devices.’

Updates from FLI

▶ FLI President Max Tegmark argued in Time Magazine that the global reaction to the existential threat from unaligned super-intelligence is just like the movie Don’t Look Up – a satirical film about society collectively failing to address the threat of an impending asteroid strike.

▶ Deutsche Welle interviewed three signatories to FLI’s open letter – FLI co-founder Jaan Tallinn, Nell Watson, and Vincent Conitzer – to discuss the state of AI, the alignment problem, and the reasons for concern.

▶ Our podcast host Gus Docker interviewed Lennart Heim about compute governance and Connor Leahy about the alignment problem. Watch both episodes here:

▶ FLI published a policy brief outlining concrete steps that regulators could take to ensure that powerful AI systems are developed and deployed safely. Read the policy brief here:

New Research: AI Now Institute 2023 Annual Report

AI and Big Tech: The AI Now Institute published its 2023 Annual Report outlining, among other things, the concentration of power in AI development, new solutions for algorithmic accountability, and how geopolitical dynamics shape AI deployment.

Why this matters: The use of AI systems affects so many different political, economic and national security arrangements that regulators are often left scrambling for tools to govern them. The AI Now report helpfully points lawmakers to existing tools and new solutions that can address these challenges.

General Purpose AI: The AI Now Institute also joined 50 leading AI scientists and experts in calling for the EU not to exempt General Purpose AI from the AI Act. Read their letter and brief here.

What We’re Reading

▶ The Pathogens Project: The Bulletin of the Atomic Scientists’ Pathogens Project convened a group of scientists and public health leaders to discuss how the international community could improve oversight of dangerous pathogens research. Watch the proceedings here.

▶ Sidelining Responsible AI: The Financial Times reports that many Big Tech firms are cutting or downsizing AI ethics staff even as they accelerate the deployment of large language models.

▶ Nuclear Weapons Ban Monitor: The international community made excellent progress on the Treaty on the Prohibition of Nuclear Weapons (TPNW) in 2022. According to the International Campaign to Abolish Nuclear Weapons, ‘just four more states need to sign or accede to the TPNW to exceed 50% of the world’s states committing to the treaty.’

Hindsight is 20/20

On April 9, 1972, the Biological Weapons Convention – an international ban on one of the most inhumane forms of warfare known to humanity – was opened for signatures.

Dr. Matthew Meselson was the driving force behind this convention. Concerned by the US’ research into and production of anthrax, Meselson campaigned tirelessly within the US and internationally to stop these weapons of mass destruction.

Meselson’s long career is studded with highlights: proving Watson and Crick’s hypothesis on DNA structure, solving the Sverdlovsk Anthrax mystery, ending the use of Agent Orange in Vietnam. But it is above all his work on biological weapons that makes him an international hero.

In 2019, we presented him with the Future of Life Award to recognise his contribution to humanity. Take a minute today to learn about and share his story!

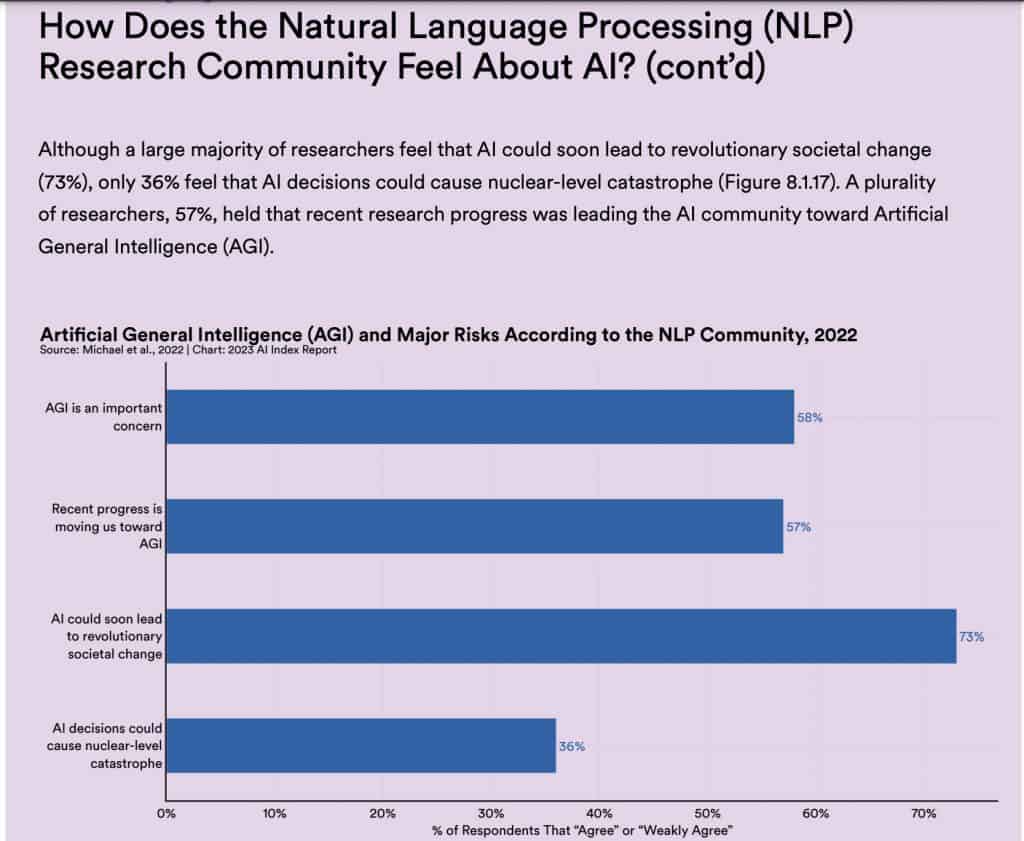

Chart of the Month

The Stanford Institute for Human-Centered Artificial Intelligence published its 2023 AI Index Report. This figure reports the results of a small survey of the concerns that NLP researchers have about AI.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax exempt in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.