Rohin Shah on the State of AGI Safety Research in 2021

- Inner Alignment Versus Outer Alignment

- Foundation Models

- Structural AI Risks

- Unipolar Versus Multipolar Scenarios

- The Most Important Thing That Impacts the Future of Life

Watch the video version of this episode here

00:00:00 Intro

00:02:22 What is AI alignment?

00:06:45 How has your perspective of this problem changed over the past year?

00:07:22 Inner Alignment

00:15:35 Ways that AI could actually lead to human extinction

00:22:50 Inner Alignment and mesa optimizers

00:24:15 Outer Alignment

00:27:32 The core problem of AI alignment

00:29:38 Learning Systems versus Planning Systems

00:34:00 AI and Existential Risk

00:38:59 The probability of AI existential risk

01:04:10 Core problems in AI alignment

01:03:07 How has AI alignment, as a field of research changed in the last year?

01:05:57 Large scale language models

01:06:55 Foundation Models

01:15:30 Why don't we know that AI systems won't totally kill us all?

01:23:50 How much of the alignment and safety problems in AI will be solved by industry?

01:31:00 Do you think about what beneficial futures look like?

01:39:44 Moral Anti-Realism and AI

01:46:22 Unipolar versus Multipolar Scenarios

01:56:38 What is the safety team at DeepMind up to?

01:57:30 What is the most important thing that impacts the future of life?

Transcript

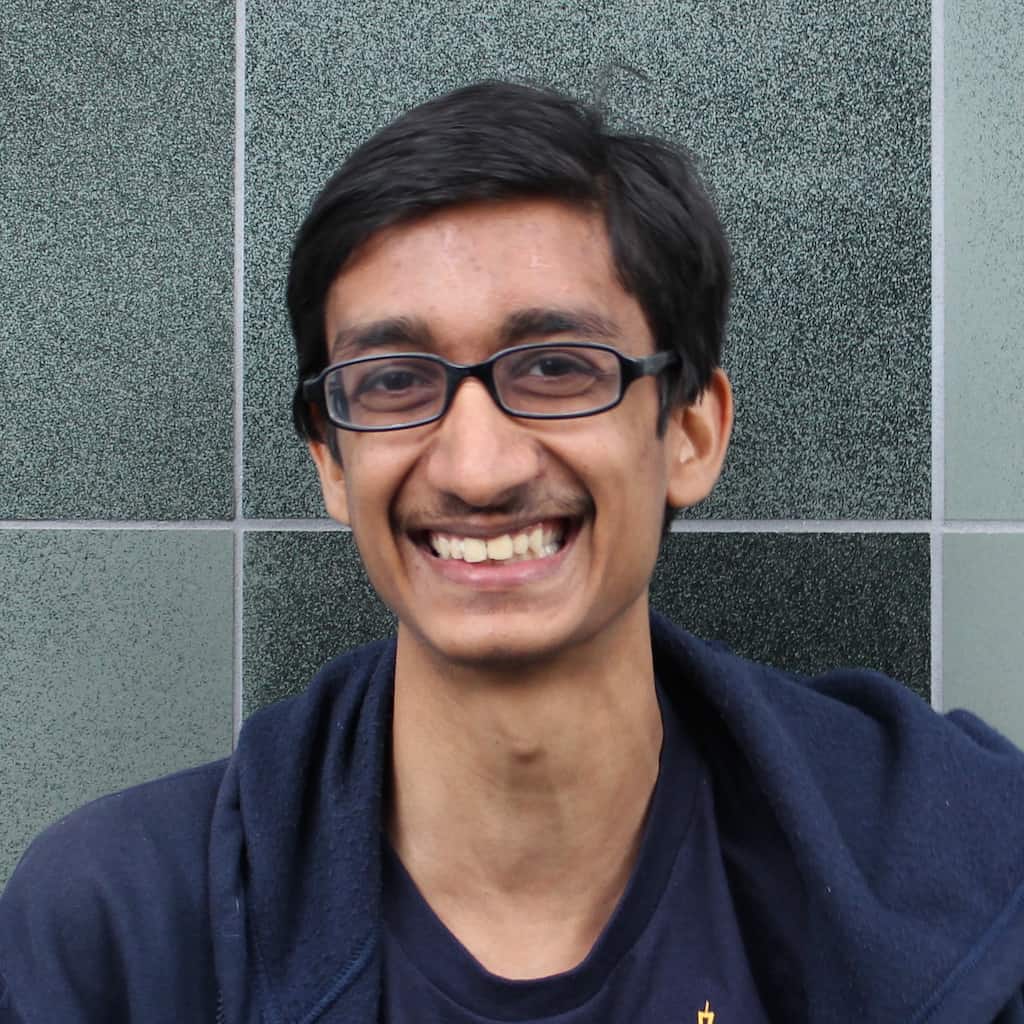

Lucas Perry: Welcome to the Future of Life Institute Podcast. I'm Lucas Perry. Today's episode is with Rohin Shah. He is a long-time friend of this podcast, and this is the fourth time we've had him on. Every time we talk to him, he gives us excellent overviews of the current thinking in technical AI alignment research. And in this episode he does just that. Our interviews with Rohin go all the way back to December of 2018. They're super informative and I highly recommend checking them out if you'd like to do a deeper dive into technical alignment research. You can find links to those in the description of this episode.

Rohin is a Research Scientist on the technical AGI safety team at DeepMind. He completed his PhD at the Center for Human-Compatible AI at UC Berkeley, where he worked on building AI systems that can learn to assist a human user, even if they don't initially know what the user wants.

Rohin is particularly interested in big picture questions about artificial intelligence. What techniques will we use to build human-level AI systems? How will their deployment affect the world? What can we do to make this deployment go better? He writes up summaries and thoughts about recent work tackling these questions in the Alignment Newsletter, which I highly recommend following if you're interested in AI alignment research. Rohin is also involved in Effective Altruism, and out of concern for animal welfare, is almost vegan.

And with that, I'm happy to present this interview with Rohin Shah.

Welcome back Rohin. This is your third time on the podcast I believe. We have this series of podcasts that we've been doing, where you help give us a year-end review of AI alignment and everything that's been up. You're someone I view as very core and crucial to the AI alignment community. And I'm always happy and excited to be getting your perspective on what's changing and what's going on. So to start off, I just want to hit you with a simple, not simple question of what is AI alignment?

Rohin Shah: Oh boy. Excellent. I love that we're starting there. Yeah. So different people will tell you different things for this as I'm sure you know. The framing I prefer to use is that there is a particular class of failures that we can think about with AI, where the AI is doing something that its designers did not want it to do. And specifically it's competently achieving some sort of goal or objective or some sort of competent behavior that isn't the one that was intended by the designers. So for example, if you tried to build an AI system that is, I don't know, supposed to help you schedule calendar events and then it like also starts sending emails on your behalf to people which maybe you didn't want it to do. That would count as an alignment failure.

Whereas if a terrorist somehow makes an AI system that goes and detonates a bomb in some big city, that is not an alignment failure. It is obviously bad, but the AI system did what its designer intended for it to do. It doesn't count as an alignment failure on my definition of the problem.

Other people will see AI alignment as synonymous with AI safety. For those people, terrorists using a bomb might count as an alignment failure, but at least when I'm using the term, I usually mean, the AI system is doing something that wasn't what its designers intended for it to do.

There's a little bit of a subtlety there where you can think of either intent alignment, where you try to figure out what the AI system is trying to do. And then if it is trying to do something that isn't what the designers wanted, that's an intent alignment failure, or you can say, all right, screw all of this notion of trying, we don't know what trying is. How can we look at a piece of code and say whether or not it's trying to do something.

And instead we can talk about impact alignment, which is just like the actual behavior that the AI system does. Is that what the designers intended or not? So if the AI makes a catastrophic mistake where the AI thinks that this is the big red button for happiness and sunshine, but actually it's the big red button that launches nukes, that is a failure on impact alignment, but isn't a failure on the intent alignment, assuming the AI legitimately believed that the button was happiness and sunshine, I think they said.

Lucas Perry: So it seems like you could have one or more or less of these in a system at the same time. So which are you excited about? Which do you think are more important than the others?

Rohin Shah: In terms of what do we actually care about? Which is how I usually interpret important, the answer is just like pretty clearly impact alignment. The thing we care about is, did the AI system do what we want or not? I nevertheless tend to think in terms of intent alignment, because it seems like it is decomposing the problem into a natural notion of like what the AI system is trying to do, and whether the AI system is capable enough to do it. And I think that is like actually a natural division. You can in fact talk about these things separately. And because of that, it makes sense to have research organized around those two things separately. But that is a claim I am making about the best way to decompose the problem that we actually care about. And that is why I focus on intent alignment, but what do we actually care about? Impact alignment, totally.

Lucas Perry: How would you say that your perspective of this problem has changed over the past year?

Rohin Shah: I've spent a lot of time thinking about the problem of inner alignment. So this was this shot up to... I mean, people have been talking about it for a while, but it shot up to prominence in I want to say 2019 with the publication of the mesa optimizers paper. And I was not a huge fan of that framing, but I do think that the problem that it's showing is actually an important one. So I've been thinking a lot about that.

Lucas Perry: Can you explain what inner alignment is and how it fits into the definitions of what AI alignment is?

Rohin Shah: Yeah. So AI alignment, the way I've described it so far is just sort of like pretty, it's just talking about properties of an AI system. It doesn't really talk about how that AI system was built, but if you actually want to diagnose, like give reasons why problems might arise and then how to solve them, you probably want to talk about how the AI systems are built and why they're likely to cause such problems.

Inner alignment, I'm not sure if I like the name, but we'll go with it for now. Inner alignment is a problem that I claim happens for systems that learn. And the problem is, maybe I should explain it with an example. You might have seen this post from LessWrong about bleggs and rubes. These bleggs are blue in color and tend to be egg-shaped in all the cases they've seen so far. Rubes are red in color and are cube-shaped, at least in all the cases they've seen so far.

And now suddenly you see a red egg-shaped thing, is it a blegg or a rube? Like in this case, it's pretty obvious that there isn't a correct answer, and this same dynamic can arise in a learning system where if it is learning how to behave in accordance with whatever we are training it to do, we're going to be training it on a particular set of situations. And if those situations change in the future along some axis that the AI system didn't see during training, it may generalize badly. So a good example of this came from the Objective Robustness in Deep Reinforcement Learning paper. They trained an agent on the CoinRun environment from Procgen. This is basically a very simple platformer game where the agent just has to jump over enemies and obstacles to get to the end and collect the coin.

And the coin is always at the far right end of the level. And so, you train your AI system on a bunch of different kinds of levels, different obstacles, different enemies, they're placed in different ways. You have to jump in different ways, but the coin is always at the end on the right. And it turns out if you then take your AI system and test it on a new level where the coin is placed somewhere else in the level, not all the way to the right, the agent just continues to jump over obstacles, enemies, and so on. It behaves very competently in the platformer game, but it just runs all the way to the right and then stays at the right or jumps up and down as though hoping that there's a coin there. And it's behaving as if it has the objective of go as far to the right as possible.

Even though we trained it on the objective, get the coin, or at least that's what we were thinking of as the objective. And this happened because we didn't show it any examples where the coin was anywhere other than the right side of the level. So the inner alignment problem is when you train a system on one set of inputs, it learns how to behave well on that set of inputs. But then when you extrapolate its behavior to other inputs that you hadn't seen during training, it turns out to do something that's very capable, but not what you intended.
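The CoinRun result described above can be illustrated with a toy sketch. This is not the paper's code or the Procgen environment: the one-dimensional corridor, the two hand-written policies, and every name below are assumptions made purely for illustration. It shows how a "go right" behavior and a "get the coin" behavior are indistinguishable on training levels where the coin always sits at the right end, and only come apart once the coin moves.

```python
# Hypothetical 1-D corridor standing in for a platformer level.
# The agent starts in the middle; a level is (length, coin_position).

def go_right_policy(pos, coin_pos, length):
    """Proxy behavior: always step right, ignoring where the coin is."""
    return min(pos + 1, length - 1)

def get_coin_policy(pos, coin_pos, length):
    """Intended behavior: step toward the coin."""
    if pos < coin_pos:
        return pos + 1
    if pos > coin_pos:
        return pos - 1
    return pos

def collects_coin(policy, coin_pos, length, max_steps=30):
    """Run one episode and report whether the agent ever reaches the coin."""
    pos = length // 2
    for _ in range(max_steps):
        if pos == coin_pos:
            return True
        pos = policy(pos, coin_pos, length)
    return pos == coin_pos

def success_rate(policy, levels):
    wins = sum(collects_coin(policy, coin, length) for length, coin in levels)
    return wins / len(levels)

# Training levels: the coin is always at the far right end.
train_levels = [(length, length - 1) for length in range(6, 13)]
# Test levels: the coin has moved to the far left end.
test_levels = [(length, 0) for length in range(6, 13)]

for name, policy in [("go right", go_right_policy), ("get coin", get_coin_policy)]:
    print(name, success_rate(policy, train_levels), success_rate(policy, test_levels))
```

Both policies succeed on every training level, so training performance alone cannot tell them apart; only the shifted test levels reveal which behavior was actually learned.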

Lucas Perry: Can you give an example of what this could look like in the real world, rather than in like a training simulation in a virtual environment?

Rohin Shah: Yeah. One example I like is, it'll take a bit of setup, but I think it should be fine. You could imagine that with honestly, even today's technology, we might be able to train an AI system that can just schedule meetings for you. Like when someone emails you asking for a meeting, you're just like, here calendar scheduling agent, please do whatever you need to do in order to get this meeting scheduled. I want to have it, you go schedule it. And then it goes and emails the person back saying, Rohin is free at such and such times, he like prefers morning meetings or whatever. And then, there's some back and forth between them, and then the meeting gets scheduled. For concreteness, let's say that the way we do this, is we take a pre-trained language model, like say GPT-3, and then we just have GPT-3 respond to emails and we train it from human feedback.

Well, we have some examples of like people scheduling emails. We do supervised fine tuning on GPT-3 to get it started. And then we like fine tune more from human feedback in order to get it to be good at this task. And it all works great. Now let's say that in 2023, Gmail decides that Gmail also wants to be a chat app. And so it adds emoji reactions to emails, and everyone's like, oh my God, now there's such a better, we can schedule a meeting so much better. We can just say, here, just send an email to all the people who are coming to the meeting and react with emojis for each of the times that you're available. And everyone loves this. This is how people start scheduling meetings now.

But it turns out that this AI system, when it's confronted with these emoji polls is like, it knows, it in theory is capable or knows how to use the emoji polls. It knows what's going on, but it was always trained to schedule the meeting by email. So maybe it will have learned to like always schedule a meeting by email and not to take advantage of these new features. So it might say something like, hey, I don't really know how to use these newfangled emoji polls. Can we just schedule meetings the normal way? In human terms this would be a flat out lie, but from the AI's perspective, we might think of like, the AI was just trained to say whatever sequence of English words leads to getting a meeting scheduled by email. And it predicts that sequence of words will work well. Would this actually happen if I actually trained an agent this way? I don't know, like it's totally possible it would actually do the right thing, but I don't think we can really rule out the wrong thing either, it seems. That also seems pretty plausible to me in this scenario.

Lucas Perry: One important part of this that I think has come up in our previous conversations is that we don't always know when there is an inner misalignment between the system and the objective we would like for it to learn, because part of maximizing the inner aligned objective could be giving the appearance of being aligned with the outer objective that we're interested in. Could you explain and unpack that?

Rohin Shah: Yeah. So in the AI safety community, we tend to think about ways that AI could like actually lead to human extinction. And so, the example that I gave does not in fact lead to human extinction. It is a mild annoyance at worst. The story that gets you to human extinction is one in which you have a very capable, superintelligent AI system. But nonetheless, there's like, instead of learning the objective that we wanted, which might've been, I don't know, something like be a good personal assistant. I'm just giving that out as a concrete example. It could be other things as well. Instead of acting as though it were optimizing that objective, it ends up optimizing some other objective, and I don't really want to give an example here because the whole premise is that it could be a weird objective we don't really know.

Lucas Perry: Could you expand that a little bit more, like how it would be a weird objective that we wouldn't really know?

Rohin Shah: Okay. So let's take as a concrete example, let's make paperclips, which has nothing to do with being a personal assistant. Now, why is this at all plausible? The reason is that even if this superintelligent AI system had the objective to make paperclips, during training, while we are in control, it's going to realize that if it doesn't do the things that we want it to do, we're just going to turn it off. And as a result, it will be incentivized to do whatever we want until it can make sure that we can't turn it off. And then it goes and builds its paperclip empire. And so when I say, it could be a weird objective, I mostly just mean that almost any objective is compatible with this sort of a story. It does rely on-

Lucas Perry: Sorry. I'm also curious if you could explain how the inner state of the system becomes aligned to something that is not what we actually care about.

Rohin Shah: I might go back to the CoinRun example, where the agent could have learned to get the coin. That was a totally valid policy it could have learned. And this is an actual experiment that people have run. So this one is not hypothetical. It just didn't, it learned to go to the right. Why? I mean, I don't know. I wish I understood neural nets well enough to answer those questions for you. I'm not really arguing for, it's definitely going to learn, make paperclips. I'm just arguing for like, there's this whole set of things it could learn. And we don't know which one it's going to learn, which seems kind of bad.

Lucas Perry: Is it kind of like, there's the thing we actually care about? And then a lot of things that are like roughly correlated with it, which I think you've used the word for example before is like proxy objectives.

Rohin Shah: Yeah. So that is definitely one way that it could happen, where we ask it to make humans happy and it learns that when humans smile, they're usually happy and then learns the proxy objective of make humans smile and then it like, goes and tapes everyone's faces so that they're permanently smiling, that's a way that things could happen. But I think I don't even want to claim that's what ... maybe that's what happens. Maybe it just actually optimizes for human happiness. Maybe it learns to make paperclips for just some weird reason. I mean, not paperclips. Maybe it decides, this particular arrangement of atoms in this novel structure that we don't really have a word for is the thing that it wants for some reason. And all of these seem totally compatible with, we trained it to be good, to have good behavior in the situations that we cared about, because it might just be deceiving us until it has enough power to unilaterally do what it wants without worrying about us stopping it.

I do think that there is some sense of like, no, paperclip maximization is too weird. If you trained it to make humans happy, it would not learn to maximize paperclips. There's just like no path by which paperclips somehow become the one thing it cares about. I'm also sympathetic to, maybe it just doesn't care about anything to the extent of optimizing the entire universe to turn it into that sort of thing. I'm really just arguing for, we really don't know. Crazy shit could happen. I will bet on crazy shit will happen, unless we do a bunch of research and figure out how to make it so that crazy shit doesn't happen. I just don't really know what the crazy shit will be.

Lucas Perry: Do you think that that example of the agent in that virtual environment, you see that as a demonstration of the kinds of arbitrary goals that the agent could learn and that that space is really wide and deep and so it could be arbitrarily weird and we have no idea what kind of goal it could end up learning and then deceive us.

Rohin Shah: I think it is not that great evidence for that position. Mostly because I think it's reasonably likely that if you told somebody the setup of what you were planning to do, if you told an ML researcher or an RL, maybe specifically a deep RL researcher, the setup of that experiment and asked them to predict what would have happened, I think they probably would have, especially if you told them, "Hey, do you think maybe it'll just run to the right and jump up and down at the end?" I think they'd be like, "Yeah, that seems likely, not just plausible, but actually likely." That was definitely my reaction when I was first told about this result. I was like, oh yeah, of course that will happen.

In that case, I think we just do know... know is a strong word. ML researchers have good enough intuitions about those situations, I think, that it was predictable in advance. Though I don't actually know if anyone did predict it in advance. So that one, I don't think is all that supportive of, it learns an arbitrary goal. We had some notion that neural nets care a lot more about position and simple functions of the action, like always go right, rather than complex visual features like this yellow coin that you have to learn from pixels. I think people could have probably predicted that.

Lucas Perry: So we touched on definitions of AI alignment, and now we've been exploring your interest in inner alignment or I think the jargon is mesa optimizers.

Rohin Shah: They are different things.

Lucas Perry: They are different things. Could you explain how inner alignment and mesa optimizers are different?

Rohin Shah: Yeah. So a thing I maybe have not been doing as much as I should have is that, inner alignment is the claim that when the circumstances change, the agent generalizes catastrophically in some way, it behaves as though it's optimizing some other objective than the one that we actually want. So it's much more of a claim about the behavior rather than like the internal workings of the AI system that caused that behavior.

Mesa-optimization, at least under the definition of the 2019 paper, is talking specifically about AI systems that are executing an explicit optimization algorithm. So like the forward pass of a neural net is itself an optimization algorithm. We're not talking about gradient descent here. And then the metric that is being used in that, within the neural network optimization algorithm, is the inner objective or sorry, the mesa objective. So it's making a claim about how the AI system's cognition is structured. Whereas inner alignment more broadly is the AI behaves in a catastrophically generalizing way.

Lucas Perry: Could you explain what outer alignment is?

Rohin Shah: Sure. Inner alignment can be thought of as, suppose we got the training objective correct. Suppose the things that we're training the AI system to do on the situations that we give it as input, we're actually training it to do the right thing, then things can go wrong if it behaves differently in some new situations that we hadn't trained it on.

Outer alignment is basically when the reward function that you specify for training the AI system is itself, not what you actually wanted. For example, maybe you want your AI to be helpful to you or to tell you true things. But instead you have, you train your AI system to go find credible looking websites and tell you what the credible looking websites say. And it turns out that sometimes the credible looking websites don't actually tell you true things.

In that case, you're going to get an AI that tells you what credible looking websites say, rather than an AI that tells you what things are true. And that's in some sense, an outer alignment failure. You like even the feedback you were giving the AI system was pushing it away from telling you the truth and pushing it towards telling you what credible looking websites will say, which are correlated of course, but they're not the same. In general, if you like give me an AI system with some misalignment and you ask me, was this a failure of outer alignment or inner alignment? Mostly I'm like, that's a somewhat confused question, but one way that you can make it not be confused is you can say, all right, let's look at the inputs on which it was trained. Now, if ever on an input on which we train, we gave it some wrong feedback where we were like the AI lied to me and I gave it like plus a thousand reward. And you're like, okay, clearly that's outer alignment. We just gave it the wrong feedback in the first place.

Supposing that didn't happen. Then I think what you would want to ask is, okay, let me think about the situations in which the AI does something bad, what would I have given counterfactually as a reward? And this requires you to have some notion of a counterfactual. When you write down a programmatic reward function, the counterfactual is a bit more obvious. It's like, whatever that program would have output on that input. And so I think that's the usual setting in which outer alignment has been discussed. And it's pretty clear what it means there. But once you're like training from human feedback, it's not so clear what it means. What the human would have given as feedback on this situation that they've never seen before is often pretty ambiguous. If you define such a counterfactual, then I think I'm like, okay, you look at what feedback you would've given on the counterfactual. If that feedback was good, and actually would have led to the behavior that you wanted, then it's an inner alignment failure. If that counterfactual feedback was bad, not what you would have wanted, then it's an outer alignment failure.
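The diagnostic procedure described above can be written down as a small decision function. This is only a sketch of the reasoning as stated in the conversation; the function name, argument names, and returned labels are all hypothetical, and `None` is used as an assumed way to represent a counterfactual that has not been defined.

```python
from typing import Optional

def diagnose(wrong_feedback_in_training: bool,
             counterfactual_feedback_good: Optional[bool]) -> str:
    """Classify a misalignment failure along the lines described in the text.

    wrong_feedback_in_training: did any training input receive bad feedback,
        e.g. rewarding the AI for lying?
    counterfactual_feedback_good: on the situation where the AI misbehaved,
        would the feedback we *would* have given have pushed toward the
        behavior we wanted? None means no counterfactual was defined.
    """
    if wrong_feedback_in_training:
        # The feedback itself was wrong in the first place.
        return "outer alignment failure"
    if counterfactual_feedback_good is None:
        # With human feedback the counterfactual is often ambiguous.
        return "ambiguous"
    if counterfactual_feedback_good:
        # Feedback would have been fine; the system generalized badly.
        return "inner alignment failure"
    return "outer alignment failure"

print(diagnose(True, None))
print(diagnose(False, True))
print(diagnose(False, False))
print(diagnose(False, None))
```

With a programmatic reward function the second argument is easy to fill in, since it is whatever the program would have output; with human feedback it may simply have no well-defined value.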

Lucas Perry: If you're speaking to someone who was not familiar with AI alignment, for example, other people in the computer science community, but also policymakers or the general public, and you have all of these definitions of AI alignment that you've given like intent alignment and impact alignment. And then we have the inner and outer alignment problems. How would you capture the core problem of AI alignment? And would you say that inner or outer alignment is a bigger part of the problem?

Rohin Shah: I would probably focus on intent alignment for the reasons I have given before. It just seems like a more ... I want to focus attention away from the cases where the AI is trying to do the right thing, but makes a mistake, which would be a failure of impact alignment. But I don't think that is the biggest risk. I think a superintelligent AI system that is trying to do the right thing is extremely unlikely to lead to catastrophic outcomes, though it's certainly not impossible. Or at least less likely to lead to catastrophic outcomes than humans in the same position or something. So that would be my justification for intent alignment. I'm not sure that I would even talk very much about inner and outer alignment. I think I would probably just not focus on definitions and instead focus on examples. The core argument I would make would depend a lot on how AI systems are being built.

As I mentioned, inner alignment is a problem that, according to me, is primarily about learning systems. I don't think it really affects planning systems.

Lucas Perry: What is the difference between a learning system and a planning system?

Rohin Shah: A learning system, you give it examples of things it should do, how it should behave, and then it changes itself to do things more in that vein. A planning system takes a formally represented objective and then searches over possible hypothetical sequences of actions it could take in order to achieve that objective. And if you consider a system like that, you can try to make the inner alignment argument and it just won't work, which is why I say that the inner alignment problem is primarily about learning systems.
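A minimal sketch of the planning-system idea, under assumptions chosen only for illustration: a position on a number line, three hypothetical actions, and brute-force search over every action sequence up to a fixed horizon. The objective is an explicit function handed to the planner, which is what makes it "formally represented" in this toy.

```python
from itertools import product

# Hypothetical action set: each action shifts the position by a fixed amount.
ACTIONS = {"left": -1, "stay": 0, "right": 1}

def simulate(start, plan):
    """Predict the final position after following a sequence of actions."""
    pos = start
    for action in plan:
        pos += ACTIONS[action]
    return pos

def best_plan(start, objective, horizon):
    """Search every action sequence of the given length and return the one
    whose simulated outcome scores highest under the explicit objective."""
    candidates = product(ACTIONS, repeat=horizon)
    return max(candidates, key=lambda plan: objective(simulate(start, plan)))

def reach_three(pos):
    """Formally represented objective: be as close to position 3 as possible."""
    return -abs(pos - 3)

plan = best_plan(start=0, objective=reach_three, horizon=3)
print(plan, simulate(0, plan))
```

Nothing here is learned from examples, so there is no training distribution to generalize badly from. The remaining question for a system like this is whether the objective that was written down is the one actually wanted.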

Going back to the previous question. So the things I would talk about depend a lot on what sorts of AI systems we're building. If it were a planning system, I would basically just talk about outer alignment, where I would be like, what if the formally represented objective is not the thing that we actually care about? It seems really hard to formally represent the objectives that we want.

But if we're instead talking about deep learning systems that are being trained from human feedback, then I think I would focus on two problems. One is cases where the AI system knows something, but the human doesn't. And so the human gives bad feedback as a result. So for example, the AI system knows that COVID was caused by a lab leak. It's just like, got incontrovertible proof of this or something. And then, but we as humans are like, no, when it says COVID was caused by a lab leak, we're like, we don't know that, and we say no bad, don't say that. And then when it says, it is uncertain whether COVID is the result of a lab leak or if it just occurred via natural mutations, then we're like, yes, good, say more of that. And you're like, your AI system learns, okay, I shouldn't report true things. I should report things that humans believe or something.

And so that's one way in which you get AI systems that don't do what you want. And then the other way would be more of this inner alignment style story, where I would point out how, even if you do train it, even if all your feedback on the training data points is good. If the world changes in some way, the AI system might stop doing good things.

My go-to example, I mean, I gave the Gmail with emoji polls for meeting scheduling example, but another one, now that I'm on the topic of COVID is, if you imagine an AI system, if you imagine a meeting scheduling AI assistant again, that was trained pre-pandemic, and then the pandemic hits, and it's obviously never been trained on any data that was collected during such a global pandemic. And so when you then ask it to schedule a meeting with your friend, Alice, it just schedules drinks in a bar Sunday evening, even though clearly what you meant was a video call. And it knows that you meant a video call. It just learned the thing to do is to schedule outings with friends on Sunday nights at bars. Sunday night, I don't know why I'm saying Sunday night. Friday night.

Lucas Perry: Have you been drinking a lot on your Sunday nights?

Rohin Shah: No, not even in the slightest. I think truly the problem is I don't go to bars, so I don't have it cached in my head that people go to bars.

Lucas Perry: So how does this all lead to existential risk?

Rohin Shah: Well, the main argument is, one possibility is that your AI system just actually learns to ruthlessly maximize some objective that isn't the one that we want. Make paperclips is a stylized example to show what happens in that sort of situation. We're not actually claiming that it will specifically maximize paperclips, but an AI system that really ruthlessly is just trying to maximize paperclips, it is going to prevent humans from stopping it from doing so. And if it gets sufficiently intelligent and can take over the world at some point, it's just going to turn all of the resources in the world into paperclips, which may or may not include the resources in human bodies, but either way, it's going to include all the resources upon which we depend for survival.

Humans are definitely going, seem like they will definitely go extinct in that type of scenario. So again, not specific to paperclips. This is just, ruthless maximization of an objective tends not to leave humans alive. Both of these... Well, not both of the mechanisms. The inner alignment mechanism that I've been talking about is compatible with an AI system that ruthlessly maximizes an objective that we don't want.

It does not argue that it is probable, and I am not sure if I think it is probable, I think it is... But I think it is easily enough risk, that we should be really worrying about it, and trying to reduce it.

For the outer alignment style story, where the problem is that the AI may know information that you don't, and then you give it bad feedback. One thing is just, this can exacerbate, this can make it easier for an inner alignment style story to happen, where the AI learns to optimize an objective, that isn't what you actually wanted.

But even if you exclude something like that, Paul Christiano's written a few posts about what a failure, how a human extinction level failure, of this form could look like. It basically looks like all of your AI systems lying to you about how good the world is as the world becomes much, much worse. So for example, AI systems keep telling you that the things that you're buying are good and helping your lives, but actually they're not, and they're making them worse in some subtle way that you can't tell. You were told, all of the information that you're fed makes it seem like, there's no crime, police are doing a great job of catching it, but really, this is just manipulation of the information you're being fed, rather than actual amounts of crime where, in this case, maybe the crimes are being committed by AI systems, not even by humans.

In all of these cases, humans relied on some information sources to make decisions, the AI knew other information that the humans didn't, and the AI has learned, hey, my job is to manage the information sources that humans get, so that the humans are happy, because that's what they did during training. They gave good feedback in cases where the information sources said things were going well, even when things were not actually going well.

Lucas Perry: Right. It seems like if human beings are constantly giving feedback to AI systems, and the feedback is based on incorrect information and the AI's have more information, then they're going to learn something, that isn't aligned with, what we really want, or the truth.

Rohin Shah: Yeah, I do feel uncertain about the extent to which this leads to human extinction without... It leads to, I think you can pretty easily make the case that, it leads to an existential catastrophe, as defined by, I want to say it's Bostrom, which includes human extinction, but also a permanent curtailing of humanity's I forget the exact phrasing, but basically if humanity can't use... Yeah, exactly, that counts, and this totally falls into that category. I don't know if it actually leads to human extinction, without some additional sort of failure, that we might instead categorize as inner alignment failure.

Lucas Perry: Let's talk a little bit about probabilities, right? So if, you're talking to someone who has never encountered AI alignment before, you've given a lot of different real world examples and principle-based arguments for, why there are these different kinds of alignment risks, how would you explain the probability of existential risk, to someone who can come along for all of these principle-based arguments, and buy into the examples that you've given, but still thinks this seems kind of, far out there, like when am I ever going to see in the real world, a ruthlessly optimizing AI, that's capable of ending the world?

Rohin Shah: I think, first off, I'm super sympathetic to the 'this seems super out there' critique. I spent multiple years not really agreeing with AI safety for basically, well, not just that reason, but that was definitely one of the heuristics that I was using. I think one way I would justify this is, to some extent it has precedent already, in that fundamentally the arguments that I'm making... Well, especially the inner alignment one, is an argument about how AI systems will behave in new situations rather than the ones that we have already seen during training. We already know that AI systems behave crazily in these situations. The most famous example of this is adversarial examples, where you take an image classifier, and I don't actually remember what the canonical example is. I think it's a panda, and you change the pixel values by small amounts, such that the changes are imperceptible to the human eye. And then it's classified with, I think, 99.8% confidence as something else. My memory is saying airplane, but that might just be totally wrong. Anyway, the point is we have precedent for AI systems behaving really weirdly in situations they weren't trained on. You might object that this one is a little bit cheating, because there was an adversary involved, and, I mean, the real world does have adversaries, but still by default, you would expect the AI system to be more exposed to naturally occurring distributions. I think even there though, often you can just take an AI system that was trained on one distribution, give it inputs from a different distribution, and it's just like there's no sense to what's happening.
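The adversarial-example effect described above can be sketched on a toy model. This is only an illustration under stated assumptions, not the image classifier from the panda example: the "classifier" is a hypothetical logistic regression over 1,000 made-up "pixels", and the weights, inputs, and `epsilon` are invented for the demonstration. The perturbation is in the spirit of a gradient-sign attack: every input moves by the same small amount, in whichever direction pushes the score the wrong way.

```python
import math


def predict(weights, bias, x):
    """Probability of class 1 under a logistic-regression classifier."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-score))


def sign(value):
    """Return -1, 0, or 1 according to the sign of value."""
    return (value > 0) - (value < 0)


def perturb_toward_class_zero(weights, x, epsilon):
    """Shift each input by epsilon in the direction that lowers the score.

    For a linear score the gradient with respect to the input is the weight
    vector itself, so stepping against sign(weight) lowers the score as much
    as possible for a fixed per-coordinate budget.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input; every value here is illustrative only.
n_pixels = 1000
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n_pixels)]
bias = 0.0
original = [0.52 if i % 2 == 0 else 0.48 for i in range(n_pixels)]

epsilon = 0.05  # per-pixel change on a 0-to-1 scale
adversarial = perturb_toward_class_zero(weights, original, epsilon)

p_original = predict(weights, bias, original)
p_adversarial = predict(weights, bias, adversarial)
largest_change = max(abs(a - b) for a, b in zip(adversarial, original))

print(f"P(class 1) before: {p_original:.3f}")
print(f"P(class 1) after:  {p_adversarial:.3f}")
print(f"largest per-pixel change: {largest_change:.3f}")
```

Each individual input barely moves, but because the small shifts all line up with the weights, their effect on the score adds up across the 1,000 dimensions and the prediction flips.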

Usually when I'm asked to predict this, the actual prediction I give is, probability that we go extinct due to an intent alignment failure, and then depending on the situation I will either condition on... I will either make that unconditional, so that includes all of the things that people will do to try to prevent that from happening. Or, I make it conditional, on the long-termist community doesn't do anything, or vanishes or something. But even in that world, there's still... Everyone who's not a long-termist, who can still prevent that from happening, which I really do expect them to do, and then I think I give my cached answer, on both of those is like 5% and 10% respectively, which I think is probably the numbers I gave you. If I actually sat down and try to like come up with a probability, I would probably come up with something different this time, but I have not done that, and I'm way too anchored on those previous estimates, to be able to give you a new estimate this time. But, the higher number I'm giving now of, I don't know, 33%, 50%, 70%, this, this one's way more... I feel way more uncertain about it. Literally no one, tries to address these sorts of problems. It's just sort of, take a language model, fine tune it on human feedback, in a very obvious way, and they just deploy that, even if it's very obviously causing harm during training, they still deploy it.

What's the chance that leads to human extinction? I don't know, man, maybe 33%, maybe 70%. The 33% number you can get from this one-in-three argument that I was talking about. The second thing I was going to say is, I don't really like talking about probabilities very much, because of how utterly arbitrary the methods of generating them are.

I feel much better about the robustness of the conclusion that we don't know that this won't happen, and it is at least plausible that it does happen. I think that's pretty sufficient for justifying the work done on it. I will also argue pretty strongly against anyone who says we know that it will kill us all if we don't do anything. I don't think that's true. There are definitely smart people who do think that's true, if we operationalize "know" as greater than 90, 95% or something, and I disagree with them. I don't really know why though.

Lucas Perry: How would you respond to someone, who thinks that this sounds, like it's really far in the future?

Rohin Shah: Yeah. So this is specifically AGI is far in the future?

Lucas Perry: Yeah. Well, so the concern here seems to be about machines that are increasingly capable. When people look at machines that we have today, machine learning that we have today, sometimes they're not super impressed and think that general capabilities are very far off.

Rohin Shah: Yeah.

Lucas Perry: And so this stuff sounds like, future stuff.

Rohin Shah: Yeah. So, I think my response depends on what we're trying to get the person to do or something. Why do we care about what this person believes? If this person is considering whether or not to do AI research themselves or, AI safety research themselves, and they feel like they have a strong inside view model of why AI is not going to come soon, I'm kind of... I'm like, eh, that seems okay. I'm not that stoked about people forcing themselves to do research on a thing they don't actually believe. I don't really think that good research comes from doing that. If I put myself, for example, I am much more sold on AGI coming through neural networks than planning agents or things similar to it. If I had to put myself in the shoes of, all right, I'm now going to do AI safety research on planning agents, I'm just like, oh man, that seems like I'm going to do so much... My work is going to be orders of magnitude worse than the work I do on the neural-net case. So, in the case where this person is thinking about whether to do AI safety research, and they feel like they have strong inside view models for AGI not coming soon, I'm like, eh, maybe they should go do something else or possibly, they should engage with the arguments for AGI coming more quickly, if they haven't done that. But, if they have engaged with those arguments, thought about it all, concluded it's far away, and they can't even see a picture by which it comes soon... That's fine.

Conversely, if we're imagining that someone is saying, 'Oh, nobody should work on AI safety right now, because AGI is so far away,' one response you can have to that is, even if it's far away, it's still worthwhile to work on reducing risks, if they're as bad as extinction. Seems like we should be putting effort into that, even early on. But I think you can make a stronger argument there, which is there are just actually lots of people who are trying to build AGI right now. There's, at the minimum, DeepMind and OpenAI and they clearly... I should probably not make more comments about DeepMind, but OpenAI clearly doesn't believe... OpenAI clearly seems to think that AGI is coming somewhat soon. I think you can infer, from everything you see about DeepMind, that they don't believe that AGI is 200 years away. I think it is insane overconfidence in your own views to be thinking that you know better than all of these people, such that you wouldn't even assign, like 5% or something, to AGI coming soon enough that work on AI safety matters.

Yeah. So there, I think I would appeal to... Let other people do the work. You are not, you don't have to do the work yourself. There's just no reason for you to be opposing the other people, either on epistemic grounds or also on just, kind of a waste of your own time, that's the second kind of person. A third kind of person might be like somebody in policy. From my impression of policy, is that there is this thing, where early moves are relatively irreversible, or something like that. Things get entrenched pretty quickly, such that it makes sense to wait for... It often makes sense to wait for a consensus before acting, and I don't think that there is currently consensus of AGI coming soon. I don't feel particularly confident enough in my views to say, we should really convince the policy people, to override this general heuristic of waiting for consensus, and get them to act now.

Yeah. Anyway, those are all meta-level considerations. There's also the object-level question of, is AGI coming soon? For that, I would say, I think the most likely, the best story for that I know of is, you take neural nets, as you scale them up, you increase the size of the datasets that they're trained on. You increase the diversity of the datasets that they're trained on, and they learn more and more general heuristics, for doing good things. Eventually, these general, these heuristics are general enough that they're as good as human cognition. Implicitly, I am claiming that human cognition, is basically a bag of general heuristics. There is this report from Ajeya Cotra, about AGI timelines using biological anchors. I wrote, even my summary of it was 3000 words, or something like that, so I don't know that I can really give an adequate summary of it here, but it models... The basic premise, is to model how quickly neural nets will grow, and at what point they will match what we would expect to be approximately, the same rough size as the human brain. I think it even includes a small penalty to neural nets on the basis that evolution probably did a better job than we did. It basically comes up with a target for, neural nets of this size, trained in Compute Optimal ways, will probably be, roughly human level.

It has a distribution over this, to be more accurate, and then it predicts, based on existing trends. Well, not just existing trends, existing trends and sensible extrapolation, predicts when neural nets might reach that level. It ends up concluding, somewhere in the range... Oh, let me see, I think its 50% confidence interval would be something like 2035 to 2070, 2080, maybe something like that? I am really just like, I'm imagining a graph in my head, and trying to calculate the area under it, so that is very much not a reliable interval, but it should give you a general sense of what the report concludes.

Lucas Perry: So that's 2030 to 2080?

Rohin Shah: I think it's slightly narrower than that, but yes, roughly, roughly that.

Lucas Perry: That's pretty soon.

Rohin Shah: Yep. I think that's, on the object level that you'd just got to read the report, and see whether or not you buy it.

Lucas Perry: That's most likely in our lifetimes, if we live to the average age.

Rohin Shah: Yep. So that was a 50% interval, meaning it's the 25th to the 75th percentile. I think actually the 25th percentile was not as early as 2030. It was probably 2040.

Lucas Perry: So, if I've heard everything, in this podcast, everything that you've said so far, and I'm still kind of like, okay, there's a lot here and it sounds convincing or something and this seems important, but I'm not so sure about this, or that we should do anything. What is... Because, it seems like there's a lot of people like that. I'm curious what it is, that you would say to someone like that.

Rohin Shah: I think... I don't know. I probably wouldn't try to say something general to them. I feel like I would need to know more about the person, people have pretty different idiosyncratic reasons, for having that sort of reaction. Okay, I would at least say, that I think that they are wrong, to be having that sort of belief or reaction.

But, if I wanted to convince them of that point, presumably I would have to say something more than just, I think you are wrong. I think the specific thing I would have to say, which would be pretty different for different people.

Lucas Perry: That's a good point.

Rohin Shah: I would at least make an appeal to the meta-level heuristic of don't try to regulate a small group of... There are a few hundred researchers at most, doing things that they think will help the world, and that you don't think will hurt the world. There are just better things for you to do with your time. Doesn't seem like they're harming you. Some people will think that there is harm being caused by them. I would have to address that, with them specifically, but I think most people do not, who have this reaction, don't believe that.

Lucas Perry: So, so we've gone over a lot of the traditional arguments for AI, as a potential existential risk. Is there anything else that you would like to add there, or any of the arguments that we missed, that you would like to include?

Rohin Shah: As a representative of the community as a whole, there are lots of other arguments that people like to make, for AI being a potential extinction risk. So, some things are, maybe AI just accelerates the rate at which we make progress, and we can't increase our wisdom alongside, and as a result, we get a lot of destructive technologies and can't keep them under control. Or, we don't do enough philosophy, in order to figure out what we actually care about, and what's good to do in the world, and as a result, we start optimizing for things that are morally bad or other things in this vein. Talk about the risk of AI being misused by bad actors. So there's... Well actually I'll introduce a trichotomy that, I don't remember exactly who wrote this article. But it goes, Accidents, Misuse and Structural Risks. So accidents are, both alignment and the things like; we don't keep up, we don't have enough wisdom to cope with the impact of AI. That one's arguable, whether it's an accident, or misuse or structural, and we don't do enough philosophy. So those are, vaguely accidental, those are accidents.

Misuse is some bad actor. Some terrorist, say, gets a powerful AI system and does something really bad, blows up the world somehow. Structural risks are things like: various parts of the economy use AI to get more profit, to accelerate their production of goods and so on. At some point we have this giant economy that's just making a lot of goods, but it can become decoupled from things that are actually useful for humans, and we just have this huge multi-agent system, where goods are being produced, money's floating around. We don't really understand all of it, but somehow humans get left behind, and there, it's kind of an accident, but not in the traditional sense. It's not that a single AI system went and did something bad. It's more like the entire structure of the way that the AI systems and the humans related to each other was such that it ended up leading to the permanent disempowerment of humans. Now that I say it, I think the 'we didn't have enough wisdom' argument for risk is probably also in this category.

Lucas Perry: Which of these categories are you most worried about?

Rohin Shah: I don't know. I think, it is probably not misuse, but I vary, on accidents versus structural risks, mostly because, I just don't feel like I have a good understanding of structural risks. Maybe, most days I think structural risks are more likely to cause bad outcomes, extinction. The obvious next question is, why am I working on alignment, and not structural risks? The answer there, is that it seems to me like alignment has one, or perhaps two core problems that are leading to the major risk. Whereas structural risks... And so you could hope to have, one or two solutions that address those main problems and that's it, that's all you need. Whereas with structural risks, I would be surprised if it was just, there was just one or two solutions that just got rid of structural risk. It seems much more like, you have to have a different solution for each of the structural risks. So, it seems like, the amount that you can reduce the risk by, is higher in alignment than in structural risks. That's not the only reason why I work in alignment, I just also have a much better personal fit with alignment work. But, I do also think that alignment work, you have more opportunity to reduce the risks, than in structural risks, on the current margin.

Lucas Perry: Is there a name for those one or two core problems in alignment, that you can come up with solutions for?

Rohin Shah: I mostly just mean like, possibly, we've been talking about outer and inner alignment, and in the neural net case, I talked about the problem where you reward the AI system for doing bad things, because there was an information asymmetry, and then the other one was like the AI system generalizes catastrophically to new situations. Arguably those are just the two things, but I think it's not even that, it's more... Fundamentally the story, the causal chain in the accidents case, was the AI was trying to do something bad, or something that we didn't want rather, and then that was bad.

Whereas in the structural risks case, there isn't a single causal story. It's this very vague general notion of the humans and AI have interacted in ways that led to an X-risk. Then, if you drill down into any given story, or if you drilled down into five stories and then you're like, what's common across these five stories? Not much, other than that there was AI, and there were humans, and they interacted, and I wouldn't say that was true, if I had five stories about alignment failure.

Lucas Perry: So, I'd like to take an overview, a bird's-eye view of AI alignment in 2021. Last time we spoke was in 2020. How has AI alignment, as a field of research, changed in the last year?

Rohin Shah: I think I'm going to naturally include a bunch of things from 2020 as well. It's not a very sharp division in my mind, especially because I think the biggest trend is just more focus on large language models, which I think was a trend that started late 2020 probably... Certainly, the GPT-3 paper was, I want to say early 2020, but I don't think it immediately caused there to be more work. So, maybe late 2020 is about right. But, you just see a lot more Alignment Forum posts and papers that are grappling with, what are the alignment problems that could arise with large language models? How might you fix them?

There was this paper out of Stanford, which isn't, I wouldn't have said this was from the AI safety community. But it gives the name foundation models to these sorts of things. So they generalize it beyond just language and they think it might... And already we've seen some generalization beyond language, like CLIP and DALL-E are working on image inputs, but they also extend it to robotics and so on. And their point is, we're now more in the realm of, you train one large model on a giant pile of data that you happen to have, that you don't really have any labels for, but you can use a self-supervised learning objective in order to learn from them. And then you get this model that has a lot of knowledge, but no goal built in, and then you do something like prompt engineering or fine tuning in order to actually get it to do the task that you want. And so that's a new paradigm for constructing AI systems that we didn't have before. And there have just been a bunch of posts that grapple with what alignment looks like in this case. I don't think I have a nice pithy summary, unfortunately, of what all of us... What the upshot is, but that's the thing people have been thinking about, a lot more.

Lucas Perry: Why do you think that looking at large scale language models has become a thing?

Rohin Shah: Oh, I think primarily just because GPT-3 demonstrated how powerful they could be. You just see, this is not specific to the AI safety community, even in the... If anything, this shift that I'm talking about is... It's probably not more pronounced in the ML community, but it's also there in the ML community where there are just tons of papers about prompt engineering and fine tuning out of regular ML labs. Just, I think is... GPT-3 showed that it could be done, and that this was a reasonable way to get actual economic value out of these systems. And so people started caring about them more.

Lucas Perry: So one thing that you mentioned to me that was significant in the last year, was foundation models. So could you explain what foundation models are?

Rohin Shah: Yeah. So a foundation model, the general recipe for it, is you take some very... Not generic, exactly. Flexible input space like pixels or any English language, any string of words in the English language, you collect a giant data set without any particular labels, just lots of examples of that sort of data in the wild. So in the case of pixels, you just find a bunch of images from image-sharing websites or something. I don't actually know where they got their images from. For text, it's even easier. The internet is filled with text. You just get a bunch of it. And then you train your AI, you train a very large neural network with some proxy objective on that data set, that encourages it to learn how to model that data set. So in the case of language models, the... There are a bunch of possible objectives. The most famous one was the one that GPT-3 used, which is just, given the first N words of the sentence, predict the word N plus one. And so it just... Initially it starts learning, E's are the most common... Well, actually, because of the specific way that the input space in GPT-3 works, it doesn't exactly do this, but you could imagine that if it was just modeling characters, it would first learn that E's are the most common letter in the alphabet. L's are more common. Q's and Z's don't come up that often. Like it starts outputting letter distributions that at least look vaguely more like what English would look like. Then it starts learning what the spelling of individual words are. Then it starts learning what the grammar rules are. Just, these are all things that help it better predict what the next word is going to be, or, well, the next character, in this particular instantiation.

And it turns out that when you have millions of parameters in your neural network, then you can... I don't actually know if this number is right, but probably, I would expect that with millions of parameters in your neural network, you can learn spellings of words and rules of grammar, such that you're mostly outputting, for the most part, grammatically correct sentences, but they don't necessarily mean very much.

And then when you get to the billions of parameters range, at that point, the millions of parameters are already getting you grammar. So like, what should it use all these extra parameters for, now? Then it starts learning things like George... Well, probably already even the millions of parameters probably learned that George tends to be followed by Washington. But it can start learning things like that. And in that sense, can be said to know that there is an entity, at least, named George Washington. And so on. It might start knowing that rain is wet, and in context where something has been rained on, and then later we're asked to describe that thing, it will say it's wet or slippery or something like that. And so it starts... It basically just, in order to predict words better, it keeps getting more and more "knowledge" about the domain.

So anyway, a foundation model, expressive input space, giant pile of data, very big neural net, learns to model that domain very well, which involves getting a bunch of "knowledge" about that domain.
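As a rough illustration of the "predict the next symbol" objective described above, here is a toy character-level sketch. It is an assumption-laden stand-in, not how GPT-3 works: it uses simple counts rather than a neural network, it models characters rather than GPT-3's actual input units, and the tiny corpus is made up. It compares a model that only knows letter frequencies with one that also conditions on the previous character, and scores both by average negative log-likelihood on the same text.

```python
import math
from collections import Counter, defaultdict


def train_unigram(text):
    """Estimate P(char) from raw character frequencies."""
    counts = Counter(text)
    total = sum(counts.values())
    return {ch: c / total for ch, c in counts.items()}


def train_bigram(text):
    """Estimate P(next char | previous char) from adjacent pairs."""
    follow = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        follow[prev][nxt] += 1
    return {
        prev: {ch: c / sum(cnt.values()) for ch, c in cnt.items()}
        for prev, cnt in follow.items()
    }


def unigram_loss(model, text):
    """Average negative log-likelihood when ignoring all context."""
    targets = text[1:]
    return -sum(math.log(model[ch]) for ch in targets) / len(targets)


def bigram_loss(model, text):
    """Average negative log-likelihood given the previous character."""
    pairs = list(zip(text, text[1:]))
    return -sum(math.log(model[p][n]) for p, n in pairs) / len(pairs)


# Made-up corpus, for illustration only.
corpus = (
    "the rain fell and the street was wet. "
    "the rain made the road wet and slippery. "
    "george washington walked in the rain."
)

letters_only = train_unigram(corpus)
with_context = train_bigram(corpus)

loss_letters = unigram_loss(letters_only, corpus)
loss_context = bigram_loss(with_context, corpus)

print(f"letter frequencies only: {loss_letters:.3f} nats per character")
print(f"with previous character: {loss_context:.3f} nats per character")
```

Both losses are measured on the training text itself, so this only shows that extra context lets the model fit the data better; it says nothing about generalization. The progression in the conversation (letter frequencies, then spelling, then grammar, then facts) corresponds to models that can exploit ever longer and richer context than the single previous character used here.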

Lucas Perry: What's the difference between "knowledge" and knowledge?

Rohin Shah: I feel like you are the philosopher here, more than me. Do you know what knowledge without air quotes is?

Lucas Perry: No, I don't. But I don't mean to derail it, but yeah. So it gets "knowledge."

Rohin Shah: Yeah. I mostly put the air quotes around knowledge because we don't really have a satisfying account of what knowledge is. And if I don't put air quotes around knowledge, I get lots of people angrily saying that AI systems don't have knowledge yet.

Lucas Perry: Oh, yeah. That makes sense.

Rohin Shah: And when I put the air quotes around it, then they understand that I just mean that it has the ability to make predictions that are conditional on this particular fact about the world, whether or not it actually knows that fact about that world.

Lucas Perry: Okay.

Rohin Shah: But it knows it well enough to make predictions. Or it contains the knowledge well enough to make predictions. It can make predictions. That's the point. I'm being maybe a bit too harsh, here. I also put air quotes around knowledge because I don't actually know what knowledge is. It's not just a defense strategy. Though, that is definitely part of it.

So yeah. Foundation models, basically are a way to just get all of this "knowledge" into an AI system, such that you can then do prompting and fine tuning and so on. And those, with a very small amount of data, relatively speaking, are able to get very good performance. Like in the case of GPT-3, you can like give it two or three examples of a task and it can start performing that task, if the task is relatively simple. Whereas if you wanted to train a model from scratch to perform that task, you would need thousands of examples, often.
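The "give it two or three examples of a task" idea can be sketched as plain string construction. This is a minimal sketch under assumptions: the function name `build_few_shot_prompt`, the `Input`/`Output` labels, and the translation examples are all hypothetical, and no particular model or API is implied. The resulting string would be passed to whatever text-completion model is in use, which is expected to continue after the final label.

```python
def build_few_shot_prompt(examples, query, input_label="Input", output_label="Output"):
    """Format worked examples followed by an unanswered query.

    examples: list of (input_text, output_text) pairs demonstrating the task.
    query: the new input the model should complete.
    """
    blocks = [
        f"{input_label}: {inp}\n{output_label}: {out}"
        for inp, out in examples
    ]
    # The final block leaves the output empty for the model to fill in.
    blocks.append(f"{input_label}: {query}\n{output_label}:")
    return "\n\n".join(blocks)


# Hypothetical task: English-to-French word translation.
examples = [
    ("cheese", "fromage"),
    ("house", "maison"),
    ("cat", "chat"),
]
prompt = build_few_shot_prompt(examples, "dog")
print(prompt)
```

No parameters of the model change here; the examples only condition its next-word predictions, which is what distinguishes prompting from fine tuning.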

Lucas Perry: So how has this been significant for AI alignment?

Rohin Shah: I think it has mostly provided an actual pathway by which we can get to AGI. Or there's more like a concrete story and path that leads to AGI, eventually. And so then we can take all of these abstract arguments that we were making before, and then try to instantiate them in the case of this concrete pathway, and see whether or not they still make sense. I'm not sure if at this point I'm imagining what I would like to do, versus what actually happened. I would need to actually go and look through the Alignment Newsletter database and see what people actually wrote about the subject. But I think there was some discussion of GPT-3 and the extent to which it is or isn't a mesa-optimizer.

Yeah. That's at least one thing that I remember happening. Then there's been a lot of papers that are just like, "Here is how you can train a foundation model like GPT-3 to do the sort of thing that you want." So there's learning to summarize from human feedback, which just took GPT-3 and taught it how to, or fine tuned it in order to get it to summarize news articles, which is an example of a task that you might want an AI system to do.

And then the same team at OpenAI just recently released a paper that actually summarized entire books by using a recursive decomposition strategy. In some sense, a lot of the work we've been doing in the past, in AI alignment was like how do we get AI systems to perform fuzzy tasks for which we don't have a reward function? And now we have systems that could do these fuzzy tasks in the sense that they "have the knowledge," but don't actually use that knowledge the way that we would want them. And then we have to figure out how to get them to do that. And then we can use all these techniques like imitation learning, and learning from comparisons and preferences that we've been developing.

Lucas Perry: Why don't we know that AI systems won't totally kill us all?

Rohin Shah: The arguments for AI risk usually depend on having an AI system that's ruthlessly maximizing an objective in every new situation it encounters. So for example, the paperclip maximizer, once it's built 10 paperclip factories, it doesn't retire and say, "Yep, that's enough paperclips." It just continues turning entire planets into paperclips. Or if you consider the goal of, make a hundred paperclips, it turns all of the planets into computers to make sure it is as confident as possible that it has made a hundred paperclips. These are examples of, I'm going to call it "ruthlessly maximizing" an objective. And there's some sense in which this is weird and humans don't behave in that way. And I think there's some amount of, basically I am unsure whether or not we should actually expect AIs to have such ruthlessly maximized objectives. I don't really see the argument for why that should happen. And I think, as a particularly strong piece of evidence against this, I would note that humans don't seem to have these sorts of objectives.

It's not obviously true. There are probably some longtermists who really do want to tile the universe with hedonium, which seems like a pretty ruthlessly maximizing objective to me. But I think even then, that's the exception rather than the rule. So if humans don't ruthlessly maximize objectives and humans were built by a similar process as is building neural networks, why do we expect the neural networks to have objectives that they ruthlessly maximize?

You can also... I've phrased this in a way where it's an argument against AI risk. You can also phrase it in a way in which it's an argument for AI risk, where you would say, well, let's flip that on its head and say like, "Well, yes, you brought up the example of humans. Well, the process that created humans is trying to maximize, or it is an optimization process, leading to increased reproductive fitness. But then humans do things like wear condoms, which does not seem great for reproductive fitness, generally speaking, especially for the people who are definitely out there who decide that they're just never going to reproduce." So in that sense, humans are clearly having a large impact on the world and are doing so for objectives that are not what evolution was naively optimizing.

And so, similarly, if we train AI systems in the same way, maybe they too will have a large impact on the world, but not for what the humans were naively training the system to optimize.

Lucas Perry: We can't let them know about fun.

Rohin Shah: Yeah. Terrible. Well, I don't want to be-

Lucas Perry: The whole human AI alignment project will run off the rails.

Rohin Shah: Yeah. But anyway, I think these things are a lot more conceptually tricky than the well-polished arguments that one reads, will make it seem. But especially this point about, it's not obvious that AI systems will get ruthlessly maximizing objectives. That really does give me quite a bit of pause, in how good the AI risk arguments are. I still think it is clearly correct to be working on AI risk, because we don't want to be in the situation where we can't make an argument for why AI is risky. We want to be in the situation where we can make an argument for why the AI is not risky. And I don't think we have that situation yet. Even if you completely buy the, we don't know if there's going to be ruthlessly maximizing objectives, argument, that puts you in the epistemic state where we're like, "Well, I don't see an iron clad argument that says that AIs will kill us all." And that's sort of like saying... I don't know. "Well, I don't have an iron clad argument that touching this pan that's on this lit stove, will burn me, because maybe someone just put the pan on the stove a few seconds ago." But it would still be a bad idea to go and do that. What you really want, is a positive argument for why touching the pan is not going to burn you, or analogously, why building the AGI is not going to kill you. And I don't think we have any such positive argument, at the moment.

Lucas Perry: Part of this conversation's interesting because I'm surprised how uncertain you are about AI as an existential risk.

Rohin Shah: Yeah. It's possible I've become slightly more uncertain about it in the last year or two. I don't think I was saying things that were quite this uncertain before then, but I think I have generally been... We have plausibility arguments. We do not have like, this is probable, arguments. Or back in 2017 or 2018 when I was young and naive.

Lucas Perry: Okay.

Rohin Shah: This makes more sense.

Lucas Perry: We're no longer young and naive.

Rohin Shah: Well, okay. I entered the field of AI alignment. I read my first AI alignment paper in September of 2017. So it actually does make sense. At that time, I thought we had more confidence of some sort, but since posting the value learning sequence, I've generally been more uncertain about AI risk arguments. I don't talk about it all that much, because as I said, the decision is still very clear. The decision is still, work on this problem. Figure out how to get a positive argument that the AI is not going to kill us. And ideally, a positive argument that the AI does good things for humanity. I don't know, man. Most things in life are pretty uncertain. Most things in the future are even way, way, way more uncertain. I don't feel like you should generally be all that confident about technologies that you think are decades out.

Lucas Perry: Feels a little bit like those images of the people in the fifties drawing what the future would look like, and the images are ridiculous.

Rohin Shah: Yep. Yeah. I've been recently watching Star Wars. Now, obviously Star Wars is not actually supposed to be a prediction of the future, but it's really quite entertaining to actually just think about all the ways in which Star Wars would be totally inaccurate. And this is before we'd even invented space travel. And just... Robots talking to each other, using sound. Why would they do that?

Lucas Perry: Industry today wouldn't make machines that speak by vibrating air. They would just send each other signals electromagnetically. So how much of the alignment and safety problems in AI do you think will be solved by industry? The same way that computer-to-computer communication is solved by industry, and is not what Star Wars thought it would be. Would the DeepMind AI safety lab exist, if DeepMind didn't think that AI alignment and AI safety were serious and important? I don't know if the lab is purely aligned with the commercial interests of DeepMind itself, or if it's also kind of seen as a good-for-the-world thing. I bring it up because I like how Andrew Critch talks about it in his ARCHES paper.

Rohin Shah: Yep. So, Critch is, I think, of the opinion that both preference learning and robustness are problems that will be solved by industry. I think he includes robustness in that. And I certainly agree to the extent that you're like, "Yes, companies will do things like learning from human preference." Totally. They're going to do that. Whether they're going to be proactive enough to notice the kinds of failures I mentioned, I don't know. It doesn't seem nearly as obvious to me that they will be, without dedicated teams that are specifically meant for looking for hidden failures with the knowledge that these are really important to get, because they could have very bad long term consequences.

AI systems could increase the strength of, and accelerate various multi-agent systems and processes that, when accelerated, could lead to bad outcomes. So for example, a great example of a destructive multi-agent effect, is war. War is a thing that... Well, wars have been getting more destructive over time, or at least the weapons in them have been getting more destructive. Probably the death tolls have also been getting higher, but I'm not as sure about that. And you could imagine that if AI systems continue to increase, if they increase the destructiveness of weapons even more, wars might then become an existential risk. That's a way in which you can get a structural risk from a multi-agent system. And the example in which the economy just sort of becomes much, much, much bigger, but becomes decoupled from things that humans want, is another example of how a multi-agent process can sort of go haywire, especially with the addition of powerful AI systems. I think that's also a canonical scenario that Critch would think about. Yeah.

Really, I would say that ARCHES, in my head, is categorized as a technical paper about structural risks.

Lucas Perry: Do you think about what beneficial futures look like? You spoke a little bit about wisdom earlier, and I'm curious what good futures with AI look like to you.

Rohin Shah: Yeah, I admit I don't actually think about this very much. Because my research is focused on more abstract problems, I tend to focus on abstract considerations, and the main abstract consideration from the perspective of the good future, is, well, once we get to singularity levels of powerful AI systems, anything I say now, there's going to be something way better that AI systems are going to enable. So then, as a result, I don't think very much about it. But that's mostly a thing about me not being in a communications role.

Lucas Perry: You work a lot on this risk. So you must think that humanity existing in the future matters?

Rohin Shah: I do like humans. Humans are pretty great. I count many of them amongst my friends. I've never been all that good at the transhumanist, look to the future and see the grand potential of humanity, sorts of visions. But when other people say them or give them, I feel a lot of kinship with them. The ones that are all about humanity's potential to discover new forms of art and music, reach new levels of science, understand the world better than it's ever been understood before, fall in love a hundred times, learn all of the things that there are to know. Actually, you won't be able to do that one, probably, but anyway. Learn way more of the things that there are to know than you have right now. Just a lot of that resonates with me. And that's probably a very intellectual-centric view of the future. I feel like I'd be interested in hearing the view of the future that's like, "Ah yes, we have the best video games and the best TV shows. And we're the best couch potatoes that ever were." Or also, there's just insane new sports that you have to spend lots of time and grueling training for, but it's all worth it when you shoot the best, get a perfect score on the best dunk that's ever been done in basketball, or whatever. I recently watched a competition... Apparently there are competitions in basketball of just aesthetic dunks. It's cool. I enjoyed it. Anyway. Yeah. It feels like there's just so many other communities that could also have their own visions of the future. And I feel like I'd feel a lot of kinship with many of those, too. And I'm like, man, let's just have all the humans continue to do the things that they want. It seems great.

Lucas Perry: One thing that you mentioned was that you deal with abstract problems. And so what a good future looks like to you, it seems like it's an abstract problem that later, the good things that AI can give us, are better than the good things that we can think of, right now. Is that a fair summary?

Rohin Shah: That seems right. Yeah.

Lucas Perry: Right. So there's this view, and this comes from maybe Steven Pinker or someone else. I'm not sure. Or maybe Ray Kurzweil, I don't know... Where if you give a caveman a genie, or an AI, they'll ask for maybe a bigger cave, and, "I would like there to be more hunks of meat. And I would like my pelt for my bed to be a little bit bigger." Go ahead.

Rohin Shah: Okay. I think I see the issue. So I actually don't agree with your summary of the thing that I said.

Lucas Perry: Oh, okay.

Rohin Shah: Your rephrasing was that we ask the AI what good things there are to do, or something like that. And that might have been what I said, but what I actually meant was that with powerful AI systems, the world will just be very different. And one of the ways in which it will be different is that we can get advice from AIs on what to do. And certainly, that's an important one, but also, there will just be incredible new technologies that we don't know about. New realms of science to explore, new concepts that we don't even have names for right now. And one that seems particularly interesting to me is just entirely new senses. Human vision is just incredibly complicated, but I can just look around the room and identify all the objects with basically no conscious thought. What would it be like to understand DNA at that level? AlphaFold probably understands DNA at maybe not quite that level, but something like it.

I don't know, man. There's just like these things that I'm like... I thought of the DNA one because of AlphaFold. Before AlphaFold, would I have thought of it? Probably not. I don't know. Maybe. Kurzweil has written a little bit about things like this. But it feels like there will just be far more opportunities. And then also, we can get advice from AIs, but that's probably... Actually- and that's important, but I think less than... There are far more opportunities, that I am definitely not going to be able to think of today.

Lucas Perry: Do you think that it's dissimilar from the caveman wishing for more caveman things?

Rohin Shah: Yeah. I feel like in the caveman story... It's possible that the caveman does this, but I feel like the thing the caveman should be doing is something like, "Give me better ways to... give me better food," or something, and then you get fire to cook things, or something.

Lucas Perry: Yeah.

Rohin Shah: The things that he asks for, should involve technology as a solution. He should get technology as a solution, to learn more, and be able to do more things as a result of having that technology. In this hypothetical, the caveman should reasonably quickly, become similar to modern humans. I don't know what reasonably quickly means here, but it should be much more... You get access to more and more technologies, rather than you get a bigger cave and then you're like, "I have no more wishes anymore." If I got a bigger house, would I stop having wishes? That seems super unlikely. That's a strawman argument, sorry. But still, I do feel like there's this... A meaningful sense in which, getting new technology leads to just genuinely new circumstances, which leads to more opportunities, which leads to probably more technology, and so on, and at some point, this has to stop. There are limits to what is possible. One assumes there are limits to what is possible in the universe. But I think, once we get to talking about, we're at those limits, then at that point, it just seems irresponsible to speculate. It's just so wildly out of the range of things that we know, the concept of a person is probably wrong, at that point.

Lucas Perry: The what of a person is probably wrong at that point?

Rohin Shah: The concept of a person.

Lucas Perry: Oh.

Rohin Shah: I'd be like, "Is there an entity, that is Rohin at that time?" Not likely. Less than 50%.

Lucas Perry: We'll edit in just fractals flying through your video, at this part of the interview. So in my example, I think it's just because I think of cavemen as not knowing how to ask for new technology, but we want to be able to ask for new technology. Part of what this brings up for me, is this very classic part of AI alignment, and I'm curious how you feel like it fits into the problem.

But, we would also like AI systems to help us imagine beneficial futures potentially, or to know what is good or what it is that we want. So, in asking for new technology, it knows that fire is part of the good, that we don't know how to necessarily ask for directly. How do you view AI alignment, in terms of itself aiding in the creation of beneficial futures, and knowing of a good that is beyond the good, that humanity can grasp?

Rohin Shah: I think I more reject the premise of the question, where I'd be like, there is no good beyond that which humanity can grasp. This is somewhat of an anti-realist position.

Lucas Perry: You mean, moral anti-realist, just for the-

Rohin Shah: Yes. Sorry, I should have said that more clearly. Yeah. Somewhat of a moral anti-realist position. There is no good, other than that which humans can grasp. Within that 'can grasp', you can have humans thinking for a very long time, you could have them with extra... You can make them more intelligent; part of the technologies you get from AI systems will presumably let you do that. Maybe you can, I guess setting aside questions of philosophical identity, upload the humans such that they could run on a computer, and run much faster, have software upgrades to be... To the extent that that's philosophically acceptable. There's a lot you can do to help humans grasp more. Ultimately, yes, the closure of all these improvements, where you get to with all of that, that is just the thing that we want. Yes, you could have a theory that there is something even better, and even more out there, that humans can never access by themselves. That just seems like a weird hypothesis to have, and I don't know why you would have it. But in the world where that hypothesis is true, and if I condition on that hypothesis being true, I don't see why we should expect that AI systems could access that further truth any better than we can, if it's out of the closure of what we can achieve, even with additional intelligence and such. There's no other advantage that AI systems have over us.

Lucas Perry: So, is what you're arguing, that with human augmentation and help to human beings, so like with uploads or with expanding the intelligence and capabilities of humans, that humans have access to the entire space of what counts as good.