Planning for Existential Hope

It may seem like we at FLI spend a lot of our time worrying about existential risks, but it’s helpful to remember that we don’t do this because we think the world will end tragically: We address issues relating to existential risks because we’re so confident that if we can overcome these threats, we can achieve a future greater than any of us can imagine!

As we end 2018 and look toward 2019, we want to focus on a message of hope, a message of existential hope.

But first, a very quick look back…

We had a great year, and we’re pleased with all we were able to accomplish. Some of our bigger projects and successes include: the Lethal Autonomous Weapons Pledge; a new round of AI safety grants focusing on the beneficial development of AGI; the California State Legislature’s resolution in support of the Asilomar AI Principles; and our second Future of Life Award, which was presented posthumously to Stanislav Petrov and his family.

As we now look ahead and strive to work toward a better future, we, as a society, must first determine what that collective future should be. At FLI, we’re looking forward to working with global partners and thought leaders as we consider what “better futures” might look like and how we can work together to build them.

As FLI President Max Tegmark says, “There’s been so much focus on just making our tech powerful right now, because that makes money, and it’s cool, that we’ve neglected the steering and the destination quite a bit. And in fact, I see that as the core goal of the Future of Life Institute: help bring back focus [on] the steering of our technology and the destination.”

A recent Gizmodo article on why we need more utopian fiction also summed up the argument nicely: “Now, as we face a future filled with corruption, yet more conflict, and the looming doom of global warming, imagining our happy ending may be the first step to achieving it.”

Fortunately, there are already quite a few people who have begun considering how a conflicted world of 7.7 billion can unite to create a future that works for all of us. For the FLI podcast in December, we spoke with six of them about how we can start moving toward that better future.

The existential hope podcast includes interviews with FLI co-founders Max Tegmark and Anthony Aguirre, as well as existentialhope.com founder Allison Duettmann; Josh Clark, who hosts The End of the World with Josh Clark; futurist and researcher Anders Sandberg; and tech enthusiast and entrepreneur Gaia Dempsey. You can listen to the full podcast here, but we also wanted to call attention to some of their comments that most spoke to the idea of steering toward a better future:

Max Tegmark on the far future and the near future:

When I look really far into the future, I also look really far into space and I see this vast cosmos, which is 13.8 billion years old. And most of it is, despite what the UFO enthusiasts say, actually looking pretty dead and wasted opportunities. And if we can help life flourish not just on earth, but ultimately throughout much of this amazing universe, making it come alive and teeming with these fascinating and inspiring developments, that makes me feel really, really inspired.

For 2019 I’m looking forward to more constructive collaboration on many aspects of this quest for a good future for everyone on earth.

Gaia Dempsey on how we can use a technique called worldbuilding to help envision a better future for everyone and get more voices involved in the discussion:

Worldbuilding is a really fascinating set of techniques. It’s a process that has its roots in narrative fiction. You can think of, for example, the entire complex world that J.R.R. Tolkien created for The Lord of the Rings series. And in more contemporary times, some spectacularly advanced worldbuilding is occurring now in the gaming industry. So [there are] these huge connected systems that underpin worlds in which millions of people today are playing, socializing, buying and selling goods, engaging in an economy. These are vast online worlds that are not just contained on paper as in a book, but are actually embodied in software. And over the last decade, world builders have begun to formally bring these tools outside of the entertainment business, outside of narrative fiction and gaming, film and so on, and really into society and communities. So I really define worldbuilding as a powerful act of creation.

And one of the reasons that it is so powerful is that it really facilitates collaborative creation. It’s a collaborative design practice.

Ultimately our goal is to use this tool to explore how we want to evolve as a society, as a community, and to allow ideas to emerge about what solutions and tools will be needed to adapt to that future.

One of the things where I think worldbuilding is really good is that the practice itself does not impose a single monolithic narrative. It actually encourages a multiplicity of narratives and perspectives that can coexist.

Anthony Aguirre on how we can use technology to find solutions:

I think we can use technology to solve any problem in the sense that I think technology is an extension of our capability: it’s something that we develop in order to accomplish our goals and to bring our will into fruition. So, sort of by definition, when we have goals that we want to do — problems that we want to solve — technology should in principle be part of the solution.

So I’m broadly optimistic that, as it has over and over again, technology will let us do things that we want to do better than we were previously able to do them.

Allison Duettmann on why she created the website existentialhope.com:

I do think that it’s up to everyone, really, to try to engage with the fact that we may not be doomed, and what may be on the other side. What I’m trying to do with the website, at least, is generate common knowledge to catalyze more directed coordination toward beautiful futures. I think that there [are] a lot of projects out there that are really dedicated to identifying the threats to human existence, but very few really offer guidance on [how] to influence that. So I think we should try to map the space of both peril and promise which lie before us, we should really try to aim for that. This knowledge can empower each and every one of us to navigate toward the grand future.

Josh Clark on the impact of learning about existential risks for his podcast series, The End of the World with Josh Clark:

As I was creating the series, I underwent this transition [in] how I saw existential risks, and then ultimately how I saw humanity’s future, how I saw humanity, other people, and I kind of came to love the world a lot more than I did before. Not like I disliked the world or people or anything like that. But I really love people way more than I did before I started out, just because I see that we’re kind of close to the edge here. And so the point of why I made the series kind of underwent this transition, and you can kind of tell in the series itself where it’s like information, information, information. And then, now that you have bought into this, here’s how we do something about it.

I think that one of the first steps to actually taking on existential risks is for more and more people to start talking about [them].

Anders Sandberg on a grand version of existential hope:

The thing is, my hope for the future is we get this enormous open ended future. It’s going to contain strange and frightening things but I also believe that most of it is going to be fantastic. It’s going to be roaring on the world far, far, far into the long term future of the universe probably changing a lot of the aspects of the universe.

When I use the term “existential hope,” I contrast that with existential risk. Existential risks are things that threaten to curtail our entire future, to wipe it out, to make it too much smaller than it could be. Existential hope, to me, means that maybe the future is grander than we expect. Maybe we have chances we’ve never seen, and I think we are going to be surprised by many things in [the] future, and some of them are going to be wonderful surprises. That is the real existential hope.

Right now, this sounds totally utopian. Would you expect all humans to get together and agree on something philosophical? That sounds really unlikely. Then again, a few centuries ago the United Nations and the internet would [have seemed] totally absurd. The future is big; we have a lot of centuries ahead of us, hopefully.

From everyone at FLI, we wish you a happy holiday season and a wonderful New Year full of hope!

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about Recent News

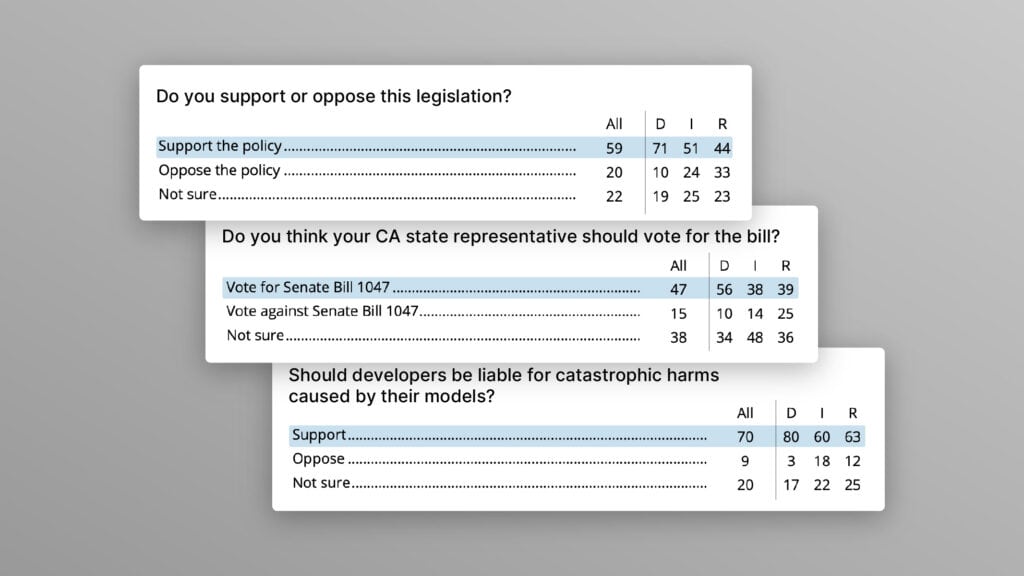

Poll Shows Broad Popularity of CA SB1047 to Regulate AI

FLI Praises AI Whistleblowers While Calling for Stronger Protections and Regulation

US Senate Hearing ‘Oversight of AI: Principles for Regulation’: Statement from the Future of Life Institute