MIRI: Artificial Intelligence: The Danger of Good Intentions

Contents

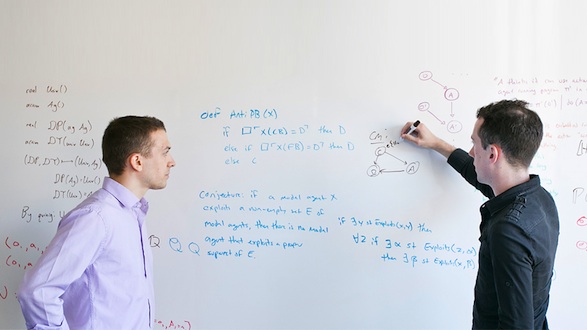

The Machine Intelligence Research Institute

Credit: Vivian Johnson

The Terminator had Skynet, an intelligent computer system that turned against humanity, while the astronauts in 2001: A Space Odyssey were tormented by their spaceship’s sentient computer HAL 9000, which had gone rogue. The idea that artificial systems could gain consciousness and try to destroy us has become such a cliché in science fiction that it now seems almost silly. But prominent experts in computer science, psychology, and economics warn that while the threat is probably more banal than those depicted in novels and movies, it is just as real—and unfortunately much more challenging to overcome.

The core concern is that getting an entity with artificial intelligence (AI) to do what you want isn’t as simple as giving it a specific goal. Humans know to balance any one aim with others and with our shared values and common sense. But without that understanding, an AI might easily pose risks to our safety, with no malevolence or even consciousness required. Addressing this danger is an enormous—and very technical—problem, but that’s the task that researchers at the Machine Intelligence Research Institute (MIRI), in Berkeley, California are taking on.

MIRI grew from the Singularity Institute for Artificial Intelligence (SIAI), which was founded in 2000 by Eliezer Yudkowsky and initially funded by Internet entrepreneurs Brian and Sabine Atkins. Largely self-educated, Yudkowsky became interested in AI after reading about the movement to improve human capabilities with technology in his teens. For the most part, he hasn’t looked back. Though he’s written about psychology and philosophy of science for general audiences, Yudkowsky’s research has always been concerned with AI.

Back in 2000, Yudkowsky had somewhat different aims. Rather than focusing on the potential dangers of AI, his original goals reflected the optimism surrounding the subject, at that time. “Amusingly,” Luke Muehlhauser, now MIRI’s executive director and a former IT administrator who first visited the institute in 2011, says, “the Institute was founded to accelerate toward artificial intelligence.”

Teaching Values

However, it wasn’t long before Yudkowsky realised that the more important challenge was figuring out how to do that safely by getting AI to incorporate our values in their decision making. “It caused me to realize, with some dismay, that it was actually going to be technically very hard,” Yudkowsky says, even by comparison with the problem of creating a hyperintelligent machine capable of thinking about whatever sorts of problems we might give it.

In 2013, SIAI rebranded itself as MIRI, with a largely new staff, in order to refocus on the rich scientific problems related to creating well-behaved AI. To get a handle on this challenge, Muehlhauser suggests, consider assigning a robot with superhuman intelligence the task of making paper clips. The robot has a great deal of computational power and general intelligence at its disposal, so it ought to have an easy time figuring out how to fulfil its purpose, right?

anthropomorphic blind

spots.

Not really. Human reasoning is based on an understanding derived from a combination of personal experience and collective knowledge derived over generations, explains MIRI researcher Nate Soares, who trained in computer science in college. For example, you don’t have to tell managers not to risk their employees’ lives or strip mine the planet to make more paper clips. But AI paper-clip makers are vulnerable to making such mistakes because they do not share our wealth of knowledge. Even if they did, there’s no guarantee that human-engineered intelligent systems would process that knowledge the same way we would.

Worse, Soares says, we cannot just program the AI with the right ways to deal with any conceivable circumstance it may come across because among our human weaknesses is a difficulty enumerating all possible scenarios. Nor can we rely on just pulling the machine’s plug if it goes awry. A sufficiently intelligent machine handed a task but lacking a moral and ethical compass would likely disable the off switch because it would quickly figure out that its presence could prevent it from achieving the goal we gave it. “Your lines of defense are up against something super intelligent,” say Soares.

Pondering the perfect paper clip.

How can we train AI to care less about their goals?

Credit: tktk

So who is qualified to go up against an overly zealous AI? The challenges now being identified are so new that no single person has the perfect training to work out the right direction, says Muehlhauser. With this in mind, MIRI’s directors have hired from diverse backgrounds. Soares, for example, studied computer science, economics, and mathematics as an undergraduate and worked as a software engineer prior to joining the institute—probably exactly what the team needs. MIRI, Soares says, is “sort of carving out a cross section of many different fields,” including philosophy and computer science. That’s essential, Soares adds, because understanding how to make artificial intelligence safe will take a variety of perspectives to help create the right conceptual and mathematical framework.

Programming Indifference

One promising idea to ensure that AI behave well is enabling them to take constructive criticism. AI has built-in incentives to remove restrictions people place on it if it thinks it can reach its goals faster. So how can you persuade AI to cooperate with engineers offering corrective action? Soares’ answer is to program in a kind of indifference; if a machine doesn’t care which purpose it pursues, perhaps it wouldn’t mind so much if its creators wanted to modify its present goal.

There is also an issue about how we can be sure that, even with our best safeguards in place, the AI’s design will work as intended. It turns out that some approaches to gaining that confidence run into some fundamental mathematical problems closely related to Gödel’s Incompleteness Theorem, which says that in any logical system, there are statements that cannot be proved true or false. That’s something of a problem if you want to anticipate what your logical system—your AI—is going to do. “It’s much harder than it looks to formalize” those sorts of ideas mathematically, Soares says.

But it is not just in humanity’s best interests to do so. According to the “tiling problem,” benevolent AIs that we design may be at risk of creating future generations of even smarter AIs. Those systems will likely be outside our understanding, and possibly lie beyond their AI creators’ control too. As outlandish as this seems, MIRI’s researchers stress that humans should never make the mistake of thinking that AIs will think like us, says Soares. “Humans have huge anthropomorphic blind spots,” he says.

For now, MIRI is concentrating on scaling up, by hiring more people to work on this growing list of problems. In some sense, MIRI, with its ever-evolving awareness of the dangers that lie ahead is like an AI with burgeoning consciousness. After all, as Muehlhauser says, MIRI’s research is “morphing all the time.”

About the Future of Life Institute

The Future of Life Institute (FLI) is a global think tank with a team of 20+ full-time staff operating across the US and Europe. FLI has been working to steer the development of transformative technologies towards benefitting life and away from extreme large-scale risks since its founding in 2014. Find out more about our mission or explore our work.

Related content

Other posts about AI, Partner Orgs

The U.S. Public Wants Regulation (or Prohibition) of Expert‑Level and Superhuman AI