FLI August 2016 Newsletter

How Can You Do the Most Good?

Cari Tuna and Will MacAskill at the EA Global Conference.

How can we more effectively make the world a better place? Over 1,000 concerned altruists converged on Berkeley, CA this month for the Effective Altruism Global conference to address this very question. For two and a half days, participants milled around the Berkeley campus, attending talks, discussions, and workshops to learn about efforts currently underway to improve our ability not just to do good in the world, but to do the most good.

Since not everyone could make it to the conference, we put together some highlights of the event. Where possible, we’ve included links to videos of the talks and discussions.

Learn more about the EA movement and how you can do the most good!

As part of his own talk at the EA Global conference, Max Tegmark created a new feature on the FLI website that clears up some common myths about advanced AI, so that we can start focusing on the more interesting and important debates within the AI field.

Podcasts: Follow us on SoundCloud!

Concrete Problems in AI Safety with Dario Amodei and Seth Baum

Many researchers in artificial intelligence worry about the potential short-term consequences of AI development. Yet far fewer want to think about the long-term risks from more advanced AI. Why? To start answering that question, it helps to understand what issues could arise as AI is developed over the next 5-10 years. It also helps to understand the concerns that AI researchers actually have about safety, as opposed to the fears often raised in the press.

We brought on Dario Amodei and Seth Baum to discuss just that. Amodei, who now works at OpenAI, was the lead author of the recent, well-received paper Concrete Problems in AI Safety. Baum is the Executive Director of the Global Catastrophic Risk Institute, where much of his research also focuses on AI safety.

What We’ve Been Up to This Month

Noteworthy Events

Congratulations to Stuart Russell on the recently announced launch of the Center for Human-Compatible AI! Read more about his new center, which is funded primarily by a generous grant from the Open Philanthropy Project, with additional assistance from FLI and DARPA.

Papers

Why does deep learning work so well? Max Tegmark and a student just posted a paper arguing that part of the answer lies not in mathematics but in physics: deep learning makes approximations that are remarkably well matched to the laws of nature. We often treat deep learning as a mysterious black box, much like a child’s brain: we know that if we train it according to a certain curriculum, it will learn certain skills, but we don’t fully understand why. A better understanding of why it works might help make AI both more capable and more robust.

Conferences

In addition to the EA Global conference, which four FLI members attended, Richard Mallah and Viktoriya Krakovna have also travelled far and wide to share ideas and learn more about AI and machine learning.

Mallah participated in:

Symposium on Ethics of Autonomous Systems (SEAS Europe)

Conversations on Ethical and Social Implications of Artificial Intelligence (An IEEE TechEthics™ Event)

Krakovna attended the Deep Learning Summer School and wrote a post about the highlights of the event.

ICYMI: This Month’s Most Popular Articles

Op-ed: Education for the Future – Curriculum Redesign

By Charles Fadel

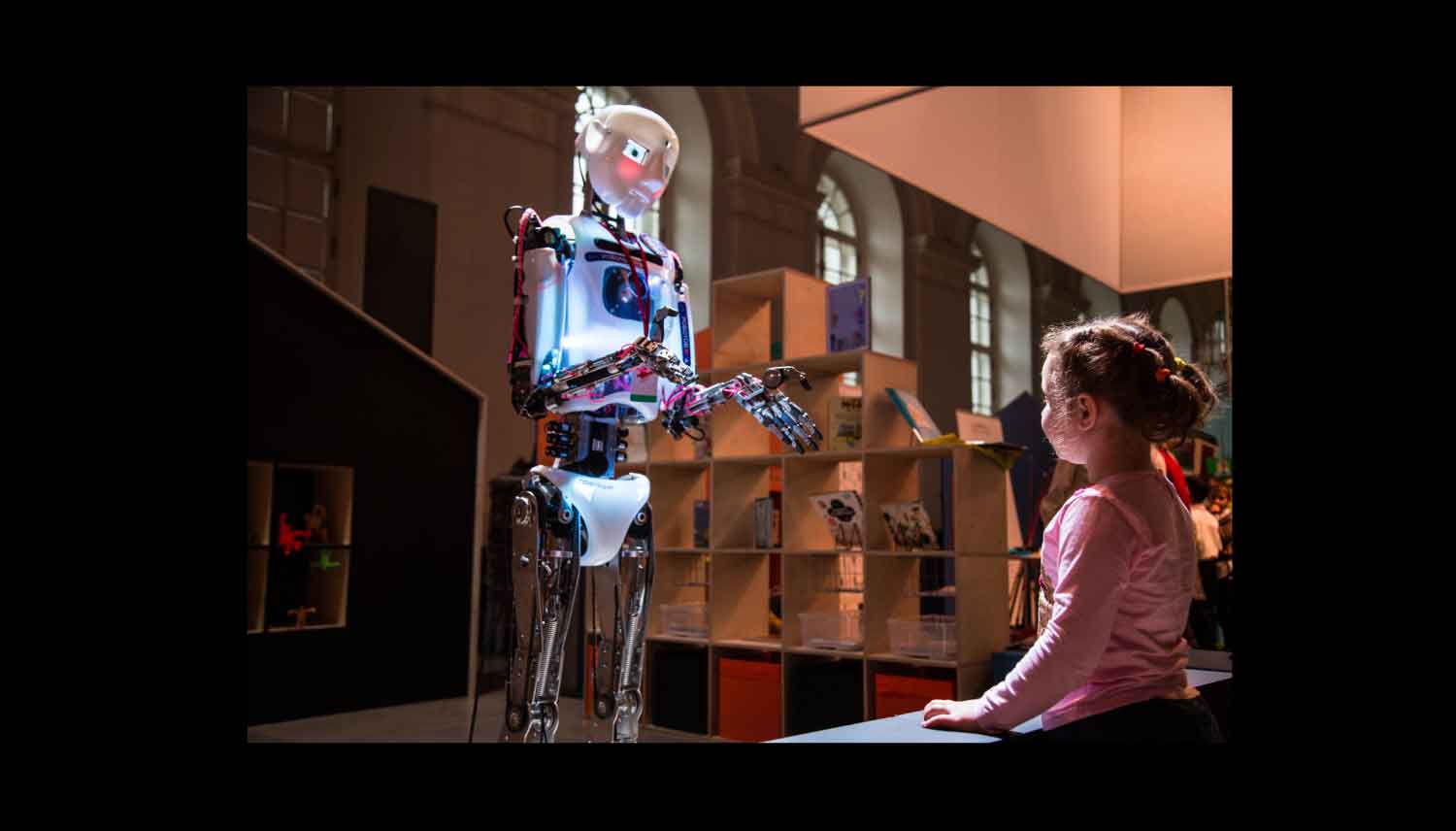

At the heart of ensuring the best possible future lies education. Experts may argue over exactly what the future will bring, but most agree that the job market, the economy, and society as a whole are about to see major changes. Automation and artificial intelligence are on the rise, interactions are increasingly global, and technology is rapidly changing the landscape. Many worry that the education system is increasingly outdated and unable to prepare students for the world they’ll graduate into, both for life and for employment.

Clopen AI – Openness in Different Aspects of AI Development

By Viktoriya Krakovna

There is disagreement about how open AI development should be, both within the AI safety community and outside it. Many people are justifiably afraid of concentrating the power to create AGI and determine its values in the hands of a single company or organization. Many others are concerned about the information hazards of open-sourcing AGI and the resulting potential for misuse.

Developing Countries Can’t Afford Climate Change

By Tucker Davey

Developing countries currently cannot sustain themselves, let alone grow, without relying heavily on fossil fuels. Global warming typically takes a back seat to feeding, housing, and employing these countries’ citizens. Yet weather fluctuations and other consequences of climate change are already affecting food production in many of these countries. Is there a solution?

Note From FLI:

The FLI website now features a growing number of op-eds. One of our goals for the website is to inspire discussion and the sharing of ideas, so we’re posting opinion pieces that we believe will help spur discussion within our community. However, these op-eds do not necessarily represent FLI’s opinions or views.

We’re also looking for more writing volunteers. If you’re interested in writing either news or opinion articles for us, please let us know.