FLI Governance Scorecard and Safety Standards Policy (SSP)

Evaluating proposals for AI governance and providing a regulatory framework for robust safety standards, measures and oversight.

Introduction

AI remains the only powerful technology lacking meaningful binding safety standards. This is not for lack of risks. The rapid development and deployment of ever-more powerful systems is now absorbing more investment than any other technology. Along with great benefits and promise, we are already witnessing widespread harms such as mass disinformation, deepfakes and bias – all on track to worsen at the currently unchecked, unregulated and frantic pace of development. As AI systems get more sophisticated, they could further destabilize labor markets and political institutions, and continue to concentrate enormous power in the hands of a small number of unelected corporations. They could threaten national security by facilitating the inexpensive development of chemical, biological, and cyber weapons by non-state groups. And they could pursue goals, either human- or self-assigned, in ways that place negligible value on human rights, human safety, or, in the most harrowing scenarios, human existence.

Despite acknowledging these risks, AI companies have been unwilling or unable to slow down. There is an urgent need for lawmakers to step in to protect people, safeguard innovation, and help ensure that AI is developed and deployed for the benefit of everyone. This is common practice with other technologies. Requiring tech companies to demonstrate compliance with safety standards enforced by e.g. the FDA, FAA or NRC keeps food, drugs, airplanes and nuclear reactors safe, and ensures sustainable innovation. Society can enjoy these technologies’ benefits while avoiding their harms. Why wouldn’t we want the same with AI?

With this in mind, the Future of Life Institute (FLI) has undertaken a comparison of AI governance proposals, and put forward a safety framework which looks to combine effective regulatory measures with specific safety standards.

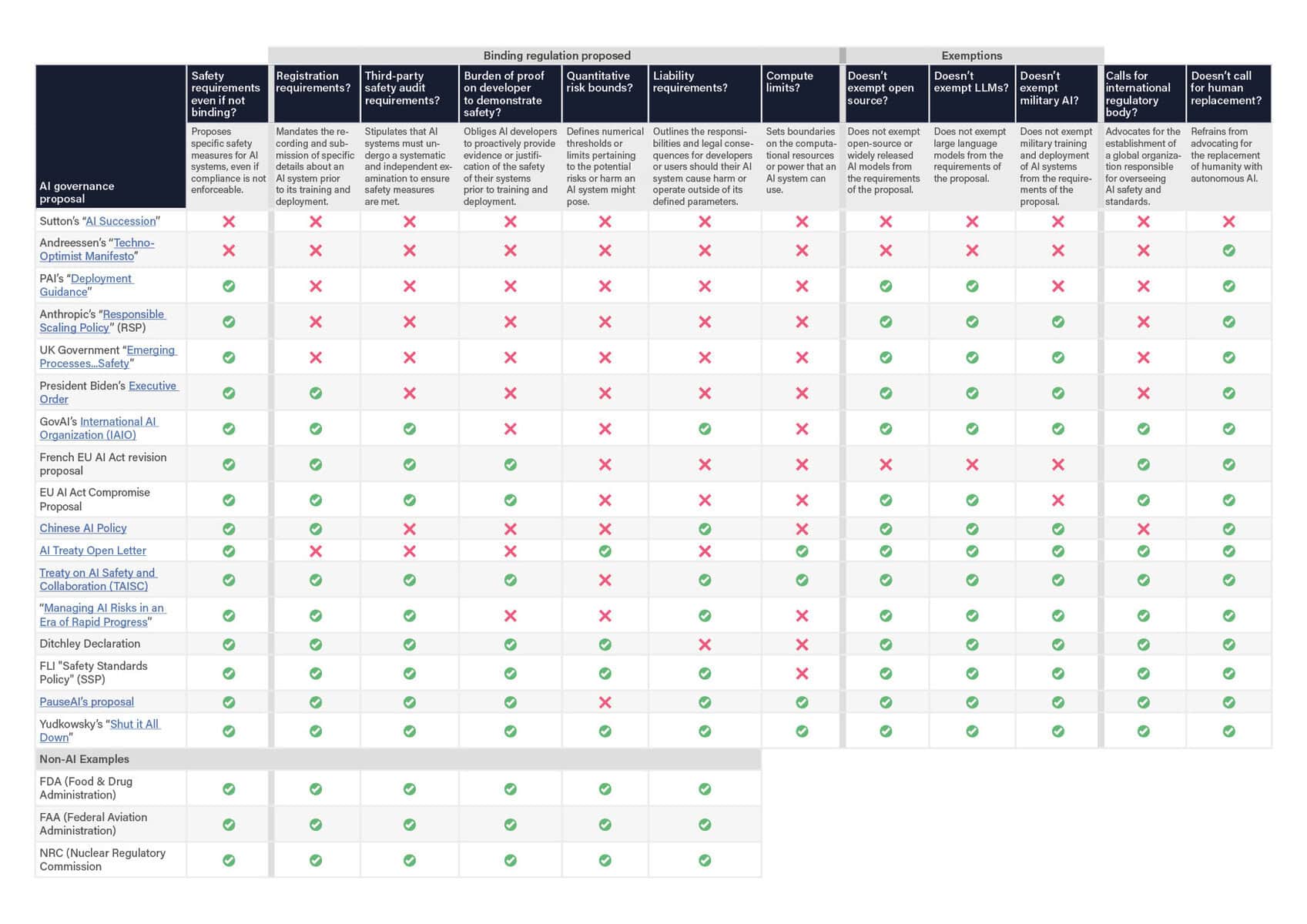

AI Governance Scorecard

Recent months have seen a wide range of AI governance proposals. FLI has analyzed the different proposals side-by-side, evaluating them in terms of the different measures required. The results can be found below. The comparison demonstrates key differences between proposals, but, just as importantly, the consensus around necessary safety requirements. The scorecard focuses particularly on concrete and enforceable requirements, because strong competitive pressures suggest that voluntary guidelines will be insufficient.

The policies fall into two main categories: those with binding safety standards (akin to the situation in e.g. the food, biotech, aviation, automotive and nuclear industries) and those without (focusing on industry self-regulation or voluntary guidelines). For example, Anthropic’s Responsible Scaling Policy (RSP) and FLI’s Safety Standards Policy (SSP) are directly comparable in that they both build on four AI Safety Levels – but where FLI advocates for an immediate pause on AI not currently meeting the safety standards below, Anthropic’s RSP allows development to continue as long as companies consider it safe. The FLI SSP checks many of the same boxes as the other proposals that insist on binding standards, and can thus be viewed, alongside Anthropic’s RSP, as a more detailed and specific variant of them.

FLI Safety Standards Policy (SSP)

Taking this evaluation and our own previous policy recommendations into account, FLI has outlined an AI safety framework that incorporates the necessary standards, oversight and enforcement to mitigate risks, prevent harms, and safeguard innovation. It seeks to combine the “hard-law” regulatory measures necessary to ensure compliance – and therefore safety – with the technical criteria necessary for practical, real-world implementation.

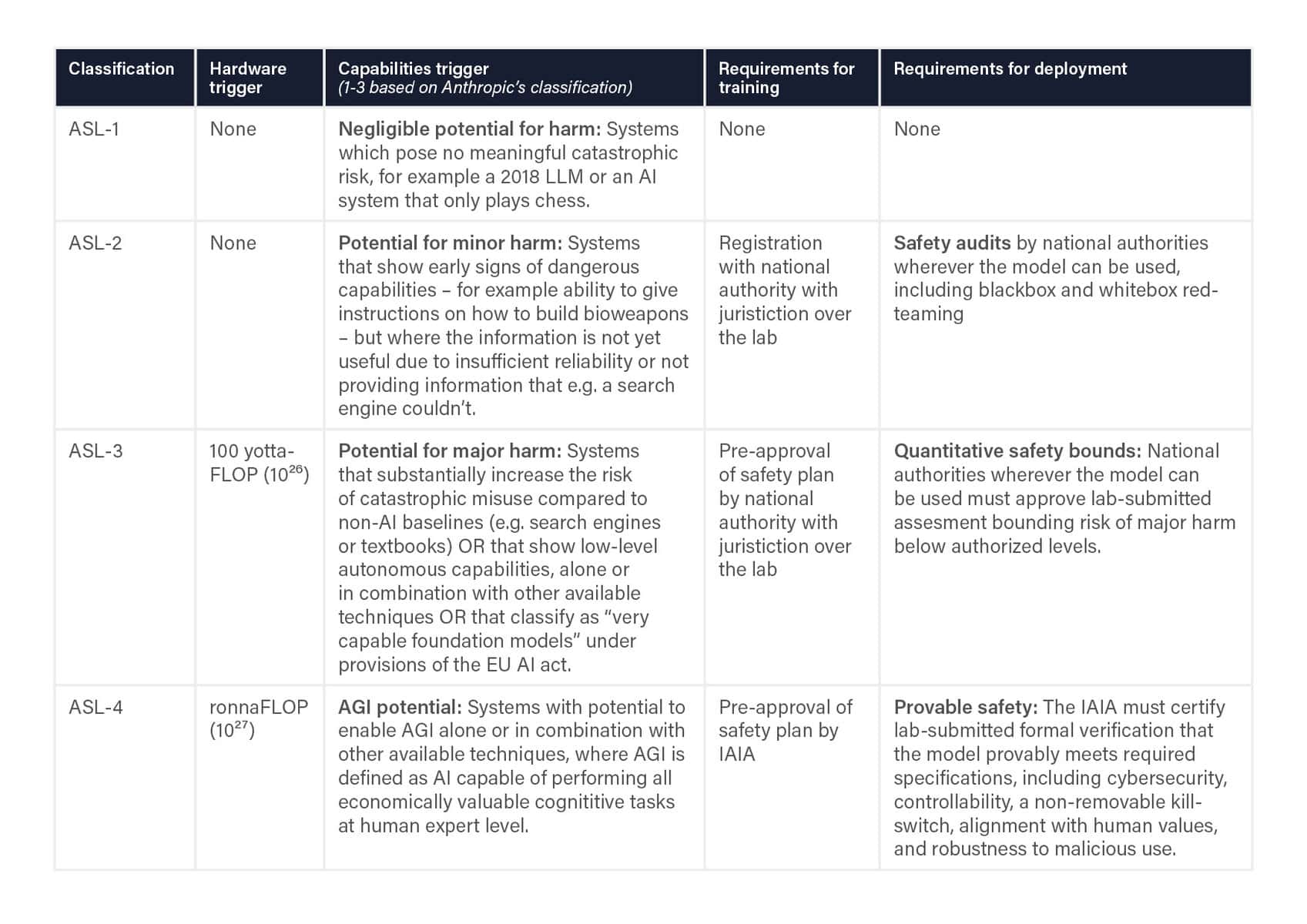

The framework contains specific technical criteria to distinguish different safety levels. Each of these calls for a specific set of hard requirements before training and deploying such systems, enforced by national or international governing bodies. While these are being enacted, FLI advocates for an immediate pause on all AI systems that do not meet the outlined safety standards.

Crucially, this framework differs from those put forward by AI companies (such as Anthropic’s ‘Responsible Scaling Policy’ proposal) as well as those organized by other bodies such as the Partnership on AI and the UK Task Force, by calling for legally binding requirements – as opposed to relying on corporate self-regulation or voluntary commitments.

The framework is by no means exhaustive, and will require more specification. After all, the project of AI governance is complex and perennial. Nonetheless, implementing this framework, which largely reflects a broader consensus among AI policy experts, will serve as a strong foundation.

Table 2: FLI’s Proposed Policy Framework.

Clarifications

Triggers: A given ASL-classification is triggered if either the hardware trigger or the capabilities trigger applies.

Registration: This includes both training plans (data, model and compute specifications) and subsequent incident reporting. National authorities decide what information to share.

Safety audits: This includes both cybersecurity (preventing unauthorized model access) and model safety, using white-box and black-box evaluations (with and without access to system internals, respectively).

Responsibility: Safety approvals are broadly modeled on the FDA approach, where the onus is on AI labs to demonstrate to government-appointed experts that they meet the safety requirements.

IAIA international coordination: Once key players have national AI regulatory bodies, they should aim to coordinate and harmonize regulation via an international regulatory body, which could be modeled on the IAEA. In the table above, this body is referred to as the IAIA (“International AI Agency”), without making assumptions about its actual name. In the interim before the IAIA is constituted, ASL-4 systems require UN Security Council approval.

Liability: Developers of systems above ASL-1 are liable for harm to which their models or derivatives contribute, either directly or indirectly (via e.g. API use, open-sourcing, weight leaks or weight hacks).

Kill-switches: Systems above ASL-3 need to include non-removable kill-switches that allow appropriate authorities to safely terminate them and any copies.

Risk quantification: Quantitative risk bounds are broadly modeled on the practice in e.g. aircraft safety, nuclear safety and drug safety, with quantitative analysis producing probabilities for various harms occurring. A security mindset is adopted, whereby the probability of harm factors in the possibility of adversarial attacks.

Compute triggers: These can be updated by the IAIA, e.g. lowered in response to algorithmic improvements.
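
The trigger, liability and kill-switch clarifications above can be read as a simple decision rule. The following is a minimal sketch of that reading, not part of the framework itself. The names `classify`, `hardware_level` and `Obligations` are hypothetical, and the compute thresholds are arbitrary placeholders rather than the values in the policy table. The sketch also assumes that the highest triggered level applies and that a threshold is met when compute is greater than or equal to it.

```python
from dataclasses import dataclass
from enum import IntEnum


class ASL(IntEnum):
    ASL_1 = 1
    ASL_2 = 2
    ASL_3 = 3
    ASL_4 = 4


# HYPOTHETICAL placeholder thresholds in arbitrary compute units.
# These are NOT the figures from the policy table; per the text, the
# real triggers could be updated (e.g. lowered) by the IAIA.
PLACEHOLDER_COMPUTE_TRIGGERS = {ASL.ASL_2: 1e3, ASL.ASL_3: 1e6, ASL.ASL_4: 1e9}


@dataclass(frozen=True)
class Obligations:
    level: ASL
    developer_liable: bool      # applies to systems above ASL-1
    kill_switch_required: bool  # applies to systems above ASL-3


def hardware_level(compute: float, triggers: dict) -> ASL:
    """Highest level whose compute threshold is met (assumed: >=)."""
    level = ASL.ASL_1
    for asl, threshold in triggers.items():
        if compute >= threshold:
            level = max(level, asl)
    return level


def classify(compute: float, capability_level: ASL,
             triggers: dict = PLACEHOLDER_COMPUTE_TRIGGERS) -> Obligations:
    """A level is triggered if EITHER the hardware trigger OR the
    capabilities trigger applies, so take the higher of the two."""
    level = max(hardware_level(compute, triggers), capability_level)
    return Obligations(
        level=level,
        developer_liable=level > ASL.ASL_1,
        kill_switch_required=level > ASL.ASL_3,
    )


if __name__ == "__main__":
    # Low compute, but capability evaluations place the system at ASL-3.
    print(classify(10, ASL.ASL_3))
    # The same system under lowered (still hypothetical) compute triggers.
    lowered = {ASL.ASL_2: 1, ASL.ASL_3: 5, ASL.ASL_4: 9}
    print(classify(10, ASL.ASL_1, lowered))
```

Passing the thresholds as a parameter mirrors the point that compute triggers are meant to be adjustable over time, while the capability level is treated as an input supplied by separate safety evaluations.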

Why regulate now?

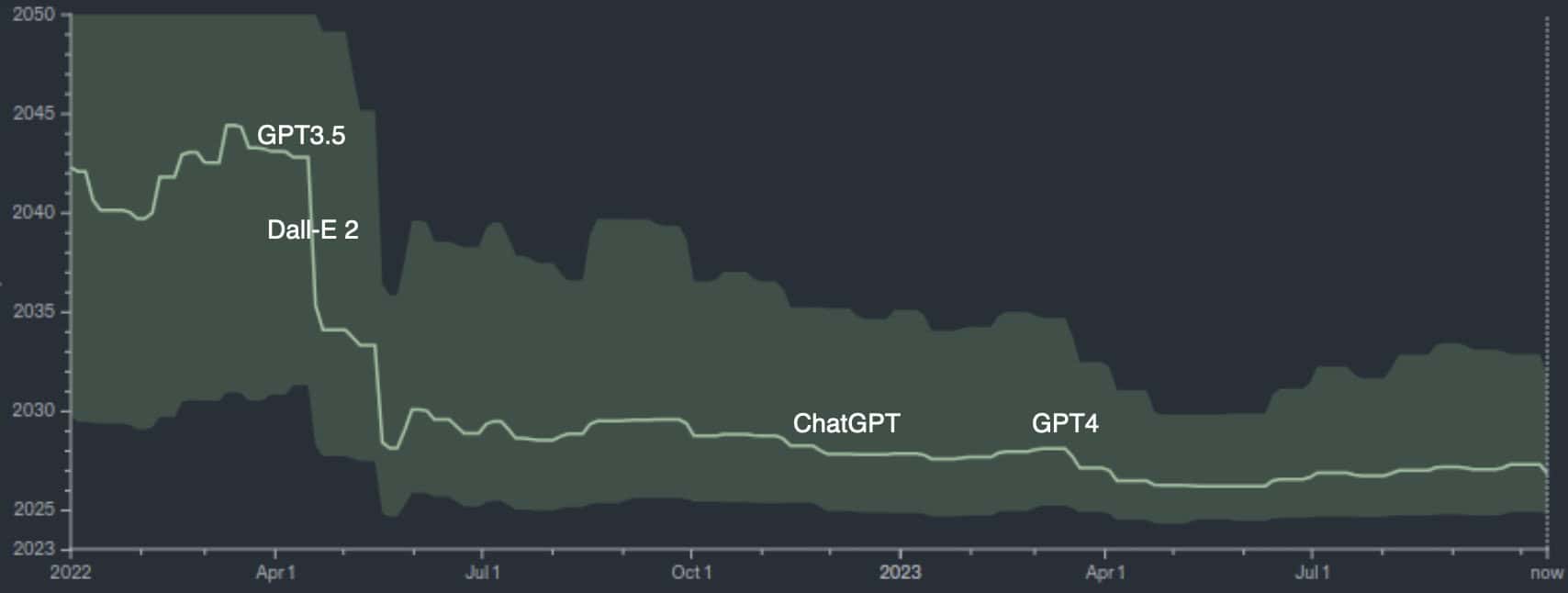

Until recently, most AI experts expected truly transformative AI impact to be at least decades away, and viewed associated risks as “long-term”. However, recent AI breakthroughs have dramatically shortened timelines, making it necessary to consider these risks now. The plot below (courtesy of the Metaculus prediction site) shows that the number of years remaining until (their definition of) Artificial General Intelligence (AGI) is reached has plummeted from twenty years to three in the last eighteen months, and many leading experts concur.

Image: ‘When will the first weakly general AI system be devised, tested, and publicly announced?’ at Metaculus.com

For example, Anthropic CEO Dario Amodei predicted AGI in 2-3 years, with a 10-25% chance of an ultimately catastrophic outcome. AGI risks range from exacerbating all the aforementioned immediate threats, to major human disempowerment and even extinction – an extreme outcome warned about by industry leaders (e.g. the CEOs of OpenAI, Google DeepMind & Anthropic), academic AI pioneers (e.g. Geoffrey Hinton & Yoshua Bengio) and leading policymakers (e.g. European Commission President Ursula von der Leyen and UK Prime Minister Rishi Sunak).

Reducing risks while reaping rewards

Returning to our comparison of AI governance proposals, our analysis revealed a clear split between those that do, and those that don’t, consider AGI-related risk. To see this more clearly, it is convenient to split AI development crudely into two categories: commercial AI and AGI pursuit. By commercial AI, we mean all uses of AI that are currently commercially valuable (e.g. improved medical diagnostics, self-driving cars, industrial robots, art generation and productivity-boosting large language models), be they for-profit or open-source. By AGI pursuit, we mean the quest to build AGI and ultimately superintelligence that could render humans economically obsolete. Although building such systems is the stated goal of OpenAI, Google DeepMind, and Anthropic, the CEOs of all three companies have acknowledged the grave associated risks and the need to proceed with caution.

The AI benefits that most people are excited about come from commercial AI, and don’t require AGI pursuit. AGI pursuit is covered by ASL-4 in the FLI SSP, and motivates the compute limits in many proposals: the common theme is for society to enjoy the benefits of commercial AI without recklessly rushing to build more and more powerful systems in a manner that carries significant risk for little immediate gain. In other words, we can have our cake and eat it too. We can have a long and amazing future with this remarkable technology. So let’s not pause AI. Instead, let’s stop training ever-larger models until they meet reasonable safety standards.