The Challenge of Diversity in the AI World

Let me start this post with a personal anecdote. At one of the first AI conferences I attended, every single one of the 15 or so speakers who presented on the conference’s first day was a man. Finally, about 3/4 of the way through the two-day conference, a quartet of presentations on the social and economic impact of AI included two presentations by women. Those two women also participated in the panel discussion that immediately followed the presentations–except that “participated” might be too strong a word, because the panel discussion essentially consisted of the two men on the panel arguing with each other for twenty minutes.

It gave off the uncomfortable impression (to me, at least) that even when women are seen in the AI world, it should be expected that they will immediately fade into the background once someone with a Y chromosome shows up. And the ethnic and racial diversity was scarcely better–I could probably count on one hand the number of people credentialed at the conference who were not either white or Asian.

Fast forward to this past week, when the White House’s Office of Science and Technology Policy released a request for information (RFI) on the promise and potential pitfalls of AI. A Request for Information on AI doesn’t mean that the White House only heard about AI for the first time last week and is looking for someone to send them the link to relevant articles on Wikipedia. Rather, a request for information issued by a governmental entity is a formal call for public comment on a particular topic that the entity wishes to examine more closely.

The RFI on AI specifies 10 areas (plus one “everything else” option) in which it is seeking comment, including:

(1) the legal and governance implications of AI; (2) the use of AI for public good; (3) the safety and control issues for AI; (4) the social and economic implications of AI; (5) the most pressing, fundamental questions in AI research, common to most or all scientific fields . . .

The first four of these topics are the ones most directly relevant to this blog, and I likely will be submitting a comment on, not surprisingly, topic #1. But one of the most significant AI-related challenges is one that the White House probably was not even thinking about–namely, the AI world’s “sea of dudes” or “white guy” problem.

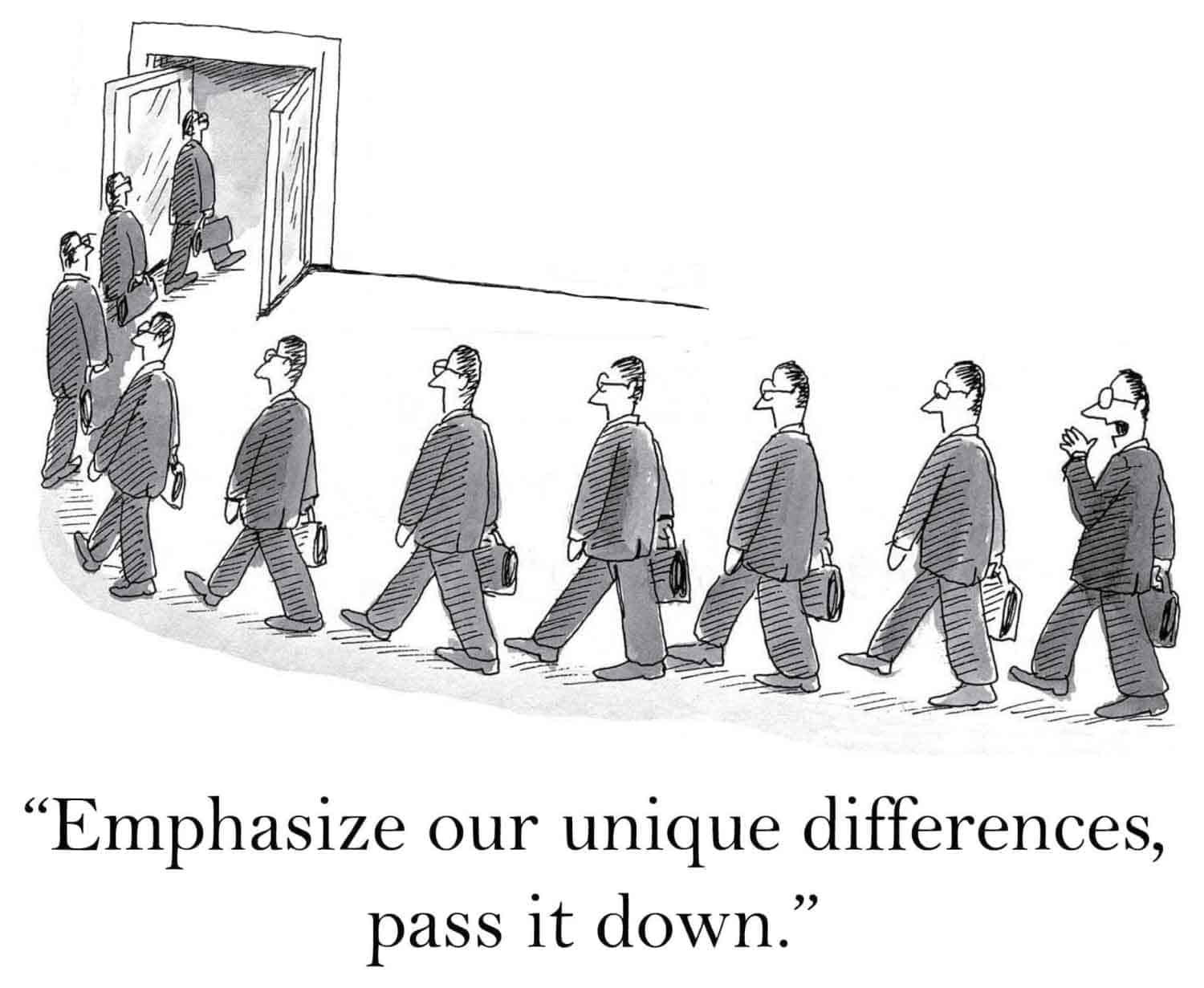

Anyone who has been to an AI conference in the US or Europe can tell you that the anecdote at the top of this post is not an aberration. Attendees at AI conferences are predominantly white and overwhelmingly male. According to a story that ran this week in Bloomberg, 86.3% of NIPS attendees last year were male. Lack of diversity in the tech industry and in computer science is not new, but as the Bloomberg piece notes, it has particularly worrying implications for AI:

To teach computers about the world, researchers have to gather massive data sets of almost everything. To learn to identify flowers, you need to feed a computer tens of thousands of photos of flowers so that when it sees a photograph of a daffodil in poor light, it can draw on its experience and work out what it’s seeing.

If these data sets aren’t sufficiently broad, then companies can create AIs with biases. Speech recognition software with a data set that only contains people speaking in proper, stilted British English will have a hard time understanding the slang and diction of someone from an inner city in America. If everyone teaching computers to act like humans are men, then the machines will have a view of the world that’s narrow by default and, through the curation of data sets, possibly biased.

In other words, lack of diversity in AI is not merely a social or cultural concern; it actually has serious implications for how the technology itself develops.

A column by Kate Crawford that appeared in the New York Times this weekend makes this point even more aggressively, arguing that AI

may already be exacerbating inequality in the workplace, at home and in our legal and judicial systems. Sexism, racism and other forms of discrimination are being built into the machine-learning algorithms that underlie the technology behind many “intelligent” systems that shape how we are categorized and advertised to.

Professor Fei-Fei Li–one of the comparatively small number of female stars in the AI research world–brought this point home at a Stanford forum on AI this past week, arguing that whether AI can bring about the “hope we have for tomorrow” depends in part on broadening gender diversity in the AI world. (Not AI-related, but Phil Torres recently made a post on the Future of Life Institute’s website explaining how greater female participation is necessary to maximize our collective intelligence.)

I think the problem goes even deeper than building demographically representative data sets. I question whether the AI world’s entire approach to “intelligence” is unduly affected by its lack of diversity. I suspect that women and people of African, Hispanic, Middle Eastern, and other underrepresented descent would bring different perspectives on what “intelligence” is and what directions AI research should take. Maybe I’m wrong about that, but we can’t know unless they are given a seat at the table and a chance to make their voices heard.

So if the White House wants to look at one of “the most pressing, fundamental questions in AI research,” I would heartily suggest that its initiative focus not only on the research itself, but also on the people who are tasked with conducting that research.
