Digital Media Accelerator

Applicant Portal

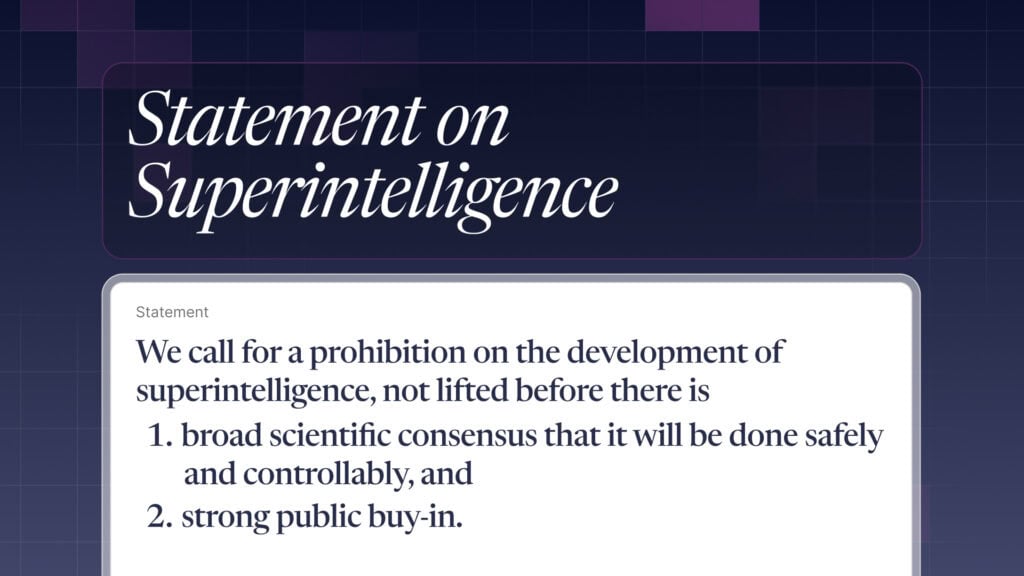

With AI companies recklessly racing, unchecked, to build increasingly powerful AI - including artificial general intelligence (AGI) - despite having no way to control it, the rest of humanity is being hurled towards a dangerous and uncertain future. Top AI experts agree that mitigating the risk of extinction from AI should be a global priority, and AI company leaders themselves have explicitly acknowledged the dangers of the technology they're building. It is not too late to change trajectory, however. We can bring about a secure, prosperous future with controllable, reliable Tool AI - systems intentionally built to empower humans rather than replace us - if we're willing to take decisive action now.

However, public awareness and understanding remain limited. The FLI Digital Media Accelerator program aims to help creators produce content, grow their channels, and spread the word to new audiences. We're looking to support creators who can explain complex AI issues - such as AGI implications, control problems, misaligned goals, or Big Tech power concentration - in ways that their audiences can understand and relate to. By supporting various content formats across different platforms, we want to spark broader conversations about the risks of developing ever more powerful AI and AGI.

We are keen to support creators who:

- Already have a following, and are interested in raising awareness about AI safety and risk;

- Have compelling ideas for impactful pieces of content to bring AI safety/risk awareness to new audiences; and

- Are interested in discussing AI safety in their content on an ongoing basis, incorporating it into their regular output. We are especially eager to support these creators.

Please see below for examples of content we may be interested in funding:

- A series of YouTube explainer videos about AI safety topics

- A channel, such as a TikTok account, dedicated to daily AI news

- A Substack or newsletter dedicated to explaining new AI safety research papers

- An existing account with a specific focus (e.g. education) creating a short series exploring labor impacts of advanced AGI

- An existing tech updates channel sharing more about AI risk

- A podcast series for the general listener on progress towards AGI and its implications

Please reach out to maggie@futureoflife.org if you have any questions.

Application form
