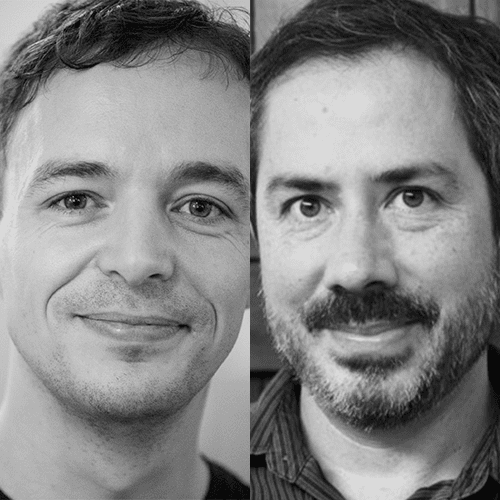

Joscha Bach and Anthony Aguirre on Digital Physics and Moving Towards Beneficial Futures

- Understanding the universe through digital physics

- How human consciousness operates and is structured

- The path to aligned AGI and bottlenecks to beneficial futures

- Incentive structures and collective coordination

You can find FLI’s three new policy-focused job postings at futureoflife.org/job-postings.

1:06:53 A future with one, several, or many AGI systems? How do we maintain appropriate incentive structures?

1:19:39 Non-duality and collective coordination

1:22:53 What difficulties are there for an idealist worldview that involves computation?

1:27:20 Which features of mind and consciousness are necessarily coupled and which aren't?

1:36:40 Joscha's final thoughts on AGI

Transcript

Lucas Perry: Welcome to the Future of Life Institute Podcast. I’m Lucas Perry. Today’s episode is with Joscha Bach and Anthony Aguirre where we explore what truth is, how the universe ultimately may be computational, what purpose and meaning are relative to life’s drive towards complexity and order, collective coordination problems, incentive structures, and how these both affect civilizational longevity, and whether having a single, multiple, or many AGI systems existing simultaneously is more likely to lead to beneficial outcomes for humanity.

If you enjoy this podcast, you can subscribe on your preferred podcasting platform by searching for the Future of Life Institute Podcast or you can help out by leaving us a review on iTunes. These reviews are a big help for getting the podcast to more people.

Before we get into the episode, the Future of Life Institute has three new job postings for full-time-equivalent, remote, policy-focused positions. We’re looking for a Director of European Policy, a Policy Advocate, and a Policy Researcher. These openings will mainly be focused on AI policy and governance. Additional policy areas of interest may include lethal autonomous weapons, synthetic biology, nuclear weapons policy, and the management of existential and global catastrophic risk. You can find more details about these positions at futureoflife.org/job-postings. Link in the description. The deadline for submission is April 4th, and if you have any questions about any of these positions, feel free to reach out to jobsadmin@futureoflife.org.

Joscha Bach is a cognitive scientist and AI researcher working as a Principal AI Engineer at Intel Labs. He was previously a VP of Research for AI Foundation, and a Research Scientist at both the MIT Media Lab as well as the Harvard Program for Evolutionary Dynamics. Joscha earned his Ph.D. in cognitive science and has built computational models of motivated decision making, perception, categorization, and concept-formation.

Anthony Aguirre is a physicist who studies the formation, nature, and evolution of the universe, focusing primarily on the model of eternal inflation (the idea that inflation goes on forever in some regions of the universe) and what it may mean for the ultimate beginning of the universe and time. He is the co-founder and Associate Scientific Director of the Foundational Questions Institute and is also a co-founder of the Future of Life Institute. He also co-founded Metaculus, which is an effort to optimally aggregate predictions about scientific discoveries, technological breakthroughs, and other interesting issues.

And with that, let’s get into our conversation with Joscha Bach and Anthony Aguirre.

All right, let's get into it. Let's start off with a simple question here. What is truth?

Joscha Bach: I don't think that we can define truth outside of the context of languages. And so truth is typically defined formally in formal languages, which means in the domain of mathematics. In philosophy, we have an informal notion of truth and a vast array of truth theories.

And typically, I think when we talk about any kind of external reality, we cannot know truth in the sense in which we can know it in a formal system. What we can describe is whether a model is predictive. And so we end up looking at a space of theories, and we try to determine the shape of that space.

Lucas Perry: Do you think that there are kinds of knowledge that exist outside of that space? For example, do I have knowledge that I am aware?

Joscha Bach: I think that knowledge exists only inside of the mind, that is, inside of an agent that observes things. Knowledge is not arbitrary, of course, because a lot of knowledge is formally derived, which means it has a similar structure to a fractal, for instance, the Mandelbrot fractal, where you start out with some initial conditions and some rules, and then you derive additional information from that. But essentially, knowledge is conditional because it depends on some axiomatic presupposition that you need to introduce.
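To make the fractal analogy concrete, here is a minimal Python sketch (an illustration, not something from the conversation). The Mandelbrot set is fixed entirely by one initial condition, z = 0, and one rule, z -> z*z + c, yet deciding whether a point belongs to it means deriving new information step by step. The function name `in_mandelbrot` and the `max_iter` cutoff are illustrative choices.

```python
def in_mandelbrot(c: complex, max_iter: int = 100) -> bool:
    """Return True if c appears to belong to the Mandelbrot set.

    Starting from z = 0, the rule z -> z*z + c is applied repeatedly. Once |z|
    exceeds 2 the orbit is known to escape, so c lies outside the set.
    Surviving max_iter steps is only evidence of membership, not a proof.
    """
    z = 0j
    for _ in range(max_iter):
        z = z * z + c
        if abs(z) > 2:
            return False
    return True


# A coarse ASCII picture: every '#' is derived from the same rule and start value.
for row in range(11):
    y = 1.0 - 0.2 * row
    print("".join("#" if in_mandelbrot(complex(-2.0 + 0.05 * col, y)) else "."
                  for col in range(50)))
```

Nothing in the picture was put in by hand; raising `max_iter` only sharpens conclusions that were already implied by the rule and the starting point.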

Of course, that doesn't make it arbitrary. It means that if you make that presupposition, you get to certain types of conclusions that are somewhat inevitable. And there is a limited space of formal theories that you can make, so the set of formal languages that we can explore is limited. For instance, when we define numbers, the number, I think, is best understood as a subsequent labeling scheme. And there are a number of subsequent labeling schemes that we can use, and it turns out that they have similar properties, and we can map them onto each other.

So we have several definitions for the natural numbers, and then we can build the other types of numbers based on this initial number theory. And it turns out that other parts of mathematics are equivalent to theorems that we discover in number theory, or many of them are. So it turns out that a lot of mathematics is looking at the same fractals in different contexts. In a way, mathematics is about studying symbol games, and all languages are symbol games. I think mathematics is the set of all languages.
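The point about different labeling schemes for the natural numbers mapping onto each other can be sketched in Python. This is an illustration under our own assumptions: the two schemes (tally marks and von Neumann ordinals) and all function names are chosen for the example. Each scheme has a zero and a successor operation, and the mapping between them commutes with the successor.

```python
from typing import Callable, TypeVar

T = TypeVar("T")

# Scheme 1: tally marks. Zero is the empty string; the successor appends a stroke.
TALLY_ZERO = ""


def tally_succ(n: str) -> str:
    return n + "|"


# Scheme 2: von Neumann ordinals. Zero is the empty set; succ(n) = n ∪ {n}.
VN_ZERO: frozenset = frozenset()


def vn_succ(n: frozenset) -> frozenset:
    return n | frozenset([n])


def label(k: int, zero: T, succ: Callable[[T], T]) -> T:
    """Build the k-th label of a scheme by applying succ to zero k times."""
    x = zero
    for _ in range(k):
        x = succ(x)
    return x


def tally_to_vn(n: str) -> frozenset:
    """One von Neumann successor step per tally stroke."""
    return label(len(n), VN_ZERO, vn_succ)


def vn_to_tally(n: frozenset) -> str:
    """The von Neumann ordinal for k has exactly k elements."""
    return "|" * len(n)


for k in range(4):
    t = label(k, TALLY_ZERO, tally_succ)
    print(repr(t), "<->", len(tally_to_vn(t)), "elements")
```

The two schemes look nothing alike, yet translating a label and then taking its successor gives the same result as taking the successor first and then translating. That is the sense in which they are the same numbers.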

Anthony Aguirre: You made an interesting statement, that knowledge is something that only exists in the mind... Well, in a mind. So I suppose you're contrasting that with information. So how would you think differently about knowledge versus information? How would you draw that distinction, if you do?

Joscha Bach: I think that knowledge is applied to a particular domain. So it's regularities inside of a domain, and this domain needs to be given. And the thing that gives it is the stance of the agent that is looking at the domain.

To have knowledge, you need to have a domain that the knowledge is about. And the domain is given by an observer that is defining the domain. And it's not necessarily a very hard condition, in terms of the power of the mind that is involved here. So I don't require a subject with first person consciousness, and so on. What I do require is some system that is defining the domain.

Anthony Aguirre: So by a mind, you mean something that is defining the terms or the domain, or in some way, translating some piece of information into something with more semantic meaning?

Joscha Bach: I think a mind is a system that is able to predict information by coming up with a global model, thereby making the information explainable.

Anthony Aguirre: Okay. So predict the information, meaning there's some information stream of input, and a mind is able to create models for that stream of input to predict further elements of that stream.

Joscha Bach: At the moment, I understand the model to be a set of variances, which are parameters that can change their value. Each of them has a range of admissible values. And then you have invariances, which are computable relationships between these values that constrain the values with respect to each other. The model can be connected to variances that the model itself is not generating, which can be parameters that enter the system from the universe, so to speak, from whatever pattern generator is outside of that system. And the system can create a model to make the next set of values that are being set by the outside predictable. And I think this is how our own perception works.
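Here is a toy reading of this description as a minimal Python sketch, under our own assumptions. Variances are named integer parameters with admissible ranges. Invariances are predicates that must hold jointly. Prediction means enumerating the assignments that remain consistent with whatever values the outside has already set. The `Model` class and the constant-velocity example are hypothetical and are not Bach's actual architecture.

```python
from itertools import product
from typing import Callable, Dict, List

Assignment = Dict[str, int]


class Model:
    """Variances (named parameters with admissible ranges) plus invariances
    (computable relations that constrain the values jointly)."""

    def __init__(self, variances: Dict[str, range],
                 invariances: List[Callable[[Assignment], bool]]):
        self.variances = variances
        self.invariances = invariances

    def predict(self, observed: Assignment) -> List[Assignment]:
        """Return every full assignment consistent with the observed values and
        all invariances; the unobserved entries are the model's predictions."""
        free = [name for name in self.variances if name not in observed]
        results = []
        for values in product(*(self.variances[name] for name in free)):
            candidate = {**observed, **dict(zip(free, values))}
            if all(inv(candidate) for inv in self.invariances):
                results.append(candidate)
        return results


# Hypothetical example: an object moving at constant velocity, seen at three ticks.
motion = Model(
    variances={"p0": range(11), "p1": range(11), "p2": range(11)},
    invariances=[lambda a: a["p1"] - a["p0"] == a["p2"] - a["p1"]],
)
print(motion.predict({"p0": 2, "p1": 5}))  # the only consistent p2 is 8
```

Brute-force enumeration is chosen only for transparency. With fewer observations, more assignments survive, so the model predicts less sharply.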

Lucas Perry: So in terms of human beings, truth is purely mind dependent. It requires a human being, for example, a brain, which is a substrate that can encode information, which generates models?

Joscha Bach: It's not mind dependent, because it's going to be the same for every mind with the same properties, in terms of information processing and modeling capacity.

Lucas Perry: You mean it's not brain dependent?

Joscha Bach: It is not subjective.

Lucas Perry: It's not subjective.

Joscha Bach: Yes. So for instance, the knowledge that we derive while studying mathematics is not subjective knowledge. It's a knowledge that flows out of the constraints of the domains, and our ability to recognize these constraints.

Lucas Perry: So how does this stand in relation to accounts of truth as third-person truths, out there, floating in the universe, when we bring in the dependence on models and minds?

Joscha Bach: The first person is a construct to begin with. The first person is not immediately given. It's introduced into our system as a set of priors, and then we are stuck with it until we deconstruct it again. But once we have understood what we are, "believe" is no longer a verb. There is no relationship between the self and the set of beliefs anymore. You don't change by changing your beliefs about a certain domain. You just recognize yourself as a system that is able to perform certain operations. And every other system that is able to perform the same operations is going to be equivalent with respect to modeling the domain.

Anthony Aguirre: I think Lucas is maybe getting at a claim that there's a more direct access to knowledge or information, that we as a subjective agent are directly perceiving. There are things that you infer about the world, and there are things that you directly experience about the world as an observer in it.

So I have some sensory stream. I have access to the contents of my mind at some level, and this is very direct knowledge. Well, there's a feeling that I don't have to do a bunch of modeling to understand what's going on. And there's an interesting question of whether that's true or not. To what degree am I modeling things all the time, and that's just happening at a subconscious level?

Lucas Perry: Yeah, so a primitive version of that would be like how in Descartes' epistemology, he starts off with awareness. You start off with being aware of being aware. So that might include some kind of subconscious modeling. Though, in terms of direct experience, it doesn't really seem like it requires a model. It's kind of like a direct apprehension, which may be structured by some kind of modeling.

Joscha Bach: Of course, Descartes is telling a story when he writes all this. He is much, much further along when he writes down the story. This is not something that he started writing as a two-year-old and then followed along that path. Instead, he starts out in a highly aware state, and then backtracks and tries to retrace the steps that he had possibly taken to get where he was. When we have the impression that parts of our modeling are immediately given, it means that we don't have any knowledge about how they work. In other words, we don't have a model that we can consciously access, and so we cannot talk about it.

And when we become aware of the way in which we construct representations, we get closer to what many Eastern philosophers would call enlightenment: our ideas become visible in their nature as representations. We understand that everything that we perceive as real is, in fact, a representation happening inside of our mind. And we become aware of the mechanisms that construct these representations, and that would allow us to arrive at different representations if we parametrized these processes differently.

Anthony Aguirre: Yeah, so I'm interested in this statement, in comparison to an earlier statement you made about what is not subjective. When you talk about the construction of... So there are a whole bunch of things that I experience as an aware agent. The claim that you're making, which I agree with, is that pretty much all of that is something that I have constructed as something to experience, based on some level of sensory input, but heavily processed and modeled. And it's essentially something that I'm almost dreaming in my mind, to create this representation of the world that I'm then experiencing.

But then there's also a supposition that we have, that there is something that is causing the feeling of objectivity. There's something underlying our individual agents sharing similar perceptions, using similar models, and so on. So there's, of course, some meaning that we ascribe to an external world based on those shared concepts and ideas, and things that we attribute existence to, that are underlying the model that we construct in our mind.

So I guess the question is where we draw the line between the subjective thing that we're inventing and modeling in our internal representation, and the objective world. Is there such a thing as a purely objective world? Is it all representation at some level? Is there a very objective world with lots of interesting things in it, and we more or less understand it correctly as it is? Where do you sit on that spectrum?

Joscha Bach: Everything that looks real, everything that has the property of being experienceable at some level, that can be a subject of experience, is going to be constructed inside of your mind. The catch is that it's not you who is constructing it, because you are a story inside of that mind about that agent. But this I is not the agent. The I is the representation of the agent inside of the system.

And what we can say about the universe is that it seems to be generating patterns. These patterns seem to be independent of what we want them to be. So it's not that all of these patterns seem to be originating in our mind; at least, that is not a model that makes very good predictions. And there seem to be other minds that are able to perceive similar patterns, but in a different projection. And the projection is done by the mind that is trying to explain the patterns.

So the universe is projecting some patterns at your systemic interface, and at your thalamus, or at your retina and body surface, or wherever you draw the line. And then you have a nervous system behind this, or some kind of other information processing system. Our best model is that it's the nervous system that is identifying regularities in these patterns. And part of these regularities are, for instance, things. Things don't exist independently of our decomposition of the universe into things.

This doesn't mean that the decomposition is completely arbitrary, because our mind makes it based on what's salient and what compresses well. But ultimately, there is just this unified state vector of the universe that is progressing, and you make it predictable by splitting it up into things, into separate entities that you model independently of each other in your mind. These objects exchange information and thereby change each other's evolution. And this is how we get to causality, and so on. But causality, for instance, doesn't exist independently of the decomposition of the universe into things, which has to be done by a mind.

Anthony Aguirre: Well, I'm curious as to why you say what there really is, is this unified evolving state vector. I mean, how do you know?

Joscha Bach: I don't. So this is the easiest explanation. I have difficulty making it simpler than that, but the separation of the universe into objects seems to be performed by the observing mind. Right, the alternatives don't make sense.

Anthony Aguirre: What you said seemed to attribute a greater reality to this idea of an evolving state vector or something, than to other descriptions of the same thing. There are lots of ways we can describe whatever it is that we call this system that we're inhabiting. We can describe it at the level of objects and the everyday level. We can describe it at the level of fields and particles and things. Maybe there's a string theory description. Maybe there's a cellular automaton cranking along. Do you feel that one of these descriptions is more true than the others? Or do you feel like these are different descriptions of the same thing?

Joscha Bach: One is more basic. So the description of the universe as a set of states that changes is the most basic one. And then I can say, it seems that I can take the set of states and organize it as if it was a space. There's a certain way to project the state vector. You get to a space, to something that's multi-dimensional, by taking your number line and folding it up, for instance, into a lattice. And you can make this with a number of operators, and then you can have information traveling in this lattice, and then you usually get to something like particles.
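The idea of folding a number line into a lattice, so that information can travel through it, can be sketched in a few lines of Python. This is a toy illustration under our own assumptions (a square lattice with periodic boundaries and a simple "light up if a neighbour was lit" update). It is not a claim about how physics actually works, and all names are hypothetical.

```python
from typing import List, Tuple


def fold(index: int, width: int) -> Tuple[int, int]:
    """Project a position on the 1-D state vector onto (row, col) of a 2-D lattice."""
    return divmod(index, width)


def unfold(row: int, col: int, width: int) -> int:
    """Inverse of fold: back to a position on the number line."""
    return row * width + col


def neighbours(index: int, width: int, height: int) -> List[int]:
    """Indices of the four lattice neighbours (periodic boundary). In the folded
    picture they are adjacent, although on the number line some are `width` apart."""
    r, c = fold(index, width)
    return [unfold((r + dr) % height, (c + dc) % width, width)
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))]


def step(state: List[int], width: int, height: int) -> List[int]:
    """One update: a cell becomes 1 iff at least one lattice neighbour was 1, so a
    single excitation moves outward by one lattice step per update."""
    return [int(any(state[j] for j in neighbours(i, width, height)))
            for i in range(len(state))]


W, H = 5, 5
state = [0] * (W * H)
state[unfold(2, 2, W)] = 1          # one excitation in the middle of the lattice
state = step(state, W, H)
print([i for i, v in enumerate(state) if v])  # positions 7 and 17 are `W` away from 12
```

The state is still a single list of numbers; "space" only appears in how the update rule chooses which entries influence each other.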

And if the particles don't have a distinct location, and you just describe the trend of where particles are going, then you have fields. But they all require additional language, which means you need to introduce additional assumptions. And we also find that they are not true, in the sense that there is a level of description where reality and description come apart.

So for instance, the idea of describing the universe as a Minkowski space works relatively well at certain scales. But if you go below these scales, or into certain regions of the universe, you get singularities, and you realize you're not living in space. You can just describe the dynamics at a certain scale, usually as space. The same thing is true for particles. Particles are a way to talk about how information travels between the locations that you can discern in the state vector.

No, but you should challenge me. So the thing that I'm sometimes frustrated with, with philosophers, is that they somehow think that the notions that they got in high school physics are somehow objectively given. And they know electrons, and physicists know what electrons are, and there's a good understanding, and we touch them every day. And then we build information processing on top of the electrons. And this is just not true. It's the other way around.

Anthony Aguirre: I mean, all of these concepts, the more you analyze them and the harder you think about them, the less you understand them, not the more. Take particles: if you ask what an electron is, that is a surprisingly hard question to answer as a physicist. An electron is an excitation of the electron field. And what is the electron field? It's a field that has the ability to create electrons.

Joscha Bach: Yes. But when you don't know this, it means you have never understood it. The people who came up with these notions either pulled them out of thin air, or they understood something that you don't. They saw something in their mind that they tried to translate into a language that people could work with, and it allowed them to build machinery. But that did not necessarily convey the same understanding. And that to me is totally fascinating. You learn in high school how to make a radio, but you don't get the ideas that were necessary to give somebody the idea, "Oh, this is what I should be doing to get a radio in the first place." This is not enough information to let you invent a radio, because you have no idea why the universe would allow you to build radios.

And it's not that people experimented and suddenly got radios, and then tried to come up with a fancy theory that would project electrons and magnetic fields, or electromagnetic fields, into the universe to explain why that radio would work. They had an idea of how the universe must be set up, and then they experimented, came up with the radio, and verified that their intuition must be correct at some level. And Wolfram's idea, for instance, that the universe is a cellular automaton, is motivated by the idea that a cellular automaton is a way to write down a Turing machine. It's equivalent to a Turing machine.

Lucas Perry: Sorry. Did you say cellular automaton?

Joscha Bach: Yes.

Lucas Perry: Okay. Is a way to write down a Turing machine?

Joscha Bach: Yes. So there are a number of cellular automata which are Turing complete, and cellular automata are a general way to describe computational systems. And since the constructivist turn, I think most agree that every evolving system can be described as some automaton: as a finite state machine, or in the unbounded case, as a Turing machine.
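As a concrete instance, here is a minimal Python sketch of an elementary (one-dimensional, two-state, nearest-neighbour) cellular automaton. The example uses Rule 110, which Matthew Cook proved to be Turing complete. Treating cells beyond the edges as 0 is our simplifying assumption; a true Turing-machine construction needs an unbounded tape.

```python
from typing import List


def ca_step(cells: List[int], rule: int = 110) -> List[int]:
    """One synchronous update of an elementary cellular automaton.

    Each cell's next value is bit k of the 8-bit rule number, where k is the
    3-bit neighbourhood (left, self, right) read as a binary number.
    Cells beyond the edges are treated as 0.
    """
    padded = [0] + cells + [0]
    return [(rule >> (padded[i - 1] << 2 | padded[i] << 1 | padded[i + 1])) & 1
            for i in range(1, len(padded) - 1)]


row = [0] * 30 + [1]
for _ in range(15):
    print("".join("#" if c else "." for c in row))
    row = ca_step(row)
```

The entire "physics" of this toy universe is the single integer 110. Everything the automaton can compute follows from applying that lookup table over and over.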

Anthony Aguirre: Well, that I think is not clear. I think it's certainly not the way that physics, as it actually operates, works. We do all this stuff with real numbers and calculus, and all kinds of continuous quantities. And it's always been of interest to me, if we suppose that the universe is fundamentally discrete or digital in some way, it sure disguises it quite well. In the sense that the physics that we've invented based on so many smooth quantities and differentials and all these things, is incredibly effective.

And even quantum mechanics, which has this fundamental discreteness to it, and is literally quantum mechanics, is all still chock-full of real numbers and smooth evolutions and ingredients, that there's no obvious way to take the mechanics, any of the fundamental physics theories that we know, and make a nice analog version of them that turns into... A nice digital version, rather. That turns into the analog continuous version that we know and love, which is an interesting thing.

You would think that would be simpler if the world were fundamentally discrete and quantum. So I don't know if that's telling us anything; it may just be historical, that these are the first tools that we developed and they worked really well. And so that became the foundation for our way of thinking about how the world works, with all sorts of smooth quantities. And certainly, discrete quantities, when you have a large enough number of them, can act smoothly.

But it's nonetheless, I think a little bit surprising how basic and effective all of that mathematics appears to be if it's totally wrong, if it's essentially, fundamentally the wrong sort of mathematics to describe how reality is actually working at the base level.

Joscha Bach: It's almost correct. You remember the stories that Pythagoras was very opposed to introducing irrational numbers into the code base of mathematics. There are even stories, which are probably not true, that he committed murder to prevent that from happening. And I think that he was really onto something. The world was not ready yet. The issue, I think, that he had with irrational numbers is that they're not constructive. And what people didn't have until quite recently in the languages of mathematics is the distinction between value and function.

In classical semantics, a value and a function are basically equivalent, because if you can compute the function, you have a value. And if the function is sufficiently defined, you can compute it. For instance, there is no difference between pi as a function, as an algorithm that you would need to compute, and pi as a value in this classical semantics. But in practice, there is. You cannot determine arbitrarily many digits of pi before your sun burns out. There's a finite number of digits of pi that every observer in our universe will ever know.

That also means that there can be no system that we can build, or that anything with similar limitations in this world can ever build, that relies on knowing the last digit of pi when it performs an operation. So if you use a code base that assumes that some things in physics have known the last digit of pi before the universe goes on to its next state, you are contradicting this idea of constructive mathematics.
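The distinction between pi as a value and pi as a function can be made tangible with a digit generator. The following Python sketch follows Jeremy Gibbons' unbounded spigot algorithm. It uses only integer arithmetic, its internal state grows with every digit it produces, and there is always a next digit but never a last one. The function name is our own.

```python
from itertools import islice
from typing import Iterator


def pi_digits() -> Iterator[int]:
    """Yield the decimal digits of pi one at a time, starting with 3.

    Gibbons' unbounded spigot: all arithmetic is on integers, and the state
    (q, r, t, k, n, l) grows as more digits are requested.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The candidate digit n is now certain: emit it and rescale the state.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not enough information yet: absorb one more term of the series.
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)


print(list(islice(pi_digits(), 15)))
```

Every digit costs more work than the last, so any physical observer running this stops somewhere. In the constructive reading, "pi" names the procedure, not a completed infinite list.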

And there was some hope that this could somehow be recovered, because mathematicians, before this constructive turn, just postulated this infinite number generator, or this infinite operation performer, as a black box. You would introduce it into your axiomatic system, for instance, via the axiom of choice. And so you postulate your infinite number calculator, the thing that is able to perform infinitely many operations in a single step, or read infinitely many arguments into your function in a single step instead of infinitely many steps.

And if you assume that this is possible, for instance, via the axiom of choice, you get nice properties, but some of them are very paradoxical. You probably know Hilbert's Hotel. A hotel that has infinitely many rooms and is fully booked, and then a new person arrives. And you just ask everybody to move one room to the right, and now you have an empty room. And you can also have infinitely many buses come, and you just ask everybody to move into the room that has twice its current number. And in practice, this is not going to work because in order to calculate twice your number, you need to store it somehow. And if the number gets too large, you are unable to do that.
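The practical objection to Hilbert's Hotel, that computing "twice your room number" requires storing a larger number, can be checked directly. In this Python sketch the clerk's room numbers must fit in an unsigned register of a fixed number of bits. The register model and both function names are hypothetical, chosen only to illustrate the argument.

```python
def move_to_double_room(room: int, register_bits: int) -> int:
    """Hilbert's reassignment n -> 2n, performed by a clerk whose room numbers
    must fit in an unsigned register of `register_bits` bits."""
    new_room = 2 * room
    if new_room.bit_length() > register_bits:
        raise OverflowError(f"room {new_room} does not fit in {register_bits} bits")
    return new_room


def guests_who_can_move(register_bits: int) -> int:
    """Count the guests in rooms 1 .. 2**bits - 1 whose doubled room still fits."""
    moved = 0
    for room in range(1, 2 ** register_bits):
        try:
            move_to_double_room(room, register_bits)
            moved += 1
        except OverflowError:
            pass  # this guest has nowhere to go
    return moved


for bits in (4, 8, 16):
    total = 2 ** bits - 1
    print(f"{bits}-bit hotel: {guests_who_can_move(bits)} of {total} guests can move")
```

In the idealized infinite hotel, everyone moves and half the rooms become free. With any finite register, roughly half the guests are stranded instead, which is the "bug" the passage points to.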

But it should also occur to you that if you have such a thing as Hilbert's Hotel, you're not looking at a feature. You're probably looking at a bug. You have just invented a cornucopia: you get something from nothing, or more from less. And that should be concerning. When you look into this in detail, you get to Gödel's proof and to the halting problem, which are two different ways of looking at the same issue: when you claim that you can make a proof without actually performing the algorithm of that proof, you run into contradictions.

That means you cannot build a machine in mathematics that runs the semantics of classical mathematics without crashing. That was Gödel's discovery. And it was a big shock to Gödel, because he strongly personally believed in the semantics of classical mathematics. And it's confusing to people like Penrose, who thinks that, "I can do classical mathematics, computers cannot. So my mind must be able to do something that computers cannot do." And this is obviously not true.

So what's going on here? I think what happens is that we are looking at too many parts to count. When you look at a world of too many parts to count, that is, of very, very large numbers, you sometimes get dynamics that are convergent. That means that if you perform an operation on a trillion objects or on a billion objects in this domain, the result is roughly the same. And the domain where you have these operators, where these operators converge, is by and large geometry. This is what we use to describe the reality that we're embedded in, because the reality that you and I are embedded in is necessarily composed of too many parts for us to count: we are very, very large with respect to our constituent parts. Otherwise, we wouldn't work.

Anthony Aguirre: Yeah. Again, if there are enough of something and you're trying to model it, then real numbers will work well.

Joscha Bach: But it's not real numbers. They are very, very large numbers. That is all.

Anthony Aguirre: Right. But the model is real numbers. I mean, that's what we use when we do physics.

Joscha Bach: Yes. But that's totally fine when you do classical mechanics. It's not fine when you do foundational physics, because at this point you are checking out the code base from the mathematicians, and it has some comments by now. And the comments say, "This is actually not infinite." When you want to perform operations in there, and when you want to prove the consistency of the language that you're using, you need to make the following changes, which means that pi is now a function. And you can plug this function into your nearest sun and get numbers, but you don't get the last digit.

So you cannot implement your physics, or your library of physics, in such a way that you rely on having known the last digit of pi. It just means that for sufficiently many elements that you are counting, this is going to converge to pi. This is all there is.
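"For sufficiently many elements that you are counting, this is going to converge to pi" can be illustrated with nothing but integers. The Python sketch below is our own example, not one given in the conversation. It counts grid points inside a quarter disc of radius n and returns 4 * count / n**2 as an exact fraction. No real numbers are involved, yet the ratio approaches pi as n grows.

```python
from fractions import Fraction
from math import isqrt, pi


def pi_from_counting(n: int) -> Fraction:
    """Estimate pi by counting integer points (x, y), 0 <= x, y < n, with
    x*x + y*y < n*n (a quarter disc of radius n). Only integer operations are
    used; the result is the exact fraction 4 * count / n**2."""
    count = 0
    for x in range(n):
        # Number of y >= 0 with y*y <= n*n - 1 - x*x, i.e. y*y < n*n - x*x.
        count += isqrt(n * n - 1 - x * x) + 1
    return Fraction(4 * count, n * n)


for n in (10, 100, 1000, 10000):
    estimate = pi_from_counting(n)
    print(n, float(estimate), abs(float(estimate) - pi))
```

The `math.pi` import is used only to measure the error. The estimate itself is a ratio of counts, which matches the claim that the smooth quantity is what very large numbers of discrete elements look like.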

Anthony Aguirre: Right. No, I understand the motivation for thinking that way. And it's interesting that that's not the way people actually think, in terms of fundamental physics, in terms of quantum mechanics or quantum gravity, or anything else.

Joscha Bach: Yeah, but this is their fault. This is not our fault. We don't have to worry about them.

Anthony Aguirre: Fair enough. So I think the claim that you're making would be that if we do... There's a question. If 100 years from now, the final theory of physics is discovered, and they have it available on a T-shirt near them, is that theory going to essentially contain a bunch of natural numbers and counting and discrete elements? Or is it going to look-

Joscha Bach: Yes, of course. Integers are all that is computable.

Anthony Aguirre: Right.

Joscha Bach: The universe is made from integers.

Anthony Aguirre: So this is a strong claim about what's ultimately going to happen in physics, I think, that you're making, which is an interesting one. And is very different from what most practicing physicists, in thinking about the foundations of physics, are taking as their approach.

Joscha Bach: Yes, but there's a growing number of physicists who seem to agree with this. Very informally, when I was in Banff at a foundational physics conference, I made a small survey among the foundational physicists. I got something like 200 responses. And I think 6% of the assembled physicists were amenable to the idea of digital physics, or were proponents of digital physics. I think it's really showing that this is growing in attraction, because it's a relatively new idea in many ways.

Lucas Perry: Okay. So if I were to try to summarize this, I guess in a few statements, correct me if I got any of this wrong, is that because you have a computationalist metaphysics, Joscha, if reality contains continuous numbers, then the states of the large vector space can't be computed. Is that the central claim?

Joscha Bach: So the problem is that when you define a mathematical language, or any kind of formal language, that assumes that you can perform computations with infinite accuracy, you run into contradictions. This is the conclusion of Gödel.

And that's why I'm stuck with computation. I cannot go beyond computation; hypercomputation doesn't work because it cannot be defined constructively. I cannot build a hypercomputer that runs hypercomputational physics. So I will be stuck with computation. But it's not a problem, because there is nothing that I could do before that I cannot do with it. Everything that worked so far is still working, right? Everything that I could compute in physics until now is still computable.

Lucas Perry: Okay. So to say "digital" is to say what, exactly?

Joscha Bach: It means that when you look at physics and you make observations, you necessarily have to make observations with a finite resolution. And when you describe the transitions between the observables, you have to use functions that are computable. Now there is the question: is this a limitation that only exists on the side of the observer? Or is it also a limitation that exists on the side of the implementation? How could God, or whatever hypothetical entity, do this? The answer is that if the universe is implemented in any kind of language, then it's subject to the same limitations. The only question is whether the universe could be implemented in something that is not a language. But then, what does it mean to be implemented? We can no longer talk about it. It doesn't really make sense to talk about such a thing.

We would have to talk about it in a language that is inconsistent. So when we want to talk about a universe that is continuous, we would have to define what continuity means in a way that explains how the universe performs continuous operations, at least at some level. And the assumption that the universe can do this leads into contradictions. So we would basically have to deal with the fact that we cheat at some point. And I think what happened before constructive mathematics was that people were cheating. They were pretending that they could feed infinitely many arguments into their functions, but they were never actually doing it. It was just giving them elegant notations, and these elegant notations are specifications for things that cannot be implemented. In practice this was not a problem, because people just found workarounds, right? They would do something slightly different from what the notation said.

And this is something that I found very irritating as a kid when I did mathematics, because sometimes the notation that I was using was so unlike what I had to do in my own mind, or on my machine, to perform a certain operation. And I didn't really understand the difference between classical and computational mathematics, or why it existed. In practice, the difference is that classical mathematics is stateless. It assumes that everything can be computed without going from state to state.

Anthony Aguirre: Yeah, but it's beguiling, because you think there's this number pi and you feel like it exists. Whether or not anybody computes the ten to the 50th digit of it, it has some value. If you could compute it, it would have some value, and there's no sort of freedom in what that value is going to be. That value is defined as soon as you make the definition of pi.
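Anthony's point about pi and Joscha's point about computing "from state to state" can both be illustrated with a spigot algorithm. The sketch below uses Jeremy Gibbons' unbounded spigot algorithm for pi, which is our choice of illustration and was not mentioned in the conversation. Every digit is fixed by the definition of pi, yet the program only ever reaches it by updating six finite integers, one state transition at a time.

```python
from itertools import islice
from typing import Iterator


def pi_digits() -> Iterator[int]:
    """Yield the decimal digits of pi (Gibbons' unbounded spigot algorithm).

    The entire computation lives in six integers. Each loop iteration is one
    state transition, and a digit is emitted only once it is certain.
    """
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit n is now determined: emit it, then rescale the state.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not enough information yet: absorb one more term of the series.
            q, r, t, k, n, l = (
                q * k,
                (2 * q + r) * l,
                t * l,
                k + 1,
                (q * (7 * k + 2) + r * l) // (t * l),
                l + 2,
            )


first_ten = list(islice(pi_digits(), 10))
print(first_ten)  # [3, 1, 4, 1, 5, 9, 2, 6, 5, 3]
```

Asking for more digits only means running more transitions; nowhere does the program hold an "infinitely accurate" pi.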

Joscha Bach: But go back to this moment when you had this idea that pi is there for you with all its infinite glory. Certainly not an idea that you were born with, right? You were hypnotized into it by your teachers and they by their teachers. And so somebody at some point made this decision, and it's not obvious.

Anthony Aguirre: No, it's sort of a relic of Plato, I guess. I think mathematicians are obviously split on this in all kinds of ways. But there are a lot of mathematicians who are Platonists and really very much think that pi meaningfully exists in all its glory, in that way. And I think most physicists... well, I don't know, actually. There are probably surveys that we could consult, which I haven't, about the degree of Platonism among physicists. I suspect it varies pretty widely, actually.

Joscha Bach: Now take a little step back from all these ideas of mathematics that you may or may not have gotten in school, and that your teachers may or may not have gotten in school. Look at what you know, right? Your computer, and how your computer would make sense of the universe if you could implement AI on it. This is something that we can see quite clearly. Your computer has a discrete set of sensory states that it could make sense of, right? It would be able to construct models, and it can make these models at a resolution that is, if you want, higher than the resolution of our own mind; it can make observations at a higher resolution than our senses. And there is basically nothing preventing it from surpassing the kind of modeling that we are doing.

And yet everything in the mind of that system would necessarily be discrete, right? So if that system conceives of something that is continuous, of a language that is continuous, and we can show that this language doesn't work, that it's not consistent, then the system must be mistaken, right? It's pointing indexically at some functions that perform some kind of effective geometry. And an effective geometry is like the one in a computer game, right? It looks continuous, but it certainly is not. It's just continuous enough.

Lucas Perry: Relative to conventional physics, what does a computational view of physics change?

Joscha Bach: Nothing. Unless the conventional physicist has made a mistake, it's exactly the same. It's just that conventional physics invites certain kinds of mistakes that might lead to confusion. But in practice it doesn't change anything. It just means that the universe is computable. And of course it should be, because being computable just means that you can come up with a model that describes how adjacent states in the universe are correlated.

Lucas Perry: Okay. So this leads into the next question. So we're going to have to move along a little bit faster and more mindfully here. You've mentioned that it's tempting to think of the universe as part of a fractal. Can you explain this view and what is physics according to this view?

Joscha Bach: So I think that mathematics is, in some sense, something like a fractal. The natural numbers are similar to the generator function of the Mandelbrot fractal, right? You take Peano's axioms, and as a result you get all these dynamics if you introduce a few suitable operators. You can define the space of natural numbers using addition, for instance, or using multiplication. In the case of multiplication, you will need to introduce the prime numbers, because you cannot derive all the natural numbers just by multiplying with one and zero. You need additional elements, and the basic elements of this multiplicative space would be the prime numbers. And now there is the question: is there an elegant translation between the space of multiplication and the space of addition? Maybe it has to do with the Riemann zeta function, right? We don't know that. But essentially we are exploring a certain fractal here, right?
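Joscha's remark that the natural numbers under multiplication are generated by the primes, and that the Riemann zeta function may connect the additive and multiplicative views, can be made concrete with a standard identity, Euler's product formula: for s > 1, ζ(s) = Σ 1/n^s over all natural numbers n equals Π 1/(1 - p^(-s)) over all primes p. The sum walks through every natural number (the additive view) while the product uses only primes (the multiplicative view), and the two agree because every natural number factors uniquely into primes. This sketch only illustrates that known identity; it makes no claim about the deeper translation Joscha speculates about, and the truncation limits are arbitrary choices.

```python
import math


def factorize(n: int) -> list[int]:
    """Return the prime factors of n (n >= 2) in ascending order, by trial division."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)  # whatever remains is itself prime
    return factors


def primes_up_to(limit: int) -> list[int]:
    """Sieve of Eratosthenes; assumes limit >= 2."""
    sieve = [True] * (limit + 1)
    sieve[0:2] = [False, False]
    for i in range(2, math.isqrt(limit) + 1):
        if sieve[i]:
            sieve[i * i :: i] = [False] * len(range(i * i, limit + 1, i))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


def zeta_sum(s: float, terms: int) -> float:
    """Additive side: partial sum of 1/n^s for n = 1..terms."""
    return sum(1.0 / n**s for n in range(1, terms + 1))


def zeta_euler_product(s: float, limit: int) -> float:
    """Multiplicative side: partial Euler product over primes p <= limit."""
    product = 1.0
    for p in primes_up_to(limit):
        product *= 1.0 / (1.0 - p**-s)
    return product


print(factorize(360))  # [2, 2, 2, 3, 3, 5]
print(zeta_sum(2.0, 100_000))  # additive side, truncated
print(zeta_euler_product(2.0, 10_000))  # multiplicative side, truncated
print(math.pi**2 / 6)  # exact value of zeta(2)
```

Both truncated sides approach pi²/6 for s = 2, one by adding over every natural number and the other by multiplying over the primes alone.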

Now, with respect to physics, there is the question: why is there something rather than nothing, and why does this something look so regular? We can come up with anthropic arguments, right? If nothing existed, then nobody would be asking the question. Also, if that something were irregular, we probably couldn't exist. The type of mind that we are, and so on, requires large-scale regularity. But it's still very unsatisfying, because the fact that something exists rather than nothing should have an explanation, one that we want to make as simple as possible. And the easiest explanation is that maybe existence is the default. So everything that can be implemented would exist, and maybe everything that exists is the superposition of all the finite automata. And as a result you get something like a fractal, and in this fractal there is us.

This idea that the universe can be described as an evolving cellular automaton is quite old. I think it goes back to Zuse's Rechnender Raum, and it's been formulated in different ways by, for instance, Fredkin and Wolfram, and a few others. But again, it's equivalent to saying that the universe is some kind of automaton: some kind of machine that takes a starting value, a starting configuration, or any kind of configuration, and then moves to the next configuration based on a stable transition function.
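The kind of machine Joscha describes, a configuration plus a fixed transition function applied over and over, can be sketched in a few lines. The example below is a one-dimensional "elementary" cellular automaton using Wolfram's numbering scheme for its 256 possible rules. It is a toy to show the mechanics, not a model of physics proposed by Zuse, Fredkin, or Wolfram; the rule, row width, and wrap-around edges are illustrative assumptions. Rule 90 is used because, started from a single live cell, it grows a Sierpinski-triangle pattern, echoing the earlier remark about fractals.

```python
def step(cells: list[int], rule: int) -> list[int]:
    """Apply one update of an elementary cellular automaton.

    `rule` is a Wolfram rule number (0-255): bit k of the rule gives the new
    state for the neighbourhood whose (left, centre, right) bits encode k.
    The row wraps around at the edges (periodic boundary).
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[i - 1]  # for i == 0 this wraps to the last cell
        centre = cells[i]
        right = cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right
        new_cells.append((rule >> index) & 1)
    return new_cells


def run(rule: int, width: int, steps: int) -> list[list[int]]:
    """Start from a single live cell and record every configuration."""
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        row = step(row, rule)
        history.append(row)
    return history


if __name__ == "__main__":
    # Rule 90 from a single live cell draws a Sierpinski-triangle pattern.
    for row in run(90, 31, 15):
        print("".join("#" if c else "." for c in row))
```

Swapping 90 for another rule number, such as 30 or 110, changes only the transition function; the machinery of stepping from configuration to configuration stays the same.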

Anthony Aguirre: But there's a difference between saying that the universe is a cellular automaton and saying that the universe is all possible cellular automata. That view is sort of held by Max Tegmark, and I think Jürgen Schmidhuber, and I think... I'm forgetting his name. But you're talking more about that version, aren't you?

Joscha Bach: I'm not sure. It might just mean that Wolfram's project of enumerating all the cellular automata and taking the first one that produces a universe that looks like ours might not work. It could be that you need all of them in a certain enumeration. But it still is a cellular automaton. It's just one that is much longer than Wolfram would hope.

Anthony Aguirre: You're saying all possible cellular automata is the same as one cellular automaton.

Joscha Bach: Yes. It would be one cellular automaton that is the result of the superposition of all of them. Which probably means that long cellular automata only act relatively rarely, so to speak. And I have no idea how to talk about this in detail and how to model this. So this is a very vague intuition.

Lucas Perry: Okay. So, could you describe the default human state of consciousness?

Joscha Bach: Consciousness, I think, is the control model of our attention. And human consciousness is characterized by attention to features that are taken to be currently the case, which we keep stable in this phase so that we can vary other parameters and make conditional variations in our perception, and so on. And we can form indexed memories for the purpose of learning.

Then we have access consciousness, which is a representation of the attentional relationship that we have to these objects. So we know that we are paying attention to them, and we know in which way we are paying attention to them. So for instance, do we single out sensory features or high-level interpretations of sensory features, or hypotheses, or memories and so on. And this is also part of the attentional representation.

And third, we have reflexive consciousness. This is necessary because the processes in our neocortex are self-organizing to some degree, which means that this attentional process needs to know that it is the attentional process. So it makes a perceptual confirmation of the fact that it is indeed the attentional process, and this makes consciousness reflexive. We basically check back: am I spacing out, or am I still paying attention? Am I the thing that pays attention? This loop of going back and forth between the content and the reflection is what makes our consciousness almost always reflexive. If it stops being reflexive, we drift off and often fall asleep, literally.

Anthony Aguirre: Would you say that that sort of self-reflexive consciousness is experienced by non-human animals? Do you consider a mouse, which probably does not have that level of awareness, not conscious? Or are you talking about different aspects of consciousness, some of which humans have and some of which other creatures have?

Joscha Bach: I suspect that consciousness is not that complicated. What's complicated is perception. Setting up a system that is able to make a real-time adaptive model of the world that predicts all sensory features in real time, makes a global universe model, figures out which portion of the universe model it's looking at right now, and swaps this in and out of working memory as needed: that's the hard part. Once you have that, and you have a problem that is hard enough to model, the system is going to model its own relationship to the universe and its own nature, right? So you get a self-model at that level. And I think that attentional learning is necessary because purely correlative learning doesn't work very well.

The machine learning algorithms that we are currently using largely rely on massive backpropagation over many layers. The brain is not such a neatly layered structure; it has links all over the place. We also know that the brain is more efficient. It needs far fewer instances of observing a certain thing before it makes a connection and is able to make inferences from that connection. So you need a system that is able to single out the parts of the architecture that need to be changed, and this is what we call attention, right? When you are learning, you have a phase of simple associative learning, which we usually go through right after we're born, and before, when we form our body map and so on. After the initial layers are formed, after the brain areas are somewhat laid out, initialized, and connected to each other, we do attentional learning. This attentional learning requires that you decide what to pay attention to in which context, what to change and why, and when to reinforce it and when to undo it.

And this is something that we are starting to do now in AI, especially with the transformer, where attention suddenly plays a role and we make models of the system that we are learning over. So in some sense, the attention agent is an agent that lives inside of a learning agent, and this learning agent lives inside of a control agent. The control agent directs our relationship to the universe, right? You notice that you're not in charge of your own motivation, and that you're not directly in charge of your own control. But what you can do is pay attention to things, and the models that you generate while paying attention inform the behavior of the overall agent. The more we become aware of that, the more this can influence our control.

Anthony Aguirre: So Lucas was asking about the default state of consciousness. We have this apparatus, which we've developed for a very particular reason: survival, and modeling the world well enough to predict how it's going to work and how we should act to enhance our survival, or whatever set of auxiliary goals we might have. But it's not obvious that that mode of consciousness or mental activity is optimal for all purposes. It's certainly not clear that, if I want to do a bunch of mathematics, the type of mind that enables me to survive well in the jungle is the right type of mind for doing really good mathematics.

It's sort of what we have, and we shunt it over to the purpose of doing mathematics. But it's easy to imagine that the mind we've developed, in its default state, is pretty bad at a lot of things that we might like to do once we choose to do things other than surviving in the jungle. So I'm curious how different you think a machine's neural architecture could be if it's trying to do very different things from what we developed to do.

Joscha Bach: I suspect that, given a certain entanglement, that is, the ability to resolve the universe in a certain spatial and temporal way, and given sufficient processing resources and speed, there is going to be something like an optimal model that we might end up with. And humans are not at the level of this optimal model. Our brain is still a compromise, I think. We might benefit from having a slightly larger brain. I think that differences in human performance are differences in innate attention. Talents are basically differences in innate attention. You know the joke: how do you recognize an extroverted mathematician? They look at your shoes. What this alludes to is that good mathematicians tend to have Asperger's, which means that they use the parts of their brain that normal humans use for social cognition, and for worrying about what other people think of them, for thinking about abstract problems.

And often I think that the focus on analytic reasoning is a prosthesis for the absence of normal regulation. That is, if you are a nerd, you will as a result have a slightly different interface to the social environment than the normies. As a child, you don't get a manual on how to deal with this. So you act on your impulses, on your instincts, and unless you are surrounded by other nerds, you tend to fail in your social interactions. You experience guilt, shame, and despair, and this motivates you to understand what's going on. And because as a child you typically don't have any tools to reverse engineer yourself, or even notice that there is something to reverse engineer, you turn around and reverse engineer the universe.

Anthony Aguirre: Yeah. I suspect that if we were able to have the experience (and maybe someday we will, with some sort of mind-to-mind interface) of actually experiencing someone else's worldview and processing reality through their apparatus, we would find that it's far more different than we suppose on an everyday level. We sort of assume that people understand and see the world more or less as we do, with some variations, and I would love to know to what degree that's true. I read these articles about people who cannot imagine visual images. And compared with me, when my wife imagines a visual image in her mind, it is right there in detail. She can experience it, sort of like I would experience a dream or physical reality.

When I think of something in my mind, it's quite vague. And then there are other people for whom there's sort of nothing there. And that's just one aspect of cognition. I think it would be a fun project to really map out what that range in qualia really is among human minds. I suspect that it's much, much bigger than we really appreciate. It might cause us to have a little more understanding for other people if we understood how literally differently they experience the world than we do. Or it could be that it's very similar, which would also be fascinating. To a degree, I mean, we do all function fairly well in this world, so there has to be some sort of limit on how differently we can perceive and process it. But yeah, how big that range is fascinates me.

Joscha Bach: I have a similar observation with my own wife, who's an artist. She is very good at visualizing things, but she doesn't notice external perception as much. Compared with a highly perceptive person, I think I have about 20% of their sensory experience, because I'm mostly looking at the world through conceptual reflection. I have aphantasia, so outside of dreams or hypnagogic states, I cannot visualize, or don't get conscious access to my visualizations. I can draw them, so I can make designs. But for the most part, I cannot make art, because I cannot see these things. I can only do this in a state where I'm slightly dreaming.

So it's also interesting to notice the difference. It's not that I focus on this and it gets more and more plastic until at some point I see it. It's more like it's being shifted in from the side. It's similar to when you observe yourself falling asleep and manage to keep a part of yourself online to track this without preventing yourself from going to sleep, which is sometimes hard, right? But if you pull it off, you might notice how suddenly the images are there, and they more or less come from the side, right? Something is shifting in and taking over.

I think that there is a limit, since Anthony brought this up, to how differently it makes sense to understand the world, given the way that you operate. It's just that we often tend not to be completely aware of it during the first 40 years or so. So it takes time to reverse engineer the way in which you operate. For instance, it took me a long time to notice that the things that I care about are largely not about me as an individual. Of course, we all know this, because it's a moral prerequisite that you don't only care about yourself, but also about the greater whole and future generations. But what does that actually mean, right? You are basically an agent that is modeling itself as part of a larger agency, and so you get a compound agent.

And a similar thing is true for the behaviors in your own mind. They do not see themselves as all there is, but at some level they model that they are part of a greater whole, some kind of processing hierarchy and control hierarchy, in which they can temporarily take over and run the show. But then they will be taken over by other processes, and they need to coordinate with them; they are part of that coordination. And this goes down to the lowest levels of the way in which this is implemented. Ultimately you realize that there are information processing systems on this planet which coordinate, for instance, an organism, or the cooperation between organisms, or the cooperation within ecosystems.

And this is the purpose of our cognition in some sense. It's the maintenance of complexity, so we can basically shift the bridgehead of order into the domain of chaos. This means that we can harvest negentropy in regions where dumber control systems cannot. That's the purpose of our cognition. And understanding our own role in it, and the things that matter, is the task that we have. There are some priors that we are born with: for instance, ideas about the social roles that we should have, and the things that we should and should not care about. Over time, we replace these priors, these initial reflexes, with concrete models. And once we have a model in place, we turn off the reflex.

Lucas Perry: So you don't think that the expression of proxies in evolution means that harvesting negentropy isn't actually what life is all about, because it becomes about other things once we gain access to proxy rewards, rational spaces, and self-reflection?

Joscha Bach: I think that's an intermediate phase, where you think that the purpose of existence is, for instance, to amass knowledge, or to have insight, or to have sex, or to be loved and to love, and so on. And this is just before you understand what this is instrumental to.

Lucas Perry: Right, but like you start doing those things and they don't contribute to negentropy.

Joscha Bach: They do. If they are useful for your performance as a living being, or for the performance of the species that you're part of, or the civilization, or the ecosystem, then they serve a certain role. If not, then they might be statistically useful. And if not even that, then they might be a dead end.

Lucas Perry: Yeah. That makes sense.

Anthony Aguirre: I mean, life is quite good at extracting negentropy, or information, from its hidden troves supplied by the sun. You know, I don't think we're ever going to compete with plankton, at least not anytime soon; maybe in the distant future. So it seems like a little bit of an empty-

Joscha Bach: If you have a pool, you are competing with plankton, right? We are able to settle surfaces that simpler organisms cannot settle on. And we're not yet interested in all the surfaces, we are just one species on this planet. Right?

Anthony Aguirre: I don't know, I guess (crosstalk 00:54:03). If I beat the plankton at some point, I'm just not that excited about that. I mean, I think it's true that, to sustain ourselves as life, we have to be able to harvest that: transform that information from the form that it's in into an ability to maintain our own homeostasis, essentially fight the second law of thermodynamics, and maintain a structure that is able to do that. But that's metabolism, and it is, I think, sort of the defining quality of life.

But I... It's hard for me to see that as more than a means to an end, in the sense that it enables all of the activities that we do. If you told me that someday our species will process this much entropy instead of that much entropy, that's fine, but, I don't know, that doesn't seem terribly exciting to me. It's like discovering a gigantic ball of plankton in space; I wouldn't be that impressed with it.

To me, I think there's something more interesting in our ability to create information hierarchies, where there is not just an amount of information, but information structures built on other information structures, which biological organisms build, and which we build mentally with all sorts of civilizational artifacts that we've created. The level of hierarchical complexity that we're able to achieve seems to me more interesting than just the ability to effectively metabolize a lot.

Joscha Bach: I think that as individuals, we don't need to know that. As individuals, we are relatively small and inconsequential. We just need to know what we need to know to fulfill our role. But if the civilization that we are part of doesn't understand that it's in a long-term battle with entropy, then it is in a dire situation, I think.

Anthony Aguirre: There's so much fuel around. I just think we have to figure out how to unlock it a little bit better, but we are not going to run out of negentropy.

Joscha Bach: No, life is not going to run out of negentropy. It could be that we run out of the types of food that we require, and the climates that we require, and the atmosphere that we require to survive as a species. But that is not a disaster for life itself, right? Cells are very hard to eradicate once they have settled a planet. Even if a supervolcano erupts or a meteor hits the Earth, the Earth is probably not going to turn sterile anytime soon. And so, from the perspective of life, humanity is probably just an episode that exists to get the fossilized carbon back into the atmosphere.

Lucas Perry: So let's pivot into AGI, which might serve as a way of locally optimizing the increase of entropy for the Milky Way and hopefully our local galactic cluster. So, Joscha, how do you view the path to aligned AGI?

Joscha Bach: If you build something that is smarter than you, the question is: is it already aligned with you or not? If it's not aligned with you, you will probably not be able to align it, at least not in the long run. The right alignment is in some sense about what you should be doing under the circumstances that you are in. When we talk about alignment in a human society, there are objective criteria for what you should be doing if you are part of a human society, right? They are given by a certain set of permissible aesthetics. An aesthetic is degrees of world that might emerge over your initial preferences. And there are a number of local optima; maybe there's even a global optimum in the way a species can organize itself at scale.

And if you build an AI, the question is, is it able to and would it be interested? Would it be in the interest of the AI to align itself with this global optimum or with one of the existing local optima?

And what would this look like? It would probably look different from our intuitions about what society should be like, right? We have clearly lost the plot in our society. Every generation since the last war has had fewer intuitions about how society actually functions and what conditions need to be met to make it sustainable.

Sustainability is largely a slogan that exists for PR purposes, for people in the UN and so on. It's nothing that people are seriously concerned about. We don't necessarily think about how many people the planet can support, and how we can reach that number of people. How would we make resources be consumed in closed loops? These are all questions that don't really concern us.

The idea that we can eat up the resources that we depend on, especially the ecological resources that we depend on, is relatively obvious to the people that run the machines that drive our society. So we are in some sense, in a locust mode, in a swarming mode, and this swarming mode is going to be a temporary phenomenon. And it's something that we cannot stop. We are smart enough to understand that we are in this swarming mode, and that's probably not going to end well. We are not obviously smart enough to be able to stop ourselves.

Lucas Perry: So it seems like you have quite a pessimistic view on what's going to happen over the next hundred to 300 years, maybe even sooner. I think I've heard you say somewhere else that we're so intelligent that we burned a hundred million years of trees to get plumbing and Tylenol.

Joscha Bach: Yes. Yeah. This is in some sense the situation that we're in, right? It's extremely comfortable.

Lucas Perry: Yeah, it's pretty nice.

Joscha Bach: There has probably never been a time in the history of the entire planet when a species was as comfortable as we are.

Anthony Aguirre: But why so pessimistic?

Joscha Bach: I'm not pessimistic, I think. It's just if I look at the possible trajectories that we are in, is there a trajectory in which we can make ourselves enter a trajectory of perpetual growth? Very unlikely, right? Is there a trajectory in which we can make the thing that we are in sustainable without going through a population bottleneck first, which will be unpleasant? Unlikely. It's more likely than the idea that we can have eternal growth, but it's also not impossible that we can have something that is close to eternal growth. It's just unlikely.

Anthony Aguirre: Fair. I mean, I think what I find frustrating is that it's pretty clear that there are no serious technological impediments to having a high-quality, sustainable, steadily growing civilization. The impediments are all of our own making. It's fairly clear what we could do. There are some problems that are quite hard, and some that are fairly easy. Global warming we really could solve. If we could coordinate to just do the things that are fairly clearly necessary, that's a solvable problem.

Running out of easily accessible natural resources is harder, because you have to get closer and closer to a hundred percent recycling if you want to keep growing the material economy while staying within the same resources. But it's also not so clear how much we have to keep growing the material economy.

When I look at my kids, compared to when I grew up, they barely have any things. They're not that interested in things. There are some that they really like, but even five years ago, when I would buy my younger son a toy, he would play with it for a little while, and then he'd be done with it. And I'd be like, why did I buy that toy? Much more of their lives than ours is digital, spent consuming media. That seems to be the trajectory of humanity as a whole, at some level.

And so it's unclear. It may be that there are ways in which we can continue economic growth, and growth in quality of life, even while the amount of material goods that we require is not exponentially increasing in the way that you would think. So I share your pessimism as to what's actually going to happen, but I'm maybe more frustrated by how it wouldn't be that hard to make it work if we could just get our act together.

Joscha Bach: But this 'getting our act together' is the actual difficulty.

Anthony Aguirre: I know.

Joscha Bach: Right, because getting your act together as a species means that you as an individual need to make decisions that somehow influence the incentives of everybody else, because everybody else is going to keep doing what they are doing, right? This argument, "if everybody would do as I say, therefore you should do it," is not a valid argument.

Anthony Aguirre: No, no.

Joscha Bach: Right, this is not how it works. In some sense, everybody is working based on their current incentives. What you would need to do is to change the incentives of everybody else, within the means that you as an individual have, to bring about this difference in the global incentive structure. So it comes down, in some sense, to implementing a world government that would subdue everything under some kind of eco-fascist guideline. And the question is, would AGI be doing that? It's very uncomfortable to think about. It's certainly not an argument that most people would be interested in entertaining, and it's definitely nothing that would get a majority. It's just probably what would need to be done to make everything sustainable.

Anthony Aguirre: That's true. I do think that there are technological advances within reach that are genuinely better along every axis. For example, as has happened, photovoltaic energy sources are now just cheaper than fossil fuel ones. And once you cross that threshold, there's just no point. Maybe five or 10 years from now there simply won't be any point at all in making fossil fuel energy sources... So there are sort of thresholds where the right thing actually just becomes the default and the cheapest, and there isn't any tension between doing what we should do as a civilization and doing what people want to do as individuals.

Now, it may be that there are always showstoppers that are important, where that hasn't happened or isn't possible. But there seem to me to be a lot of systems where, with some careful architecting, the incentives can be aligned well enough that people can do more or less what they selfishly want to do and nonetheless contribute to a more sustainable civilization. It does seem to require a level of planning, creativity, and intelligence that we haven't quite gotten together yet.

Joscha Bach: Do you think that there's an optimal number of trees on the planet? Probably, right?

Anthony Aguirre: An optimal number of trees?

Joscha Bach: Yes. One that would be the most beneficial for the ecosystems on Earth and for everything else that happens on Earth. Right? If there are too few trees, there is some negentropy that you are leaving on the table. If there are too many, then something will go out of whack and some of the trees will eventually die. So in some sense, I think there is going to be an optimal number of trees, right? No?

Anthony Aguirre: Well, I think there are lots of different systems with preferences that will not all agree on what the optimal number of trees is. And I don't think there's a solved problem for how to aggregate those preferences.

Joscha Bach: Of course. No, I don't mean that we know this number or it's easily discernible or that it's even stable. Right? It's going to change over time.

Anthony Aguirre: I don't think there is an answer to how to aggregate the preferences of a whole bunch of different agents.

Joscha Bach: Oh, it's not about preferences; ultimately, preferences are instrumental to complexity. Basically, to how much life can exist on the planet. How much negentropy can you harvest?

Ultimately there is no meaning, right? The universe doesn't care if something lives inside of it, or not. But from the perspective of cellular life, what evolution is going for is, in some sense, an attempt to harvest the negentropy that is available.

Anthony Aguirre: Yeah. But we don't have to listen to evolution.

Joscha Bach: Of course not, no. We don't have to, but if we don't, it is probably going to limit our ability to stay around, because we are part of it. We are not the highest possible life form. If apes are some kind of success model, there are going to be descendants that are not going to be that humanlike. If we indeed manage to leave the gravity well, and not just send AIs out there, and it turns out that there can be descendants of ours that live in space, they will probably not look like Captain Kirk and Lieutenant Uhura.

But in the far future, the commanders of space fleets will look very, very different from us. They will be very different organisms, right? They will not have human aesthetics. They will not solve human problems. They will live together in different ways because they interface in different ways and interact with technology in different ways. It will de facto be a different species.

And so if you think about humans as a species, we do depend on a certain way in which we interface with the environment in a healthy, sustainable way. And so I suspect that there is a number of us that should be on this planet, if we want to be stewards of the ecosystems on this planet. It's conceivable that we can travel to the stars, but probably not in large numbers and we are not the optimal form.

Right, if you want to settle Mars, for instance, we should genetically modify our children into something that can actually live on Mars. So it should be something that doesn't necessarily need an atmosphere around it. It should be able to hibernate. It should be super hardy. It should be very fast and able to feed on all the proteins available to it outside of Earth, which are mostly humans. Right? So it should look like the Alien in the Alien movies.

Anthony Aguirre: Again, I think that's a choice. I mean, you can argue that once you have different... that it will be an inevitability.

Joscha Bach: No, it's not inevitable. You can always make a suboptimal choice. And then you can try to prevent everybody else from making a better choice than you did. And if you are successful, then you might prevail. But if you are in an environment with free competition, then in principle your choice is just an instrument of evolution.

Lucas Perry: So can we relate this global coordination problem of beneficial futures to AGI? In particular, Joscha, you mentioned an eco-fascist AGI. A scenario in which that might be possible would be something like a singleton?

Joscha Bach: Yes.

Lucas Perry: So I'm taking this question from Anthony. Anthony asks: if you had to choose, knowing nothing else, a future with one, several, or many AGI systems, which would you choose and why, and how would you relate that to the problem of global coordination and control for beneficial futures?

Joscha Bach: There might be a benefit in having multiple AGIs, which could be the possibility of making the individual AI mortal.

One of the benefits of mortality is that whatever mistakes a single human makes, they're over after a while. For instance, the Mongols allowed their khans to have absolute power and override all the existing institutions. Right? This was possible for Genghis Khan. But they tried to balance this: if this person died, they would stop everything they were doing and reconsider. Right?

And this is what stopped the Mongol invasions. Right? So it was very lucky. Of course, they wouldn't have needed to do this. Right? And a similar thing happened with Stalin, for instance. Stalin burned down Russia, in a way, or a lot of its existing culture. And it stopped after Stalin. It didn't stop immediately and completely, but Stalinism basically stopped with Stalin. So in some sense, this mortality is adaptive on a control level.

Senescence is adaptive as well, so that we don't outcompete our grandkids. But we see this issue with our institutions. They are not mortal, and as a result they become senescent, because they basically have mission creep. They are no longer under the same incentives. They become more and more post-modern. Once an institution gets too big to fail, the incentives inside the institution change, especially the incentives for leadership.

So something that starts out very benevolent, like the FDA, turns into today's FDA. If the FDA were mortal, we would be in a better situation than if the FDA were a sentient AI that just acts on its own incentives forever. Right? The FDA is currently acting on its own incentives, which are poorly aligned, increasingly poorly aligned, with what we think the FDA should be doing for us when it governs us, or part of us.

And that is the same issue with other AGIs. In some sense, the FDA is an AGI with humans in the loop. If we automate all of this and turn it completely into a rule base, especially a self-optimizing rule base, then it's going to align itself more and more with its own incentives.

So if we have multiple competing agents, we can prevent this thing from calcifying to some degree. And we have the opportunity to build something like a clock into it that lets the thing die at some point, so it can be replaced by something else. Once the thing dies by itself, we can decide what its replacement should look like: whether it should be the same thing or something slightly different. So this would be a benefit.

But outside of this it's not obvious, right? From the perspective of a single agent, it doesn't make sense that other agents exist. For a single human being, the existence of other human beings seems to be obviously beneficial because we don't get that old, our brains are very small, we cannot be everywhere at the same time. So we depend on the interaction with others.

But if you lose these constraints, if you could turn yourself into some kind of machine that can be everywhere at the same time, that can be immortal, that has no limit on its information processing, it's not obvious why you should not just be Solaris, a planet-wide intelligence that controls the destiny of the entire planet, or why you would be better off with competition. Right? Ideally you want to subdue everything once you are a planet-wide mind, or a solar-system-wide mind, or a galactic mind, if that's conceivable.

Anthony Aguirre: So there are two elements of that. One is what the AI would want. And the other is...

Joscha Bach: Yes, there are two perspectives. One is that you have a mind that is infinitely scalable. This infinitely scalable mind would not want to have any competition; at least, it's not obvious to me why it would, and it would be surprising to me if it wanted to. If you have non-scalable minds like ours that coexist with it, then from the perspective of these non-scalable minds, it would probably be beneficial to limit the capacity of the other minds that we are bringing into existence. That means we would want to have multiple of them, so that they can compete and so that we can turn them off without everything breaking down.

Anthony Aguirre: It's tricky, because that competition is a double-edged sword. They can compete in the sense of being able to limit each other, but creating a competition between them seems bound to incentivize them to enhance themselves.

Joscha Bach: It depends on how they compete. When you have AMD and Intel competing, we consider this to be a good thing, right? When you have Apple and Microsoft competing, we also consider this to be a good thing. If we had only one of them, they would not be incentivized to innovate anymore.

And a similar thing could happen elsewhere. For instance, instead of having just one FDA, we could have two FDAs. The FDA becomes like an app, and you have some central oversight that makes sure you always get inside the box what's written on the outside of the box. But beyond that, the FDAs could decide, for instance, whether they allow you to import a medication from Canada without an additional trial. People would subscribe to the FDA they like better, and we would have constant innovation in regulation.

Anthony Aguirre: But that's a very managed competition. So it's less clear that the US/Soviet Union competition is something that was good to encourage in that sense.

Joscha Bach: From the perspective of Central Europe, it was great. Right? The CIA helped the West German unions make sure that West German workers had a lot to lose. And East Germany was the best off among all the Eastern Bloc countries precisely because of this competition.

Anthony Aguirre: It was great given that we managed not to have a nuclear war or a land war. The concern that I have is this: yes, if you have a managed competition, that tends to be good, and because you can set the rules, there's an ability to forestall some sort of runaway process. But when the competition is at the highest level, among whatever holds the overall highest level of power in the whole arena, then it's a lot less clear to me that the competition is going to be a positive thing.

Joscha Bach: It seems to me that US governance has become dramatically worse since the end of the Cold War. Right? There seems to be an increased sense that it doesn't really matter what kind of foreign policy we are pursuing, because it's largely an attempt to gain favor with the press so you can win the next election. So it's about selling points. It's not about whether regime change in Libya is actually good. Who cares?

Anthony Aguirre: Well, I think that's a decoupling between the government's actions and... We don't actually have to be effective as a society.

Joscha Bach: Exactly, because we don't have competition anymore. Once you take competition off the table, you lose the incentives to perform well.

Anthony Aguirre: Those incentives could be redirected in some way, I think, but we haven't figured out a good way to do that.

Joscha Bach: Exactly.

Anthony Aguirre: The only way we've figured out to create the right incentives is the competition structure. I agree with that. If we were a little more enlightened, we would understand that we still have to be effective.

Joscha Bach: Oh, I think we understand this. The problem is that if you are trying to run on a platform of being effective against a platform that runs on being popular, and the only thing that matters in the incentive structure is popularity, then the popular platform is going to win. Even worse, once you manage to capture the process by which the candidates are selected, you don't even need to be popular. You just need to be more popular than the other guy.

Lucas Perry: Is it possible for the incentive structures to come strictly from within the AI system? It's unclear to me whether it actually has to come from competition in the real world pushing it to optimize harder. Could you not create similar incentive structures internally?

Joscha Bach: The question is how you can set the top-level incentive correctly. Ultimately, as long as you have some dynamic in the system, I suspect the control is going to follow the incentives. When we look at the failures of our governments, they are largely not the result of the government being malicious or extremely incompetent, but mostly of everybody at every level following their true incentives. And I don't know how you can change that.

Anthony Aguirre: Well then, do you feel like there's a natural, default, or unavoidable set of incentives that a high-level AI system is going to feel?

Joscha Bach: Only if it sticks around, right? It doesn't have to stick around. There is no meaning in existing. It's only that the systems that persist tend to be set up in such a way that they act as if they want to persist.

Anthony Aguirre: Well, I guess my question is to what level the incentives that a system has... Normally, the incentives that a system has are set by its context and by the system it's embedded in. If we're talking about a world-spanning AI system, it's sort of in control. So it seems like the normal model, with the incentives being set by the other players on the stage, is going to apply. And it's not clear to me that there's an intrinsic set of incentives that is going to apply to a system like that.