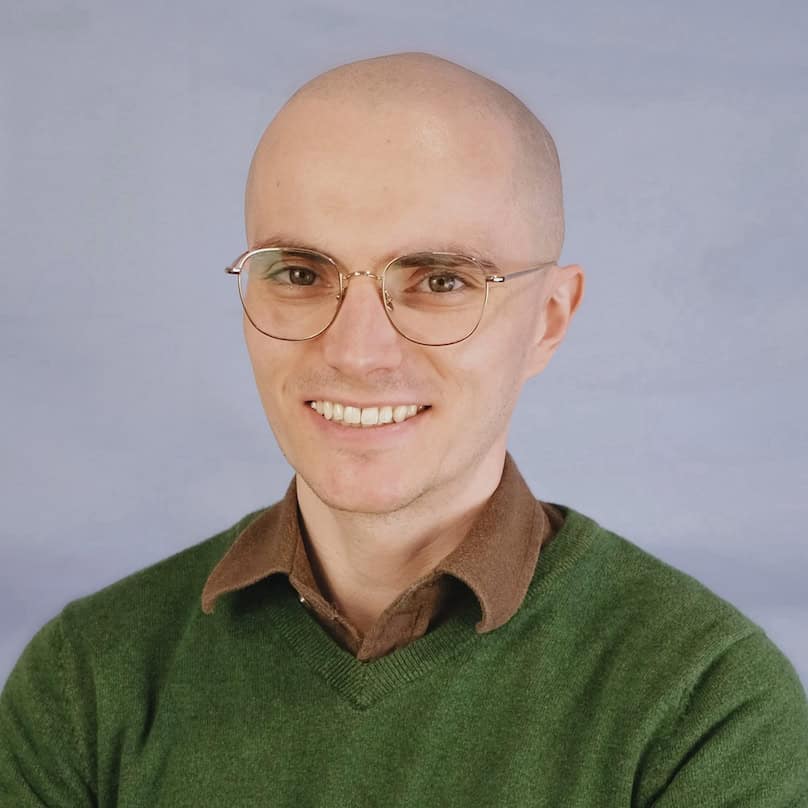

Scott Emmons

Google DeepMind

Why do you care about AI Existential Safety?

COVID-19 shows how important it is to plan ahead for catastrophic risk.

Please give one or more examples of research interests relevant to AI existential safety:

I am interested in both the theory and practice of AI alignment. I have helped characterize how reinforcement learning from human feedback (RLHF) can lead to deception when the AI sees more than the human, develop multimodal attacks and benchmarks for open-ended agents, and use mechanistic interpretability to find evidence of learned look-ahead in a chess-playing neural network.