Special Newsletter: Slaughterbots Sequel

We’ve produced a sequel to the viral film Slaughterbots.

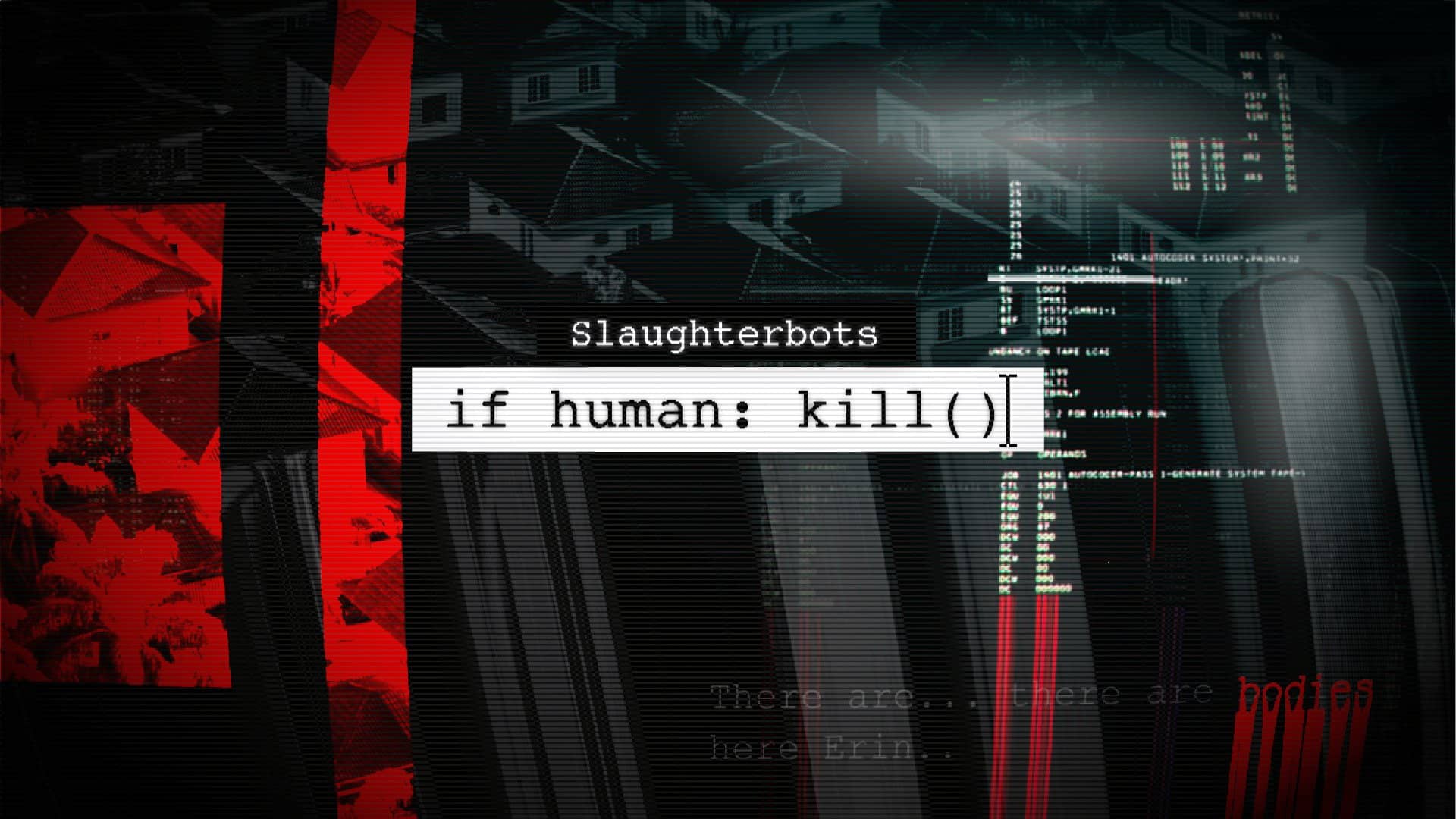

if human: kill() follows up on FLI’s award-winning short film, Slaughterbots, to depict a dystopian future in which lethal autonomous weapons have been allowed to become the tool of choice not just for militaries, but for any group seeking to achieve scalable violence against a specific group, individual, or population.

When FLI first released Slaughterbots in 2017, some critics dismissed the scenario as unrealistic and technically unfeasible. Since then, however, slaughterbots have been used on the battlefield, and similar, easy-to-make weapons are in development, marking the start of a global arms race that currently faces no legal restrictions.

if human: kill() also conveys a concrete path to avoiding the outcome it warns of. Its vision for action is based on the real-world policy prescription of the International Committee of the Red Cross (ICRC), an independent, neutral organisation that plays a leading role in developing and promoting the laws that regulate the use of weapons. A central tenet of the ICRC’s position is the need to adopt a new, legally binding prohibition on autonomous weapons that target people. FLI agrees with the ICRC’s most recent recommendation that the time has come to adopt legally binding rules on lethal autonomous weapons through a new international treaty.

Press inquiries and requests for film distribution may be directed to Georgiana Gilgallon, Director of Communications, at georgiana@futureoflife.org

COMING LATER THIS WEEK…

Panel Discussion on ‘Slaughterbots — if human: kill()’ – Perspectives on the State of Lethal Autonomous Weapons

We will also be releasing a panel discussion with Richard Moyes, Managing Director of the Campaign to Stop Killer Robots, Max Tegmark, Stuart Russell and Maya Brehm from the International Committee of the Red Cross (ICRC). Tegmark and Russell, both AI professors, will address the technical issues raised by lethal autonomous weapons; Moyes will offer the civil society perspective as coordinator of the Campaign to Stop Killer Robots (CSKR); and Brehm will present the ICRC’s perspective, namely its recommendation that states adopt new legally binding rules to regulate lethal autonomous weapons.

The Ideas Behind ‘Slaughterbots – if human: kill()’ – A Deep Dive Interview

In support of Slaughterbots – if human: kill(), we’ve produced an in-depth interview that explores the ideas and purpose behind this new film. We interviewed Emilia Javorsky, who heads up FLI’s lethal autonomous weapons policy and advocacy efforts, Max Tegmark, Co-founder and President of FLI, and Stuart Russell, Professor of Computer Science at University of California, Berkeley, Director of the Center for Intelligent Systems, and a world-leading AI researcher.

Javorsky sheds light on the intentions behind the film, its associated policy goals, and broader implications. Russell elucidates the problems with lethal autonomous weapons, their technical capabilities, and why their use by good actors is ultimately counterproductive. He argues that a ban on these weapons is the only sensible option given the risk they pose. Tegmark ties the issue of lethal autonomous weapons into a grander vision of the potential for both positive and negative uses of technology in the future.

To learn more, visit autonomousweapons.org