Future of Life Institute February 2023 Newsletter: Progress on Autonomous Weapons!


Welcome to the Future of Life Institute newsletter. Every month, we bring 24,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

If you’ve found this newsletter helpful, why not tell your friends, family, and colleagues to subscribe here?

Today’s newsletter is a 5-minute read. We cover:

- The Belén Communiqué on autonomous weapons

- An interview with AI safety researcher Neel Nanda

- Awareness of nuclear winter

- The 2017 Asilomar AI Principles

The Belén Communiqué on Autonomous Weapons

On February 23-24, Costa Rica hosted the first-ever regional conference on autonomous weapons.

Thirty-three Latin American and Caribbean states convened to promote a binding international treaty on autonomous weapons. You can read their full statement, The Belén Communiqué, here.

This conference is a unique attempt to build international support for a legally binding treaty, gradually sidelining the UN forum in Geneva, where a small minority of states wield vetoes to block progress.

FLI President Max Tegmark was in Costa Rica for the conference. Listen to his statement here:

The outcome of this conference adds political momentum to global diplomatic efforts on this issue. Last October, more than seventy states backed binding rules on autonomous weapons at the UN General Assembly. The UN expert group on autonomous weapons will meet in March, followed by a multistakeholder conference hosted by Luxembourg in April.

To learn more about autonomous weapons, visit this dedicated website.

Governance and Policy Updates

AI Policy:

▪ The US State Department released its Political Declaration on the Responsible Military Use of Artificial Intelligence and Autonomy.

Nuclear Security:

▪ Russia suspended its participation in New START, the last remaining bilateral nuclear arms control treaty between the US and Russia.

Biotechnology:

▪ The World Health Organization published a first draft of a new, legally binding international treaty on pandemic preparedness.

Updates from FLI

▪ On February 16, FLI’s Mark Brakel and Anna Hehir hosted a panel with Professor Stuart Russell on military AI and autonomous weapons at the REAIM summit in The Hague. See what they said.

▪ FLI President Max Tegmark joined Professor Melanie Mitchell on TVO Today to discuss advances in language models, AI myths, and the nature of sentience. Watch the full discussion here:

▪ Our podcast host Gus Docker interviewed AI safety researcher Neel Nanda about mechanistic interpretability – the science of reverse engineering the algorithms that a neural network learns.

New Research: Public Awareness of ‘Nuclear Winter’ is Too Low

A new survey by the Cambridge Centre for the Study of Existential Risk found that US and UK respondents were largely unaware of the risks of nuclear winter.

▪ Why this matters: This lack of awareness is troubling because the risk of nuclear war is higher than it has been since the 1962 Cuban Missile Crisis. In January, the Bulletin of the Atomic Scientists moved the Doomsday Clock forward from 100 to 90 “seconds to midnight,” indicating humanity’s proximity to self-destruction, mainly because of the risk of nuclear war.

▪ More about nuclear winter: Nuclear winter is the catastrophic global cooling that could follow a nuclear war. A 2022 Nature Food study found that soot and debris from a nuclear exchange would rise into the atmosphere and block the sun for years, causing global drops in temperature and mass crop failure. The authors estimated that over 5 billion people could die of starvation. Here’s what that looks like from space:

▪ Dive deeper: In August 2022, FLI presented John Birks, Paul Crutzen, Jeannie Peterson, Alan Robock, Carl Sagan, Georgiy Stenchikov, Brian Toon, and Richard Turco with the Future of Life Award for their roles in discovering and popularising the science of nuclear winter.

We produced two videos on the subject of nuclear winter. You can help improve public awareness about this vital subject by sharing them.

What We’re Reading

▪ Humans in the loop: An oft-cited solution to the ethical and legal challenges of integrating AI systems into nuclear command and control is keeping humans in control of critical safety processes and political decisions. But is this good enough? Humans are prone to automation bias, the tendency to defer to automated systems.

▪ US DoD Directive 3000.09: The Center for a New American Security helpfully compared the updated US policy on autonomy in weapon systems with its previous iteration, which was issued in 2012.

▪ Biological threats: Two separate reports in Politico and the Financial Times detailed how the US and the UK are responding to the threat of biological weapons and pandemics.

▪ ChatGPT and academia: A new comment in Nature advises researchers and academics on using generative AI to support scholarship.

Hindsight is 20/20

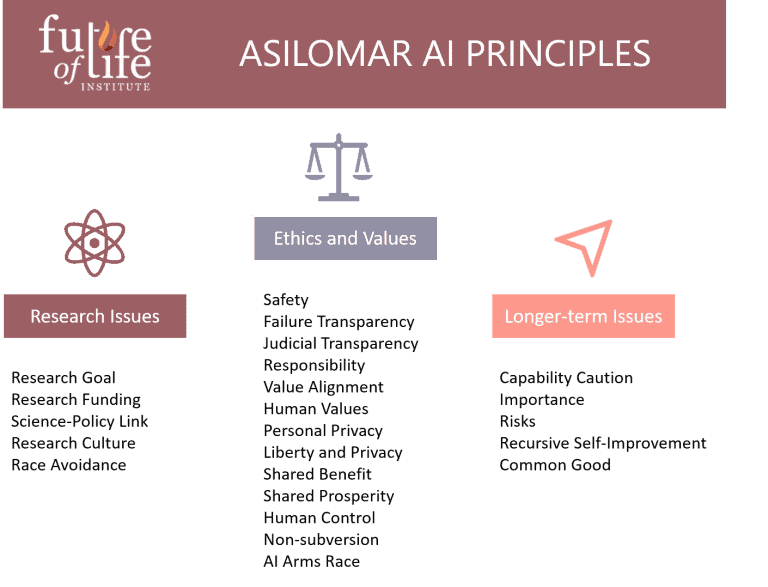

AI Arms Race: AI labs have teamed up with Big Tech to access computational infrastructure and other resources, fuelling media reports of an “AI arms race”: a winner-takes-all rush to develop and deploy AI systems in the market.

AI ethics and safety standards are likely to be an unfortunate casualty of this “race”. This seems like an opportune moment to highlight once more the 2017 Asilomar AI Principles, which explicitly call on teams developing AI systems to avoid race dynamics. Over 5,000 leading scientists, activists, and academics have signed these principles.

Chart of the Month

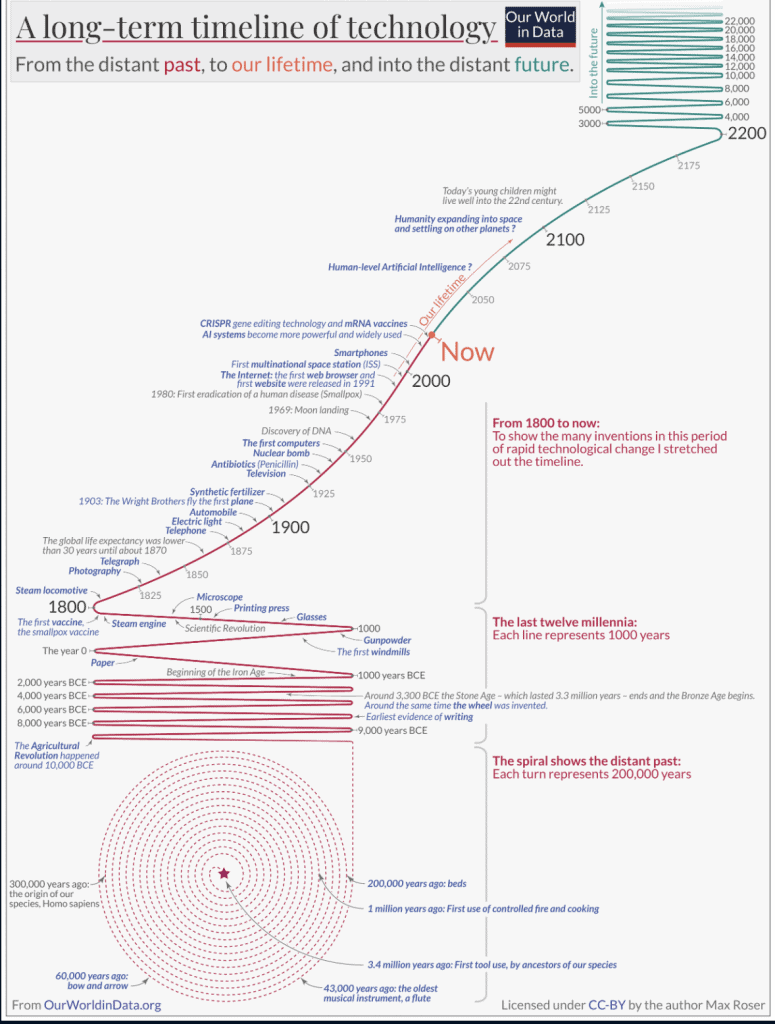

The pace of innovation in the 21st century and the potential for our future only become apparent when put into a historical perspective. Here’s a helpful chart from Our World in Data that does just that:

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax-deductible in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.