
FLI October 2016 Newsletter

What Could Be Scarier Than…

…the Trillion-Dollar-Nukes Budget!

How Would You Spend $1 Trillion?

Nuclear weapons aren’t just terrifying; they’re expensive.

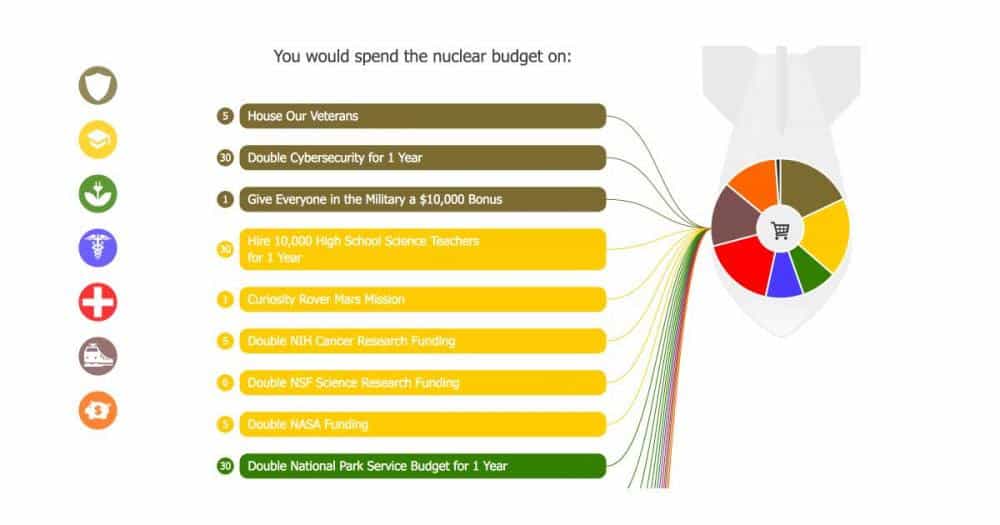

But how much are nuclear weapons really worth? Is upgrading the U.S. nuclear arsenal worth $1 trillion – in the hope of never using it – when that money could be used to improve lives, create jobs, decrease taxes, or pay off debts? How far could $1 trillion go if it weren't all spent on nukes?

We’ve just launched an app that shows how much the U.S. could do with that money: everything from doubling funding for National Parks to boosting cybersecurity to cutting taxes for every household, and more. Test it out for yourself and see whether you think nuclear weapons are worth $1 trillion.

We also want to give a special thank you to Grzegorz Orwiński, the FLI volunteer who designed and built the app!

Since the dawn of the nuclear age, each U.S. president has chosen to grow or shrink our arsenal based on geopolitical threats, international treaties, and close calls in which nuclear war nearly started by accident. As President Obama’s term draws to a close, let’s consider his nuclear legacy (it’s probably scarier than you think!) with this infographic created in partnership with Futurism.com.

The Historic UN Vote On Banning Nuclear Weapons

By Joe Cirincione

History was made at the United Nations today. For the first time in its 71 years, the global body voted to begin negotiations on a treaty to ban nuclear weapons.

Eight nations with nuclear arms (the United States, Russia, China, France, the United Kingdom, India, Pakistan, and Israel) voted against the resolution or abstained, while North Korea voted yes.

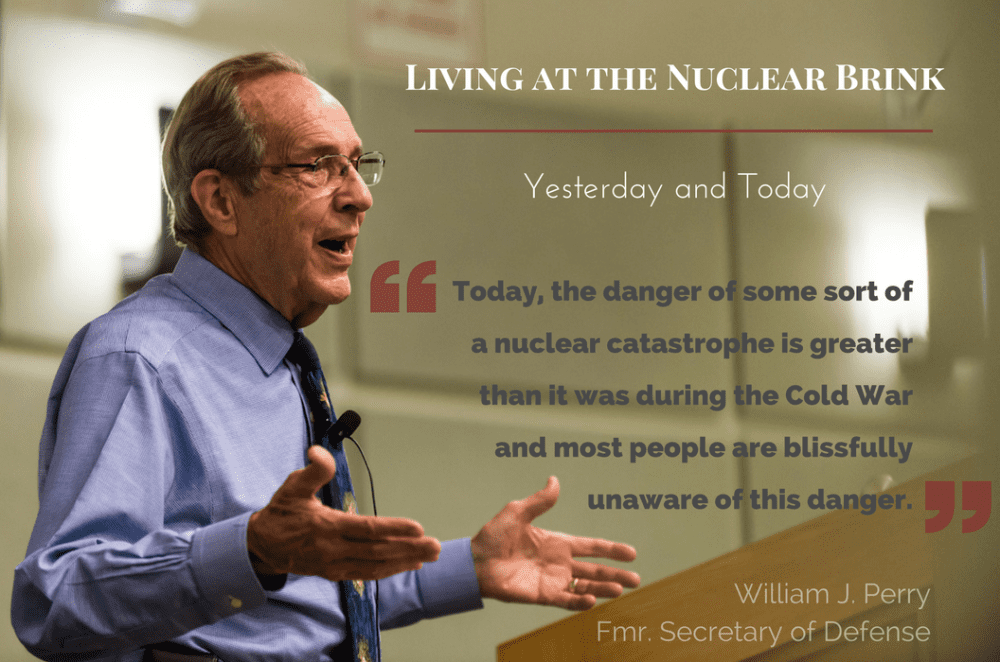

Living at the Nuclear Brink

With Former Defense Secretary William Perry

Last month, former Defense Secretary William Perry launched a MOOC (massive open online course) about today’s nuclear risks. Several weeks of the course remain, and you can watch earlier lectures on your own schedule. If you want to learn more about how and why nuclear weapons may pose a greater threat today than at any other point in history, this class is a great place to start!

We’ve updated our nuclear close-calls timeline to include more recent nuclear events and problems. We also added insights from FLI members about which close call each of us considers the scariest. Don’t forget to watch the Union of Concerned Scientists video at the bottom of the page to learn about hair-trigger alert, a posture that may make an accidental nuclear war more likely than an intentional one.

We’re now on SoundCloud and iTunes!

Nuclear Winter With Alan Robock and Brian Toon

The UN voted last week to begin negotiations on a global nuclear weapons ban, but for now, nuclear weapons still jeopardize the existence of almost all people on earth.

I recently sat down with meteorologist Alan Robock from Rutgers University and physicist Brian Toon from the University of Colorado to discuss what is potentially the most devastating consequence of nuclear war: nuclear winter.

What We’ve Been Up to This Month

OpenAI Unconference on Machine Learning

By Victoria Krakovna

Victoria Krakovna recently attended a self-organizing conference at OpenAI. She writes: “There was a block for AI safety along with the other topics. The safety session became a broad introductory Q&A, moderated by Nate Soares, Jelena Luketina and me. Some topics that came up: value alignment, interpretability, adversarial examples, weaponization of AI.” Read more about the event here.

The Ethical Questions Behind Artificial Intelligence

By Ariel Conn

Many FLI members recently attended the Ethics of AI conference held at NYU, including Max Tegmark and Meia Chita-Tegmark, who gave a joint talk. The event spanned two days and featured 26 speakers; you can read some of its highlights and main topics here.

Featured in Nature

“Scientists are beginning to understand why these ‘mini Wall Streets’ work so well at forecasting election results — and how they sometimes fail.”

So begins a recent Nature article highlighting the success of Anthony Aguirre’s prediction site Metaculus. As the article points out, “Already, the site has produced evidence that successful prediction is a skill that can be learned.”

Read the article here, and check out Metaculus to make your own predictions – including a spooky nuclear question!

White House Frontiers Conference

Richard Mallah attended the White House Frontiers Conference in Pittsburgh, which aimed to explore “new technologies, challenges, and goals that will continue to shape the 21st century and beyond.” The conference’s National track focused on artificial intelligence and covered the future of innovation, safety challenges, embedding the values we want in AI systems, and the economic impacts of AI.

ICYMI: This Month’s Most Popular Articles

How Can AI Learn to Be Safe?

By Tucker Davey

When Apple released its software application, Siri, in 2011, iPhone users had high expectations for their intelligent personal assistants. Yet despite its impressive and growing capabilities, Siri often makes mistakes. The software’s imperfections highlight the clear limitations of current AI: today’s machine intelligence can’t understand the varied and changing needs and preferences of human life.

Sam Harris TED Talk: Can We Build AI Without Losing Control Over It?

By Tucker Davey

The threat of uncontrolled artificial intelligence, Sam Harris argues in a recently released TED Talk, is one of the most pressing issues of our time. Yet most people “seem unable to marshal an appropriate emotional response to the dangers that lie ahead.”

Note From FLI:

We’re also looking for more writing volunteers. If you’re interested in writing research news articles for us, please let us know.