Contents

FLI July 2016 Newsletter

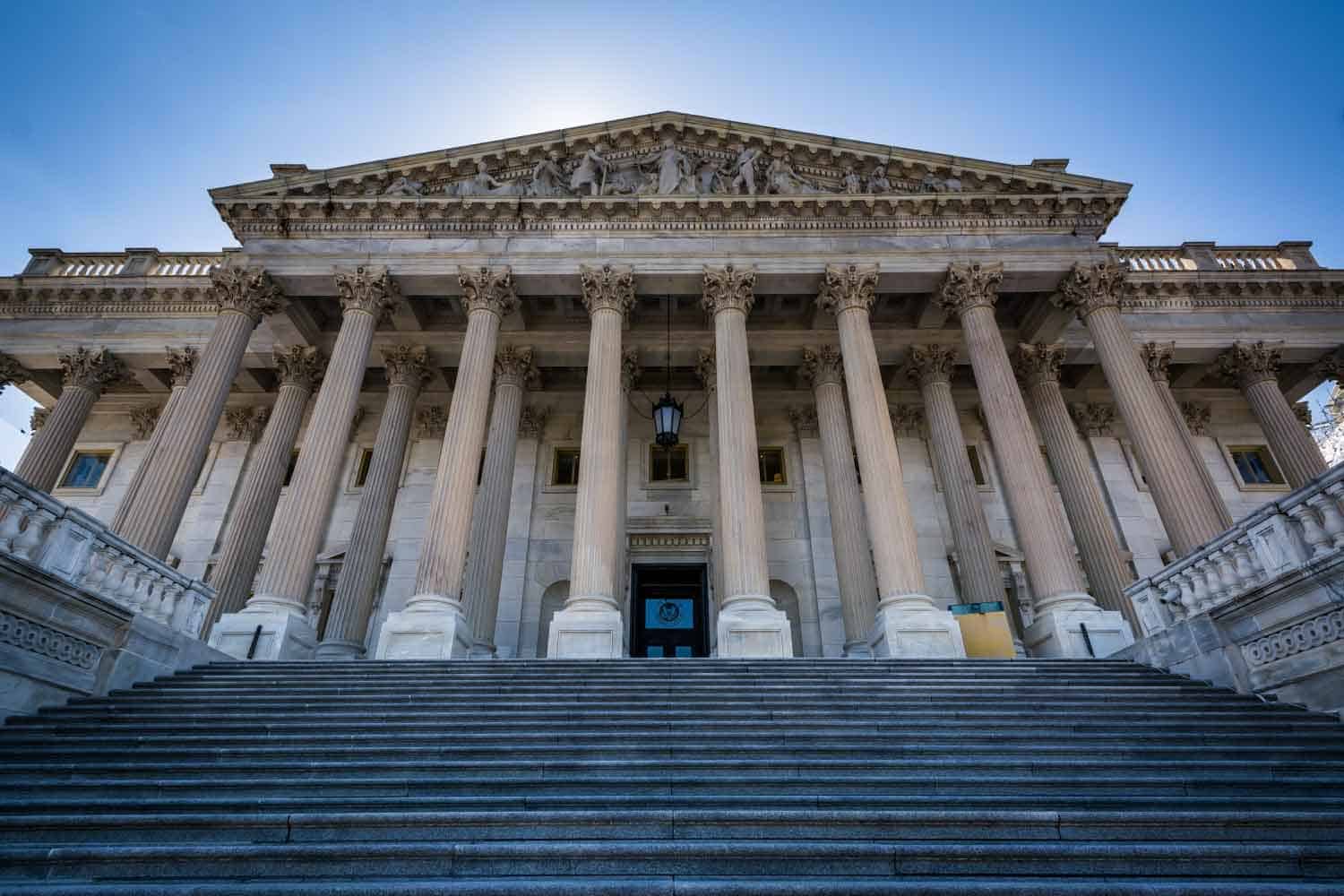

Congress Subpoenas Climate Scientists in Effort to Hamper ExxonMobil Fraud Investigation

ExxonMobil executives may have intentionally misled the public about climate change – for decades. And the House Science Committee just hampered legal efforts to learn more about ExxonMobil’s actions by subpoenaing the nonprofit scientists who sought to find out what the fossil fuel giant knew and when.

For 40 years, tobacco companies intentionally misled consumers to believe that smoking wasn’t harmful. Now it appears that many in the fossil fuel industry may have applied similarly deceptive tactics – and for just as long – to confuse the public about the dangers of climate change.

Investigative research by nonprofit groups like InsideClimate News and the Union of Concerned Scientists (UCS) has turned up evidence that ExxonMobil may have known about the hazards of fossil-fuel-driven climate change as far back as the 1970s. However, rather than informing the public or taking steps to reduce those risks, documents indicate that ExxonMobil leadership chose to cover up their findings and instead to convince the public that climate science couldn't be trusted.

As a result of these findings, the Attorneys General (AGs) of New York and Massachusetts launched a legal investigation, which included subpoenaing the company for more information, to determine whether ExxonMobil committed fraud. That's when Lamar Smith, Chairman of the House Science, Space and Technology Committee, stepped in.

Using powerful new House rules, Chairman Smith unilaterally subpoenaed not just the AGs but also many of the nonprofits involved in the ExxonMobil investigation, including groups like the UCS. Smith and other House representatives argue that they're merely supporting ExxonMobil's rights to free speech and to form opinions based on scientific research.

FLI is considering a leap into podcasting! Did you know you can follow us on SoundCloud?

Earthquakes as Existential Risks?

ICYMI: This Month’s Most Popular Articles

The Evolution of AI: Can Morality Be Programmed?

By Jolene Creighton

FLI is excited to announce a new partnership with Futurism.com! For our first collaboration, writers at Futurism will interview various AI safety researchers affiliated with FLI and write about their work. They began with Vincent Conitzer, who is researching how to program morality into AI.

Op-ed: Climate Change is the Most Urgent Existential Risk

By Phil Torres

Op-ed: If AI Systems Can Be “Persons,” What Rights Should They Have?

By Matt Scherer

Really, I can think of only one ground rule for legal “personhood”: “personhood” in a legal sense requires, at a minimum, the right to sue and the ability to be sued. Beyond that, the meaning of “personhood” has proven to be pretty flexible.

Op-ed: The Problem With Brexit: 21st Century Challenges Require International Cooperation

By Danny Bressler

Retreating from international institutions and cooperation will handicap humanity's ability to address its most pressing upcoming challenges. The UK's referendum in favor of leaving the EU and the rise of nationalist ideologies in the US and Europe are worrying on multiple fronts. Nationalism may lead to a resurgence of some of the worst problems of the first half of the 20th century.

By Matt Scherer

As with aerial drones, a bomb disposal robot can deliver lethal force without placing the humans making the decision to kill in danger. The absence of risk creates a danger that the technology will be overused.

Op-ed: When NATO Countries Were U.S. Nuclear Targets

By Ariel Conn

Sixty years ago, the U.S. had over 60 nuclear weapons aimed at Poland, ready to launch. At least one of those targeted Warsaw, where, on July 8-9, allied leaders met for the biennial NATO summit meeting. Most people assume that the U.S. no longer poses a nuclear threat to its own NATO allies, but that assumption may be wrong.

Note From FLI:

The FLI website now features a growing number of op-eds. One of our objectives for the website is to inspire discussion and the sharing of ideas, so we post opinion pieces that we believe will help spur discussion within our community. However, these op-eds do not necessarily represent FLI's opinions or views.

We’re also looking for more writing volunteers. If you’re interested in writing either news or opinion articles for us, please let us know.