US AI Safety Institute codification (FAIIA vs. AIARA)

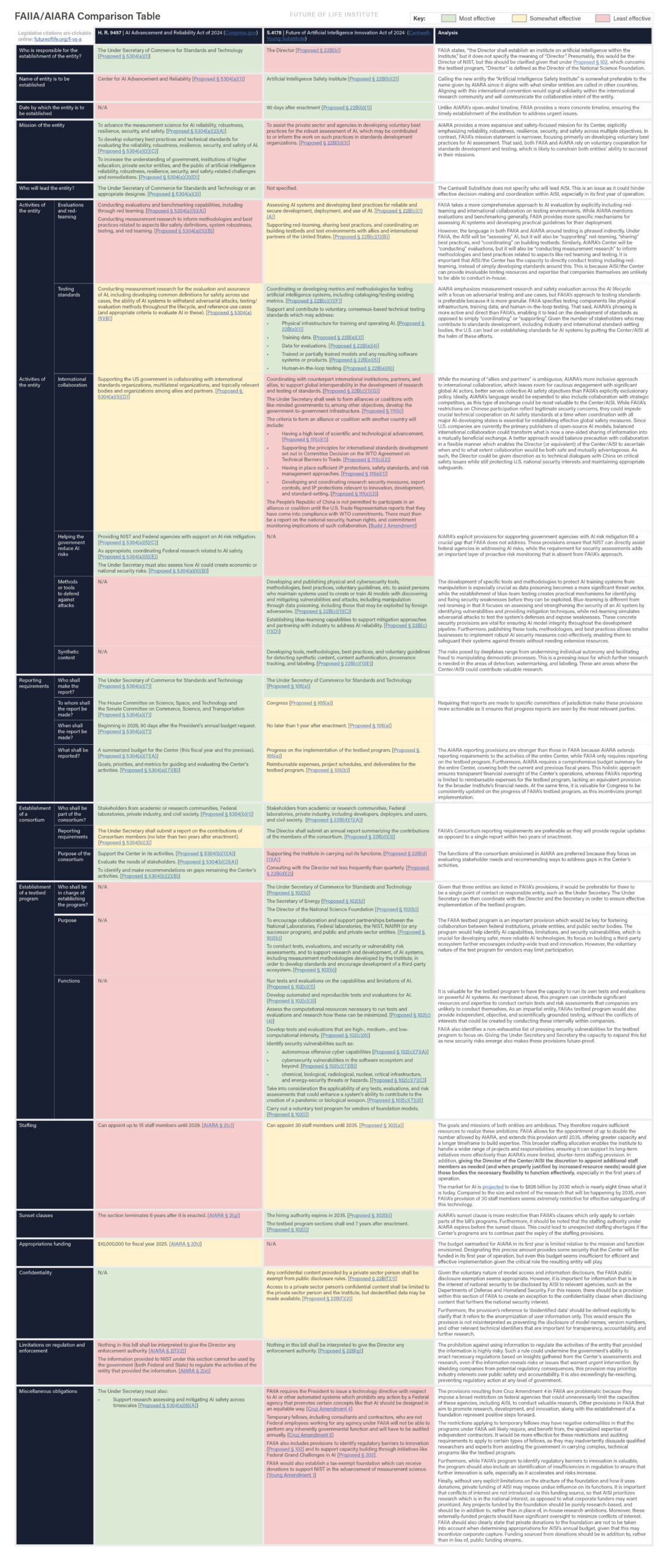


Here we present a comparison of the key provisions in the 'Future of Artificial Intelligence Innovation Act' of 2024 with those of the 'AI Advancement and Reliability Act' of 2024.

Both bills aim to codify an entity similar to the current AI Safety Institute. We compare them on a range of dimensions, including:

- Who is responsible for establishing the entity;

- What activities the entity will perform;

- Reporting requirements of the entity;

- Establishment of a consortium;

- Establishment of a testbed program;

- Staffing;

- Funding;

- And more.

We also analyze each of these dimensions, as well as the provisions overall.


Comparison Table: FAIIA vs AIARA

View the full-length comparison table as a PDF.

Analysis

The central goal of both the AI Advancement and Reliability Act (AIARA) and the Future of Artificial Intelligence Innovation Act (FAIIA) is the codification of an entity similar to the U.S. AI Safety Institute (AISI), which is currently authorized by Executive Order 14110. Both bills have some beneficial provisions, including the statutory authorization of the U.S. AISI, but they also contain a range of limitations that make them difficult to adopt as wholesale packages.

FAIIA contains several particularly strong provisions, including detailed provisions on the red-teaming, evaluation, and standard-setting functions of its proposed AISI. This AISI would also develop and publish physical and cybersecurity tools, methodologies, and best practices to help those managing AI systems identify and mitigate vulnerabilities, including threats posed by foreign adversaries, something AIARA lacks. Importantly, FAIIA establishes an AI testbed program to conduct tests, evaluations, and security or vulnerability risk assessments. This structured testing environment would enable stakeholders to systematically identify and address potential weaknesses in powerful AI models, providing greater assurance of safety and reliability prior to deployment.

Another crucial distinction between the two bills is that AIARA prohibits the government from using any information provided to NIST as part of the program to regulate the entity that provided it. This rule risks preventing the government (at both the Federal and State level) from intervening when it becomes clear that intervention is urgently necessary, including if a system directly compromises national security. Part of the mission of the Center (AIARA’s counterpart to AISI) is to increase the government’s understanding of AI security and resilience. If dangerous capabilities are identified, the priority should be for the government to have flexibility in considering whether intervention is necessary, taking into account national security interests.

On the other hand, FAIIA includes several aspects that make it comparatively weaker. First, the approach to international collaboration it envisions is severely limited. While FAIIA’s exclusion of Chinese engagement addresses mounting geopolitical concerns, it risks hindering essential technical cooperation on AI safety standards at a time when global coordination is critical. The U.S. stands to benefit significantly from establishing a line of communication between the U.S. AISI and its Chinese counterpart, given that U.S. companies frequently open-source their AI models, resulting in what is currently a largely one-sided flow of information. In contrast, AIARA’s more balanced and inclusive approach, which allows for cautious engagement with key international AI actors, better supports collective safety efforts while maintaining the flexibility to protect U.S. security interests.

Second, an amendment adopted in the Senate Commerce markup embedded in FAIIA a set of provisions that would require the President to issue a technology directive on AI or other automated systems prohibiting any action by a Federal agency that would promote certain concepts, such as the equitable development of AI. This restriction on Federal agencies could undermine AISI’s ability to conduct crucial research, including on political biases previously identified in industry-leading AI systems and on improving AI system compliance with existing law.

Critically, both of these bills place AISI (or AIARA’s Center) on statutory footing, giving it the security to engage in longer-term research projects without fear of losing executive authorization prior to their completion. That said, both bills also contain significant shortcomings, many of which are better addressed in the other bill. A formal or informal conference process adopting the strongest provisions from both bills would therefore strengthen the overall framework guiding AISI or the Center. Without a conference process or other means of integrating preferred provisions from each bill, FAIIA remains the marginally better bill despite its imperfections, as it provides more comprehensive direction for implementing the key objectives of the AISI. Should Congress instead elect to adopt AIARA in full, we strongly advise, at a minimum, removing the provision prohibiting the use of information collected by the Center for the purpose of regulation, as this prohibition could threaten both the efficacy of the Center and the security of the American public.

Priority Provisions

Future of Artificial Intelligence Innovation Act (FAIIA)

| Provision | Analysis |
| --- | --- |
| **Restrictions on International Collaboration.** The People’s Republic of China is not permitted to participate in an alliance or coalition until the U.S. Trade Representative reports that it has come into compliance with its WTO commitments. There must then be a report on the national security, human rights, and commitment monitoring implications of such collaboration. | While there are legitimate security concerns around collaboration with China, this explicit exclusion risks hindering essential AI safety cooperation at a time when global coordination is crucial. Given that U.S. companies tend to open-source their AI models, balanced international collaboration could shift information sharing from one-sided to mutually beneficial. A more flexible approach would empower the Center’s Director to determine safe, advantageous levels of collaboration with China on critical safety issues. |
| **Staffing and Funding.** Under FAIIA, 30 staff members can be appointed until 2035. FAIIA would also establish a tax-exempt foundation which can receive donations to support NIST in the advancement of measurement science. | While FAIIA’s staffing provisions offer more capacity than AIARA’s, the cap of 30 appointees through 2035 remains insufficient for achieving its ambitious goals, especially given the rapid expansion of the AI market. Similar bodies typically require closer to 50–75 staff members to function effectively. The U.K. AISI started with over 30 technical staff members and is rapidly expanding beyond that number. Moreover, FAIIA does not include any appropriations provisions. It is important that sufficient funding is provided for AISI, so that it does not have to rely on donations received via the foundation. For reference, the U.K. AISI received £100m in initial funding. If the U.S. is to lead on AI, FAIIA must at least match this level of funding. |
| **Technology Directive Restricting Federal Agencies.** FAIIA requires the President to issue a technology directive on AI which prohibits any action by Federal agencies that promotes certain concepts, such as the idea that AI should be designed in an equitable way. | These provisions impose an overly broad restriction that could unnecessarily constrain Federal agencies, such as the AISI, in their capacity to conduct essential research and development. This restriction could also limit investigations into critical issues, including political biases in AI systems and the compliance of AI systems with existing U.S. anti-discrimination laws. |

Artificial Intelligence Advancement and Reliability Act (AIARA)

| Provision | Analysis |
| --- | --- |
| **Limitations on Regulation and Enforcement.** The information provided to NIST under AIARA cannot be used by the government (both Federal and State) to regulate the activities of the entity that provided the information. | The prohibition in AIARA against using information provided to NIST for regulatory purposes is problematic, as it limits the government’s ability to act on critical insights that could indicate significant risks. If information gathered through the research of the Center reveals issues needing urgent intervention, for example on grounds of national security, the government should not be restricted in its ability to regulate. Removing this restriction would ensure that regulatory bodies can respond appropriately to identified risks. AIARA makes it clear that information is provided to the Center voluntarily, and the bill takes adequate measures to protect proprietary rights and strategic interests. The concerns of information providers are therefore adequately addressed in other parts of the bill and do not warrant this restriction on the government’s ability to regulate in the future. |
| **Lack of a Testbed Program.** AIARA lacks the testbed program set out in FAIIA, which would conduct tests, evaluations, and security or vulnerability risk assessments. | Including a testbed program in AIARA is essential to foster collaboration among government agencies, laboratories, and the private sector, fast-tracking the U.S.’s ability to lead on safe AI. By adopting a testbed program similar to FAIIA’s, AIARA would encourage and support the development of leading AI systems that meet security and robustness standards, enhancing public trust and supporting companies of all sizes. |
| **Staffing and Funding.** Under AIARA, up to 15 staff members can be appointed until 2029. $10,000,000 is allocated for fiscal year 2025. | The staffing provision in AIARA is inadequate for meeting the substantial demands of overseeing and guiding AI development. For the reasons given in the analysis of FAIIA’s staffing provisions, a team of only 15 staff members would struggle to address the wide range of responsibilities AIARA envisions, from developing standards to conducting assessments and fostering public-private collaborations. While some funding is set aside for the Center, $10 million represents a sliver of what has been allocated to similar entities, like the U.K.’s AI Safety Institute. |