How AI Handles Uncertainty: An Interview With Brian Ziebart

When training image detectors, AI researchers can’t replicate the real world. They teach systems what to expect by feeding them training data, such as photographs, computer-generated images, real video and simulated video, but these practice environments can never capture the messiness of the physical world.

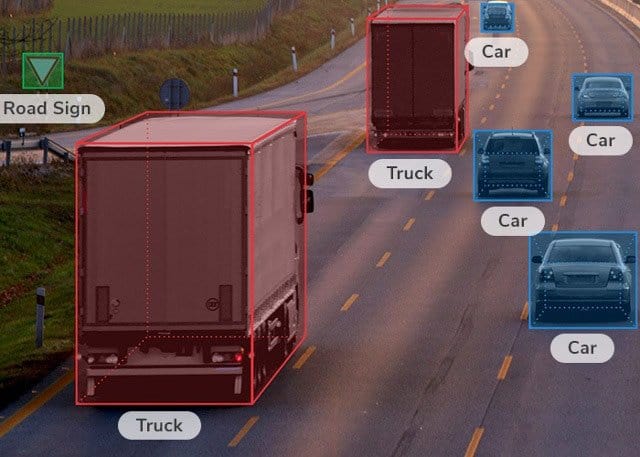

In machine learning (ML), image detectors learn to spot objects by drawing bounding boxes around them and giving them labels. And while this training process succeeds in simple environments, it gets complicated quickly.

It’s easy to define the person on the left, but how would you draw a bounding box around the person on the right? Would you include only the visible parts of his body, or also his hidden torso and legs? These differences may seem trivial, but they point to a fundamental problem in object recognition: there is rarely a single best way to define an object.

As this second image demonstrates, the real world is rarely clear-cut, and the “right” answer is usually ambiguous. Yet when ML systems use training data to develop their understanding of the world, they often fail to reflect this. Rather than recognizing uncertainty and ambiguity, these systems often approach new situations with confidence, treating them no differently from their training data, which can put both the systems and humans at risk.

Brian Ziebart, a Professor of Computer Science at the University of Illinois at Chicago, is conducting research to improve AI systems’ ability to operate amidst the inherent uncertainty around them. The physical world is messy and unpredictable, and if we are to trust our AI systems, they must be able to safely handle it.

Overconfidence in ML Systems

ML systems will inevitably confront real-world scenarios that their training data never prepared them for. But, as Ziebart explains, current statistical models “tend to assume that the data that they’ll see in the future will look a lot like the data they’ve seen in the past.”

As a result, these systems are overly confident that they know what to do when they encounter new data points, even when those data points look nothing like what they’ve seen. ML systems falsely assume that their training prepared them for everything, and the resulting overconfidence can lead to dangerous consequences.

Consider image detection for a self-driving car. A car might train its image detection on data from the dashboard of another car, tracking the visual field and drawing bounding boxes around certain objects, as in the image below:

For clear views like this, image detectors excel. But the real world isn’t always this simple. If researchers train an image detector on clean, well-lit images in the lab, it might accurately recognize objects 80% of the time during the day. But when forced to navigate roads on a rainy night, its accuracy might drop to 40%.

“If you collect all of your data during the day and then try to deploy the system at night, then however it was trained to do image detection during the day just isn’t going to work well when you generalize into those new settings,” Ziebart explains.

Moreover, the ML system might not recognize the problem: since the system assumes that its training covered everything, it will remain confident about its decisions and continue “to make strong predictions that are just inaccurate,” Ziebart adds.
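A toy illustration can make this concrete. The sketch below is not taken from Ziebart’s work: it assumes a hypothetical one-dimensional logistic classifier with made-up weights, trained on inputs between roughly -1 and 1. Because the model’s confidence grows with distance from its decision boundary, an input far outside anything it has seen receives the strongest prediction of all.

```python
import math

# Hypothetical parameters of a 1-D logistic classifier (assumed, not from the article).
# Imagine it was fit on inputs that all fell between roughly -1 and 1.
WEIGHT = 2.0
BIAS = 0.0


def sigmoid(z: float) -> float:
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-z))


def confidence(x: float) -> float:
    """Probability the model assigns to whichever class it predicts."""
    p = sigmoid(WEIGHT * x + BIAS)
    return max(p, 1.0 - p)


near_training_data = confidence(0.5)   # inside the range the model saw
far_from_training = confidence(10.0)   # nothing like the training inputs

print(f"near training data: {near_training_data:.3f}")
print(f"far from training:  {far_from_training:.9f}")
```

The in-range input gets a confidence of about 0.73, while the unfamiliar input gets a confidence above 0.999, even though the model has no evidence about that region.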

In contrast, humans tend to recognize when previous experience doesn’t generalize to new settings. If a driver spots an unknown object ahead in the road, she won’t just plow through it. Instead, she might slow down, pay attention to how other cars respond to the object, and consider swerving if she can do so safely. When we feel uncertain about our environment, we exercise caution to avoid making dangerous mistakes.

Ziebart would like AI systems to incorporate similar levels of caution in uncertain situations. Instead of confidently making mistakes, a system should recognize its uncertainty and ask questions to glean more information, much like an uncertain human would.

An Adversarial Approach

Training and practice may never prepare AI systems for every possible situation, but researchers can make their training methods more foolproof. Ziebart posits that feeding systems messier data in the lab can train them to better recognize and address uncertainty.

Conveniently, humans can provide this messy, real-world data. By hiring a group of human annotators to look at images and draw bounding boxes around certain objects – cars, people, dogs, trees, etc. – researchers can “build into the classifier some idea of what ‘normal’ data looks like,” Ziebart explains.

“If you ask ten different people to provide these bounding boxes, you’re likely to get back ten different bounding boxes,” he says. “There’s just a lot of inherent ambiguity in how people think about the ground truth for these things.”

Returning to the image above of the man in the car, human annotators might give ten different bounding boxes that capture different portions of the visible and hidden person. By feeding ML systems this confusing and contradictory data, Ziebart prepares them to expect ambiguity.
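One simple way to see this disagreement is to measure how much each box edge varies across annotators. The sketch below uses invented pixel coordinates for four hypothetical annotators labeling the partly hidden person. It only illustrates the ambiguity and is not the procedure Ziebart’s team uses.

```python
from statistics import mean, pstdev

# Hypothetical boxes as (x_min, y_min, x_max, y_max) in pixels, one per annotator.
annotations = [
    (120, 40, 200, 150),  # visible head and shoulders only
    (118, 42, 204, 260),  # adds an estimate of the hidden torso
    (110, 38, 210, 330),  # adds an estimate of the hidden legs
    (122, 45, 198, 155),  # visible parts only, drawn slightly tighter
]


def coordinate_spread(boxes):
    """Return (mean, population std dev) for each of the four box coordinates."""
    columns = list(zip(*boxes))  # regroup by coordinate instead of by annotator
    return [(mean(column), pstdev(column)) for column in columns]


spread = coordinate_spread(annotations)
for name, (avg, std) in zip(("x_min", "y_min", "x_max", "y_max"), spread):
    print(f"{name}: mean={avg:.1f}, std={std:.1f}")
```

In this made-up data the annotators roughly agree on where the person starts, but the bottom edge (`y_max`) varies far more than the others, because that is where they must guess at the hidden body.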

“We’re synthesizing more noise into the data set in our training procedure,” Ziebart explains. This noise reflects the messiness of the real world, and trains systems to be cautious when making predictions in new environments. Cautious and uncertain, AI systems will seek additional information and learn to navigate the confusing situations they encounter.

Of course, self-driving cars shouldn’t have to ask questions. If a car’s image detection spots a foreign object up ahead, for instance, it won’t have time to ask humans for help. But if it’s trained to recognize uncertainty and act cautiously, it might slow down, detect what other cars are doing, and safely navigate around the object.

Building Blocks for Future Machines

Ziebart’s research remains in training settings thus far. He feeds systems messy, varied data and trains them to provide bounding boxes that have at least 70% overlap with people’s bounding boxes. And his process has already produced impressive results. On an ImageNet object detection task investigated in collaboration with Sima Behpour (University of Illinois at Chicago) and Kris Kitani (Carnegie Mellon University), for example, Ziebart’s adversarial approach “improves performance by over 16% compared to the best performing data augmentation method.” Trained to operate amidst uncertain environments, these systems more effectively manage new data points that training didn’t explicitly prepare them for.
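The article does not say how the 70% overlap is computed. A common measure in object detection is intersection over union (IoU): the area two boxes share divided by the area they cover together. The sketch below assumes that measure and an `(x_min, y_min, x_max, y_max)` box format. The function names and the example boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if the boxes overlap at all.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes have no intersection area.
    intersection = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


def meets_overlap(predicted, human, threshold=0.7):
    """True if the predicted box overlaps the human box by at least the threshold."""
    return iou(predicted, human) >= threshold


human_box = (0, 0, 10, 10)
close_prediction = (0, 0, 10, 8)    # IoU = 80 / 100
shifted_prediction = (5, 0, 15, 10)  # IoU = 50 / 150

print(meets_overlap(close_prediction, human_box))
print(meets_overlap(shifted_prediction, human_box))
```

Under this assumed measure, the first prediction passes the 70% bar and the second, shifted halfway off the target, does not.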

But while Ziebart trains relatively narrow AI systems, he believes that this research can scale up to more advanced systems like autonomous cars and public transit systems.

“I view this as kind of a fundamental issue in how we design these predictors,” he says. “We’ve been trying to construct better building blocks on which to make machine learning – better first principles for machine learning that’ll be more robust.”

This article is part of a Future of Life series on the AI safety research grants, which were funded by generous donations from Elon Musk and the Open Philanthropy Project.