Contents

Welcome to the Future of Life Institute Newsletter. Every month, we bring 24,000+ subscribers the latest news on how emerging technologies are transforming our world – for better and worse.

Today’s newsletter is a 5-minute read. We cover:

- Our new papers on AI liability law and the EU’s dependence on China and America

- Our open letter against reckless nuclear escalation

- Interviews with Ajeya Cotra and Robin Hanson about AI and aliens

- New research about the governance fora for autonomous weapons

- Dr. Matthew Meselson, 2019 Future of Life Award Winner

AI Liability Directive

AI Liability Directive: A new position paper by our EU Policy Representative Claudia Prettner argues that the EU Commission’s latest proposal for an AI Liability Directive (AILD) does not do enough to protect consumers from AI-related harms.

- Why this matters: A slew of consumer products that rely on AI – such as self-driving cars or résumé screening software – can harm individuals or their rights. The AILD makes it easier for consumers to sue producers or deployers of AI systems for such harm.

- What do we want? We’re calling for the AILD to include a higher standard of liability for General Purpose AI systems; place the presumption of fault on the operator for other AI systems; specify immaterial harm, such as discrimination or privacy infringement; and identify liability across the AI value chain.

- Press: The MIT Technology Review quoted Claudia about our position on the AILD. Read their coverage of the issue here.

The EU’s GPAI conundrum: A new policy paper by FLI’s EU Policy Researcher Risto Uuk argues that European companies face a competitive disadvantage in developing General-Purpose Artificial Intelligence (GPAI) compared to their American and Chinese counterparts.

- Why this matters: The EU will likely remain dependent on systems that are developed elsewhere, even as it attempts to regulate GPAI via the AI Act.

- The AI Act: Speaking of the AI Act, FLI recently published a working paper proposing a new definition of GPAI in the AI Act. A condensed version of this paper is featured in the OECD AI Policy Observatory blog. We covered the paper in our October newsletter.

- Additional Context: In May, the French Government proposed that the AI Act should regulate GPAI. FLI has advocated for this position since the EU released its first draft of the AI Act last year. In September, FLI’s Vice-President for Policy and Strategy, Anthony Aguirre, spoke on a panel hosted by the Centre for Data Innovation about how the EU should regulate GPAI.

- Press: Science | Business reported on the policy paper with quotes from Risto. Read the coverage here.

In Case You Missed It

Nuclear Escalation Open Letter

In October, FLI launched an open letter pushing both to de-normalize nuclear threats and to re-mainstream non-appeasing de-escalation strategies that reduce the risk of nuclear war without giving in to blackmail.

- Would you like to join as a signatory? This would be wonderful, because it would help reduce the probability of the greatest catastrophe in human history. To read and consider signing, please click here.

Nominate a Candidate for the Future of Life Award!

The Future of Life Award is a $50,000 prize given to individuals who, without much recognition at the time, helped make today dramatically better than it might otherwise have been. Previous winners include individuals who eradicated smallpox, fixed the ozone layer, banned biological weapons, and prevented nuclear wars. Follow these instructions to nominate a candidate. If we decide to give the award to your nominee, you will receive a $3,000 prize!

Applications Open: Vitalik Buterin Postdoctoral Fellowship in AI Existential Safety

The PostDoc Fellowship is designed to support promising researchers who plan to work on AI existential safety research during their postdoctoral appointments. It provides an annual $80,000 stipend and a research fund of $10,000 for up to three years. Applications are due on January 2, 2023. Read more about the grant and apply here.

FLI Podcast

In November, we interviewed Ajeya Cotra about ‘Transformative AI’ and Robin Hanson about why ‘Grabby Aliens’ are the reason we still haven’t discovered extraterrestrial intelligence. Watch the episodes here:

Governance and Policy Updates

AI Policy:

- The annual meeting of the UN Convention on Certain Conventional Weapons (UN CCW) concluded in Geneva. States failed to make progress on autonomous weapons.

Nuclear Security:

- The G20 Leaders’ Declaration in Bali denounced the “use, or threat of the use, of nuclear weapons” as “inadmissible.”

Biotechnology:

- The Ninth Review Conference of the Biological Weapons Convention will take place in Geneva, Switzerland, from 28 November to 16 December 2022.

Climate Change:

- The UNFCCC COP27 concluded in Egypt. Countries made little progress on phasing out fossil fuels but agreed to establish a “loss and damage fund.”

Updates from FLI

FLI Policy Director Mark Brakel spoke on a panel hosted by Dentons Global Advisors about the EU’s plan to govern General Purpose AI. Other panelists included Kilian Gross (Head of the EU Commission AI Unit), MEP Brando Benifei, and Jenny Brennan (Senior Researcher, Ada Lovelace Institute). Watch their discussion here:

Negotiating a Treaty on Autonomous Weapons

Bidding adieu to the CCW? A new report by Human Rights Watch and the Harvard Law School International Human Rights Clinic argues that governments should move away from the UN CCW as a forum to discuss autonomous weapons and begin an independent process to negotiate a standalone treaty, as was done for the Mine Ban Treaty and the Convention on Cluster Munitions.

- Why this matters: Multilateral efforts to regulate autonomous weapons have stalled in the CCW due to arcane procedural rules requiring the consensus of all members. If states can find a new forum, more progress can take place in negotiating globally binding rules.

- The good news: Momentum for regulating autonomous weapons is building. At the UN General Assembly in October, seventy states delivered a first-of-its-kind joint statement calling for internationally binding rules for autonomous weapons.

- More good news: Costa Rica and Luxembourg will host conferences in early 2023 to discuss the challenges associated with autonomous weapons.

- Dive deeper: Visit our website to learn more about autonomous weapons. To raise awareness about the ethical, legal, and strategic implications of autonomous weapons, we produced two short films about “slaughterbots”. Watch them here:

What We’re Reading

Biosafety: A three-part investigative series published in The Intercept revealed significant lapses in biosafety standards, a lack of government oversight, and limited transparency at American bio-labs.

- Dive deeper: Listen to our interview with Dr. Filippa Lentzos, Senior Lecturer in Science and International Security at King’s College London, about global catastrophic biological risks.

Nukes + AI = Bad: A new article in War on the Rocks argues that states should commit to retaining human political control over nuclear weapons as a confidence-building measure to guard against the integration of AI into nuclear command and control.

Hindsight is 20/20

Between November 28 and December 16, 2022, states will meet at the UN in Geneva to review the Biological Weapons Convention. You might not know it today, but only a few decades ago, states were aggressively investing in biological weapons as a means of warfare.

We avoided a world in which biological weapons are frequently used thanks to Dr. Matthew Meselson, whose tireless efforts led the world to ban biological weapons.

As an arms control researcher in 1963, he learned that the US government was producing anthrax. Horrified, he began campaigning against biological weapons on TV, on the radio, and in the newspapers. Meselson continued pushing lawmakers and the scientific community until, in 1972, governments signed the Biological Weapons Convention.

For his contributions to making the world safer, we presented him with the Future of Life Award in 2019.

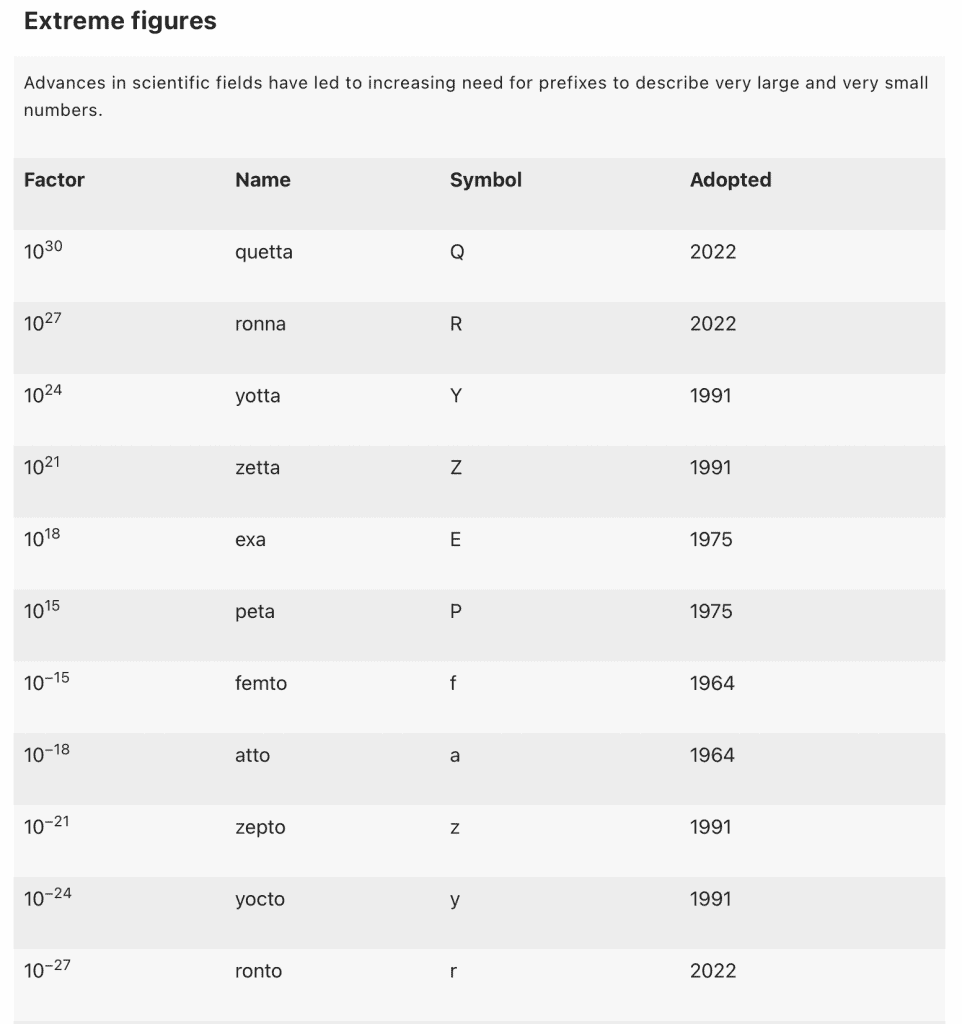

Chart of the Month

According to Nature, the annual volume of data generated globally has already reached zettabytes, that is, 10²¹ bytes. To keep up, government representatives introduced new measures for ridiculously large numbers at the General Conference on Weights and Measures in Paris on 18 November. This table from Nature should catch you up on the latest updates.

FLI is a 501(c)(3) non-profit organisation, meaning donations are tax exempt in the United States. If you need our organisation number (EIN) for your tax return, it’s 47-1052538. FLI is registered in the EU Transparency Register. Our ID number is 787064543128-10.